from datasets import Dataset

from transformers import AutoTokenizer, AutoModelForCausalLM, DataCollatorForSeq2Seq, TrainingArguments, Trainer

ds = Dataset.load_from_disk("../data/alpaca_data_zh/")

from modelscope import snapshot_download

# path = snapshot_download('langboat/bloom-1b4-zh')

path = snapshot_download('AI-ModelScope/bloom-3b')

tokenizer = AutoTokenizer.from_pretrained(path)

def process_func(example):

MAX_LENGTH = 256

input_ids, attention_mask, labels = [], [], []

instruction = tokenizer("\n".join(["Human: " + example["instruction"], example["input"]]).strip() + "\n\nAssistant: ")

response = tokenizer(example["output"] + tokenizer.eos_token)

input_ids = instruction["input_ids"] + response["input_ids"]

attention_mask = instruction["attention_mask"] + response["attention_mask"]

labels = [-100] * len(instruction["input_ids"]) + response["input_ids"]

if len(input_ids) > MAX_LENGTH:

input_ids = input_ids[:MAX_LENGTH]

attention_mask = attention_mask[:MAX_LENGTH]

labels = labels[:MAX_LENGTH]

return {

"input_ids": input_ids,

"attention_mask": attention_mask,

"labels": labels

}

tokenized_ds = ds.map(process_func, remove_columns=ds.column_names)

model = AutoModelForCausalLM.from_pretrained(path, low_cpu_mem_usage=True)

sum(param.numel() for param in model.parameters())

# bitfit

# 选择模型参数里面的所有bias部分

num_param = 0

for name, param in model.named_parameters():

if "bias" not in name:

param.requires_grad = False

else:

num_param += param.numel()

num_param

num_param / sum(param.numel() for param in model.parameters())

args = TrainingArguments(

output_dir="./chatbot",

per_device_train_batch_size=1,

gradient_accumulation_steps=8,

logging_steps=10,

num_train_epochs=1

)

trainer = Trainer(

model=model,

args=args,

train_dataset=tokenized_ds,

data_collator=DataCollatorForSeq2Seq(tokenizer=tokenizer, padding=True),

)

trainer.train()

from modelscope import snapshot_download

# path = snapshot_download('langboat/bloom-1b4-zh')

path = snapshot_download('AI-ModelScope/bloom-3b')

tokenizer = AutoTokenizer.from_pretrained(path)

path = '/mnt/workspace/transformers-code/03-PEFT/17-bitfit/chatbot/checkpoint-3000'

model = AutoModelForCausalLM.from_pretrained(path, low_cpu_mem_usage=True)

model = model.cuda()

ipt = tokenizer("Human: {}\n{}".format("考试有哪些技巧?", "").strip() + "\n\nAssistant: ", return_tensors="pt").to(model.device)

tokenizer.decode(model.generate(**ipt, max_length=128, do_sample=True)[0], skip_special_tokens=True)

from peft import PromptTuningConfig, get_peft_model, TaskType, PromptTuningInit

# Soft Prompt

# config = PromptTuningConfig(task_type=TaskType.CAUSAL_LM, num_virtual_tokens=10)

# config

# Hard Prompt

config = PromptTuningConfig(task_type=TaskType.CAUSAL_LM,

prompt_tuning_init=PromptTuningInit.TEXT,

prompt_tuning_init_text="下面是一段人与机器人的对话。",

num_virtual_tokens=len(tokenizer("下面是一段人与机器人的对话。")["input_ids"]),

tokenizer_name_or_path=path)

model = get_peft_model(model, config)

print(model.print_trainable_parameters())

args = TrainingArguments(

output_dir="./chatbot",

per_device_train_batch_size=1,

gradient_accumulation_steps=8,

logging_steps=10,

num_train_epochs=1,

save_steps=20,

)

trainer = Trainer(

model=model,

args=args,

train_dataset=tokenized_ds,

data_collator=DataCollatorForSeq2Seq(tokenizer=tokenizer, padding=True),

)

trainer.train()

加载

from peft import PeftModel

peft_model = PeftModel.from_pretrained(model=model, model_id="./chatbot/checkpoint-800/")

peft_model = peft_model.cuda()

ipt = tokenizer("Human: {}\n{}".format("考试有哪些技巧?", "").strip() + "\n\nAssistant: ", return_tensors="pt").to(peft_model.device)

print(tokenizer.decode(peft_model.generate(**ipt, max_length=128, do_sample=True)[0], skip_special_tokens=True))

P-tuning

from peft import PromptEncoderConfig, TaskType, get_peft_model, PromptEncoderReparameterizationType

config = PromptEncoderConfig(task_type=TaskType.CAUSAL_LM, num_virtual_tokens=10,

encoder_reparameterization_type=PromptEncoderReparameterizationType.MLP,

encoder_dropout=0.1, encoder_num_layers=5, encoder_hidden_size=1024)

prefix tuning

from peft import PrefixTuningConfig, get_peft_model, TaskType

config = PrefixTuningConfig(task_type=TaskType.CAUSAL_LM, num_virtual_tokens=10, prefix_projection=True)

model = get_peft_model(model, config)

model.print_trainable_parameters()

trainable params: 399,951,360 || all params: 3,402,508,800 || trainable%: 11.754601780897671

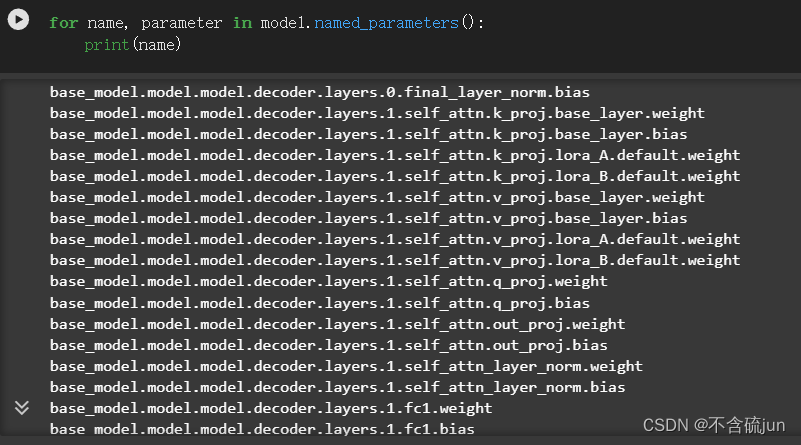

lora

for name, parameter in model.named_parameters():

print(name)

from peft import LoraConfig, TaskType, get_peft_model

config = LoraConfig(task_type=TaskType.CAUSAL_LM, target_modules=".*\.1.*k_proj", modules_to_save=["word_embeddings"])

config = LoraConfig(task_type=TaskType.CAUSAL_LM, target_modules=["k_proj","v_proj"])

config

lora推理

from transformers import AutoModelForCausalLM, AutoTokenizer

from peft import PeftModel

from modelscope import snapshot_download

path = snapshot_download('AI-ModelScope/bloom-3b')

model = AutoModelForCausalLM.from_pretrained(path, low_cpu_mem_usage=True)

tokenizer = AutoTokenizer.from_pretrained(path)

p_model = PeftModel.from_pretrained(model, model_id="./chatbot/checkpoint-500/")

ipt = tokenizer("Human: {}\n{}".format("考试有哪些技巧?", "").strip() + "\n\nAssistant: ", return_tensors="pt")

tokenizer.decode(p_model.generate(**ipt, do_sample=False)[0], skip_special_tokens=True)

#模型合并

merge_model = p_model.merge_and_unload()

ipt = tokenizer("Human: {}\n{}".format("考试有哪些技巧?", "").strip() + "\n\nAssistant: ", return_tensors="pt")

tokenizer.decode(merge_model.generate(**ipt, do_sample=False)[0], skip_special_tokens=True)

#保存模型

merge_model.save_pretrained("./chatbot/merge_model")

文章介绍了如何使用HuggingFaceTransformers库对预训练的Bloom-3B模型进行多种技术的微调,如P-tuning、SoftPrompt和Lora,以及如何进行对话生成和模型融合。

文章介绍了如何使用HuggingFaceTransformers库对预训练的Bloom-3B模型进行多种技术的微调,如P-tuning、SoftPrompt和Lora,以及如何进行对话生成和模型融合。

6万+

6万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?