1. Symptom: latency

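A Hikvision camera has a latency on the order of 1 second. If you build your own framework to forward its stream, the latency can sometimes reach 20 seconds. Why? Consider this example: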

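    class VideoCamera(object):
        def __init__(self):
            # Open the input stream (frames start arriving from this point on)
            self.cap = cv2.VideoCapture(rtsp_url)
            if not self.cap.isOpened():
                raise RuntimeError('Could not open camera.')
            # Set frame width and height
            self.cap.set(cv2.CAP_PROP_FRAME_WIDTH, 640)
            self.cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 640)
            ...
            # other init-process.

        # main loop
        while self.isRunning:
            ret, self.frame = self.cap.read()
            ...

Note that the cv2 input stream is opened right at cv2.VideoCapture(rtsp_url). The video you eventually output most likely starts from that moment, yet you only begin reading frames after the lengthy initialization has finished. The time origin of your output video is therefore pushed back by however long the initialization takes.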

Fix principle: for a resource such as a video stream, open the gate only at the moment you are ready to use it.
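As a minimal sketch of that principle, the stream can be wrapped so that it is opened on the first read rather than in the constructor. The class name `LazyStream` and the `open_stream` parameter are hypothetical and not part of the original code; `open_stream` is assumed to be a zero-argument callable such as `lambda: cv2.VideoCapture(rtsp_url)`.

```python
class LazyStream:
    """Open the underlying stream on the first read(), not at construction."""

    def __init__(self, open_stream):
        # open_stream: zero-argument callable returning an object that has
        # read() and release(), e.g. lambda: cv2.VideoCapture(rtsp_url)
        self._open_stream = open_stream
        self._cap = None

    def read(self):
        # The stream is opened here, after all slow initialization is done.
        if self._cap is None:
            self._cap = self._open_stream()
        return self._cap.read()

    def release(self):
        if self._cap is not None:
            self._cap.release()
            self._cap = None
```

With this wrapper, model loading and other slow setup can run between constructing the object and the first `read()` without stale frames piling up in the meantime.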

2. Symptom: corrupted picture

The faulty code that causes the corrupted picture looks like this:

    while self.isRunning:
        ret, self.frame = self.cap.read()
        ...
        else:
            flagNotHandle = False
            ret = True
        if ret:
            if self.queueFrameDone.qsize() > 5:
                continue
            # Crop to the region of interest
            self.frame = self.frame[zone[1]:zone[1]+zone[3], zone[0]:zone[0]+zone[2]]
            self.image = cv2.cvtColor(self.frame, cv2.COLOR_BGR2RGB)
            self.image = cv2.resize(self.image, (640, 640))
            self.outputs = self.rknn_lite.inference(inputs=self.image)  # self.gp_inference(self.image)
            self.frame = process_image(self.image, self.outputs)  # image in...image out.
            self.frame = self.image
            # print(type(self.image), type(self.outputs), type(self.frame))
            rtsp_out.rtsp_out_push(self.out, self.frame, 640, 640)
            ret, image = cv2.imencode('.jpg', self.frame)  # to bytes
            if ret:
                arByte = image.tobytes()
                # self.queueFrameDone.put(arByte)
                self.resultImage = bytearray(arByte)
    self.out.release()

self.rknn_lite.inference and the process_image call that follows are expensive computation units, and here they run in the same loop as self.cap.read(). They must never block the input stream: while the loop is busy computing, nobody is reading the stream, and incoming data is likely to be dropped. This is the root cause of the corrupted picture.
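One way to keep the reader from ever waiting on the slow stage is to hand frames over through a bounded queue and discard the oldest frame when it is full. The sketch below illustrates that idea only; the helper name `put_latest` is hypothetical and does not appear in the original code.

```python
import queue


def put_latest(q, item):
    """Put item into the bounded queue q without blocking.

    If q is full, the oldest entries are discarded to make room, so the
    producer (the stream reader) never waits for the consumer.
    """
    while True:
        try:
            q.put_nowait(item)
            return
        except queue.Full:
            try:
                q.get_nowait()  # drop the oldest frame
            except queue.Empty:
                pass  # the consumer emptied the queue meanwhile; retry
```

The reader thread would call `put_latest(frames, frame)` after every `read()`, while the inference thread takes frames out of the same queue at its own pace.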

3. Recommended strategy

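Nothing sophisticated here, right? Anyone could have thought of it. But this is exactly how bugs are made. Once the thing you are working on is fairly new to you, it is easy to lose sight of some concerns and make elementary mistakes. It is a lot like playing the guitar: there is no great trick to it, yet you still cannot play fluently, and your mistakes are extremely stubborn.

So pair programming and mutual code review really are necessary.

If you use Python to forward video, a fairly simple strategy resembles Master Ma Baoguo's "receive, transform, emit" (接化发): receiving the stream, sending the stream, and running inference on the frames are handled by three mutually independent threads.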

3.1 Receiving thread

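Because Python's queue handles thread synchronization on its own, I use it as the input/output interface for the video stream. The configuration has been moved into cat4Config. The file also contains a simple self-test.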

    #!/usr/bin/env python3
    # -*- coding: utf-8 -*-
    # Add this script's directory and its parent directory to the import path
    import os
    import sys
    current_dir = os.path.dirname(os.path.abspath(__file__))
    project_path = os.path.join(current_dir, '..')
    sys.path.append(project_path)
    sys.path.append(current_dir)
    import cv2
    import threading
    import time
    import warnings
    import queue
    import cat4Config

    class StreamInThread(threading.Thread):
        def __init__(self, name, pjtConfig):
            threading.Thread.__init__(self)
            self.name = name
            self.isRunning = True
            self.frame = None  # the very last frame
            self.cap = None
            self.queueIn = queue.Queue()
            self.MAX_QUEUE_LEN = 5
            self.imageCache = None  # the very old frame
            self.isFirstImageComing = False
            self.width = pjtConfig.width
            self.height = pjtConfig.height
            self.zone = pjtConfig.zone
            self.rtsp_url = pjtConfig.stream_in

        def run(self):
            flagNotHandle = False
            zone = self.zone
            # Open the stream here, inside the thread, right before reading
            self.cap = cv2.VideoCapture(self.rtsp_url)
            if not self.cap.isOpened():
                return
            self.isFirstImageComing = False
            while self.isRunning:
                ret, self.frame = self.cap.read()
                if ret:
                    self.frame = self.frame[zone[1]:zone[1]+zone[3], zone[0]:zone[0]+zone[2]]  # crop
                    self.image = cv2.cvtColor(self.frame, cv2.COLOR_BGR2RGB)  # color conversion
                    self.image = cv2.resize(self.image, (self.width, self.height))  # scale
                    self.queueIn.put(self.image)
            self.cap.release()

        def stop(self):
            self.isRunning = False

        def __del__(self):
            self.isRunning = False
            # if self.cap is not None:
            #     self.cap.release()

        def rawImage(self):
            # Drop stale frames if the consumer has fallen behind
            while self.queueIn.qsize() > (self.MAX_QUEUE_LEN * 2):
                self.queueIn.get()
            if self.queueIn.qsize() > 0:
                self.imageCache = self.queueIn.get()
                self.isFirstImageComing = True
            return self.isFirstImageComing, self.imageCache

        def purge(self):
            # Discard everything except the newest frame
            while self.queueIn.qsize() > 1:
                self.queueIn.get()

    if __name__ == "__main__":
        pjtConfig = cat4Config.Cat4Config()
        thread = StreamInThread("Stream In Thread", pjtConfig)
        thread.start()
        time.sleep(10)
        thread.stop()

3.2 AI inference thread

This part is easy to derive from the receiving and sending threads. The logic is simple, and all three threads share a similar structure.
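For readers who want a starting point, here is a hypothetical sketch of such a middle stage. It is not the author's thread: the class name `InferenceThread`, the injected `infer` callable, and `step()` are assumptions made for illustration. It also assumes that `source.rawImage()` returns `(False, ...)` when no new frame is available, which is stricter than the receiving thread in 3.1, whose `rawImage()` keeps returning the cached frame.

```python
import queue
import threading
import time


class InferenceThread(threading.Thread):
    """Hypothetical middle stage between the receiving and sending threads."""

    def __init__(self, source, infer, max_queue_len=5):
        super().__init__(daemon=True)
        self.source = source  # object exposing rawImage() -> (ok, image)
        self.infer = infer    # callable: image -> processed frame
        self.isRunning = True
        self.queueOut = queue.Queue()
        self.max_queue_len = max_queue_len

    def _drop_until(self, keep):
        # Discard the oldest results until at most `keep` remain.
        while self.queueOut.qsize() > keep:
            try:
                self.queueOut.get_nowait()
            except queue.Empty:
                break

    def step(self):
        """Process at most one frame; return True if a result was produced."""
        ok, image = self.source.rawImage()
        if not ok:
            return False
        self.queueOut.put(self.infer(image))
        # Keep only the newest results so a slow consumer adds no latency.
        self._drop_until(self.max_queue_len)
        return True

    def run(self):
        while self.isRunning:
            if not self.step():
                time.sleep(0.01)  # nothing to do yet; avoid a busy loop

    def stop(self):
        self.isRunning = False

    def outFrame(self):
        """Return (True, frame) if a result is ready, else (False, None)."""
        try:
            return True, self.queueOut.get_nowait()
        except queue.Empty:
            return False, None

    def purge(self):
        """Discard all pending results."""
        self._drop_until(0)
```

Keeping the per-frame work in `step()` makes the stage testable without starting a thread, and injecting `infer` keeps the model-specific calls out of the threading logic.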

Code omitted...

3.3 Sending thread

    #!/usr/bin/env python3
    # -*- coding: utf-8 -*-
    # Add this script's directory and its parent directory to the import path
    import os
    import sys
    current_dir = os.path.dirname(os.path.abspath(__file__))
    project_path = os.path.join(current_dir, '..')
    sys.path.append(project_path)
    sys.path.append(current_dir)
    import cv2
    import threading
    import time
    import warnings
    import queue
    import cat4Config
    import thread_stream_ai as stream_ai
    import thread_stream_in as stream_in
    import numpy as np

    class StreamOutThread(threading.Thread):
        def __init__(self, name, pjtConfig, streamGeneratorRhs: stream_ai.YoloV5Thread):
            threading.Thread.__init__(self)
            self.name = name
            self.isRunning = True
            self.nextTime = time.time() - 3
            self.streamGenerator = streamGeneratorRhs
            self.MAX_QUEUE_LEN = 5
            self.out = None
            self.frameCache = None  # the very old frame
            self.isFirstFrameGenedYet = False
            self.nextTime2Sent = None
            self.queueOut = queue.Queue()
            self.width = pjtConfig.width
            self.height = pjtConfig.height
            self.zone = pjtConfig.zone
            self.rtsp_url = pjtConfig.stream_out
            self.fps = pjtConfig.fps_out
            self.timeStep = (1.0 / self.fps)

        def run(self):
            self.nextTime2Sent = time.time() - 3
            self.out = None
            self.streamGenerator.purge()
            self.isFirstFrameGenedYet = False
            while self.isRunning:
                ret, frame = self.streamGenerator.outFrame()  # read next
                # print(ret, frame)
                if ret:
                    # print(">>>>>>>>>>>>>>>>rknn first frame gened")
                    self.queueOut.put(frame)
                    self.isFirstFrameGenedYet = True
                    # Open the output only once the first frame is available
                    if self.out is None:
                        self.rtsp_out_init()
                        self.nextTime2Sent = time.time()
                    ret, frame = self.outFrame()
                    self.rtsp_out_push(frame)
                    self.nextTime2Sent += self.timeStep
                else:
                    time.sleep(0.01)

        def stop(self):
            self.isRunning = False

        def __del__(self):
            self.isRunning = False
            # if self.cap is not None:
            #     self.cap.release()

        def outFrame(self):
            # Drop stale frames if the queue has grown too long
            while self.queueOut.qsize() > (self.MAX_QUEUE_LEN * 2):
                self.queueOut.get()
            nOfQueue = self.queueOut.qsize()
            if nOfQueue:
                dumb = self.queueOut.get()
                # print("rknnQueue=%d" % (nOfQueue), dumb)
                # Copy into a uint8 array of the exact output size
                arMat = np.zeros((self.height, self.width, 3), dtype=np.uint8)
                arMat[:, :, :] = dumb
                self.frameCache = arMat
            return self.isFirstFrameGenedYet, self.frameCache

        def purge(self):
            # Discard everything except the newest frame
            while self.queueOut.qsize() > 1:
                self.queueOut.get()

        def rtsp_out_init(self):
            print('start post to %s' % (self.rtsp_url))
            # int() keeps key-int-max an integer even if fps is a float
            pipeline = ('appsrc ! videoconvert'
                        + ' ! video/x-raw,format=I420'
                        + ' ! x264enc speed-preset=ultrafast bitrate=600 key-int-max=' + str(int(self.fps * 2))
                        + ' ! video/x-h264,profile=baseline'
                        + ' ! rtspclientsink location=%s' % (self.rtsp_url))
            self.out = cv2.VideoWriter(pipeline, cv2.CAP_GSTREAMER, 0, self.fps,
                                       (self.width, self.height), True)
            if not self.out.isOpened():
                raise Exception("can't open video writer")

        def rtsp_out_push(self, npArray):
            out = self.out
            frame = npArray
            out.write(frame)

    if __name__ == "__main__":
        pjtConfig = cat4Config.Cat4Config()
        threadIn = stream_in.StreamInThread("Stream In Thread", pjtConfig)
        threadCalc = stream_ai.YoloV5Thread("Stream Calc Thread", pjtConfig, threadIn)
        threadOut = StreamOutThread("Stream RTSP Out Thread", pjtConfig, threadCalc)
        threadOut.start()
        threadCalc.start()
        threadIn.start()
        time.sleep(3600 * 24)
        threadOut.stop()
        threadCalc.stop()
        threadIn.stop()
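The sending thread keeps a nextTime2Sent timestamp and a timeStep of 1/fps. One possible way to use such values to pace the output is sketched below. This is an illustration under assumptions, not the author's implementation: the function name `paced_send` and its `clock` and `sleep` parameters are hypothetical, and are injected so the logic can be exercised without real waiting.

```python
import time


def paced_send(frames, fps, send, clock=time.monotonic, sleep=time.sleep):
    """Call send(frame) for each frame, no faster than `fps` frames per second."""
    step = 1.0 / fps
    next_time = clock()
    for frame in frames:
        delay = next_time - clock()
        if delay > 0:
            sleep(delay)  # ahead of schedule: wait for the slot
        else:
            next_time = clock()  # running late: resync instead of bursting
        send(frame)
        next_time += step
```

Resynchronizing when the sender is late avoids a burst of frames after a stall, at the cost of a slightly lower average frame rate.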

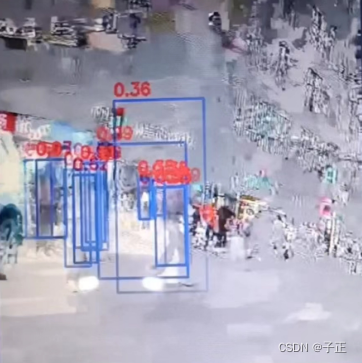

4. Final result

Some latency remains, but it now has a different cause. The corrupted picture is gone.
