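Background

Softmax regression reaches only about 91% accuracy on MNIST, which is not very good. Here we use a convolutional neural network (CNN) to improve on that; it should reach roughly 99.2% accuracy.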

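Weight Initialization

To build this model we need to create many weights and biases. The weights should be initialized with a small amount of noise to break symmetry and avoid zero gradients. Because we use ReLU (rectified linear unit) neurons, it is also good practice to initialize the biases with a small positive value, so that neurons do not end up always outputting 0 ("dead neurons"). Rather than repeating this initialization while building the model, we define two helper functions.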

```python
import tensorflow as tf

def weight_variable(shape):
    # Small truncated-normal noise breaks the symmetry between units.
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)

def bias_variable(shape):
    # A slightly positive bias keeps ReLU units active at the start.
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)
```
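To make the "small amount of noise" concrete, here is a framework-free sketch of what a truncated normal initializer does: it draws from a normal distribution and redraws any value that lands more than two standard deviations from the mean. The function name `truncated_normal_samples` and its `seed` parameter are illustrative assumptions, not part of TensorFlow.

```python
import random

def truncated_normal_samples(count, mean=0.0, stddev=0.1, seed=0):
    """Draw `count` values from N(mean, stddev), redrawing any value
    that lies more than 2 standard deviations from the mean."""
    rng = random.Random(seed)
    samples = []
    while len(samples) < count:
        value = rng.gauss(mean, stddev)
        if abs(value - mean) <= 2.0 * stddev:
            samples.append(value)
    return samples

weights = truncated_normal_samples(1000)
print(min(weights), max(weights))  # both within [-0.2, 0.2]
```

With stddev=0.1, every initial weight therefore stays in a narrow band around zero, so no unit starts out with an extreme value.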

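Convolution and Pooling

TensorFlow gives us a lot of flexibility in convolution and pooling operations. How do we handle the boundaries? What stride should we use? In this example we always choose the vanilla version. Our convolutions use a stride of 1 and are zero-padded (padding="SAME") so that the output is the same size as the input. Our pooling is plain max pooling over 2x2 blocks. To keep the code cleaner, we abstract these operations into functions.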

```python
def conv2d(x, w):
    # Stride 1 in every dimension; "SAME" zero-pads so output size equals input size.
    return tf.nn.conv2d(x, w, strides=[1, 1, 1, 1], padding="SAME")

def max_pool_2x2(x):
    # A 2x2 window with stride 2 halves the height and width.
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1],
                          strides=[1, 2, 2, 1], padding="SAME")
```
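The effect of "SAME" padding on sizes can be checked with a little arithmetic. The sketch below uses the rule that the output size along one dimension is ceil(input / stride); the helper names `same_output_size` and `same_total_padding` are hypothetical and only serve this illustration.

```python
import math

def same_output_size(input_size, stride):
    """Spatial output size under "SAME" padding: ceil(input / stride)."""
    return int(math.ceil(input_size / float(stride)))

def same_total_padding(input_size, kernel_size, stride):
    """Total zeros added along one dimension so the windows cover the input."""
    out = same_output_size(input_size, stride)
    return max((out - 1) * stride + kernel_size - input_size, 0)

print(same_output_size(28, 1))       # 28: a stride-1 convolution keeps the size
print(same_output_size(28, 2))       # 14: 2x2 pooling with stride 2 halves it
print(same_total_padding(28, 5, 1))  # 4: two zeros on each side for a 5x5 patch
```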

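First Convolutional Layer

We can now implement the first layer. It consists of a convolution followed by max pooling. The convolution computes 32 features for each 5x5 patch. Its weight tensor has shape [5, 5, 1, 32]: the first two dimensions are the patch size, the next is the number of input channels, and the last is the number of output channels. There is also one bias per output channel.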

```python
W_conv1 = weight_variable([5, 5, 1, 32])
b_conv1 = bias_variable([32])
```

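To apply the layer, we reshape x into a 4-D tensor whose second and third dimensions correspond to the image height and width, and whose last dimension is the number of color channels (1 here because the images are grayscale; it would be 3 for RGB images).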

```python
x_image = tf.reshape(x, [-1, 28, 28, 1])
```
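For a single image, the reshape amounts to cutting a flat list of 784 pixels into 28 rows of 28 one-channel pixels; the -1 in the TensorFlow call simply lets the batch dimension be inferred. A plain-Python sketch of that layout follows (the helper `reshape_to_image` is a hypothetical name used only here):

```python
def reshape_to_image(flat_pixels, height=28, width=28):
    """Turn a flat list of height*width pixels into rows x columns x 1 channel."""
    if len(flat_pixels) != height * width:
        raise ValueError("expected %d pixels" % (height * width))
    return [[[flat_pixels[r * width + c]] for c in range(width)]
            for r in range(height)]

flat = list(range(784))            # stand-in for one flattened MNIST image
image = reshape_to_image(flat)
print(len(image), len(image[0]), len(image[0][0]))  # 28 28 1
print(image[1][0][0])              # 28: the first pixel of the second row
```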

We then convolve x_image with the weight tensor, add the bias, apply the ReLU activation, and finally max-pool.

```python
h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)
h_pool1 = max_pool_2x2(h_conv1)
```
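What ReLU and 2x2 max pooling do to a feature map can be seen on a tiny example. This is a plain-Python sketch on a single 4x4 map, not the TensorFlow implementation; `relu` and `max_pool_2x2_plain` are illustrative names, and even map sizes are assumed.

```python
def relu(feature_map):
    """Replace negative activations with 0."""
    return [[max(0.0, v) for v in row] for row in feature_map]

def max_pool_2x2_plain(feature_map):
    """Take the maximum of each non-overlapping 2x2 block (even sizes assumed)."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [[max(feature_map[r][c], feature_map[r][c + 1],
                 feature_map[r + 1][c], feature_map[r + 1][c + 1])
             for c in range(0, cols, 2)]
            for r in range(0, rows, 2)]

activations = [[-1.0, 2.0, 0.5, -3.0],
               [4.0, 0.0, -2.0, 1.0],
               [-5.0, -6.0, 7.0, 8.0],
               [-1.0, -2.0, 9.0, -4.0]]
print(max_pool_2x2_plain(relu(activations)))  # [[4.0, 1.0], [0.0, 9.0]]
```

The 4x4 map shrinks to 2x2, and each output cell keeps only the strongest response in its block.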

Second Convolutional Layer

To build a deeper network, we stack several layers of this type. In the second layer, each 5x5 patch yields 64 features.

```python
W_conv2 = weight_variable([5, 5, 32, 64])
b_conv2 = bias_variable([64])

h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)
h_pool2 = max_pool_2x2(h_conv2)
```

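Fully Connected Layer

The image size has now been reduced to 7x7. We add a fully connected layer with 1024 neurons to process the entire image. We reshape the tensor from the pooling layer into a batch of vectors, multiply by a weight matrix, add a bias, and apply ReLU.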

```python
W_fc1 = weight_variable([7 * 7 * 64, 1024])
b_fc1 = bias_variable([1024])

h_pool2_flat = tf.reshape(h_pool2, [-1, 7 * 7 * 64])
h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)
```
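The 7 * 7 * 64 in the weight shape follows from tracing one image through the two convolution and pooling stages. The sketch below does that bookkeeping in plain Python, assuming stride-1 "SAME" convolutions and stride-2 "SAME" pooling as described above; `trace_shapes` is a hypothetical helper.

```python
import math

def trace_shapes(height=28, width=28, channels=1, conv_channels=(32, 64)):
    """Follow (height, width, channels) through conv (stride 1, SAME)
    and 2x2 max-pool (stride 2, SAME) pairs."""
    shapes = [(height, width, channels)]
    for out_channels in conv_channels:
        shapes.append((height, width, out_channels))    # conv keeps H and W
        height = int(math.ceil(height / 2.0))           # pool halves H and W
        width = int(math.ceil(width / 2.0))
        shapes.append((height, width, out_channels))
    return shapes

shapes = trace_shapes()
print(shapes)
# [(28, 28, 1), (28, 28, 32), (14, 14, 32), (14, 14, 64), (7, 7, 64)]
h, w, c = shapes[-1]
print(h * w * c)  # 3136, the flattened input size of the fully connected layer
```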

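Dropout

To reduce overfitting, we apply dropout before the output layer. We create a placeholder for the probability that a neuron's output is kept during dropout. This lets us turn dropout on during training and off during testing. Besides masking neuron outputs, TensorFlow's tf.nn.dropout op automatically handles the scaling of the remaining outputs, so dropout works without any additional scaling.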

```python
keep_prob = tf.placeholder("float")
h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)
```
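The masking and rescaling can be illustrated without TensorFlow. In this minimal sketch, each value is kept with probability keep_prob and kept values are divided by keep_prob so that the expected sum stays the same; the `dropout` function and its `rng` argument are assumptions made for the example.

```python
import random

def dropout(values, keep_prob, rng):
    """Keep each value with probability keep_prob and scale kept values
    by 1 / keep_prob so the expected sum is unchanged."""
    return [v / keep_prob if rng.random() < keep_prob else 0.0
            for v in values]

rng = random.Random(0)
activations = [1.0] * 10000
dropped = dropout(activations, 0.5, rng)
print(sorted(set(dropped)))               # [0.0, 2.0]
print(sum(dropped) / len(dropped))        # close to 1.0 on average
print(dropout([1.0, 2.0], 1.0, rng))      # [1.0, 2.0]: keep_prob 1.0 disables dropout
```

This is why feeding keep_prob = 1.0 at test time is enough to switch dropout off.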

Output Layer

Finally, we add a softmax layer, just like the single-layer softmax regression earlier.

```python
W_fc2 = weight_variable([1024, 10])
b_fc2 = bias_variable([10])

y_conv = tf.nn.softmax(tf.matmul(h_fc1_drop, W_fc2) + b_fc2)
```
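As a reminder of what the softmax step computes, here is a small plain-Python sketch that turns raw scores into probabilities. Subtracting the maximum before exponentiating is a common stability trick and an addition of this example; the logits used are made up.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1.
    Subtracting the maximum first avoids overflow in exp()."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
print(probs.index(max(probs)))    # 0: the largest logit gets the largest probability
print(sum(probs))                 # 1.0 up to floating-point rounding
print(softmax([1000.0, 1000.0]))  # [0.5, 0.5], no overflow
```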

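Training and Evaluating the Model

To train and evaluate the model, we use code almost identical to that for the simple single-layer softmax network earlier. The differences are that we replace the steepest gradient descent optimizer with the more sophisticated ADAM optimizer, pass the additional parameter keep_prob in feed_dict to control the dropout rate, and log every 100th iteration.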

```python
# Assumes x, y_, mnist and a default session sess were set up earlier,
# as in the single-layer softmax example.
cross_entropy = -tf.reduce_sum(y_ * tf.log(y_conv))
train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)
correct_prediction = tf.equal(tf.argmax(y_conv, 1), tf.argmax(y_, 1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
sess.run(tf.initialize_all_variables())

with tf.device("/cpu:0"):
    for i in range(20000):
        batch = mnist.train.next_batch(50)
        if i % 100 == 0:
            # Evaluate with dropout disabled (keep_prob = 1.0).
            train_accuracy = accuracy.eval(feed_dict={
                x: batch[0], y_: batch[1], keep_prob: 1.0})
            print("step %d, training accuracy %g" % (i, train_accuracy))
        # Train with dropout enabled (keep_prob = 0.5).
        train_step.run(feed_dict={x: batch[0], y_: batch[1], keep_prob: 0.5})

    print("test accuracy %g" % accuracy.eval(feed_dict={
        x: mnist.test.images, y_: mnist.test.labels, keep_prob: 1.0}))
```
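To give a feel for how ADAM differs from plain gradient descent, the following sketch applies the standard Adam update rule to a single scalar parameter. It is a textbook-style illustration under stated assumptions, not TensorFlow's internal code: `adam_minimize` is a hypothetical helper, the toy objective is made up, and the beta1, beta2 and epsilon values are the commonly used defaults.

```python
import math

def adam_minimize(grad_fn, theta, steps, learning_rate=1e-4,
                  beta1=0.9, beta2=0.999, epsilon=1e-8):
    """Run `steps` Adam updates on a single scalar parameter."""
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        m = beta1 * m + (1.0 - beta1) * g          # running mean of gradients
        v = beta2 * v + (1.0 - beta2) * g * g      # running mean of squared gradients
        m_hat = m / (1.0 - beta1 ** t)             # bias correction
        v_hat = v / (1.0 - beta2 ** t)
        theta -= learning_rate * m_hat / (math.sqrt(v_hat) + epsilon)
    return theta

# Minimize f(theta) = (theta - 3)^2, whose gradient is 2 * (theta - 3).
result = adam_minimize(lambda th: 2.0 * (th - 3.0), theta=0.0,
                       steps=2000, learning_rate=0.01)
print(result)  # moves from 0.0 toward the minimum at 3.0
```

Because each step is normalized by the running size of the gradient, the step length is governed mainly by the learning rate rather than by the raw gradient magnitude.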

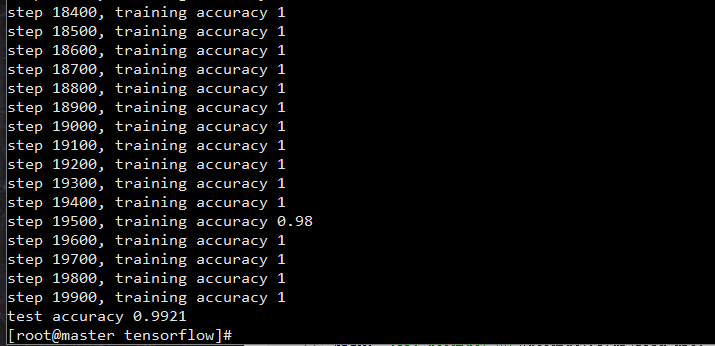

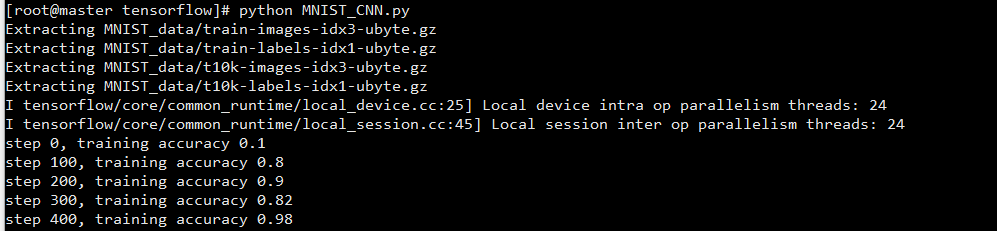

Results

Iterations:

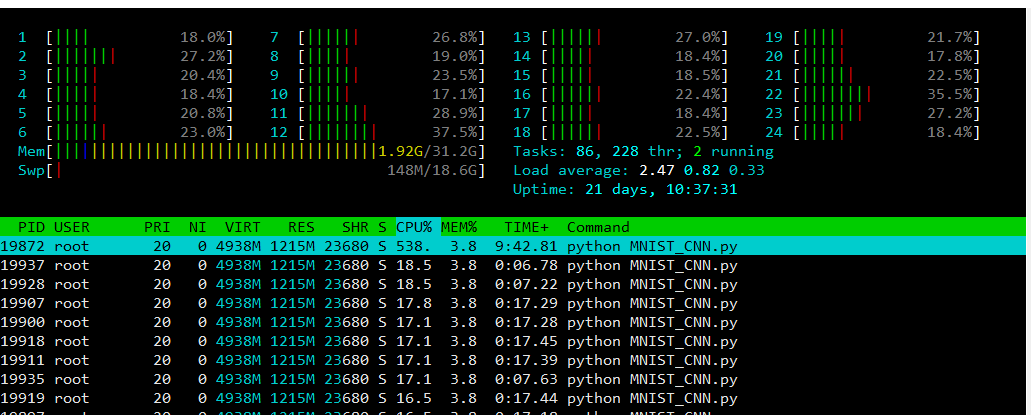

System resource usage:
