FFmpeg Filters (Basics, Chapter 2: Logo)

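Environment

Android Studio 4.0 or later

NDK 16

CMake 3.6

Demo download

What is an end-credit logo?

A short clip appended to the end of a video that shows user information and an animated logo.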

Command:

```shell
ffmpeg -loop 1 -i /Users/Downloads/logo/imagePath.png -i /Users/Downloads/logo/videoLogoPath.png -filter_complex "[1:v]scale=128:36[watermask];[0:v]fade=in:0:15[video];[video][watermask]overlay=224:924" -vcodec libx264 -r 25 -t 3 -pix_fmt yuv420p -preset ultrafast -y /Users/Downloads/logo/logotrailerLogo.mp4
```

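Command explanation:

-loop 1 -i /Users/Downloads/logo/imagePath.png: the first input, a still picture used as the background. -loop 1 repeats it indefinitely so that it behaves like a video, and -t limits the output length.

-i /Users/Downloads/logo/videoLogoPath.png: the second input, the animated logo.

-filter_complex "[1:v]scale=128:36[watermask];[0:v]fade=in:0:15[video];[video][watermask]overlay=224:924": defines a complex filter graph. In detail:

[1:v]scale=128:36[watermask]: scales the second input (the logo) to 128x36 pixels and labels the result [watermask].

[0:v]fade=in:0:15[video]: applies a fade-in to the first input (the still picture), starting at frame 0 and lasting 15 frames, and labels the result [video].

[video][watermask]overlay=224:924: overlays the scaled logo on the faded picture, with the logo's top-left corner at (224, 924).

-vcodec libx264: encodes the video with libx264.

-r 25: sets the output frame rate to 25 fps.

-t 3: limits the output duration to 3 seconds.

-pix_fmt yuv420p: sets the output pixel format to YUV420P.

-preset ultrafast: uses the ultrafast encoder preset, trading compression efficiency for speed.

-y: overwrites the output file without asking.

/Users/Downloads/logo/logotrailerLogo.mp4: the output file path.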
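The filter string in this command can be generated from its parameters instead of typed by hand. The following is a minimal C sketch. The helper names `build_trailer_filter`, `fade_seconds` and `total_frames` are hypothetical. The fade duration assumes the looped picture runs at 25 fps, which is the image2 demuxer's default and matches `-r 25`.

```c
#include <stdio.h>
#include <string.h>

/* Builds the -filter_complex string of the command above (hypothetical helper).
 * Input 0 is the background picture, input 1 the logo. */
static int build_trailer_filter(char *buf, size_t size, int logo_w, int logo_h,
                                int fade_frames, int x, int y)
{
    return snprintf(buf, size,
                    "[1:v]scale=%d:%d[watermask];"
                    "[0:v]fade=in:0:%d[video];"
                    "[video][watermask]overlay=%d:%d",
                    logo_w, logo_h, fade_frames, x, y);
}

/* fade=in:0:N fades in over N frames, i.e. N / fps seconds at a constant frame rate. */
static double fade_seconds(int fade_frames, double fps)
{
    return fade_frames / fps;
}

/* -t seconds at -r fps gives seconds * fps frames (rounded to nearest). */
static int total_frames(double seconds, double fps)
{
    return (int)(seconds * fps + 0.5);
}
```

With 15 fade frames at 25 fps, the fade-in lasts 0.6 s, and `-t 3 -r 25` produces 75 frames.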


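Initializing the filter graph

Steps:

1. Create the filter graph.

2. Create a buffer filter context for each input (the entry point for input frames).

3. Create a buffersink filter (the exit point for output frames).

4. Extension: create several buffer filters so that multiple inputs can be linked together in one graph, then parse the filter description and configure the graph.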
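In step 2, each `buffer` source is configured through an option string. The sketch below formats that string the same way `init_more_filters` does when no size is forced. It is plain C without FFmpeg, and the helpers `buffersrc_args` and `input_label` are hypothetical. `pix_fmt=0` corresponds to `AV_PIX_FMT_YUV420P`, and the 1/12800 time base is only an example value.

```c
#include <stdio.h>
#include <string.h>

/* Formats the option string of one "buffer" source (hypothetical helper). */
static int buffersrc_args(char *buf, size_t size, int width, int height, int pix_fmt,
                          int tb_num, int tb_den, int sar_num, int sar_den)
{
    return snprintf(buf, size,
                    "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
                    width, height, pix_fmt, tb_num, tb_den, sar_num, sar_den);
}

/* Name of the i-th buffer source; the filter description refers to it as [in<i>]. */
static int input_label(char *buf, size_t size, int i)
{
    return snprintf(buf, size, "in%d", i);
}
```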

```c
#include <libavfilter/avfilter.h>
#include <libavfilter/buffersink.h>
#include <libavfilter/buffersrc.h>
#include <libavutil/mem.h>
#include <libavutil/opt.h>
// LOGE is the demo's Android logging macro

typedef struct {
    char name[50];                  // label of this input in the filter description: "in0", "in1", ...
    AVFormatContext *ifmt_ctx;
    AVCodecContext *dec_ctx;
    AVFilterContext *buffersrc_ctx; // "buffer" source that decoded frames of this input are pushed into
    int video_stream_index;
} filter_video_info;

typedef struct {
    filter_video_info *dest;        // one entry per input
    int size;                       // number of input files
    AVFilterContext *buffersink_ctx;
    AVFilterGraph *filter_graph;
    AVRational time_base;           // time base shared by all buffer sources
    int width;                      // if width and height are non-zero, every source is declared with this size
    int height;
} filters_info;

/**
 * Initializes a video filter graph with one or more inputs.
 * Input i is available in filters_descr as [in<i>]; the graph output must be labeled [out].
 * @param filters_descr filter description, e.g. "[in0][in1]overlay=10:10[out]"
 * @param info          inputs and time base; filter_graph, buffersrc_ctx and buffersink_ctx are filled in
 * @return >= 0 on success, a negative AVERROR code on failure
 */
int init_more_filters(const char *filters_descr, filters_info *info) {
    int ret = 0;
    const AVFilter *buffersrc = avfilter_get_by_name("buffer");
    const AVFilter *buffersink = avfilter_get_by_name("buffersink");
    enum AVPixelFormat pix_fmts[] = { AV_PIX_FMT_YUV420P, AV_PIX_FMT_NONE };
    AVFilterInOut *outputs = NULL;  // list of buffer sources: in0 -> in1 -> ...
    AVFilterInOut *inputs = avfilter_inout_alloc();

    // 1. create the graph
    info->filter_graph = avfilter_graph_alloc();
    if (!inputs || !info->filter_graph) {
        ret = AVERROR(ENOMEM);
        goto end;
    }
    info->filter_graph->nb_threads = 8;

    // 2. one buffer source per input
    for (int i = 0; i < info->size; ++i) {
        char args[512];
        AVCodecContext *dec_ctx = info->dest[i].dec_ctx;
        AVRational time_base = info->time_base;

        snprintf(info->dest[i].name, sizeof(info->dest[i].name), "in%d", i);
        if (info->width != 0 && info->height != 0) {
            // frames pushed into this source must then really have this size
            snprintf(args, sizeof(args), "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d",
                     info->width, info->height, AV_PIX_FMT_YUV420P,
                     time_base.num, time_base.den);
        } else {
            snprintf(args, sizeof(args),
                     "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
                     dec_ctx->width, dec_ctx->height, dec_ctx->pix_fmt,
                     time_base.num, time_base.den,
                     dec_ctx->sample_aspect_ratio.num, dec_ctx->sample_aspect_ratio.den);
        }
        LOGE("======name=%s args=%s==\n", info->dest[i].name, args);

        ret = avfilter_graph_create_filter(&info->dest[i].buffersrc_ctx, buffersrc,
                                           info->dest[i].name, args, NULL, info->filter_graph);
        if (ret < 0) {
            LOGE("Cannot create buffer source %s\n", info->dest[i].name);
            goto end;
        }
    }

    // 3. buffer sink: terminates the filter chain; only yuv420p is accepted
    ret = avfilter_graph_create_filter(&info->buffersink_ctx, buffersink, "out",
                                       NULL, NULL, info->filter_graph);
    if (ret < 0) {
        LOGE("Cannot create buffer sink\n");
        goto end;
    }
    ret = av_opt_set_int_list(info->buffersink_ctx, "pix_fmts", pix_fmts,
                              AV_PIX_FMT_NONE, AV_OPT_SEARCH_CHILDREN);
    if (ret < 0) {
        LOGE("Cannot set output pixel format\n");
        goto end;
    }

    // 4. link [in0], [in1], ... to the buffer sources. The list is built back to front,
    //    so it works for any number of inputs (no fixed-size array).
    for (int i = info->size - 1; i >= 0; --i) {
        AVFilterInOut *out = avfilter_inout_alloc();
        if (!out) {
            ret = AVERROR(ENOMEM);
            goto end;
        }
        out->name = av_strdup(info->dest[i].name);
        out->filter_ctx = info->dest[i].buffersrc_ctx;
        out->pad_idx = 0;
        out->next = outputs;
        outputs = out;
    }
    // ... and [out] to the buffer sink
    inputs->name = av_strdup("out");
    inputs->filter_ctx = info->buffersink_ctx;
    inputs->pad_idx = 0;
    inputs->next = NULL;

    // 5. parse the description into the graph and configure it
    if ((ret = avfilter_graph_parse_ptr(info->filter_graph, filters_descr,
                                        &inputs, &outputs, NULL)) < 0) {
        LOGE("======avfilter_graph_parse_ptr=%d==\n", ret);
        goto end;
    }
    if ((ret = avfilter_graph_config(info->filter_graph, NULL)) < 0) {
        LOGE("======avfilter_graph_config=%d==\n", ret);
        goto end;
    }

end:
    // frees whatever avfilter_graph_parse_ptr left unlinked; the filters themselves belong to the graph
    avfilter_inout_free(&inputs);
    avfilter_inout_free(&outputs);
    return ret;
}
```
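`avfilter_graph_parse_ptr()` expects the buffer sources as a linked list of `AVFilterInOut` entries. Each entry's `name` must match one of the labels `[in0]`, `[in1]`, and so on. The chaining can be shown without FFmpeg using a hypothetical stand-in struct, `inout_node`. Building the list back to front works for any number of inputs, unlike a fixed two-element array.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for AVFilterInOut, keeping only the fields used for chaining. */
typedef struct inout_node {
    char name[16];            /* label used in the filter description, e.g. "in0" */
    int pad_idx;              /* output pad index of the buffer source (always 0) */
    struct inout_node *next;
} inout_node;

/* Frees the whole list and sets *head to NULL. */
static void free_outputs(inout_node **head)
{
    while (*head) {
        inout_node *next = (*head)->next;
        free(*head);
        *head = next;
    }
}

/* Builds in0 -> in1 -> ... -> in(n-1) by prepending from the last index.
 * Returns NULL for n <= 0 or on allocation failure. */
static inout_node *build_outputs(int n)
{
    inout_node *head = NULL;
    for (int i = n - 1; i >= 0; --i) {
        inout_node *node = malloc(sizeof *node);
        if (!node) {
            free_outputs(&head);
            return NULL;
        }
        snprintf(node->name, sizeof node->name, "in%d", i);
        node->pad_idx = 0;
        node->next = head;
        head = node;
    }
    return head;
}

/* Counts the entries of the list. */
static int count_outputs(const inout_node *head)
{
    int n = 0;
    for (; head; head = head->next)
        n++;
    return n;
}
```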

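addPicLogo steps:

1. Open the input files (background picture, video, logo).

2. Initialize the filter graph.

3. Create the encoder thread.

4. Decode the inputs, push the frames into the filter graph, and put the frames it outputs into a queue.

5. The encoder thread reads the queue and encodes the output video.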
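In step 4, the output frames are timestamped in milliseconds and converted to the video stream's time base. The sketch below shows that arithmetic in plain C. `ms_to_tb` is a hypothetical integer equivalent of `av_rescale_q(ms, av_make_q(1, 1000), tb)` with its default round-to-nearest behavior, for non-negative inputs and without its overflow protection. `frames_in_clip` is also hypothetical.

```c
#include <stdint.h>

/* Converts a non-negative timestamp in milliseconds to ticks of the time base
 * tb_num/tb_den, rounding to nearest (halves up). Hypothetical helper. */
static int64_t ms_to_tb(int64_t ms, int tb_num, int tb_den)
{
    int64_t den = 1000LL * tb_num;
    return (ms * tb_den + den / 2) / den;
}

/* Counts the frames produced by "for (pts = 0; pts < duration_ms; pts += frame_ms)". */
static int frames_in_clip(int64_t duration_ms, int frame_ms)
{
    int n = 0;
    for (int64_t pts = 0; pts < duration_ms; pts += frame_ms)
        n++;
    return n;
}
```

At 25 fps each frame lasts 40 ms. In a 1/12800 time base that is 512 ticks, and a 3000 ms clip produces 75 frames.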

```c
#include <pthread.h>
#include <stdlib.h>
#include <string.h>
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libavfilter/avfilter.h>
#include <libavfilter/buffersink.h>
#include <libavfilter/buffersrc.h>
#include <libavutil/mathematics.h>
// media_info, OutEntity, open_input_video, logo_encode_thread, mgted_dataqueue_init/put and LOGE come from the demo project.
// av_register_all, avfilter_register_all and avcodec_decode_video2 were removed in FFmpeg 5.0, so this code needs an older FFmpeg.

int addPicLogo(const char *pngPath, const char *videoPath, const char *logoPath, const char *dstPath)
{
    int ret;
    int64_t pts_ms = 0;                       // timestamp of the next output frame, in milliseconds
    const int frame_duration_ms = 1000 / 25;  // 25 fps -> 40 ms per frame
    const int64_t duration_ms = 3000;         // 3 s clip -> 75 frames
    AVRational enc_timebase;
    int video_width = 0, video_height = 0;
    int logo_width = 0, logo_height = 0;
    int dst_x = 0, dst_y = 0;
    int png_width = 0, png_height = 0;        // background picture of the end clip
    AVPacket packet, logo_packet;
    AVFrame *video_Frame = NULL;              // decoded background, re-fed for every output frame
    AVFrame *wFrame = NULL;                   // current logo frame
    AVFrame *pFrame_out = NULL;
    OutEntity *outEntity = NULL;
    int thread_started = 0;
    media_info *logo_entity = NULL;
    media_info *png_entity = NULL;
    media_info *video_entity = NULL;
    int got_frame = 0;
    char filter_args[512];
    double fps = 0;
    filters_info *transcoder = NULL;
    // other descriptions that work with init_more_filters:
    // "[in0][in1]overlay=10:10[out]"
    // "[in0]split[main][tmp];[tmp]scale=w=960:h=1080[inn0];[main][inn0]overlay=0:0[x1];[in1]scale=w=960:h=1080[inn1];[x1][inn1]overlay=960:0[out]"
    // "[in1]scale=128:36[watermask];[in0]fade=in:0:15[video];[video][watermask]overlay=224:924[out]"

    av_init_packet(&packet);
    packet.data = NULL;
    packet.size = 0;
    av_init_packet(&logo_packet);
    logo_packet.data = NULL;
    logo_packet.size = 0;

    av_register_all();
    avfilter_register_all();

    // 1. open the inputs. The background is a single picture and decodes to only one frame;
    //    that frame is re-fed for every output frame below, otherwise only one picture would be shown.
    if ((ret = open_input_video(pngPath, &png_entity, 0)) < 0) {
        LOGE("==open_input_file==png_entity=ret=%d=", ret);
        goto end;
    }
    if ((ret = open_input_video(videoPath, &video_entity, 0)) < 0) {
        LOGE("====open_input_file=video_entity==ret=%d=", ret);
        goto end;
    }
    if ((ret = open_input_video(logoPath, &logo_entity, 0)) < 0) {
        LOGE("==open_input_file==logo_entity=ret=%d=", ret);
        goto end;
    }

    enc_timebase = video_entity->dec_fmt_ctx->streams[video_entity->in_video_stream_index]->time_base;
    png_width = png_entity->video_dec_ctx->width;
    png_height = png_entity->video_dec_ctx->height;
    video_width = video_entity->video_dec_ctx->width;
    video_height = video_entity->video_dec_ctx->height;
    fps = av_q2d(video_entity->dec_fmt_ctx->streams[video_entity->in_video_stream_index]->avg_frame_rate);
    LOGE("========fps: %d\n", (int)fps);

    // 3. start the encoder thread, which consumes video_frame_queue
    outEntity = (OutEntity *)calloc(1, sizeof(OutEntity));
    if (!outEntity) {
        ret = AVERROR(ENOMEM);
        goto end;
    }
    outEntity->timebase = enc_timebase;
    outEntity->frame_rate = (int)fps;
    outEntity->out_filepath = strdup(dstPath);
    outEntity->entity = video_entity;
    outEntity->width = video_width;
    outEntity->height = video_height;
    outEntity->error_flag = 0;
    outEntity->end_flag = 0;
    if (!outEntity->out_filepath) {
        ret = AVERROR(ENOMEM);
        goto end;
    }
    mgted_dataqueue_init(&(outEntity->video_frame_queue));
    ret = pthread_create(&(outEntity->thread_id), NULL, logo_encode_thread, outEntity);
    if (ret != 0) {              // pthread_create returns a positive error number, never a negative value
        ret = AVERROR(ret);
        goto end;
    }
    thread_started = 1;

    // logo size: 160x44 reference design, scaled with the video
    if (video_width > video_height) {          // landscape: 44 px of logo height per 720 px of video height
        logo_height = video_height * 44 / 720;
        logo_width = logo_height * 160 / 44;
    } else {                                   // portrait or square: 160 px of logo width per 720 px of video width
        logo_width = video_width * 16 / 72;
        logo_height = logo_width * 44 / 160;
    }
    // keep both dimensions even (2x2 chroma subsampling of yuv420p)
    if (logo_width & 1)
        logo_width += 1;
    if (logo_height & 1)
        logo_height += 1;
    // centered horizontally, 64 px (portrait) or 32 px (otherwise) above the bottom edge
    dst_x = video_width / 2 - logo_width / 2;
    dst_y = video_height - (video_width < video_height ? 64 : 32) - logo_height;

    // [in0] = background picture (scaled to the video size if needed), [in1] = logo
    if (png_width != video_width || png_height != video_height) {
        snprintf(filter_args, sizeof(filter_args),
                 "[in1]scale=%d:%d[watermask];[in0]scale=%d:%d,fade=in:0:15[video];[video][watermask]overlay=%d:%d[out]",
                 logo_width, logo_height, video_width, video_height, dst_x, dst_y);
    } else {
        snprintf(filter_args, sizeof(filter_args),
                 "[in1]scale=%d:%d[watermask];[in0]fade=in:0:15[video];[video][watermask]overlay=%d:%d[out]",
                 logo_width, logo_height, dst_x, dst_y);
    }

    // 2. filter graph with two inputs
    transcoder = (filters_info *)calloc(1, sizeof(filters_info));
    if (!transcoder) {
        ret = AVERROR(ENOMEM);
        goto end;
    }
    transcoder->dest = (filter_video_info *)calloc(2, sizeof(filter_video_info));  // both entries zeroed
    if (!transcoder->dest) {
        ret = AVERROR(ENOMEM);
        goto end;
    }
    transcoder->dest[0].video_stream_index = png_entity->in_video_stream_index;
    transcoder->dest[0].dec_ctx = png_entity->video_dec_ctx;
    transcoder->dest[0].ifmt_ctx = png_entity->dec_fmt_ctx;
    transcoder->dest[1].video_stream_index = logo_entity->in_video_stream_index;
    transcoder->dest[1].dec_ctx = logo_entity->video_dec_ctx;
    transcoder->dest[1].ifmt_ctx = logo_entity->dec_fmt_ctx;
    transcoder->size = 2;
    transcoder->time_base = enc_timebase;
    if ((ret = init_more_filters(filter_args, transcoder)) < 0) {
        LOGE("==init_filters===ret=%d=", ret);
        goto end;
    }

    video_Frame = av_frame_alloc();
    wFrame = av_frame_alloc();
    if (!video_Frame || !wFrame) {
        ret = AVERROR(ENOMEM);
        goto end;
    }

    // decode one frame of the background picture
    while (!got_frame) {
        if (outEntity->end_flag)
            goto end;
        ret = av_read_frame(png_entity->dec_fmt_ctx, &packet);
        if (ret < 0)
            goto end;
        if (packet.stream_index == png_entity->in_video_stream_index) {
            av_packet_rescale_ts(&packet,
                                 png_entity->dec_fmt_ctx->streams[png_entity->in_video_stream_index]->time_base,
                                 enc_timebase);
            ret = avcodec_decode_video2(png_entity->video_dec_ctx, video_Frame, &got_frame, &packet);
        }
        av_packet_unref(&packet);
        if (ret < 0) {
            LOGE("===src_entity =Error decoding video\n");
            goto end;
        }
    }

    // 4. produce the clip: background + logo -> filter graph -> queue
    while (pts_ms < duration_ms) {
        if (outEntity->end_flag)
            goto end;

        // background: same picture every time, only the pts changes
        video_Frame->pts = av_rescale_q(pts_ms, av_make_q(1, 1000), enc_timebase);
        // KEEP_REF: the graph gets its own reference, so video_Frame stays valid for the next iteration
        if (av_buffersrc_add_frame_flags(transcoder->dest[0].buffersrc_ctx, video_Frame,
                                         AV_BUFFERSRC_FLAG_KEEP_REF) < 0) {
            LOGE("Error while feeding the filtergraph\n");
        }
        pts_ms += frame_duration_ms;

        // decode the next logo frame and feed it; at EOF the overlay keeps showing the last logo frame
        while (1) {
            ret = av_read_frame(logo_entity->dec_fmt_ctx, &logo_packet);
            if (ret < 0)
                break;
            got_frame = 0;
            if (logo_packet.stream_index == logo_entity->in_video_stream_index) {
                av_packet_rescale_ts(&logo_packet,
                                     logo_entity->dec_fmt_ctx->streams[logo_entity->in_video_stream_index]->time_base,
                                     enc_timebase);
                ret = avcodec_decode_video2(logo_entity->video_dec_ctx, wFrame, &got_frame, &logo_packet);
            }
            av_packet_unref(&logo_packet);
            if (ret < 0) {
                LOGE("==logo_entity=src_entity =Error decoding video\n");
                break;
            }
            if (got_frame) {
                // without flags, the buffer source takes over wFrame's reference and resets wFrame
                if (av_buffersrc_add_frame(transcoder->dest[1].buffersrc_ctx, wFrame) < 0) {
                    LOGE("======transcoder->dest[1]=====\n");
                }
                break;
            }
        }

        // fetch every frame the graph can output now and hand it to the encoder thread
        while (1) {
            pFrame_out = av_frame_alloc();
            if (!pFrame_out) {
                ret = AVERROR(ENOMEM);
                goto end;
            }
            ret = av_buffersink_get_frame(transcoder->buffersink_ctx, pFrame_out);
            if (ret < 0) {               // AVERROR(EAGAIN): the graph needs more input
                av_frame_free(&pFrame_out);
                break;
            }
            mgted_dataqueue_put(&(outEntity->video_frame_queue), pFrame_out, 0);  // the encoder thread owns it now
        }
    }

    // flush: signal EOF on both inputs once, then drain the remaining frames
    av_buffersrc_add_frame(transcoder->dest[0].buffersrc_ctx, NULL);
    av_buffersrc_add_frame(transcoder->dest[1].buffersrc_ctx, NULL);
    while (1) {
        if (outEntity->end_flag)
            goto end;
        pFrame_out = av_frame_alloc();
        if (!pFrame_out) {
            ret = AVERROR(ENOMEM);
            goto end;
        }
        ret = av_buffersink_get_frame(transcoder->buffersink_ctx, pFrame_out);
        if (ret < 0) {                   // AVERROR_EOF once the graph is fully drained
            av_frame_free(&pFrame_out);
            break;
        }
        mgted_dataqueue_put(&(outEntity->video_frame_queue), pFrame_out, 0);
    }

    ret = 0;  // success

end:
    if (outEntity) {
        outEntity->end_flag = 1;         // tells the encoder thread that no more frames will come
        if (thread_started)
            pthread_join(outEntity->thread_id, NULL);
        if (outEntity->error_flag == 1)
            ret = -1;
        free(outEntity->out_filepath);
        free(outEntity);
    }
    av_frame_free(&video_Frame);
    av_frame_free(&wFrame);
    if (transcoder) {
        avfilter_graph_free(&transcoder->filter_graph);  // also frees the buffer sources and the sink
        free(transcoder->dest);
        free(transcoder);
    }
    if (png_entity) {
        avcodec_free_context(&png_entity->video_dec_ctx);
        avformat_close_input(&png_entity->dec_fmt_ctx);
        av_free(png_entity);
    }
    if (logo_entity) {
        avcodec_free_context(&logo_entity->video_dec_ctx);
        avformat_close_input(&logo_entity->dec_fmt_ctx);
        av_free(logo_entity);
    }
    return ret < 0 ? -1 : ret;
}
```
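The logo size and position logic in `addPicLogo` can be isolated and checked without FFmpeg. The sketch below reproduces that arithmetic together with the choice of filter description. `logo_rect`, `logo_geometry` and `logo_filter_args` are hypothetical names. The 160x44 reference size and the 64/32 px margins come from the code above.

```c
#include <stdio.h>
#include <string.h>

typedef struct { int w, h, x, y; } logo_rect;  /* hypothetical result type */

/* Logo size and top-left position for a video of video_w x video_h. */
static logo_rect logo_geometry(int video_w, int video_h)
{
    logo_rect r;
    if (video_w > video_h) {          /* landscape: 44 px of logo height per 720 px of video height */
        r.h = video_h * 44 / 720;
        r.w = r.h * 160 / 44;
    } else {                          /* portrait or square: 160 px of logo width per 720 px of video width */
        r.w = video_w * 16 / 72;
        r.h = r.w * 44 / 160;
    }
    if (r.w & 1) r.w += 1;            /* keep both dimensions even */
    if (r.h & 1) r.h += 1;
    r.x = video_w / 2 - r.w / 2;      /* centered horizontally */
    r.y = video_h - (video_w < video_h ? 64 : 32) - r.h;  /* 64 px margin in portrait, 32 px otherwise */
    return r;
}

/* Filter description; the background is scaled only when its size differs from the video. */
static int logo_filter_args(char *buf, size_t size, int bg_w, int bg_h, int video_w, int video_h)
{
    logo_rect r = logo_geometry(video_w, video_h);
    if (bg_w != video_w || bg_h != video_h)
        return snprintf(buf, size,
                        "[in1]scale=%d:%d[watermask];[in0]scale=%d:%d,fade=in:0:15[video];"
                        "[video][watermask]overlay=%d:%d[out]",
                        r.w, r.h, video_w, video_h, r.x, r.y);
    return snprintf(buf, size,
                    "[in1]scale=%d:%d[watermask];[in0]fade=in:0:15[video];"
                    "[video][watermask]overlay=%d:%d[out]",
                    r.w, r.h, r.x, r.y);
}
```

For example, a 1920x1080 video gets a 240x66 logo at (840, 982), and a 720x1280 video gets a 160x44 logo at (280, 1172).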

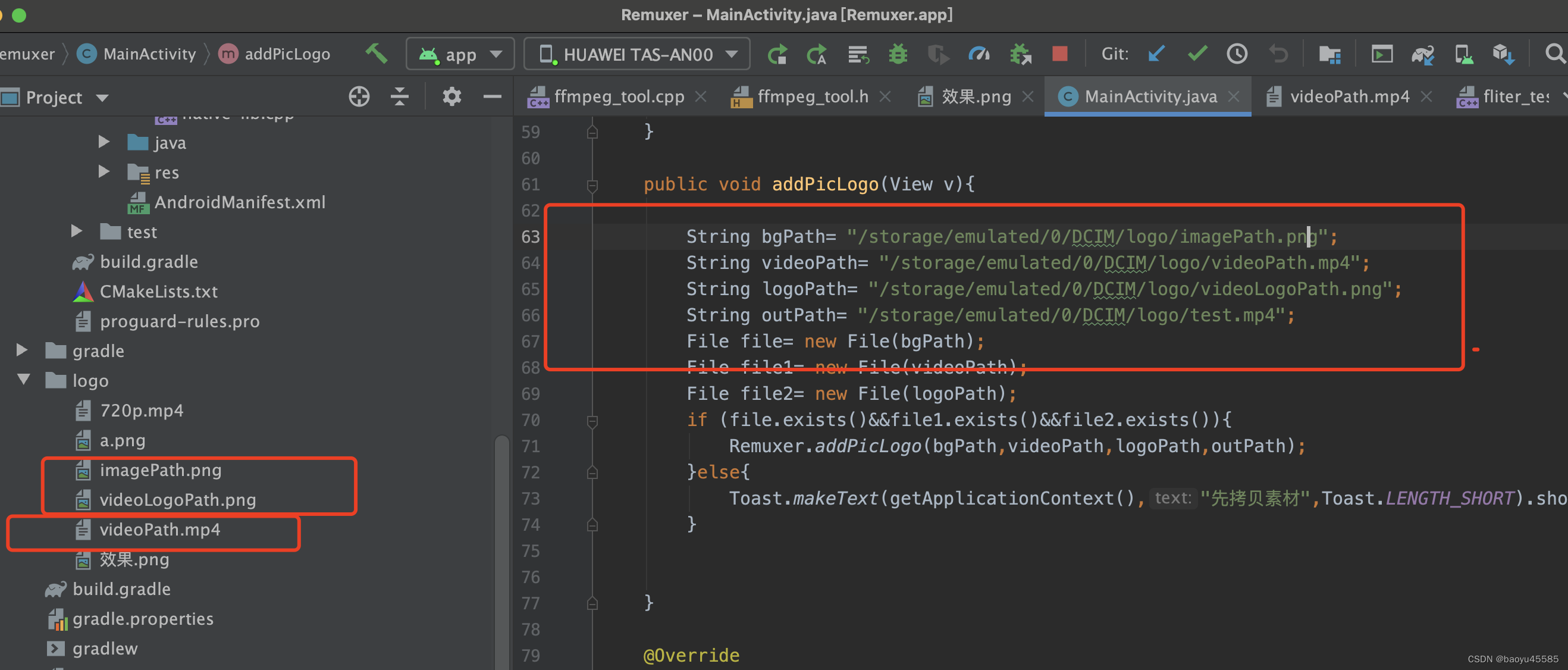

Demo notes:

The demo runs on Android; the material it uses is in the demo's logo folder.

Result

(Result video: logotrailerLogo)

QQ:455853625
