Since the release of the TensorFlow Object Detection API a lot of enthusiasts have been sharing their own experience of how to train a model for your purposes in a couple of steps (with your purpose being a raccoon alarm or hand detector). However, none of the tutorials actually help to understand the way the model is trained, which is not a good option in case of developing the not-a-toy-but-a-grown-up-production model.

There’s a plenty of knobs to turn in order to either understand or improve the training process on TFODAPI, and in this post I will try to cover basic questions that came up while I was delving in this flourishing API. They are:

- How is training loss calculated?

- How are negative examples mined at all?

To address these questions, we need to stick to some detector architecture and further investigate the way its features are implemented. Let’s pick the simplest model from the zoo: Single-Shot Multibox Detector (SSD) with feature extraction head from MobileNet. For a better understanding of what we are going to do I would totally recommend to get familiar with SSD either by reading original paper or by watching this video from deepsystems.ai team. Just to recap let’s list some features of SSD:

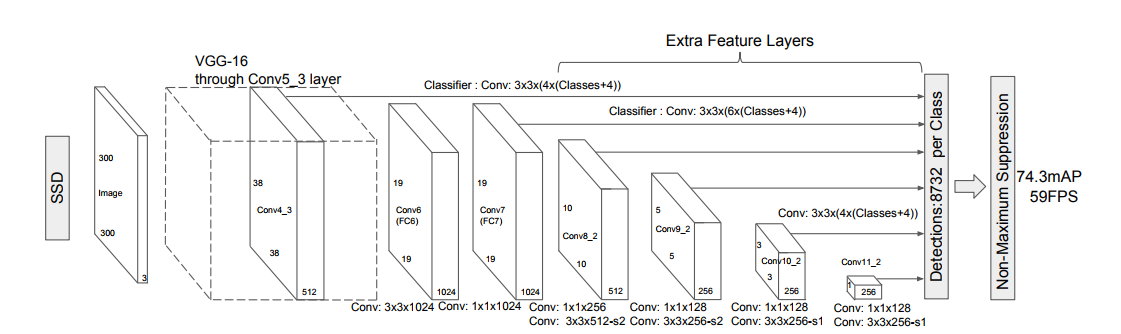

- The first part (“feature extraction”) of the whole network is a bunch of convolutional blocks from some other network (like VGG, Inception and so). Outputs of this part are fed into the detection part.

- The second part (“detection”) of the whole network is a sequence of detection blocks, with each block having its own output that contributes to the final output of the network. Feature maps are reduced in size after each block (in order to capture different scales).

- Each of the detection blocks has 3 branches: box generation, classification and correction of localization. The first one is responsible for cropping rectangles (boxes) of the various aspect ratios centered in regular grid nodes over the feature map. The second one is responsible for predicting the C+1 confidence scores (C for all your classes + 1 for the background) for each generated box. The third one is responsible for the fine adjustment of positions for all boxes generated.

- Outputs of each detection block are collected in the last layer where smart filtering of the predictions (non-maxima suppresion) happens.

- Loss used for training is a sum of Classification and Localization losses with the latter calculated only for boxes with the same class label as the ground truth.

Figure of the SSD (VGG-16) from the original paper (see link above)

So, if you carefully read the bullets above, you might be wondering the same 3 obvious questions to TensorFlow as I stated in the first place. Let’s get to them.

How is training loss calculated?

As stated above, in original SSD network there are two components of the loss: the first one related to the goodness of classification and the second one related to the goodness of localization of the correctly classified object. I’m not placing ugly formulas here — see original paper for details.

The code for most of the detection stuff is in core subfolder of the TFODAPI github (models/research/object_detection/core/). The code for loss classes is in losses.py module. You can see that there’s more than one option for both classification and localization components (quoting lines 18–27):

Localization losses: * WeightedL2LocalizationLoss * WeightedSmoothL1LocalizationLoss * WeightedIOULocalizationLoss

Classification losses: * WeightedSigmoidClassificationLoss * WeightedSoftmaxClassificationLoss * BootstrappedSigmoidClassificationLoss

The cool thing about this API is that you only have to specify the desired parameters inside the pipeline .config file without changing the code itself. In particular, the options for the loss are stored in model/ssd/loss/* sections of the configuration file (see example of ssd_mobilenet_v1_coco.config here, line 108). In case of vanilla SSD smoothed L1 loss is used for localization and weighted sigmoid loss is used for classification:

Loss definition in ssd_mobilenet_v1_coco.config

How are negative examples mined?

In order to train a detector with TFODAPI you don’t need to provide negative examples: the trainer can use your labeled images as well for this purpose. The procedure is called online hard-negative mining and that is why: for each “negative” generated box (that is: such box that its overlap with any of the ground truth boxes is less than specified threshold) on feature map the classification loss is calculated (it has to be high as far as it is not labeled as any object instance), then these negative boxes are sorted by their respective loss in descending order and top-k of them are used for backprop. Three questions arise here.

- Why not take all of them? Well, in case of object detection, you most probably have not-so-many objects on training images (usually less than a dozen), so the largest area on the image is a background (otherwise it wouldn’t be called background, right?). So taking all of the negatives leads to a great imbalance of the dataset towards negative examples, and that is of no good for a good training. In order to keep your dataset balanced you can fix the number of negative examples per single positive one — in this case your dataset will always have a fixed ratio of class representatives (for example, 3/1).

- Why take top-k instead of random-k? Imagine that you take random negatives. Random here means that we don’t take into account how hard for our model these examples are — and, probably, we sometimes will force our model to train on examples that are already chewed. Now imagine that you take only the hard ones. That’s it.

- How do I choose k? There are more than one approach to it. We may stick to the authors of original SSD model and choose k such that the number of negatives per one positive is 3 (that’s 3/1 again).

The definition for miner is in model/loss/hard_examples_miner section of the config (see same example here, line 119):

hard_example_miner definition in ssd_mobilenet_v1_coco.config

As you can see, the ratio of negatives and positives is 3/1 as expected.

In this post we took a look at two (out of many more) aspects of simple detector training using TFODAPI. In the following post I will try to cover some more aspects including generation of the boxes and metrics used for evaluation of the model on validation set.

1万+

1万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?