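For more on Linux memory, follow the "Linux内存笔记" (Linux Memory Notes) WeChat official account. I look forward to more exchange and learning with everyone.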

Original article; please credit the source when reposting.

I. What is an anonymous page?

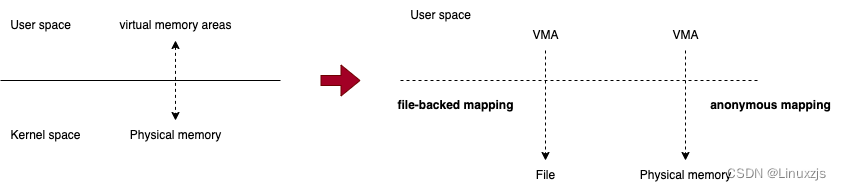

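The Linux operating system has two layers: user space and kernel space. User space reaches physical memory through virtual addresses that the MMU translates. User space usually maps memory in one of two ways: anonymous mapping or file mapping. The two mapping types produce two kinds of memory, anon pages and file pages, as /proc/meminfo shows: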

```
Active(anon):       352 kB
Inactive(anon):       0 kB
Active(file):      2444 kB
Inactive(file):   55520 kB
```

The mmap(2) man page describes anonymous mappings as follows:

```
MAP_ANON
       Synonym for MAP_ANONYMOUS.  Deprecated.

MAP_ANONYMOUS
       The mapping is not backed by any file; its contents are initialized to zero.
       The fd argument is ignored; however, some implementations require fd to be -1
       if MAP_ANONYMOUS (or MAP_ANON) is specified, and portable applications should
       ensure this.  The offset argument should be zero.  The use of MAP_ANONYMOUS in
       conjunction with MAP_SHARED is supported on Linux only since kernel 2.4.
```
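Below is a minimal sketch of the call the man page describes. It assumes Linux with glibc, which is why `_DEFAULT_SOURCE` is defined to expose `MAP_ANONYMOUS`. The helper names `map_anon` and `all_zero` are hypothetical. The sketch passes fd = -1 and offset 0, as portable code should, and checks for `MAP_FAILED` rather than NULL. It also confirms that a fresh mapping reads as zero.

```c
#define _DEFAULT_SOURCE          /* expose MAP_ANONYMOUS in <sys/mman.h> under strict -std modes */
#include <stddef.h>
#include <sys/mman.h>

/* Hypothetical helper: create a private anonymous mapping of len bytes.
 * fd = -1 and offset = 0, as the man page recommends for portability. */
void *map_anon(size_t len)
{
    void *p = mmap(NULL, len, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    return p == MAP_FAILED ? NULL : p;   /* mmap signals failure with MAP_FAILED, not NULL */
}

/* Hypothetical helper: returns 1 if every byte in [p, p + len) is zero. */
int all_zero(const unsigned char *p, size_t len)
{
    for (size_t i = 0; i < len; i++)
        if (p[i] != 0)
            return 0;
    return 1;
}
```

A caller releases the region with `munmap(p, len)` when it is done.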

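The man page makes it clear that an anonymous mapping is not associated with any file. When mmap creates an anonymous mapping, the fd argument is ignored. Some implementations require fd to be -1, so there is no file to look up through fd. User processes and threads reach physical memory through anonymous mappings, mostly for the heap, the stack and mmap regions. Common scenarios: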

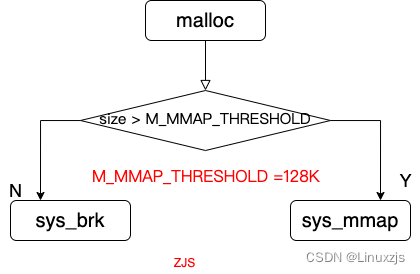

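1. malloc: until the allocated memory is written, only virtual address space is assigned and no physical memory is allocated. Physical pages are allocated, and mapped to those virtual addresses, only when the memory is actually used.

2. brk: when the program's data grows and the heap must expand, the brk system call is used to request memory and extend the heap.

3. Anonymous mmap: like malloc, only virtual addresses are allocated while the memory is unused. Physical pages are allocated and mapped to the virtual addresses on first use.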
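Item 2 can be observed directly with the sketch below. It assumes glibc, whose `sbrk()` wraps the `brk` system call. musl, for example, only supports `sbrk(0)`, so the sketch will not work there. `grow_heap` is a hypothetical name. As with an anonymous mmap, the bytes added to the heap stay purely virtual until they are touched.

```c
#define _DEFAULT_SOURCE          /* declare sbrk() in <unistd.h> with glibc */
#include <stddef.h>
#include <stdint.h>
#include <unistd.h>

/* Hypothetical helper: extend the heap by `increment` bytes through brk.
 * sbrk() returns the previous program break, which is the start of the
 * newly added region, or (void *)-1 on failure. */
void *grow_heap(intptr_t increment)
{
    void *old_break = sbrk(increment);
    return old_break == (void *)-1 ? NULL : old_break;
}
```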

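The source code shows that malloc, brk and mmap rely on very similar underlying mechanisms. A later article will analyze those interfaces in detail; this one focuses on the anonymous page fault path.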

II. Source code analysis

```c
static int handle_pte_fault(struct vm_fault *vmf)
{
    ...
    if (!vmf->pte) {
        if (vma_is_anonymous(vmf->vma))
            return do_anonymous_page(vmf);
        else
            return do_fault(vmf);
    }
    ...
}
```

A page fault is treated as an anonymous page fault when two conditions hold: no PTE exists yet for the faulting virtual address, and vma->vm_ops is NULL.

```c
static int do_anonymous_page(struct vm_fault *vmf)
{
    struct vm_area_struct *vma = vmf->vma;
    struct mem_cgroup *memcg;
    struct page *page;
    int ret = 0;
    pte_t entry;

    /* A shared (VM_SHARED) mapping is rejected here with VM_FAULT_SIGBUS */
    if (vma->vm_flags & VM_SHARED)
        return VM_FAULT_SIGBUS;
    ...
```

Compare this with how mmap sets up an anonymous mapping:

```c
/*
 * The caller must hold down_write(&current->mm->mmap_sem).
 */
unsigned long do_mmap(struct file *file, unsigned long addr,
                      unsigned long len, unsigned long prot,
                      unsigned long flags, vm_flags_t vm_flags,
                      unsigned long pgoff, unsigned long *populate,
                      struct list_head *uf)
{
    ...
    /* File mapping path */
    if (file) {
        ...
    } else {
        /* Anonymous memory mapping, not a file mapping */
        switch (flags & MAP_TYPE) {
        case MAP_SHARED:
            if (vm_flags & (VM_GROWSDOWN|VM_GROWSUP))
                return -EINVAL;
            /*
             * Ignore pgoff.
             */
            pgoff = 0;
            vm_flags |= VM_SHARED | VM_MAYSHARE;
            break;
        case MAP_PRIVATE:
            /*
             * Set pgoff according to addr for anon_vma.
             */
            pgoff = addr >> PAGE_SHIFT;
            break;
        default:
            return -EINVAL;
        }
    }
    ...
}
```

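With MAP_SHARED, mmap adds the shared attribute to the vma (vm_flags |= VM_SHARED). do_anonymous_page, however, returns VM_FAULT_SIGBUS as soon as it finds VM_SHARED set in vma->vm_flags. This yields an important fact: an anonymous mmap goes through the anonymous page fault path, and produces anonymous pages, only when it uses MAP_PRIVATE | MAP_ANONYMOUS. In practice a shared anonymous mapping never reaches this check. mmap_region attaches shmem vm_ops to it, so vma_is_anonymous() is false and its faults go to do_fault instead, as the MAP_SHARED demo below shows. MAP_SHARED will be covered in more detail together with tmpfs. Back to do_anonymous_page: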

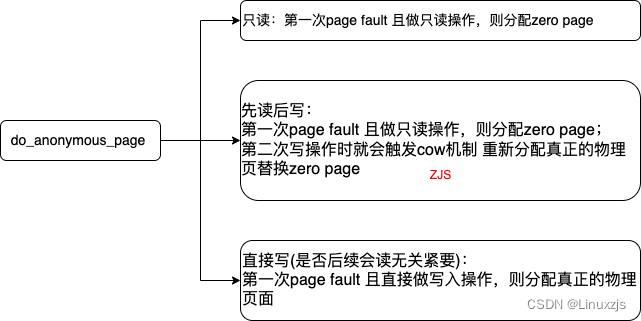

First anonymous page fault caused by a read:

```c
if (!(vmf->flags & FAULT_FLAG_WRITE) &&
        !mm_forbids_zeropage(vma->vm_mm)) {
    entry = pte_mkspecial(pfn_pte(my_zero_pfn(vmf->address),
                                  vma->vm_page_prot));
    vmf->pte = pte_offset_map_lock(vma->vm_mm, vmf->pmd,
                                   vmf->address, &vmf->ptl);
    if (!pte_none(*vmf->pte))
        goto unlock;
    ret = check_stable_address_space(vma->vm_mm);
    if (ret)
        goto unlock;
    /* Deliver the page fault to userland, check inside PT lock */
    if (userfaultfd_missing(vma)) {
        pte_unmap_unlock(vmf->pte, vmf->ptl);
        return handle_userfault(vmf, VM_UFFD_MISSING);
    }
    goto setpte;
}
```

Zero page:

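When an anonymous page fault is caused by an attempted write, the arm64 fault handler adds FAULT_FLAG_WRITE to the fault flags. These flags are mm_flags, which are later passed down as vmf->flags; vm_flags is not where the bit goes.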

```c
static int __kprobes do_page_fault(unsigned long addr, unsigned int esr,
                                   struct pt_regs *regs)
{
    ...
    /* Instruction abort from user mode (EL0): the vma must allow VM_EXEC */
    if (is_el0_instruction_abort(esr)) {
        vm_flags = VM_EXEC;
    } else if ((esr & ESR_ELx_WNR) && !(esr & ESR_ELx_CM)) {
        /* Fault on a write attempt: the vma must allow VM_WRITE,
         * and FAULT_FLAG_WRITE is added to mm_flags */
        vm_flags = VM_WRITE;
        mm_flags |= FAULT_FLAG_WRITE;
    }
    ...
    fault = __do_page_fault(mm, addr, mm_flags, vm_flags, tsk);
}
```

```
/* The full call chain */
__do_page_fault -> handle_mm_fault(vma, addr & PAGE_MASK, mm_flags);
    |-> __handle_mm_fault -> handle_pte_fault
        |-> do_anonymous_page
```
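The write test in do_page_fault can be modelled on its own in the hypothetical user-space sketch below. The two constants mirror arm64's ESR_ELx_WNR (bit 6, "write not read") and ESR_ELx_CM (bit 8, "cache maintenance"). The `SKETCH_`-prefixed names and `fault_is_write` itself are made up for illustration. CM is excluded because the architecture reports WnR = 1 for faults on cache maintenance instructions, even though they do not write data.

```c
#include <stdbool.h>
#include <stdint.h>

/* Bit positions as in arm64's asm/esr.h; the names here are illustrative. */
#define SKETCH_ESR_WNR (UINT64_C(1) << 6)  /* data abort caused by a write */
#define SKETCH_ESR_CM  (UINT64_C(1) << 8)  /* abort came from a cache maintenance op */

/* Mirrors the condition in do_page_fault(): a fault counts as a write (and so
 * gets FAULT_FLAG_WRITE) only when WnR is set and CM is clear. */
bool fault_is_write(uint64_t esr)
{
    return (esr & SKETCH_ESR_WNR) && !(esr & SKETCH_ESR_CM);
}
```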

If the first access to an anonymous page is a read, do_anonymous_page maps the zero page. That raises two questions: what is the zero page, and what is it for?

```c
/* mmu.c
 * Empty_zero_page is a special page that is used for zero-initialized data
 * and COW.
 */
unsigned long empty_zero_page[PAGE_SIZE / sizeof(unsigned long)] __page_aligned_bss;
EXPORT_SYMBOL(empty_zero_page);

/* pgtable.h
 * ZERO_PAGE is a global shared page that is always zero: used
 * for zero-mapped memory areas etc..
 */
extern unsigned long empty_zero_page[PAGE_SIZE / sizeof(unsigned long)];
#define ZERO_PAGE(vaddr) phys_to_page(__pa_symbol(empty_zero_page))

/* memory.c
 * CONFIG_MMU architectures set up ZERO_PAGE in their paging_init()
 */
static int __init init_zero_pfn(void)
{
    zero_pfn = page_to_pfn(ZERO_PAGE(0));
    return 0;
}
core_initcall(init_zero_pfn);
```

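What the zero page is: a special physical page that the kernel sets aside and fills with zeros at boot. On arm64 this is the page-aligned empty_zero_page array in .bss, shown above. As a result, a read-only anonymous page fault always reads back zeros.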

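What the zero page is for: it is an optimization aimed specifically at anonymous memory. Suppose a process maps a large region and only ever reads it. Every read returns 0, so allocating real pages for the whole region would waste a great deal of memory. Mapping the zero page instead costs a single physical page (4 KiB here, and there is only one zero page for the whole system). This saves memory, and because no page has to be allocated, the anonymous page fault is also handled faster. The English description below explains the zero page; see the original article for more.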

if a process instantiates a new (non-huge) page by trying to read from it, the kernel still will not allocate a new memory page. Instead, it maps a special page, called simply the “zero page,” into the process’s address space instead. Thus, all unwritten anonymous pages, across all processes in the system, are, in fact, sharing one special page. Needless to say, the zero page is always mapped read-only; it would not do to have some process changing the value of zero for everybody else. Whenever a process attempts to write to the zero page, it will generate a write-protection fault; the kernel will then (finally) get around to allocating a real page of memory and substitute it into the process’s address space at the right spot.
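The quoted behaviour can be spot-checked from user space with the sketch below. It is a hedged illustration under these assumptions:

* the read fault is served by the shared zero page, as quoted;
* the first write then gets a private page;
* `check_zero_then_private` is a hypothetical helper name.

The sketch observes only what a program can see: the read returns 0, and a write to one page does not change a neighbouring page that is still backed by the zero page.

```c
#define _DEFAULT_SOURCE          /* expose MAP_ANONYMOUS */
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical helper: map two anonymous pages, read both (read faults),
 * then write only the first. Returns 1 if the reads saw 0 and the unwritten
 * second page still reads 0 afterwards, 0 otherwise, -1 on mmap failure. */
int check_zero_then_private(void)
{
    long psz = sysconf(_SC_PAGESIZE);
    volatile char *p = mmap(NULL, 2 * (size_t)psz, PROT_READ | PROT_WRITE,
                            MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;

    int ok = (p[0] == 0 && p[psz] == 0);   /* read faults: both read as zero */
    p[0] = 0x5A;                           /* write fault: first page gets its own frame */
    ok = ok && p[0] == 0x5A && p[psz] == 0; /* second page is unaffected */

    munmap((void *)p, 2 * (size_t)psz);
    return ok;
}
```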

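If the memory is written after a read-only access, another page fault occurs: a write-protection fault that copies away from the zero page (copy-on-write). Back in do_anonymous_page, where is this read-only attribute set? Again, the answer lies in mmap: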

```
unsigned long do_mmap(struct file *file, unsigned long addr,
                      unsigned long len, unsigned long prot,
                      unsigned long flags, vm_flags_t vm_flags,
                      unsigned long pgoff, unsigned long *populate,
                      struct list_head *uf)
    |-> mmap_region(file, addr, len, vm_flags, pgoff, uf);
        |-> vma->vm_page_prot = vm_get_page_prot(vm_flags);
```

```c
/* description of effects of mapping type and prot in current implementation.
 * this is due to the limited x86 page protection hardware.  The expected
 * behavior is in parens:
 *
 * map_type     prot
 *              PROT_NONE     PROT_READ     PROT_WRITE      PROT_EXEC
 * MAP_SHARED   r: (no) no    r: (yes) yes  r: (no) yes     r: (no) yes
 *              w: (no) no    w: (no) no    w: (yes) yes    w: (no) no
 *              x: (no) no    x: (no) yes   x: (no) yes     x: (yes) yes
 *
 * MAP_PRIVATE  r: (no) no    r: (yes) yes  r: (no) yes     r: (no) yes
 *              w: (no) no    w: (no) no    w: (copy) copy  w: (no) no
 *              x: (no) no    x: (no) yes   x: (no) yes     x: (yes) yes
 */
pgprot_t protection_map[16] __ro_after_init = {
    __P000, __P001, __P010, __P011, __P100, __P101, __P110, __P111,
    __S000, __S001, __S010, __S011, __S100, __S101, __S110, __S111
};

pgprot_t vm_get_page_prot(unsigned long vm_flags)
{
    return __pgprot(pgprot_val(protection_map[vm_flags &
                (VM_READ|VM_WRITE|VM_EXEC|VM_SHARED)]) |
            pgprot_val(arch_vm_get_page_prot(vm_flags)));
}
```

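The access permissions passed to mmap end up in vm_flags. vm_get_page_prot uses vm_flags to index protection_map and so builds the PTE permission bits. In __Sxyz / __Pxyz, S means shared and P means private. The three digits are, from left to right, the exec, write and read bits, so __P011, for example, is a private read+write mapping.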

```c
/* shared+writable pages are clean by default, hence PTE_RDONLY|PTE_WRITE */
#define PAGE_SHARED        __pgprot(_PAGE_DEFAULT | PTE_USER | PTE_RDONLY | PTE_NG | PTE_PXN | PTE_UXN | PTE_WRITE)
#define PAGE_SHARED_EXEC   __pgprot(_PAGE_DEFAULT | PTE_USER | PTE_RDONLY | PTE_NG | PTE_PXN | PTE_WRITE)
#define PAGE_READONLY      __pgprot(_PAGE_DEFAULT | PTE_USER | PTE_RDONLY | PTE_NG | PTE_PXN | PTE_UXN)
#define PAGE_READONLY_EXEC __pgprot(_PAGE_DEFAULT | PTE_USER | PTE_RDONLY | PTE_NG | PTE_PXN)

#define __P000 PAGE_NONE
#define __P001 PAGE_READONLY
#define __P010 PAGE_READONLY
#define __P011 PAGE_READONLY
#define __P100 PAGE_READONLY_EXEC
#define __P101 PAGE_READONLY_EXEC
#define __P110 PAGE_READONLY_EXEC
#define __P111 PAGE_READONLY_EXEC

#define __S000 PAGE_NONE
#define __S001 PAGE_READONLY
#define __S010 PAGE_SHARED
#define __S011 PAGE_SHARED
#define __S100 PAGE_READONLY_EXEC
#define __S101 PAGE_READONLY_EXEC
#define __S110 PAGE_SHARED_EXEC
#define __S111 PAGE_SHARED_EXEC
```

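Private (P) mappings only ever get read-only PTEs (PTE_RDONLY) with no write permission. Only writable shared (S) mappings carry both PTE_RDONLY and PTE_WRITE. For a MAP_PRIVATE mapping with VM_READ|VM_WRITE, the index is 0b011, which selects __P011 (PAGE_READONLY), so the PTE gets PTE_RDONLY. The PTE stays PTE_RDONLY until a write occurs. When an anonymous page is first read (mapping the zero page) and then written, a second page fault allocates a normal page; this second fault is a copy-on-write (COW) fault.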
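The indexing can be checked with the small user-space model below. The flag values are those the kernel uses (VM_READ 0x1, VM_WRITE 0x2, VM_EXEC 0x4, VM_SHARED 0x8). The table copies the arm64 __P/__S mapping listed above as strings. `prot_name` and `prot_is` are hypothetical helpers, not kernel functions.

```c
#include <string.h>

/* vm_flags bits as defined in include/linux/mm.h */
#define VM_READ   0x1UL
#define VM_WRITE  0x2UL
#define VM_EXEC   0x4UL
#define VM_SHARED 0x8UL

/* Same order as protection_map[]: __P000..__P111, then __S000..__S111.
 * Index bits, from high to low: SHARED, EXEC, WRITE, READ. */
static const char *const protection_names[16] = {
    "PAGE_NONE", "PAGE_READONLY", "PAGE_READONLY", "PAGE_READONLY",
    "PAGE_READONLY_EXEC", "PAGE_READONLY_EXEC", "PAGE_READONLY_EXEC", "PAGE_READONLY_EXEC",
    "PAGE_NONE", "PAGE_READONLY", "PAGE_SHARED", "PAGE_SHARED",
    "PAGE_READONLY_EXEC", "PAGE_READONLY_EXEC", "PAGE_SHARED_EXEC", "PAGE_SHARED_EXEC",
};

/* Applies the same mask vm_get_page_prot() uses to pick a protection_map slot. */
const char *prot_name(unsigned long vm_flags)
{
    return protection_names[vm_flags & (VM_READ | VM_WRITE | VM_EXEC | VM_SHARED)];
}

/* Returns 1 if vm_flags selects the protection named `name`. */
int prot_is(unsigned long vm_flags, const char *name)
{
    return strcmp(prot_name(vm_flags), name) == 0;
}
```

For example, `prot_name(VM_READ | VM_WRITE)` lands on index 3 (`__P011`), which is `PAGE_READONLY`. This is why a private writable mapping starts out with PTE_RDONLY.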

Anonymous mapping, MAP_PRIVATE

In the MMU case: VM regions backed by arbitrary pages; copy-on-write across fork.

First anonymous page fault caused by a write:

```c
/* Allocate our own private page. */
/* Prepare the anon_vma for this anonymous mapping; if that fails, go to oom (out of memory) */
if (unlikely(anon_vma_prepare(vma)))
    goto oom;
/* Allocate a new zero-filled user page, from highmem if the system has it,
 * as a movable (__GFP_MOVABLE) page; if allocation fails, go to oom */
page = alloc_zeroed_user_highpage_movable(vma, vmf->address);
if (!page)
    goto oom;
...
/* Build the PTE value for the page from the vma's page protection (vm_page_prot) */
entry = mk_pte(page, vma->vm_page_prot);
/*
 * If the vma is writable, mark the PTE dirty and writable; on arm64 this
 * sets the write bit and clears the read-only bit:
 * pte = set_pte_bit(pte, __pgprot(PTE_WRITE));
 * pte = clear_pte_bit(pte, __pgprot(PTE_RDONLY));
 */
if (vma->vm_flags & VM_WRITE)
    entry = pte_mkwrite(pte_mkdirty(entry));
...
/* Increment the MM_ANONPAGES counter */
inc_mm_counter_fast(vma->vm_mm, MM_ANONPAGES);
/* Add the page to the anonymous reverse mapping (rmap) */
page_add_new_anon_rmap(page, vma, vmf->address, false);
/* Commit the page's charge to the memory cgroup */
mem_cgroup_commit_charge(page, memcg, false, false);
/* Put the new page on the active (or unevictable) LRU via the per-CPU lru_add_pvec */
lru_cache_add_active_or_unevictable(page, vma);
```

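When the first fault is caused by a write rather than a read, alloc_zeroed_user_highpage_movable immediately allocates the full physical page the fault needs. This is visible as a change in the anon counters of cat /proc/meminfo, and the code shows the new page going straight onto the active anon list. The page comes from highmem where available, is movable, and is zero-filled: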
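The do_anonymous_page branches covered so far can be condensed into a small decision function. This is an illustrative model only, not kernel code. The enum and function names are hypothetical, and the model ignores the userfaultfd, OOM and memcg paths.

```c
#include <stdbool.h>

/* Hypothetical outcomes of do_anonymous_page(), following the code above. */
enum anon_fault_outcome {
    ANON_SIGBUS,      /* vma has VM_SHARED: VM_FAULT_SIGBUS */
    ANON_ZERO_PAGE,   /* read fault: map the shared zero page, read-only */
    ANON_NEW_PAGE,    /* write fault (or zero page forbidden): allocate a zeroed page */
};

enum anon_fault_outcome classify_anon_fault(bool vma_shared, bool fault_write,
                                            bool mm_forbids_zeropage)
{
    if (vma_shared)
        return ANON_SIGBUS;
    if (!fault_write && !mm_forbids_zeropage)
        return ANON_ZERO_PAGE;
    /* The new page's PTE is made dirty and writable if the vma has VM_WRITE. */
    return ANON_NEW_PAGE;
}
```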

```c
#ifndef __HAVE_ARCH_ALLOC_ZEROED_USER_HIGHPAGE
static inline struct page *
__alloc_zeroed_user_highpage(gfp_t movableflags,
                             struct vm_area_struct *vma,
                             unsigned long vaddr)
{
    struct page *page = alloc_page_vma(GFP_HIGHUSER | movableflags,
                                       vma, vaddr);

    if (page)
        clear_user_highpage(page, vaddr);

    return page;
}
#endif

/**
 * alloc_zeroed_user_highpage_movable - Allocate a zeroed HIGHMEM page for a VMA that the caller knows can move
 * @vma: The VMA the page is to be allocated for
 * @vaddr: The virtual address the page will be inserted into
 *
 * This function will allocate a page for a VMA that the caller knows will
 * be able to migrate in the future using move_pages() or reclaimed
 */
static inline struct page *
alloc_zeroed_user_highpage_movable(struct vm_area_struct *vma,
                                   unsigned long vaddr)
{
    return __alloc_zeroed_user_highpage(__GFP_MOVABLE, vma, vaddr);
}
```

Summary:

III. Verification demos

MAP_PRIVATE DEMO

```c
#include <stdio.h>
#include <string.h>
#include <stdlib.h>
#include <sys/mman.h>

#define MEM_MMAP_SIZE (100 * 1024 * 1024)

int main(int argc, char **argv)
{
    char *addr = NULL;

    addr = mmap(0, MEM_MMAP_SIZE, PROT_READ | PROT_WRITE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (addr == MAP_FAILED) {   /* mmap signals failure with MAP_FAILED, not NULL */
        printf("jinsheng -> mmap failure\n");
        return -1;
    }

    /* Mapped but not yet written: only virtual addresses exist */
    system("cat /proc/meminfo | grep anon");
    getchar();

    /* First write to each page goes through do_anonymous_page */
    memset(addr, 0x5A, MEM_MMAP_SIZE);
    system("cat /proc/meminfo | grep anon");
    getchar();

    munmap(addr, MEM_MMAP_SIZE);
    system("cat /proc/meminfo | grep anon");

    return 0;
}
```

```
/root # ./mmap_private
Active(anon):        524 kB    // mapped, not yet written
Inactive(anon):        0 kB
Active(anon):     102956 kB    // 102956 - 524 = 102432 kB, about 100M: the active anon list grew by 100M
Inactive(anon):        0 kB
Active(anon):        692 kB    // memory released
Inactive(anon):        0 kB
```

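The output shows that MAP_PRIVATE|MAP_ANONYMOUS behaves exactly as analyzed above. Writing to the anonymous mapping allocates physical pages directly, and those pages are managed on the active anon LRU list. The anonymous page faults used 100M, and the active anon list grew by 100M.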
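The demos shell out to `cat /proc/meminfo | grep anon`. A hedged alternative is to read the counters directly in the program. `meminfo_kb` below is a hypothetical helper that assumes the usual `Key:   value kB` line format of /proc/meminfo.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: return the value (in kB) of a /proc/meminfo field such
 * as "Active(anon)" or "Inactive(anon)", or -1 if it cannot be found. */
long meminfo_kb(const char *key)
{
    FILE *f = fopen("/proc/meminfo", "r");
    if (!f)
        return -1;

    char line[256];
    size_t klen = strlen(key);
    long value = -1;
    while (fgets(line, sizeof(line), f)) {
        /* Require "key:" exactly, so "Active" does not match "Active(anon)". */
        if (strncmp(line, key, klen) == 0 && line[klen] == ':') {
            if (sscanf(line + klen + 1, "%ld", &value) != 1)
                value = -1;
            break;
        }
    }
    fclose(f);
    return value;
}
```

For example, calling `meminfo_kb("Active(anon)")` before and after the `memset` gives the delta directly. The counters are system-wide, so other processes still add some noise.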

MAP_SHARED DEMO

```c
#include <stdio.h>
#include <string.h>
#include <stdlib.h>
#include <sys/mman.h>

#define MEM_MMAP_SIZE (100 * 1024 * 1024)

int main(int argc, char **argv)
{
    char *addr = NULL;

    addr = mmap(0, MEM_MMAP_SIZE, PROT_READ | PROT_WRITE, MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (addr == MAP_FAILED) {   /* mmap signals failure with MAP_FAILED, not NULL */
        printf("jinsheng -> mmap failure\n");
        return -1;
    }

    system("cat /proc/meminfo | grep anon");
    system("free -m");
    getchar();

    /* Write the whole shared anonymous region */
    memset(addr, 0x5A, MEM_MMAP_SIZE);
    system("cat /proc/meminfo | grep anon");
    system("free -m");
    getchar();

    munmap(addr, MEM_MMAP_SIZE);
    system("cat /proc/meminfo | grep anon");
    system("free -m");

    return 0;
}
```

```
/root # ./mmap_share
Active(anon):        528 kB
Inactive(anon):        0 kB
              total        used        free      shared  buff/cache   available
Mem:           3379          82        3224           0          72        3261
Swap:             0           0           0
Active(anon):        656 kB
Inactive(anon):   102400 kB    // the inactive anon list grew by 100M, and "shared" grew by 100M
              total        used        free      shared  buff/cache   available
Mem:           3379          83        3122         100         172        3159
Swap:             0           0           0
Active(anon):        556 kB
Inactive(anon):        0 kB
              total        used        free      shared  buff/cache   available
Mem:           3379          84        3221           0          72        3258
Swap:             0           0           0
```

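From this output you may notice that the inactive anon list also grows by 100M. You might therefore conclude that MAP_SHARED also goes through do_anonymous_page as an anonymous mapping. The mechanism behind it is different, however, and the do_mmap source shows how. With MAP_SHARED|MAP_ANONYMOUS the shared pages are counted on the anon lists, but it must be stressed that this is not an anonymous mapping.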

```c
unsigned long do_mmap(struct file *file, unsigned long addr,
                      unsigned long len, unsigned long prot,
                      unsigned long flags, vm_flags_t vm_flags,
                      unsigned long pgoff, unsigned long *populate,
                      struct list_head *uf)
{
    ...
    if (file) {
        ...
    } else {
        /* Anonymous memory mapping, not a file mapping */
        switch (flags & MAP_TYPE) {
        case MAP_SHARED:
            /* Shared anonymous mapping: set pgoff = 0 and add VM_SHARED and
             * VM_MAYSHARE; return -EINVAL if VM_GROWSDOWN or VM_GROWSUP is requested */
            if (vm_flags & (VM_GROWSDOWN|VM_GROWSUP))
                return -EINVAL;
            /*
             * Ignore pgoff.
             */
            pgoff = 0;
            vm_flags |= VM_SHARED | VM_MAYSHARE;
            break;
        ...
        }
    }
    ...
    addr = mmap_region(file, addr, len, vm_flags, pgoff, uf);
    ...
}
```

```c
unsigned long mmap_region(struct file *file, unsigned long addr,
                          unsigned long len, vm_flags_t vm_flags, unsigned long pgoff,
                          struct list_head *uf)
{
    /* File mapping: set up the file-related attributes */
    if (file) {
        ...
    } else if (vm_flags & VM_SHARED) {
        /*
         * Set up the shared anonymous mapping; on failure, go to free_vma.
         * This assigns a "/dev/zero" file to vma->vm_file and the global
         * shmem_vm_ops to vma->vm_ops.
         */
        error = shmem_zero_setup(vma);
        if (error)
            goto free_vma;
    }
    ...
}
```

```c
int shmem_zero_setup(struct vm_area_struct *vma)
{
    ...
    vma->vm_ops = &shmem_vm_ops;
    ...
}

static const struct vm_operations_struct shmem_vm_ops = {
    .fault     = shmem_fault,
    .map_pages = filemap_map_pages,
};
```

```
shmem_fault -> shmem_getpage_gfp
    |-> lru_cache_add_anon(page);  // the shared page is put on the inactive anon list and managed as an anon page
```

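So, to be clear: with mmap MAP_SHARED|MAP_ANONYMOUS, the shared pages are counted on the anon lists, but this is not an anonymous mapping. The mapping is shmem-backed, and it faults through shmem_fault rather than do_anonymous_page.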
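The practical difference between the two flag combinations shows up across fork(). The sketch below assumes Linux with glibc, and `child_write_visible` is a hypothetical helper name. It lets a child write into the mapping and reports whether the parent sees that write. With MAP_SHARED the shmem-backed page is shared. With MAP_PRIVATE the child's write lands in its own copy-on-write page.

```c
#define _DEFAULT_SOURCE
#include <stddef.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper. map_type is MAP_SHARED or MAP_PRIVATE.
 * Returns 1 if the parent sees the child's write, 0 if not, -1 on error. */
int child_write_visible(int map_type)
{
    size_t len = (size_t)sysconf(_SC_PAGESIZE);
    char *p = mmap(NULL, len, PROT_READ | PROT_WRITE,
                   map_type | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;

    pid_t pid = fork();
    if (pid < 0) {
        munmap(p, len);
        return -1;
    }
    if (pid == 0) {              /* child: write, then exit without flushing stdio */
        p[0] = 0x5A;
        _exit(0);
    }

    int status;
    if (waitpid(pid, &status, 0) < 0) {
        munmap(p, len);
        return -1;
    }
    int visible = (p[0] == 0x5A);   /* the parent never wrote, so this is 0 unless shared */
    munmap(p, len);
    return visible;
}
```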

Read then Write

```c
#include <stdio.h>
#include <string.h>
#include <stdlib.h>
#include <sys/mman.h>

#define MEM_MMAP_SIZE (100 * 1024 * 1024)

int main(int argc, char **argv)
{
    char *addr = NULL;
    int i = 0;

    addr = mmap(0, MEM_MMAP_SIZE, PROT_READ | PROT_WRITE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (addr == MAP_FAILED) {   /* mmap signals failure with MAP_FAILED, not NULL */
        printf("jinsheng -> mmap failure\n");
        return -1;
    }

    printf("get init memory stat\n");
    system("cat /proc/meminfo | grep anon");
    system("free -m");
    getchar();

    /* Read one byte every 1 MiB: these read faults map the zero page */
    printf("read!!!!!!\n");
    for (i = 0; i < 100; i++) {
        printf("%d ", addr[1024 * 1024 * i]);
    }
    printf("\n");
    printf("after read!!!!!!\n");
    system("cat /proc/meminfo | grep anon");
    system("free -m");
    getchar();

    /* Write the whole region: every page now gets a real physical page */
    printf("write!!!!!!\n");
    memset(addr, 0x5A, MEM_MMAP_SIZE);
    printf("after write !!!!\n");
    system("cat /proc/meminfo | grep anon");
    system("free -m");
    getchar();

    munmap(addr, MEM_MMAP_SIZE);
    printf("\n");
    system("cat /proc/meminfo | grep anon");
    system("free -m");

    return 0;
}
```

```
get init memory stat
Active(anon):     156132 kB
Inactive(anon):   193416 kB
              total        used        free      shared  buff/cache   available
Mem:           3876         821        2373           0         681        2775
Swap:          2047        1338         709
read!!!!!!
0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0
after read!!!!!!
Active(anon):     156124 kB
Inactive(anon):   198596 kB
              total        used        free      shared  buff/cache   available
Mem:           3876         821        2372           0         682        2775
Swap:          2047        1338         709
write!!!!!!
after write !!!!
Active(anon):     156128 kB
Inactive(anon):   301736 kB
              total        used        free      shared  buff/cache   available
Mem:           3876         917        2276           0         682        2679
Swap:          2047        1338         709

Active(anon):     156128 kB
Inactive(anon):   195556 kB
              total        used        free      shared  buff/cache   available
Mem:           3876         823        2368           0         685        2773
Swap:          2047        1338         709
```

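The demo shows that after the reads, used memory is the same as in the initial state (821M), and every value read is 0. The reads hit the zero page set up at boot, so no physical memory was allocated. After the writes, used grows by 96M (the exact figure varies with system state). Only the writes actually allocate physical pages and complete the mapping between the vma and physical memory.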
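The same read-then-write effect can also be measured per process instead of system-wide. The sketch below rests on several assumptions:

* It reads the resident page count from the second field of /proc/self/statm.
* It asks for 4 KiB pages with `madvise(MADV_NOHUGEPAGE)` so that transparent huge pages do not change the picture.
* `rss_pages` and `measure_read_then_write` are hypothetical names.

The kernel may update RSS counters lazily, so the numbers are only approximate.

```c
#define _DEFAULT_SOURCE
#include <stdio.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical helper: resident pages of this process (2nd field of /proc/self/statm). */
long rss_pages(void)
{
    long size, resident;
    FILE *f = fopen("/proc/self/statm", "r");
    if (!f)
        return -1;
    int n = fscanf(f, "%ld %ld", &size, &resident);
    fclose(f);
    return n == 2 ? resident : -1;
}

/* Hypothetical helper: map `pages` anonymous pages, read and then write one byte
 * per page, and report how many resident pages each phase added. 0 on success. */
int measure_read_then_write(long pages, long *read_delta, long *write_delta)
{
    long psz = sysconf(_SC_PAGESIZE);
    size_t len = (size_t)(pages * psz);
    volatile unsigned char *p = mmap(NULL, len, PROT_READ | PROT_WRITE,
                                     MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;
    madvise((void *)p, len, MADV_NOHUGEPAGE);   /* best effort; failure is ignored */

    long before = rss_pages();
    unsigned sum = 0;
    for (long i = 0; i < pages; i++)
        sum += p[i * psz];                 /* read faults: expected to map the zero page */
    long after_read = rss_pages();
    for (long i = 0; i < pages; i++)
        p[i * psz] = 0x5A;                 /* write faults: each page gets its own frame */
    long after_write = rss_pages();

    munmap((void *)p, len);
    if (sum != 0 || before < 0 || after_read < 0 || after_write < 0)
        return -1;                         /* a fresh anonymous mapping must read as 0 */
    *read_delta = after_read - before;
    *write_delta = after_write - after_read;
    return 0;
}
```

On a system that behaves as described above, the read phase should add close to zero resident pages, and the write phase should add roughly one page per page written.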

IV. Summary

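The anonymous page fault is one of the most common kinds of page fault. After mmap returns, a process usually holds only a range of virtual addresses. If that range is only read, the kernel maps the zero page, so the program reads zeros and no memory is wasted on needless allocation. Only on a write does the kernel actually allocate a new page and map it to the virtual address. For read-then-write access, the read first maps the zero page, and the later write triggers another page fault that allocates the physical page. That is the overall flow of an anonymous page fault. The next article focuses on Linux file-backed page faults.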
