Note:

Anchored Neighborhood Regression for Fast Example-Based Super-Resolution

1. Abstract

a) Propose fast super-resolution methods while making no compromise on quality

i. Support the use of sparse learned dictionaries in combination with neighbor embedding methods.

1). Nearest neighbors are found by correlation with the dictionary atoms instead of by Euclidean distance

ii. Use global collaborative coding

iii. Propose the anchored neighborhood regression

1). Anchor the neighborhood embedding to the nearest dictionary atom

2). Precompute the corresponding embedding matrix

2. Introduction

a) Definition of super-resolution

b) Three subclasses

i. Interpolation methods

ii. Multi-frame methods

iii. Learning-based methods

1). Gradient Profile Prior

2). Dictionary- or example- learning methods

a) Subdivided into patches

b) Form a Markov Random Field (MRF)

c) Search for nearest neighbors

d) HR is retrieved

e) MRF can be solved

3). Downside

a) High computational complexity

b) Overcome:

i. Neighbor embedding

ii. Sparse encoding approaches

4). Proposed example-based super-resolution

a). Low computational time

b). Qualitative performance

c) Organization

i. Section 2: neighbor embedding & sparse coding

ii. Section 3: proposed methods

iii. Section 4: experimental results

iv. Section 5: conclusions

3. Dictionary-based Super-Resolution

a) Neighbor embedding approaches

i. Low-dimensional nonlinear manifolds

ii. Locally linear embedding (LLE)

1. Search for a set of K nearest neighbors

2. Compute K appropriate weights

3. Create HR patches

4. Create result HR image

iii. Nonnegative neighbor embedding approaches
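
The first three LLE steps listed above can be sketched for a single patch as follows. This is a minimal illustration, not the paper's code: the function name `lle_upscale_patch`, the array layout, and the small ridge term `reg` are assumptions made for the example. Step 4 (averaging overlapping HR patches into the output image) is omitted.

```python
import numpy as np

def lle_upscale_patch(y, lr_feats, hr_patches, k=5, reg=1e-6):
    """Estimate one HR patch from an LR feature vector y (hypothetical helper).

    lr_feats:   (n, d_l) LR training features, one per row
    hr_patches: (n, d_h) corresponding HR training patches
    """
    # Step 1: K nearest neighbors of y in LR feature space (Euclidean distance).
    dists = np.linalg.norm(lr_feats - y, axis=1)
    idx = np.argsort(dists)[:k]

    # Step 2: weights minimizing ||y - sum_i w_i * n_i||^2 subject to sum(w) = 1.
    diff = lr_feats[idx] - y
    gram = diff @ diff.T + reg * np.eye(len(idx))  # ridge term keeps the solve stable
    w = np.linalg.solve(gram, np.ones(len(idx)))
    w /= w.sum()

    # Step 3: apply the same weights to the HR counterparts of the neighbors.
    return w @ hr_patches[idx]
```

The key assumption of neighbor embedding is visible in step 3: the weights are computed in LR space only and are reused unchanged in HR space.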

b) Sparse coding approaches

i. Effects: a learned compact dictionary

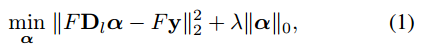

ii.

iii. Sparse dictionaries:

iv. Several modifications:

1). Different training approaches

2). Pseudoinverse

3). PCA

4). Orthogonal matching pursuit
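
To make the orthogonal matching pursuit item concrete, here is a minimal greedy OMP sketch. It assumes a dictionary `D` with unit-norm columns; the function name `omp` and the stopping tolerance are illustrative choices, not taken from the paper. In sparse-coding super-resolution the LR patch is coded over the LR dictionary and the same coefficients are then applied to the HR dictionary.

```python
import numpy as np

def omp(D, y, n_nonzero, tol=1e-10):
    """Greedy sparse code of y over dictionary D (columns are unit-norm atoms)."""
    residual = y.astype(float).copy()
    support = []
    coef = np.zeros(0)
    for _ in range(n_nonzero):
        if np.linalg.norm(residual) < tol:
            break  # y is already represented exactly
        # Pick the atom most correlated with the current residual.
        j = int(np.argmax(np.abs(D.T @ residual)))
        if j in support:
            break
        support.append(j)
        # Re-fit all selected coefficients jointly (the "orthogonal" step).
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
    alpha = np.zeros(D.shape[1])
    alpha[support] = coef
    return alpha
```

Given a coupled pair of dictionaries (here hypothetically named `D_l` and `D_h`), an HR patch estimate would be `D_h @ omp(D_l, y, n_nonzero)`.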

4. Proposed Methods

a) Global regression: special case of ANR

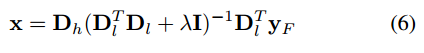

i.

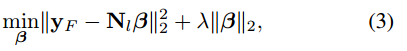

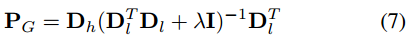

ii.

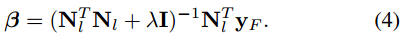

iii.

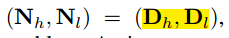

iv.

v.

vi.
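
As a rough sketch of global regression: replacing the sparsity penalty with an l2 (ridge) penalty over the whole dictionary gives a closed-form solution, so the LR-to-HR mapping becomes one matrix that can be computed offline. The names `D_l`, `D_h`, and `lam` and the column-wise atom layout are assumptions for this example.

```python
import numpy as np

def global_regression_matrix(D_l, D_h, lam=0.1):
    """Projection matrix P such that x_hr ~= P @ y_lr.

    D_l: (d_l, m) LR dictionary, atoms as columns
    D_h: (d_h, m) HR dictionary, same atom order
    Computes P = D_h (D_l^T D_l + lam * I)^{-1} D_l^T.
    """
    m = D_l.shape[1]
    return D_h @ np.linalg.solve(D_l.T @ D_l + lam * np.eye(m), D_l.T)
```

At test time each patch then costs a single matrix-vector product, `P @ y`, which is where the speed advantage comes from.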

b) Anchored neighborhood regression
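
A minimal sketch of the ANR idea from the abstract: anchor a neighborhood to each dictionary atom, precompute one ridge-regression matrix per atom, and at test time apply the matrix of the atom nearest to the input. Using absolute correlation to rank atoms, and the names `precompute_anr`, `anr_upscale_patch`, `D_l`, `D_h`, `k`, and `lam`, are assumptions for this illustration.

```python
import numpy as np

def precompute_anr(D_l, D_h, k=3, lam=0.1):
    """One projection matrix per atom. D_l: (d_l, m) unit-norm columns, D_h: (d_h, m)."""
    corr = np.abs(D_l.T @ D_l)  # atom-to-atom correlation
    projections = []
    for j in range(D_l.shape[1]):
        nbrs = np.argsort(-corr[j])[:k]          # k atoms most correlated with atom j
        N_l, N_h = D_l[:, nbrs], D_h[:, nbrs]
        P = N_h @ np.linalg.solve(N_l.T @ N_l + lam * np.eye(len(nbrs)), N_l.T)
        projections.append(P)
    return np.stack(projections)                 # shape (m, d_h, d_l)

def anr_upscale_patch(y, D_l, projections):
    """Pick the atom most correlated with y and apply its stored projection."""
    j = int(np.argmax(np.abs(D_l.T @ y)))
    return projections[j] @ y
```

Setting `k` to the dictionary size makes every neighborhood the whole dictionary, so all stored matrices coincide; this is the sense in which global regression is a special case of ANR.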

5. Experiments

a) Conditions

i. Features

1). Luminance component

2). Basic feature: the patch

3). First- and second-order derivatives
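
A sketch of how derivative features could be computed on the luminance channel. The specific kernels below (a central-difference gradient and a spacing-2 second difference) are an assumption based on filters commonly used in this line of work; the notes do not state them. `filter_along` and `derivative_features` are hypothetical helper names.

```python
import numpy as np

GRAD = np.array([1.0, 0.0, -1.0])                  # first-order kernel (assumed)
LAPL = np.array([1.0, 0.0, -2.0, 0.0, 1.0]) / 2.0  # second-order kernel (assumed)

def filter_along(img, kernel, axis):
    """1-D convolution of every row (axis=1) or column (axis=0) of a 2-D image."""
    return np.apply_along_axis(
        lambda v: np.convolve(v, kernel, mode="same"), axis, img
    )

def derivative_features(lum):
    """Stack horizontal/vertical first- and second-order responses: (4, H, W)."""
    return np.stack([
        filter_along(lum, GRAD, 1),  # horizontal gradient: v[i+1] - v[i-1]
        filter_along(lum, GRAD, 0),  # vertical gradient
        filter_along(lum, LAPL, 1),  # horizontal second derivative
        filter_along(lum, LAPL, 0),  # vertical second derivative
    ])
```

Patches cut from the four response maps at the same location would then be concatenated into one feature vector per LR patch. Values near the image border are affected by the implicit zero padding of `np.convolve`.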

ii. Embeddings

iii. Dictionaries

1). The larger the dictionary the better the performance

2). “Internal” dictionary, “external” dictionary

3). Randomly sampled dictionaries,learned dictionaries

iv. Neighborhoods

b) Performance

i. Quality

ii. Running times

6. Conclusions

a) Propose a new example-based method for super-resolution called Anchored Neighborhood Regression

b) Propose an extreme variant called Global Regression

c) Most of the compared methods can reach similar top performance given an appropriate neighborhood size and dictionary
