论文:Square Attack: a query-efficient black-box adversarial attack via random search

代码:https://github.com/max-andr/square-attack

1 基于分数的黑盒攻击

基于梯度的白盒攻击容易受到gradient obfuscation或masking[1][2]所影响,而黑盒攻击和PGD形式上不太一样,但是相比于白盒攻击来说要很多查询次数,而且一般性能差一些,基于score-based的黑盒攻击不访问梯度信息,而是在访问分类模型softmax之前最后一层的得分矩阵,对抗损失也是通过访问这个得分矩阵来得到对抗效果

1.2 基本思想

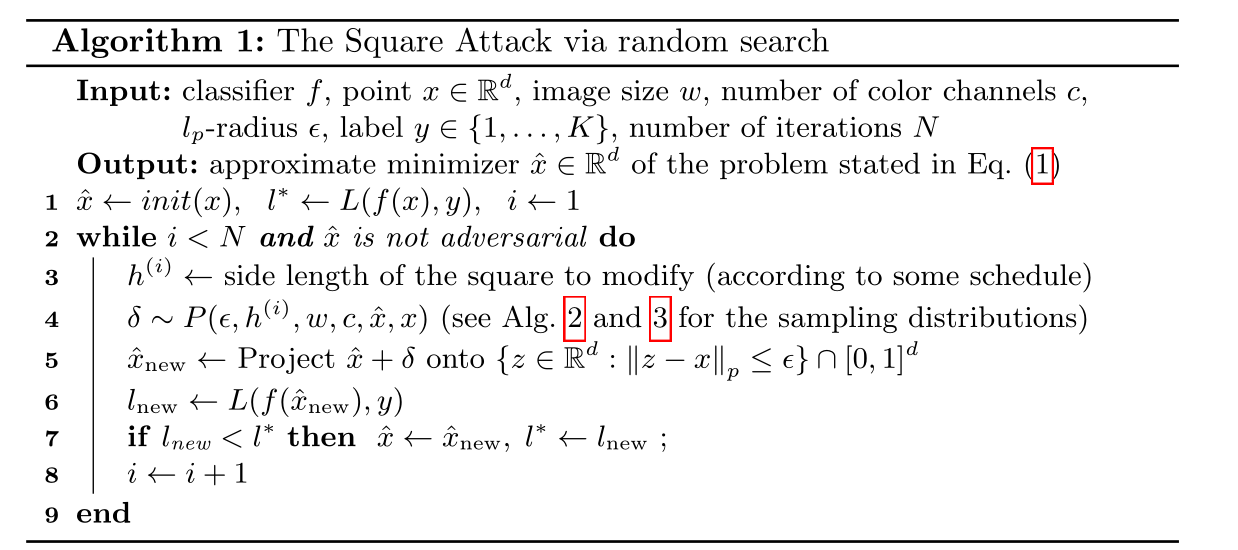

Square Attack是基于随机搜索的,其核心思想就是每次迭代ramdom一个噪声, 加到对抗样本再传入到对抗损失中看对抗效果是否提升,如果提升则使用这一次添加的噪声,如果没有提升则丢弃这一次添加的噪声.

3.h是挑选要添加噪声的窗口, 4.P根据这个窗口随机采样噪声 5.将噪声添加到对抗样本中形成xnew,6.把xnew放到对抗损失中,如果对抗损失值下降了(对抗效果变好)则保留本轮添加的噪声,如果对抗损失值没有下降则丢弃本次添加的噪声

1.3 正方形的随机噪声采样

基于笔记: Square Attack - 知乎 (zhihu.com)

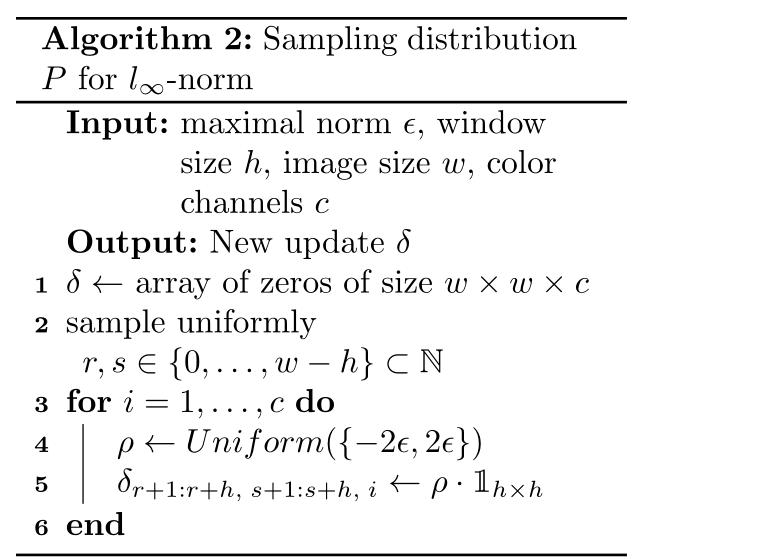

1.4 L∞攻击

1.5 Margin Loss

无目标

L(f(x),y)=fy(x) - max(k≠y)fk(x)

其中y是正确标签,k是错误标签,fk(x)是图像x经过模型后得到矩阵的k维度值,fy(x)是图像x经过模型后得到矩阵的y维度值,损失最后是使得他们之间的差距越小越好代表对抗效果越好

有目标

![]()

2. pytorch代码实现

2.1 main函数

def load():

image_path = "./干净样本.jpg"

image = Image.open(image_path)

# 定义图像预处理的变换

preprocess = transforms.Compose([

transforms.Resize((224, 224)), # 调整图像大小为 224x224

transforms.ToTensor(), # 将图像转换为 PyTorch Tensor,

#像素值自动从0-255转到0-1

])

# 对图像进行预处理

x = preprocess(image)

# 在第0维添加一个维度,使其成为形状为 [1, 3, 224, 224] 的 Tensor

x = x.unsqueeze(0)

# y = torch.tensor(917)

y =np.array([917])

return x,y

x_test,y_test=load()

y_target_onehot = utils.dense_to_onehot(y_target, n_cls=n_cls)

n_queries, x_adv = square_attack(model, x_test, y_target_onehot, corr_classified, args.eps, args.n_iter,

args.p, metrics_path, args.targeted, args.loss)

# 假设 x 是经过预处理后的 PyTorch Tensor

#byte() 方法被用于将张量的数据类型转换为8位整数类型,再从0-1转0-255

print("攻击后图像类别是:{}".format(model.predict(x_test).argmax(1)))

x_adv = (x_adv.squeeze(0) * 255).byte()

# 将 PyTorch Tensor 转换为 NumPy 数组

x_adv = x_adv.numpy()

#CHW->HWC

x_adv=np.transpose(x_adv, (1, 2, 0))

#保存

Image.fromarray(x_adv).save("restored_image.jpg")

print("查询次数为:{}".format(n_queries))2.2 square_attack

ef square_attack_linf(model, x, y, corr_classified, eps, n_iters, p_init, metrics_path, targeted, loss_type):

""" The Linf square attack """

np.random.seed(0) # important to leave it here as well

min_val, max_val = 0, 1 if x.max() <= 1 else 255 #min_val=0 max_val=1

c, h, w = x.shape[1:] #c=3 h=224 w=224

n_features = c*h*w #n_feature=150528

n_ex_total = x.shape[0] #n_ex_total=batch_size=1

x, y = x[corr_classified], y[corr_classified] #仅取被resNet50模型正确分类的样本

# [c, 1, w], i.e. vertical stripes work best for untargeted attacks

init_delta = np.random.choice([-eps, eps], size=[x.shape[0], c, 1, w]) #随机初始化噪声范围[-0.0001,+0.0001]

# x_best=[1,3,224,224],依然是初始样本,只是像素值裁剪到[0,1],x_best同时也是最终生成的对抗样本

x_best = np.clip(x + init_delta, min_val, max_val)

logits = model.predict(x_best) #获取模型经过最后一层softmax之间的输出,logits=[1,1000]

loss_min = model.loss(y, logits, targeted, loss_type=loss_type) #模型为ModelPT,默认损失函数为margin_loss loss_min=[2.96]

margin_min = model.loss(y, logits, targeted, loss_type='margin_loss') #margin_min=2.96

#n_queries的内容为: min=1.0 max=1.0 shape=(1,)

n_queries = np.ones(x.shape[0]) # ones because we have already used 1 query

time_start = time.time()

metrics = np.zeros([n_iters, 7]) #n_iter=10000 metrics=[10000,7]

for i_iter in range(n_iters - 1):

idx_to_fool = margin_min > 0 #margin_min=2.96>0 idx_to_fool=true

x_curr, x_best_curr, y_curr = x[idx_to_fool], x_best[idx_to_fool], y[idx_to_fool]

loss_min_curr, margin_min_curr = loss_min[idx_to_fool], margin_min[idx_to_fool] #loss_min_curr=[2.9] margin_min_curr=2.9

deltas = x_best_curr - x_curr #添加的噪声deltas=[1,3,224,224]

p = p_selection(p_init, i_iter, n_iters) #p=0.05

for i_img in range(x_best_curr.shape[0]): #由于batch_size=1,仅取出一个张图片i_img

s = int(round(np.sqrt(p * n_features / c))) #s=50

s = min(max(s, 1), h-1) # at least c x 1 x 1 window is taken and at most c x h-1 x h-1,s=50

center_h = np.random.randint(0, h - s) #center_h=107

center_w = np.random.randint(0, w - s) #center_w=158

#选择要添加扰动噪声的窗口 中心为x_curr_window,x_best_curr_window,长宽各为50

x_curr_window = x_curr[i_img, :, center_h:center_h+s, center_w:center_w+s] #x_curr_window=[3,50,50]

x_best_curr_window = x_best_curr[i_img, :, center_h:center_h+s, center_w:center_w+s] #x_best_curr_window=[3,50,50]

# prevent trying out a delta if it doesn't change x_curr (e.g. an overlapping patch)

while torch.sum(np.abs(np.clip(x_curr_window + deltas[i_img, :, center_h:center_h+s, center_w:center_w+s], min_val, max_val) - x_best_curr_window) < 10**-7) == c*s*s:

#往窗口里随机添加噪声

deltas[i_img, :, center_h:center_h+s, center_w:center_w+s] = torch.from_numpy(np.random.choice([-eps, eps], size=[c, 1, 1]))

#新添加完噪声的图片

x_new = np.clip(x_curr + deltas, min_val, max_val)

logits = model.predict(x_new) #重新在模型中获取softmax层之间的得分矩阵logits=[1,1000]

loss = model.loss(y_curr, logits, targeted, loss_type=loss_type) #传入magin loss中求得loss=2.96265

margin = model.loss(y_curr, logits, targeted, loss_type='margin_loss')

idx_improved = loss < loss_min_curr #idx_improved =false,本次迭代没有降低margin loss

loss_min[idx_to_fool] = idx_improved * loss + ~idx_improved * loss_min_curr #loss_min=[2.96165]

margin_min[idx_to_fool] = idx_improved * margin + ~idx_improved * margin_min_curr #margin_min=[2.96165]

idx_improved = np.reshape(idx_improved, [-1, *[1]*len(x.shape[:-1])]) #idx_improved=false

idx_improved =torch.from_numpy(idx_improved)

x_best[idx_to_fool] = idx_improved * x_new + ~idx_improved * x_best_curr

n_queries[idx_to_fool] += 1 #查询次数+1

acc = (margin_min > 0.0).sum() / n_ex_total #acc=1.0

acc_corr = (margin_min > 0.0).mean() #acc_corr=1.0 mean_nq=2.0 median_nq_ae=nan

mean_nq, mean_nq_ae, median_nq_ae = np.mean(n_queries), np.mean(n_queries[margin_min <= 0]), np.median(n_queries[margin_min <= 0])

avg_margin_min = np.mean(margin_min) #avg_margin_min=2.9616505

time_total = time.time() - time_start

print('{}: acc={:.2%} acc_corr={:.2%} avg#q_ae={:.2f} med#q={:.1f}, avg_margin={:.2f} (n_ex={}, eps={:.3f}, {:.2f}s)'.format(

i_iter + 1, acc, acc_corr, mean_nq_ae, median_nq_ae, avg_margin_min, x.shape[0], eps, time_total

))

metrics[i_iter] = [acc, acc_corr, mean_nq, mean_nq_ae, median_nq_ae, margin_min.mean(), time_total]

# if (i_iter <= 500 and i_iter % 20 == 0) or (i_iter > 100 and i_iter % 50 == 0) or i_iter + 1 == n_iters or acc == 0:

# np.save(metrics_path, metrics)

if acc == 0:

break

return n_queries, x_best2.3 margin loss

#使用margin_loss损失,logits是经过最后一层softmax之前的得分矩阵[1000],y是正确标签并已经进行了独热编码为[1000]

#y = utils.dense_to_onehot(y, n_cls=n_cls) 是否是有目标攻击targeted

def loss(self, y, logits, targeted=False, loss_type='margin_loss'):

""" Implements the margin loss (difference between the correct and 2nd best class). """

if loss_type == 'margin_loss':

preds_correct_class = (logits * y).sum(1, keepdims=True) #保持[1000],仅取正常分类标签的分数

diff = preds_correct_class - logits # difference between the correct class and all other classes

diff[y] = np.inf # to exclude zeros coming from f_correct - f_correct

margin = diff.min(1, keepdims=True) #取和目标标签差距最小的那个

loss = margin * -1 if targeted else margin

elif loss_type == 'cross_entropy':

probs = utils.softmax(logits)

loss = -np.log(probs[y])

loss = loss * -1 if not targeted else loss

else:

raise ValueError('Wrong loss.')

return loss.flatten()1]Obfuscated gradients give a false sense of security: Circumventing defenses to adversarial examples

[2]Logit pairing methods can fool gradient-based attacks

3456

3456

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?