Monte Carlo Tree Search for Board Games

With the COVID-19 pandemic still wreaking havoc around the world, many of us have been stuck at home under shelter-in-place orders. In Singapore, similar “circuit breaker” orders were issued. While I was in Singapore and my family was still in the USA, we looked for activities we could do together online. Being fans of board games, we decided to play board games online. We mostly used Board Game Arena to host and play them, especially games that our daughter could also play.

The three of us played a variety of board games, such as Sushi Go!, Color Pop, Buttons, and Battle Sheep. When my daughter was busy with other activities, my spouse and I sometimes played those games, as well as others like 7 Wonders and Reversi. Unfortunately, all of these games are competitive. We had previously played cooperative games like Pandemic and Forbidden Island at Sunny Pair’O’Dice, a local board game store, but we couldn’t find a good co-op board game to play online.

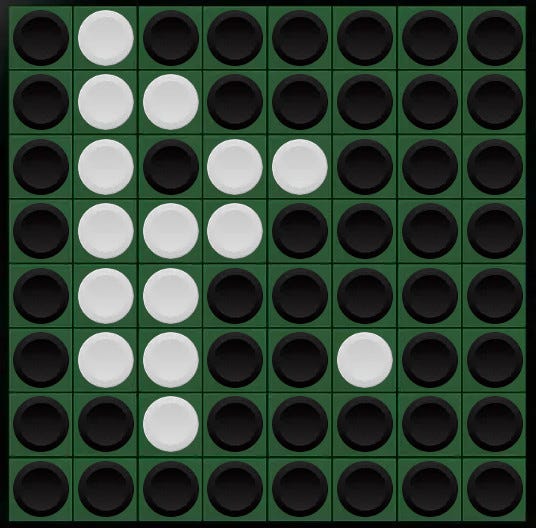

When it comes to Reversi, my spouse is undeniably better than me. If I’m lucky, I win maybe 1 game in 5. So I decided to write an algorithm to play the game for me! My reasoning was simple. It probably wouldn’t be too hard for the algorithm to beat me. If it then beat my spouse, well, I wrote the algorithm, so technically I could count that as a win against my spouse too. 😃

If you’re wondering about the difference between Reversi and Othello, you’re not alone! I wondered the same thing and came across a great article comparing the two games. In this article I will call the game Reversi, since it’s the more popular name, even though Othello might be more accurate.

Back in college at Carnegie Mellon University (CMU), one of the homework assignments was to write an algorithm to play chess using minimax and alpha-beta pruning. I had a ton of fun with that assignment, and my algorithm was soon playing better than me (I’m not great at chess either). During my Ph.D. at CMU, Mike Bowling gave a talk about how he used Monte Carlo Tree Search (MCTS) to win against top human players in Heads-up Limit Texas Hold’em. More recently, AlphaGo, which combined MCTS with deep learning, defeated professional human Go players.

So when I was deciding what kind of algorithm to write for Reversi, I chose to try my hand at Monte Carlo Tree Search. I won’t go into the details of the algorithm itself, since I learned it from the many great articles and tutorials available online. Instead, I’ll talk about some of the issues, struggles, and lessons learned on my way to winning at Reversi.
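
For readers new to MCTS, here is the selection step that most tutorials describe. It is called UCT, and it applies the UCB1 rule at every node of the tree. The article doesn't say which variant or exploration constant the author used, so treat this as the textbook form rather than the author's code. From a node visited $N$ times, the search descends into the child action

$$
a^{*} = \arg\max_{a} \left( \frac{w_a}{n_a} + c\,\sqrt{\frac{\ln N}{n_a}} \right)
$$

The symbols are:

- $w_a$ is the total reward (for example, wins) recorded through child $a$.
- $n_a$ is that child's visit count.
- $c$ is an exploration constant, often $\sqrt{2}$ when rewards lie in $[0, 1]$.

The first term favors moves that have won often. The second favors moves that have rarely been tried, and it shrinks as $n_a$ grows.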

Lesson 1: Close the loop and iterate as early as possible

I first learned this lesson from my Ph.D. advisor, Manuela Veloso, in the context of robots. The idea is to avoid writing everything at once (whether complex robot behaviors or game-playing algorithms) and then testing it all together. Instead, first “close the loop” with a smaller, simpler version. Once that version works, add more features one at a time and test each. This philosophy has a lot in common with building a minimum viable product in software engineering and business.

For MCTS and Reversi, I wrote the general MCTS algorithm (with unit tests) in C++. I then created a prototype Runner function in which I hand-coded the state of the game, i.e., where the black and white tokens were on the board.

I was hopeful that everything would work now. After all, the core algorithm was unit tested! 😇 It turned out that the algorithm failed spectacularly. It frequently gave up corners of the board, and I beat it easily. Giving up corners is costly in Reversi because a corner disc can never be flipped.

Before I dove into what was wrong with my MCTS implementation, there was a much bigger pain point: playing against my AI was extremely tedious. Initially, I manually wrote code that set the game state, e.g., `board[3][3] = std::make_optional(true);`. Then I wrote some Java code that processed an image (a grayscale screenshot) and output C++ code. However, that meant I had to (1) manually take a screenshot, (2) open it in GIMP and convert it to grayscale, (3) process it in Java, (4) copy-paste the generated C++ code into my MCTS code, (5) run the C++ code, and finally (6) make the move.
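
The hand-coded line above suggests a grid of `std::optional<bool>`, which matches the "vectors and optionals" representation mentioned in Lesson 3. Below is a minimal sketch of that kind of setup. It assumes `true` means a white disc (the article doesn't say), and `Board`, `makeEmptyBoard`, `makeStartingBoard` and `countDiscs` are hypothetical names:

```cpp
#include <cassert>
#include <optional>
#include <vector>

// Hypothetical reconstruction: an N x N grid where std::nullopt is an empty square
// and the bool is the disc color. Assumption: true = white, false = black.
using Board = std::vector<std::vector<std::optional<bool>>>;

Board makeEmptyBoard(int size) {
  assert(size > 0);
  // Every cell starts as std::nullopt (empty).
  return Board(size, std::vector<std::optional<bool>>(size));
}

// Hand-coded standard opening: two discs of each color on the diagonals of the central 2x2.
Board makeStartingBoard() {
  Board board = makeEmptyBoard(8);
  board[3][3] = std::make_optional(true);   // white
  board[4][4] = std::make_optional(true);   // white
  board[3][4] = std::make_optional(false);  // black
  board[4][3] = std::make_optional(false);  // black
  return board;
}

// Counts the discs of one color, skipping empty squares.
int countDiscs(const Board& board, bool color) {
  int n = 0;
  for (const auto& row : board)
    for (const auto& cell : row)
      if (cell.has_value() && *cell == color) ++n;
  return n;
}
```

This layout supports any board size, which is why it is easy to start with. The cost is memory: every row is its own heap allocation.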

If that process sounds painful, it was! I had chosen to write the image-processing code in Java because opening an image file is easier in Java. But after playing a game or two this way, I gave up and switched to processing the screenshot directly in C++ with libpng. I also wrote the grayscale conversion in C++, so I no longer needed GIMP. Along the way, I learned that Windows+PrintScreen takes a screenshot and saves it to a file instead of copying it to the clipboard.
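
Here is a sketch of the grayscale step and the square classification that follows it. Several assumptions apply:

- The PNG has already been decoded into RGB bytes; the libpng decoding itself is not shown.
- Each square is judged by a pixel near its center.
- The luma weights are the common ITU-R BT.601 ones. The article doesn't say which formula the author used.
- The thresholds in `classify` are hypothetical values that would need tuning to the actual board colors.

```cpp
#include <cassert>
#include <cstdint>

// Integer approximation of ITU-R BT.601 luma: Y = 0.299 R + 0.587 G + 0.114 B.
// The intermediate sum is at most 1000 * 255, so it fits comfortably in an int.
std::uint8_t toGray(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
  return static_cast<std::uint8_t>((299 * r + 587 * g + 114 * b) / 1000);
}

enum class Cell { Empty, Black, White };

// Hypothetical thresholds: a dark pixel is a black disc, a bright pixel is a white disc,
// and anything in between is the (mid-tone) board surface.
Cell classify(std::uint8_t gray, std::uint8_t blackMax = 40, std::uint8_t whiteMin = 200) {
  assert(blackMax < whiteMin);
  if (gray <= blackMax) return Cell::Black;
  if (gray >= whiteMin) return Cell::White;
  return Cell::Empty;
}
```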

After these optimizations, the process became: (1) take and save a screenshot, (2) run the C++ program (which processes the screenshot, initializes the game state, runs MCTS, and outputs the next action), then (3) make that move. This was much easier and faster, and I kept this process for the rest of my development of the Reversi MCTS algorithm.

Lesson 2: Start with an easier problem

This lesson is perhaps a corollary to Lesson 1, but I had severely underestimated how difficult Reversi is to solve. In fact, there are 10⁵⁴ possible game states, and the full game has not yet been strongly solved.

Because Reversi is inherently difficult and a game takes a long time to play, it was hard to debug why the MCTS algorithm made apparently sub-optimal decisions. So I decided to code up Tic-Tac-Toe instead and let the MCTS algorithm loose on it.

I had written my MCTS algorithm to work with any game. Each game is implemented as subclasses of generic GameState and GameAction classes, so writing Tic-Tac-Toe and running MCTS on it wasn’t too hard.
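
A minimal sketch of what such a game-agnostic interface could look like follows. The article only names the `GameState` and `GameAction` base classes; the method names (`legalActions`, `apply`, `isTerminal`) and the Tic-Tac-Toe subclasses are illustrative assumptions, not the author's code:

```cpp
#include <array>
#include <cassert>
#include <memory>
#include <vector>

// Generic interfaces that a game-independent MCTS can be written against (names are hypothetical).
class GameAction {
 public:
  virtual ~GameAction() = default;
};

class GameState {
 public:
  virtual ~GameState() = default;
  virtual std::vector<std::unique_ptr<GameAction>> legalActions() const = 0;
  virtual std::unique_ptr<GameState> apply(const GameAction& action) const = 0;
  virtual bool isTerminal() const = 0;
};

// Tic-Tac-Toe plugs in by subclassing both.
struct TicTacToeAction : GameAction {
  explicit TicTacToeAction(int c) : cell(c) {}
  int cell;  // 0..8, row-major
};

class TicTacToeState : public GameState {
 public:
  std::array<char, 9> cells{};  // '\0' = empty, otherwise 'X' or 'O'
  char toMove = 'X';

  char winner() const {  // '\0' while nobody has three in a row
    static const int lines[8][3] = {{0, 1, 2}, {3, 4, 5}, {6, 7, 8}, {0, 3, 6},
                                    {1, 4, 7}, {2, 5, 8}, {0, 4, 8}, {2, 4, 6}};
    for (const auto& l : lines)
      if (cells[l[0]] != '\0' && cells[l[0]] == cells[l[1]] && cells[l[1]] == cells[l[2]])
        return cells[l[0]];
    return '\0';
  }
  bool isTerminal() const override {
    if (winner() != '\0') return true;
    for (char c : cells)
      if (c == '\0') return false;
    return true;  // board full: draw
  }
  std::vector<std::unique_ptr<GameAction>> legalActions() const override {
    std::vector<std::unique_ptr<GameAction>> actions;
    if (isTerminal()) return actions;
    for (int i = 0; i < 9; ++i)
      if (cells[i] == '\0') actions.push_back(std::make_unique<TicTacToeAction>(i));
    return actions;
  }
  std::unique_ptr<GameState> apply(const GameAction& action) const override {
    // Assumes callers only pass actions produced by this game's legalActions().
    const auto& a = static_cast<const TicTacToeAction&>(action);
    assert(a.cell >= 0 && a.cell < 9 && cells[a.cell] == '\0');
    auto next = std::make_unique<TicTacToeState>(*this);
    next->cells[a.cell] = toMove;
    next->toMove = (toMove == 'X') ? 'O' : 'X';
    return next;
  }
};
```

The search only ever sees `GameState` and `GameAction`, so Reversi and Tic-Tac-Toe can share one MCTS implementation. The price is a downcast inside each game's `apply`.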

Below is an illustration of the Tic-Tac-Toe game state I tested with. I expected the MCTS algorithm to block X’s win by playing in the top-right square, but instead it chose a different square.

Since Tic-Tac-Toe is a (much) easier game than Reversi, I was able to isolate and fix the bug relatively quickly. In hindsight, I should have started with a game like Tic-Tac-Toe in the first place. But better late than never!
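
To make the Tic-Tac-Toe debugging idea concrete, here is a compact, self-contained UCT sketch. It runs on a position consistent with the one described above: X threatens to win at the top-right square, so O must block there. It is not the author's implementation, which was generic over GameState/GameAction. The `TTT` and `Node` types, the seed, and the iteration count are illustrative.

One detail is worth checking in any MCTS implementation: whose perspective each node's win count is stored from. Here each node stores the reward for the player who made the move leading into it, so a parent always picks the child that is best for the side to move.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <memory>
#include <random>
#include <utility>
#include <vector>

// Tic-Tac-Toe: cells hold 0 (empty), 1 (X) or 2 (O); index 0 is top-left, 2 is top-right.
struct TTT {
  std::array<int, 9> cells{};
  int toMove = 1;  // player to move next (1 = X, 2 = O)
  int winner() const {  // 0 while nobody has three in a row
    static const int lines[8][3] = {{0, 1, 2}, {3, 4, 5}, {6, 7, 8}, {0, 3, 6},
                                    {1, 4, 7}, {2, 5, 8}, {0, 4, 8}, {2, 4, 6}};
    for (const auto& l : lines)
      if (cells[l[0]] != 0 && cells[l[0]] == cells[l[1]] && cells[l[1]] == cells[l[2]])
        return cells[l[0]];
    return 0;
  }
  std::vector<int> moves() const {  // empty once the game is over
    std::vector<int> m;
    if (winner() != 0) return m;
    for (int i = 0; i < 9; ++i)
      if (cells[i] == 0) m.push_back(i);
    return m;
  }
  void play(int i) { cells[i] = toMove; toMove = 3 - toMove; }
};

struct Node {
  TTT state;
  int move = -1;             // move that led from the parent to this node
  Node* parent = nullptr;
  std::vector<std::unique_ptr<Node>> children;
  std::vector<int> untried;  // legal moves not yet expanded
  double wins = 0;           // reward for the player who made `move`
  int visits = 0;
};

// Final-position reward for `player`: 1 win, 0.5 draw, 0 loss.
double reward(const TTT& s, int player) {
  const int w = s.winner();
  return w == 0 ? 0.5 : (w == player ? 1.0 : 0.0);
}

int uctSearch(const TTT& start, int iterations, unsigned seed) {
  assert(iterations > 0);
  std::mt19937 rng(seed);
  Node root;
  root.state = start;
  root.untried = start.moves();
  for (int it = 0; it < iterations; ++it) {
    Node* node = &root;
    // 1. Selection: follow the UCB1-best child while the node is fully expanded.
    while (node->untried.empty() && !node->children.empty()) {
      Node* best = nullptr;
      double bestScore = -1.0;
      for (const auto& c : node->children) {
        const double score = c->wins / c->visits +
            std::sqrt(2.0 * std::log(static_cast<double>(node->visits)) / c->visits);
        if (score > bestScore) { bestScore = score; best = c.get(); }
      }
      node = best;
    }
    // 2. Expansion: add one randomly chosen untried move as a new child.
    if (!node->untried.empty()) {
      std::uniform_int_distribution<std::size_t> pick(0, node->untried.size() - 1);
      const std::size_t k = pick(rng);
      auto child = std::make_unique<Node>();
      child->move = node->untried[k];
      child->state = node->state;
      child->state.play(child->move);
      child->parent = node;
      child->untried = child->state.moves();
      node->untried.erase(node->untried.begin() + static_cast<std::ptrdiff_t>(k));
      node->children.push_back(std::move(child));
      node = node->children.back().get();
    }
    // 3. Simulation: play uniformly random moves until the game ends.
    TTT sim = node->state;
    for (auto ms = sim.moves(); !ms.empty(); ms = sim.moves()) {
      std::uniform_int_distribution<std::size_t> pick(0, ms.size() - 1);
      sim.play(ms[pick(rng)]);
    }
    // 4. Backpropagation: credit each node from the view of the player who moved into it.
    for (Node* n = node; n != nullptr; n = n->parent) {
      ++n->visits;
      if (n->parent != nullptr) n->wins += reward(sim, n->parent->state.toMove);
    }
  }
  // Choose the most-visited root child (a common, robust final-move rule).
  const Node* best = nullptr;
  for (const auto& c : root.children)
    if (best == nullptr || c->visits > best->visits) best = c.get();
  return best != nullptr ? best->move : -1;
}
```

Usage: with X on the top-left and top-middle squares, O in the center, and O to move, `uctSearch(s, 20000, 42)` should return 2, the top-right block. If a bug stored rewards from the wrong player's perspective, the search would tend to prefer moves that help X instead.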

Lesson 3: Game state representation matters (eventually)

Now that MCTS appeared to be working, I switched back to playing Reversi with it. I had set up the MCTS algorithm to run a fixed number of iterations before deciding on an action. I started with 10,000 iterations, then 100,000, then 1,000,000. The algorithm seemed to play better each time I increased the iterations by an order of magnitude. In fact, the AI played relatively well up to a certain point in the game, after which it basically gave up and played semi-randomly. As the number of iterations increased, the point where it “gave up” was pushed further out, but never far enough for it to finish and win the game.

I reflected on that for a while and realized it made sense: the algorithm treats a win as a win and a loss as a loss. It doesn’t differentiate between winning by a large margin and winning by a small one, just as it doesn’t differentiate between barely losing and losing badly. Most likely, with limited iterations, it couldn’t find any “good” actions. All the actions had similar estimated probabilities of winning or losing, so it was essentially choosing at random.
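
The outcome-only scoring described above can be written as a reward function. The disc counts are hypothetical inputs. `marginReward` is a hypothetical variant shown only for contrast; the article did not implement it, and Lesson 5 notes that the final algorithm still ignored the margin.

```cpp
#include <cassert>

// Outcome-only reward: only who won matters, not by how much.
double binaryReward(int myDiscs, int oppDiscs) {
  assert(myDiscs >= 0 && oppDiscs >= 0 && myDiscs + oppDiscs <= 64);
  if (myDiscs > oppDiscs) return 1.0;
  if (myDiscs < oppDiscs) return 0.0;
  return 0.5;  // draw
}

// Hypothetical margin-aware variant (NOT from the article): mostly the outcome, plus a small
// term proportional to the disc difference. The result stays within [0, 1].
double marginReward(int myDiscs, int oppDiscs, double marginWeight = 0.1) {
  const double margin = static_cast<double>(myDiscs - oppDiscs) / 64.0;  // in [-1, 1]
  return (1.0 - marginWeight) * binaryReward(myDiscs, oppDiscs) +
         marginWeight * (0.5 + 0.5 * margin);
}
```

Under `binaryReward`, a 33-31 win and a 64-0 win both score 1.0. That is why, when every line of play looks equally likely to win or lose, nothing pulls the search toward the stronger moves.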

I was tempted to increase the iterations to 10 million, but I hit a memory constraint. My C++ program was compiled as a 32-bit program, so it was limited to 2GB of memory. After considerable wrangling with Eclipse CDT and Windows, I got the program to compile as a 64-bit program instead. Why develop on Windows and not Linux? My home PC runs Windows so I can more easily play video games, and I didn’t want to develop inside VirtualBox unless I absolutely needed to.
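
A compile-time check can make the 64-bit requirement impossible to forget by stopping a 32-bit build outright. This is a generic C++ guard, not something the article describes. How to switch the Eclipse CDT toolchain to 64-bit depends on the installed compiler and isn't covered here.

```cpp
#include <cassert>

// Compile-time guard against an accidental 32-bit build: a 32-bit Windows process gets
// 2 GB of user address space by default, which caps how large the search tree can grow.
static_assert(sizeof(void*) == 8, "Build the MCTS program as a 64-bit binary");

// Pointer width of this build, e.g. for a startup log line.
constexpr int pointerBits() { return static_cast<int>(sizeof(void*)) * 8; }
```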

Anyway, now that the program was 64-bit, technically it could access all 16GB of my RAM. Still, I decided that using less memory was preferable in general, so I worked on reducing my memory footprint.

I had used an easy-to-code, easy-to-understand representation with vectors and optionals, which allowed any board size (larger or smaller than 8 by 8). However, that representation took more memory than it needed to. I switched to a bit representation that only supports an 8 by 8 board. There are 64 squares on the board, and conveniently there are 64 bits in a long long int. So I could represent the board with 2 long long ints: one marking which squares had pieces, and one marking the color of the pieces on those squares.
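
Here is a minimal sketch of the two-mask idea. It assumes bit `row * 8 + col` is square (row, col) and that a set bit in the color mask means white; the article doesn't specify either mapping. It uses `std::uint64_t` rather than `long long` because an unsigned 64-bit type sidesteps the sign-bit subtleties of shifting into bit 63.

```cpp
#include <cassert>
#include <cstdint>

// An 8x8 board in two 64-bit masks; bit (row * 8 + col) is square (row, col).
struct BitBoard {
  std::uint64_t occupied = 0;  // set bit = a disc is on that square
  std::uint64_t white = 0;     // assumption: set bit = white disc (only meaningful where occupied)

  static std::uint64_t bit(int row, int col) {
    assert(row >= 0 && row < 8 && col >= 0 && col < 8);
    return std::uint64_t{1} << (row * 8 + col);
  }
  bool isEmpty(int row, int col) const { return (occupied & bit(row, col)) == 0; }
  bool isWhite(int row, int col) const { return (white & bit(row, col)) != 0; }
  void place(int row, int col, bool isWhiteDisc) {
    occupied |= bit(row, col);
    if (isWhiteDisc) white |= bit(row, col); else white &= ~bit(row, col);
  }
  int count(bool whiteDiscs) const {
    std::uint64_t mask = whiteDiscs ? (occupied & white) : (occupied & ~white);
    int n = 0;
    for (; mask != 0; mask &= mask - 1) ++n;  // clear the lowest set bit each step
    return n;
  }
};
```

Two 64-bit words make the whole board 16 bytes with no heap allocation. That is a large part of why this representation is so much smaller than a vector of vectors.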

With the new representation, my memory usage dropped from 2GB to 15MB, which was far lower than I had expected (more on this later). I increased the number of iterations to 10 million, but the AI still lost in the mid-game. I ran 100 million iterations for a few turns, but each turn took very long to compute (over 30 minutes), so it was too tedious to continue.

Lesson 4: Leverage information from previous searches

I had written my MCTS algorithm as a one-shot algorithm: from a given game state (S₁), run a fixed number of iterations, output the next best action, and terminate. However, the AI's action leads to state S₂, and the other (non-AI) player's reply then leads to state S₃. That state S₃ is likely to already exist in the search tree built from S₁.

When the MCTS algorithm runs from scratch each time, all the information in the subtree of S₃ is thrown away and “wasted”. So my next feature was to give my overall AI a memory, so that each new MCTS search could build on the information from previous searches.

I did this by keeping the MCTS search tree in memory. Given the new game state, I pruned the tree so that its root became that state. MCTS could then continue its iterations from the pruned tree.
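
A common way to prune the tree is to re-root it. The sketch below keys children by a hypothetical integer action (e.g., a square index) and re-roots once per ply: once for the AI's move and once for the opponent's reply. This differs slightly from the article's wording. The author describes finding the new game state in the tree, and a state-keyed lookup would also work. `Node`, `advanceRoot` and `countNodes` are illustrative names.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>
#include <utility>

// Minimal search-tree node; children are keyed by a hypothetical integer action (e.g. square 0-63).
struct Node {
  double wins = 0;
  int visits = 0;
  std::map<int, std::unique_ptr<Node>> children;
};

// Re-roots the tree at the child reached by `action`, keeping that subtree's statistics.
// The old root and all sibling subtrees are freed when `root` goes out of scope.
// If the action was never explored, the search starts over from a fresh node.
std::unique_ptr<Node> advanceRoot(std::unique_ptr<Node> root, int action) {
  if (root != nullptr) {
    auto it = root->children.find(action);
    if (it != root->children.end() && it->second != nullptr) return std::move(it->second);
  }
  return std::make_unique<Node>();
}

// Counts the nodes in a (sub)tree, e.g. to check how much of the previous search survived.
std::size_t countNodes(const Node& node) {
  std::size_t n = 1;
  for (const auto& entry : node.children)
    if (entry.second != nullptr) n += countNodes(*entry.second);
  return n;
}
```

Usage: after the AI plays action `a` and the opponent replies with `b`, call `root = advanceRoot(advanceRoot(std::move(root), a), b);` and resume iterating from `root`.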

At first, I found that with 100,000 iterations the saved tree had very few unique nodes, which seemed suspicious. After digging in and debugging, I found bugs in my modified action-taking code, which may also have existed in the original game state representation. In particular, an action taken by one of the players wasn’t actually changing the board state. The unit tests failed to catch this bug because they all used the other player’s turn.
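
The lesson from this bug is to test move application for both colors. Below is a sketch using the two-mask layout from Lesson 3. It assumes a set bit in `white` means a white disc and that bit `r * 8 + c` is square (r, c). `applyMove` is a hypothetical function, not the author's code. Note that both branches of the final update must touch the board, which is exactly the kind of asymmetry a one-color test can miss.

```cpp
#include <cassert>
#include <cstdint>

// Two masks as in Lesson 3; assumption: a set bit in `white` = white disc (only where occupied).
struct BitBoard {
  std::uint64_t occupied = 0;
  std::uint64_t white = 0;
};

std::uint64_t bit(int r, int c) { return std::uint64_t{1} << (r * 8 + c); }
bool onBoard(int r, int c) { return r >= 0 && r < 8 && c >= 0 && c < 8; }

// -1 = empty, 0 = black, 1 = white.
int colorAt(const BitBoard& b, int r, int c) {
  if ((b.occupied & bit(r, c)) == 0) return -1;
  return (b.white & bit(r, c)) != 0 ? 1 : 0;
}

// Plays a disc for the side to move at (r, c) and flips every bracketed line of opponent discs.
// Returns false and leaves the board unchanged if the move is illegal.
bool applyMove(BitBoard& b, int r, int c, bool whiteToMove) {
  assert(onBoard(r, c));
  if (colorAt(b, r, c) != -1) return false;
  const int me = whiteToMove ? 1 : 0;
  const int opp = 1 - me;
  std::uint64_t flips = 0;
  for (int dr = -1; dr <= 1; ++dr) {
    for (int dc = -1; dc <= 1; ++dc) {
      if (dr == 0 && dc == 0) continue;
      std::uint64_t line = 0;  // opponent discs seen so far in this direction
      int rr = r + dr, cc = c + dc;
      while (onBoard(rr, cc) && colorAt(b, rr, cc) == opp) {
        line |= bit(rr, cc);
        rr += dr;
        cc += dc;
      }
      // The line only flips if it is capped by one of the mover's own discs.
      if (line != 0 && onBoard(rr, cc) && colorAt(b, rr, cc) == me) flips |= line;
    }
  }
  if (flips == 0) return false;
  const std::uint64_t changed = flips | bit(r, c);
  b.occupied |= bit(r, c);
  if (whiteToMove) b.white |= changed; else b.white &= ~changed;  // both branches update the board
  return true;
}
```

A test in this style plays one move for each color from the opening position. It then checks that the flipped disc changed color both times and that an illegal move leaves the board untouched.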

After fixing the bug, I found that with 1 million iterations the search tree had about a million unique nodes (as expected). Memory usage was around 1GB, or roughly 1,000 bytes per state. That still seemed high, but it was around the right order of magnitude. The pruned search tree had about 66,000 unique nodes. That isn’t a lot, but those nodes keep their statistics (wins/losses/visits) from move to move, so the estimates keep improving.

Finally, with 1 million iterations in the MCTS algorithm and the search tree saved between moves, my Reversi AI won against me!

The MCTS algorithm won 33 to 31, a small margin, and it felt like a close game throughout. Since my spouse is better at Reversi than me, I would have to increase the number of iterations!

Lesson 5: Profile the code, and compile with optimizations when the code is production-ready

My Reversi MCTS algorithm was running in Debug mode in Eclipse CDT, taking around 7 minutes for 1 million iterations. I wanted to increase the number of iterations before asking my spouse to play against it, but I didn’t want to wait ages between turns.

I profiled the code with gprof and optimized a number of the functions it flagged. Finally, I switched on the C++ compiler optimization flags, which made the code run faster but potentially harder to debug. I was now confident the algorithm was working properly and no longer needed to debug on the fly, so it was almost time to play against my spouse.
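
Before and after turning on optimizations, it helps to measure throughput with a fixed iteration count. Below is a small timing helper, assuming a GCC toolchain (the article doesn't name the compiler). `secondsFor` is a hypothetical name, and `step` stands in for one MCTS iteration.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>
#include <functional>

// Wall-clock seconds spent running `step` `iterations` times. steady_clock is monotonic,
// which makes it the appropriate clock for measuring intervals.
// Build notes, assuming GCC:
//   profiling: add -pg when compiling and linking, run the program, then `gprof <binary> gmon.out`
//   release:   compile with -O2 (and -DNDEBUG to compile out assert checks)
double secondsFor(const std::function<void()>& step, std::int64_t iterations) {
  assert(iterations > 0);
  const auto start = std::chrono::steady_clock::now();
  for (std::int64_t i = 0; i < iterations; ++i) step();
  const std::chrono::duration<double> elapsed = std::chrono::steady_clock::now() - start;
  return elapsed.count();
}
```

For scale: the Debug-mode rate quoted above, about 7 minutes per 1 million iterations, is roughly 2,400 iterations per second. If time scaled linearly, 5 million iterations would take about 35 minutes per move, which is why the optimized build mattered before raising the iteration count.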

I played against the algorithm with 5 million iterations, and now it felt “better”. It took 3 of the 4 corners and won by a larger margin, 37 to 27. The algorithm still didn’t differentiate between winning by a small margin and a large one. Even so, it consistently made moves that impressed me, and I felt that none of my available moves were “good”.

Final lesson: run and achieve the goal!

Now I was confident that the Reversi MCTS algorithm stood a chance against my spouse. Running with 5 million iterations, it played against my spouse and won! 😁 However, my spouse appeared to be taking it easy on the algorithm, and the algorithm took the first two corners of the game.

After taking those corners, it pressed its advantage and severely limited my spouse’s moves. Even though my spouse went easy on the algorithm, a win is a win! We have agreed to a rematch in which my spouse will go all-out against the algorithm.

Originally published at: https://medium.com/dev-genius/winning-reversi-with-monte-carlo-tree-search-3e1209c160be
