NLP GPT paper

A lot of ink has been spilled (or pixels illuminated) about the wonders of GPT-3, OpenAI’s latest and greatest language model. In the words of Venturebeat:

GPT-3, a language model capable of achieving state-of-the-art results on a set of benchmark and unique natural language processing tasks that range from language translation to generating news articles to answering SAT questions.

And as tweeted by MIT:

To be fair, some of the examples are amazing:

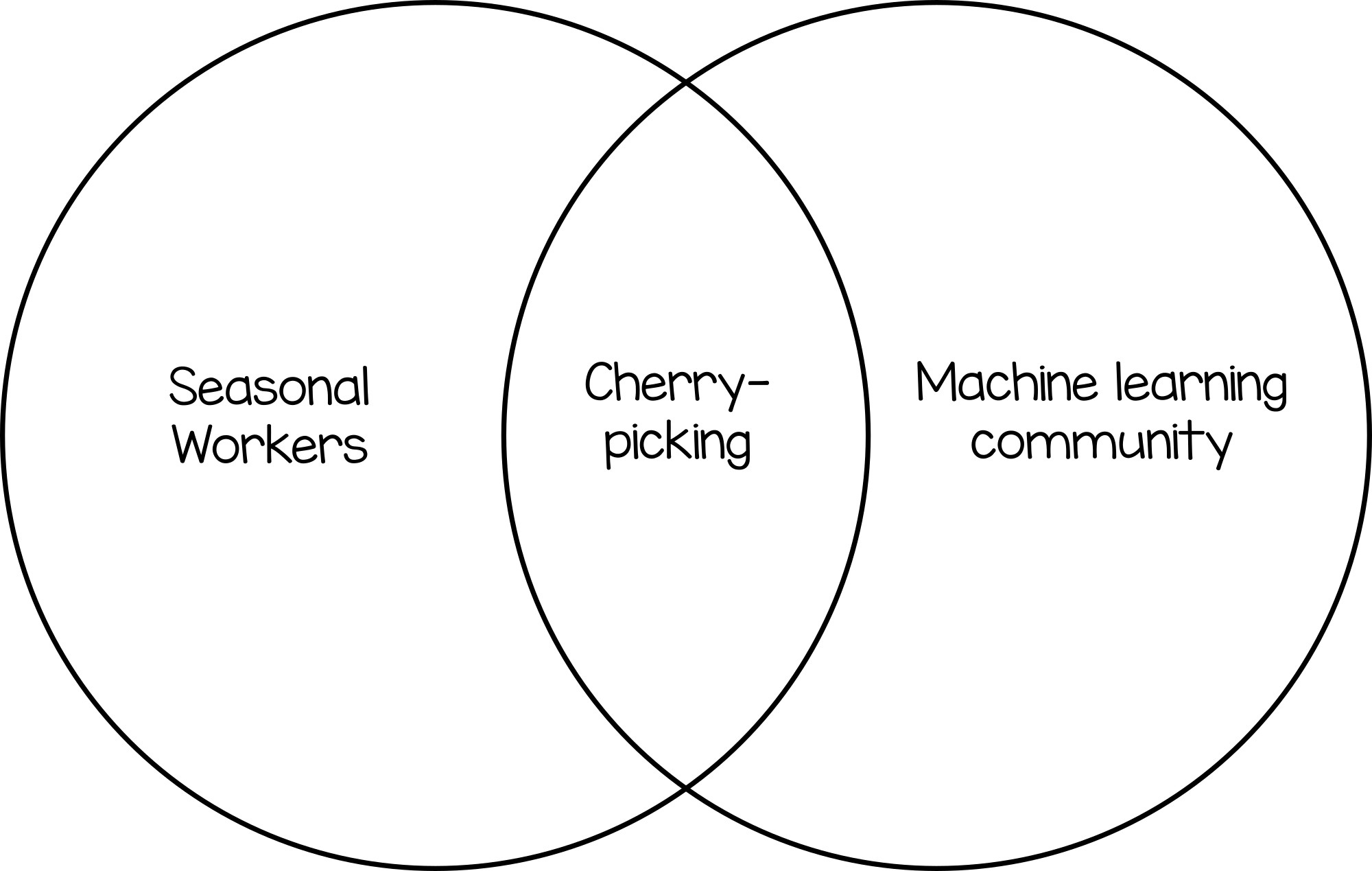

But like most examples spat out by language models, almost all of these were hand-selected by humans after many runs, because not-so-good results just wouldn’t make the news.

Even bearing that in mind, I’m still blown away by what I’ve seen of GPT-3. But right now there’s no way I can get my hands on it for any practical purpose: it’s only available to a few researchers at the moment. What about us mere mortals? Other than playing AI Dungeon, of course…

Play with GPT-2!

For now, we can play with GPT-3’s older brother, GPT-2. A few weeks ago I wrote easy_text_generator, a tool to generate text of any length from language models in your browser. You can use GPT-2 or a few pre-trained or distilled versions of it (downloaded from huggingface) to generate different kinds of text, like movie scripts, Star Trek scripts, IMDB movie reviews, or just text in general.

Or, if you want something with zero installation, you can generate text directly on huggingface’s site, though only up to a limited length. For example, try out GPT-2 here.
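The "any length" part of easy_text_generator deserves a closer look, because GPT-2 can only attend to a fixed window of tokens (1,024 for the released models). How easy_text_generator actually works around that isn't covered here; one common approach, sketched below under that assumption, is to generate in chunks and re-seed each chunk with the tail of the text produced so far. The `generate_chunk` callable is a hypothetical stand-in for a real model call, and words stand in for tokens to keep the sketch dependency-free.

```python
from typing import Callable, List


def generate_long_text(
    seed: str,
    generate_chunk: Callable[[str], str],
    target_words: int,
    window_words: int = 200,
) -> str:
    """Generate about `target_words` words by repeatedly prompting with only
    the last `window_words` words, so the prompt never outgrows the context.

    `generate_chunk` is hypothetical: given a prompt, it returns only the
    newly generated continuation. Words approximate tokens here; a real
    implementation would count tokens with the model's tokenizer.
    """
    words: List[str] = seed.split()
    while len(words) < target_words:
        # Only the tail of the text fits in the model's context window.
        prompt = " ".join(words[-window_words:])
        new_words = generate_chunk(prompt).split()
        if not new_words:  # stop instead of looping forever on empty output
            break
        words.extend(new_words)
    return " ".join(words[:target_words])


if __name__ == "__main__":
    # Toy stand-in for a language model: always continues with three words.
    def fake_model(prompt: str) -> str:
        return "and so on"

    text = generate_long_text("In short, neural search", fake_model, target_words=20, window_words=5)
    print(len(text.split()))  # prints 20
```

The trade-off of this design is that anything older than the window is forgotten, which is one reason long generated texts tend to drift off topic.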

Let’s Generate Some Text!

As I said, most examples of GPT-3 you may have seen have been cherry-picked. To make a reasonably fair comparison, I’ll run each language model 5 times and cherry-pick one output for each model. I’ll put the other outputs in another post; otherwise, this one will get too long.
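Each run comes out different because text generation typically samples the next token from the model's predicted probability distribution instead of always taking the single most likely token. The sketch below shows temperature-scaled top-k sampling, one common sampling scheme; the toy logits, `k`, and `temperature` values are made up for illustration and aren't necessarily the settings easy_text_generator uses.

```python
import math
import random
from typing import Dict


def sample_top_k(logits: Dict[str, float], k: int, temperature: float, rng: random.Random) -> str:
    """Sample one token from the k highest-scoring candidates.

    Scores are divided by `temperature` (lower = more conservative),
    only the top k are kept, and a softmax turns them into weights.
    """
    if k < 1 or temperature <= 0:
        raise ValueError("k must be >= 1 and temperature must be > 0")
    top = sorted(logits.items(), key=lambda kv: kv[1], reverse=True)[:k]
    scaled = [score / temperature for _, score in top]
    m = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scaled]
    tokens = [tok for tok, _ in top]
    return rng.choices(tokens, weights=weights, k=1)[0]


if __name__ == "__main__":
    # Hypothetical next-token scores after "In short, neural search is a new ..."
    logits = {"approach": 3.0, "way": 2.5, "method": 2.0, "banana": -4.0}
    # Five "runs" with different random seeds can pick different continuations.
    for run in range(5):
        rng = random.Random(run)
        print(run, sample_top_k(logits, k=3, temperature=0.8, rng=rng))
```

With `k=3`, an unlikely token such as "banana" can never be chosen, while the three plausible ones still compete, which is why five runs from the same seed text can diverge after just a few words.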

Let’s look at general-purpose text first. Since I just started working at a company doing neural search, we’ll use some appropriate seed text:

In short, neural search is a new approach to retrieving information.

GPT-2

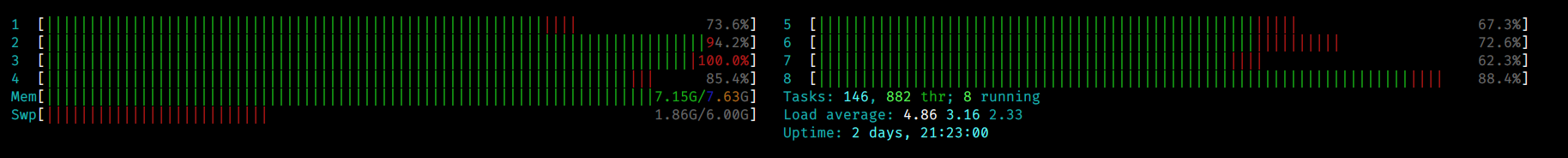

GPT-2 can create amazing results (check out the English-speaking unicorns example), but it takes its sweet time and gobbles up CPU and memory. Here’s what it spat out after about 10 minutes:

In short, neural search is a new approach to retrieving information. In an ideal world, there would be lots of new data to search, but most of it is already stored in a relational database. That’s why most of the time it’s best not to store any information in the database at all. And in the future, what we want is that the information is simply searchable. But even if the information is readily searchable, there is still the problem of how to combine multiple search terms in the database. One way to combine search terms is to have search engines, but when we search for something on Google or any other search engine, they can often be quite good, but sometimes they’ll make mistakes, and they’ll only show some of the results, and sometimes they won’t show all of the results or they’ll say something very specific but you can’t look it up in that particular search. One of the most important goals of neural search is to let them search even more easily so they can make better decisions. But there’s still one issue: some types of problems don’t lend themselves to it and this can lead to a lot of lost information if the search engine doesn’t have the right tool in the right place. Nurture Now, I think we can take some lessons from neural search to solve this problem...

Looks somewhat coherent to me, though it doesn’t keep a clear narrative going, and Dear Lord, some of those run-on sentences!
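Why is GPT-2 so slow and memory-hungry? A back-of-the-envelope estimate: just holding the weights takes roughly (number of parameters) × (bytes per parameter), before counting activations or framework overhead. The sketch assumes 32-bit floats (4 bytes per parameter) and uses the approximate published sizes of the GPT-2 family; which size easy_text_generator loads by default isn't stated in this post.

```python
# Approximate published parameter counts for the GPT-2 family.
GPT2_PARAMS = {
    "gpt2": 124_000_000,
    "gpt2-medium": 355_000_000,
    "gpt2-large": 774_000_000,
    "gpt2-xl": 1_500_000_000,
}


def weight_memory_gb(num_params: int, bytes_per_param: int = 4) -> float:
    """Memory needed just to store the weights, in gigabytes (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, params in GPT2_PARAMS.items():
        print(f"{name:12s} ~{weight_memory_gb(params):.1f} GB in fp32")
```

Under these assumptions the smallest GPT-2 needs about 0.5 GB for its weights and the 1.5B-parameter version about 6 GB, and every generated token requires a full pass through all of them.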

distilGPT2

distilGPT2 is a smaller, more efficient version of GPT-2, trained to mimic the full-size model with fewer layers and parameters. Judging by its outputs, maybe it lost a few too many neurons along the way! It took about a minute to generate each result:

In short, neural search is a new approach to retrieving information. But in recent years, the field has gained little traction, and now there is evidence that it is more accurate in detecting and analyzing neural networks on human skin. Here is the gist of this story. The researchers of the Chinese National Scientific Research Foundation (CSRF) have teamed up with researchers from the University of California, San Diego, who are working on a neuroimaging and learning network that will map up and reconstruct neural networks on human skin. This brain project aims to look into the brain’s visual cortex, so that it can learn from individuals without losing information. The CSRF will also use a neural network to map the brain’s sensory cortex and the cerebral cortex, as well as the anterior and caudate cortex, which is part of the brain’s central cortex. But this may not be the first step in the quest to decipher neural networks, a method used to search for patterns of information on a wide variety of people, including those who are not on the receiving end of a drug. Researchers at University of California, San Diego (USDA) in collaboration with scientists at the Chinese National Scientific Research Foundation (CSRF) in collaboration with CSRF, have developed a technique that allows the scientists to search for patterns of information on skin. The researchers analyzed the brain’s sensory...
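A quick aside on the name: the "distil" in distilGPT2 refers to knowledge distillation, where a smaller student network is trained to reproduce the output distribution of a larger teacher (here, GPT-2). The sketch below computes the core soft-target term of such a loss for a single next-token prediction: the KL divergence between temperature-softened teacher and student distributions. The toy logits and the temperature of 2.0 are illustrative assumptions, and real distillation recipes typically combine this term with other losses, so this is not the exact DistilGPT2 training objective.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature flattens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float], student_logits: List[float], temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions.

    Multiplied by temperature**2, a common convention that keeps gradient
    magnitudes comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # the student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [4.0, 2.0, 0.5]  # hypothetical next-token logits from the teacher
    print(distillation_loss(teacher, [3.8, 2.1, 0.4]))  # student close to the teacher
    print(distillation_loss(teacher, [0.5, 2.0, 4.0]))  # student ranks tokens backwards
```

A student whose logits are close to the teacher's gets a loss near zero, while one that ranks the candidate tokens the other way round is penalized heavily; softening with a temperature lets the student also learn from the teacher's "second choices".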

What if we throw that seed text at something meant to generate something different, like movie scripts?

gpt2_film_scripts

gpt2_film_scripts was made by fine-tuning GPT-2 on 1,300 screenplays, complete with the weird spacing and margins they have compared to regular text. This really shows when you generate examples. Like GPT-2 (which this model is based on), examples took about 10 minutes each:

In short, neural search is a new approach to retrieving information. There have been many, many studies demonstrating that what we find, we search for. This is a kind of computer-aided search.

ALICE I don’t like that. I feel like an a**h*le.

She is still getting out of bed, having gotten the morning’s lesson. She sits at the desk, studying the computer screen. It is a huge pile of documents, and she quickly glances through them, and quickly notices the picture of a cat. She stares at the picture, and takes out a tiny file.

As we can see, the language model has picked up how to swear and isn’t afraid to do it! (I had to manually censor its potty mouth.) We can also see it understands character lines and stage directions. And just like every netizen out there, it has a thing for cats. (If it seems a little short compared to the other samples, that’s because it adds a lot of spacing for margins, which Medium strips out.)
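That spacing is something the model learned from its training data. GPT-2 uses a special `<|endoftext|>` token to mark document boundaries, and a common way to prepare a fine-tuning corpus is to join the documents with that token into one training file. The sketch below does this for a list of screenplays while deliberately leaving their internal whitespace alone, since the indentation is exactly what the model is supposed to pick up. The directory layout and file names are hypothetical, and this isn't necessarily how gpt2_film_scripts was built.

```python
from pathlib import Path
from typing import Iterable

EOT = "<|endoftext|>"  # GPT-2's end-of-text token


def build_corpus(scripts: Iterable[str]) -> str:
    """Join screenplays into one training string separated by GPT-2's EOT token.

    Only whitespace at the very end of each script is trimmed; internal
    indentation and blank lines are kept so the model can learn screenplay layout.
    """
    return f"\n{EOT}\n".join(s.rstrip() for s in scripts) + f"\n{EOT}\n"


def build_corpus_from_dir(script_dir: str, out_file: str) -> int:
    """Read every *.txt screenplay in `script_dir` (hypothetical layout), write
    the combined corpus to `out_file`, and return the number of scripts used."""
    paths = sorted(Path(script_dir).glob("*.txt"))
    texts = [p.read_text(encoding="utf-8") for p in paths]
    Path(out_file).write_text(build_corpus(texts), encoding="utf-8")
    return len(texts)
```

Keeping the whitespace is the design choice that matters here: normalizing it away would give a cleaner-looking corpus but a model that no longer writes in screenplay format.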

trekbot

One of my dreams is to create Star Trek episodes fully generated by an AI. Lieutenant Commander Data Science, if you will. So I trained gpt2_film_scripts on every Star Trek script I could find.

And… after making that tragic Star Trek pun, my easy_text_generator crashed on me. I have a feeling these AIs may be smarter (and more emotional) than we think.

After restarting:

In short, neural search is a new approach to retrieving information. A new way forward.

FARRIS: The key is the neural patterns themselves, not the computer.

SISKO: But a computer can do this.

FARRIS: It’s a breakthrough in computational neural net theory. It is clear they need to be designed to learn, replicate, and then share their knowledge for practical applications.

SISKO: Then you’ve got a problem. How do we know it’ll work?

FARRIS: We’ll probably never see it on paper, but the neural pattern generator is the single most promising piece of hardware I’ve ever seen written about, all things considered.

SISKO: We’ll get there eventually. When we do, can we trust this?

[Farris’ office]

FARRIS: A neural pattern generator will be the single most promising piece of hardware I’ve ever seen written about all things considered.

[Captain’s office]

(Morn is giving a speech at Starfleet Headquarters, reading.)

FARRIS: My colleagues and I have spent a year developing the prototype, which will be ready for launch by the end of the year.

SISKO: How will that affect Starfleet operations?

Not bad at all. For anyone who knows Star Trek, that sounds mostly convincing (though Morn giving a speech is plainly hilarious, since he famously never says a word on screen).

That’s a wrap (for now)

As we can see above, GPT-2 is pretty powerful, both for generating general-purpose text and for more specific use cases (like Star Trek scripts).

For these specific use cases, though, GPT-2 needs a lot of data thrown at it, and a lot of training epochs over all that data. GPT-3 promises “few-shot learning”, so we could throw just a few examples at it and it would quickly pick up the style. So if you fancy a new book in the Lord of the Rings series, or a press release about <insert product here>, GPT-3 might be the language model for you!
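Few-shot learning in the GPT-3 sense happens entirely in the prompt: no weights are updated; you show the model a handful of input/output examples followed by a new input and let it continue the pattern. The helper below builds such a prompt. The `Input:`/`Output:` labels, the `###` separator, and the "Acme" press-release examples are hypothetical choices for illustration, not a format GPT-3 requires.

```python
from typing import List, Tuple


def few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
    sep: str = "\n###\n",
) -> str:
    """Build a few-shot prompt: an instruction, k worked examples, then the
    new query with its output left blank for the model to complete."""
    blocks = [instruction.strip()]
    for inp, out in examples:
        blocks.append(f"Input: {inp}\nOutput: {out}")
    blocks.append(f"Input: {query}\nOutput:")  # the model continues from here
    return sep.join(blocks)


if __name__ == "__main__":
    prompt = few_shot_prompt(
        "Write a one-sentence press release for each product.",
        [
            ("a solar-powered kettle", "Acme today announced a kettle that boils water using nothing but sunlight."),
            ("a self-folding umbrella", "Acme today unveiled an umbrella that folds itself the moment the rain stops."),
        ],
        "a noise-cancelling pillow",
    )
    print(prompt)
```

Compared with fine-tuning GPT-2 on thousands of scripts, the "training data" here is two examples that travel with every request, which is what makes the approach so cheap to try.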

If you want an easy way to get started generating your own text, check out the easy_text_generator repo. I’d love to see what comes out!

Translated from: https://towardsdatascience.com/gpt-3-is-the-future-but-what-can-nlp-do-in-the-present-7aae3f21e8ed
