
Hyperparameter optimization is often one of the final steps in a data science project. Once you have a shortlist of promising models you will want to fine-tune them so that they perform better on your particular dataset.


In this post, we will go over three techniques for finding optimal hyperparameters, with examples of how to apply them to models in Scikit-Learn and, finally, to a neural network in Keras. The three techniques we will discuss are:


- Grid Search

- Randomized Search

- Bayesian Optimization

You can view the Jupyter notebook here.


Grid Search

One option would be to fiddle around with the hyperparameters manually until you find a combination of values that optimizes your performance metric. This would be very tedious work, and you may not have time to explore many combinations.


Instead, you should get Scikit-Learn’s GridSearchCV to do it for you. All you have to do is tell it which hyperparameters you want to experiment with and what values to try out, and it will use cross-validation to evaluate all the possible combinations of hyperparameter values.

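To make "evaluate all the possible combinations with cross-validation" concrete, here is a rough sketch of the loop that GridSearchCV automates. It is not how the library is implemented internally. The dataset (`load_digits`, cut to 500 samples), the two hyperparameters, and their candidate values are all assumptions chosen to keep the example fast.

```python
from itertools import product

from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

# Assumption: a small stand-in dataset (8x8 digit images bundled with Scikit-Learn)
X, y = load_digits(return_X_y=True)
X, y = X[:500], y[:500]

# Assumption: illustrative candidate values for two hyperparameters
candidates = {
    "n_estimators": [10, 30],
    "max_depth": [5, None],
}

names = list(candidates)
results = []
for values in product(*candidates.values()):
    params = dict(zip(names, values))
    model = RandomForestClassifier(random_state=42, **params)
    # Five-fold cross-validation: one accuracy score per fold
    scores = cross_val_score(model, X, y, cv=5)
    results.append((scores.mean(), params))

# Keep the combination with the highest mean cross-validated accuracy
best_score, best_params = max(results, key=lambda r: r[0])
print(best_params, round(best_score, 3))
```

GridSearchCV wraps this loop and adds conveniences such as refitting the best model on the full training set and optional parallel execution.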

Let's work through an example where we use GridSearchCV to search for the best combination of hyperparameter values for a RandomForestClassifier trained using the popular MNIST dataset.


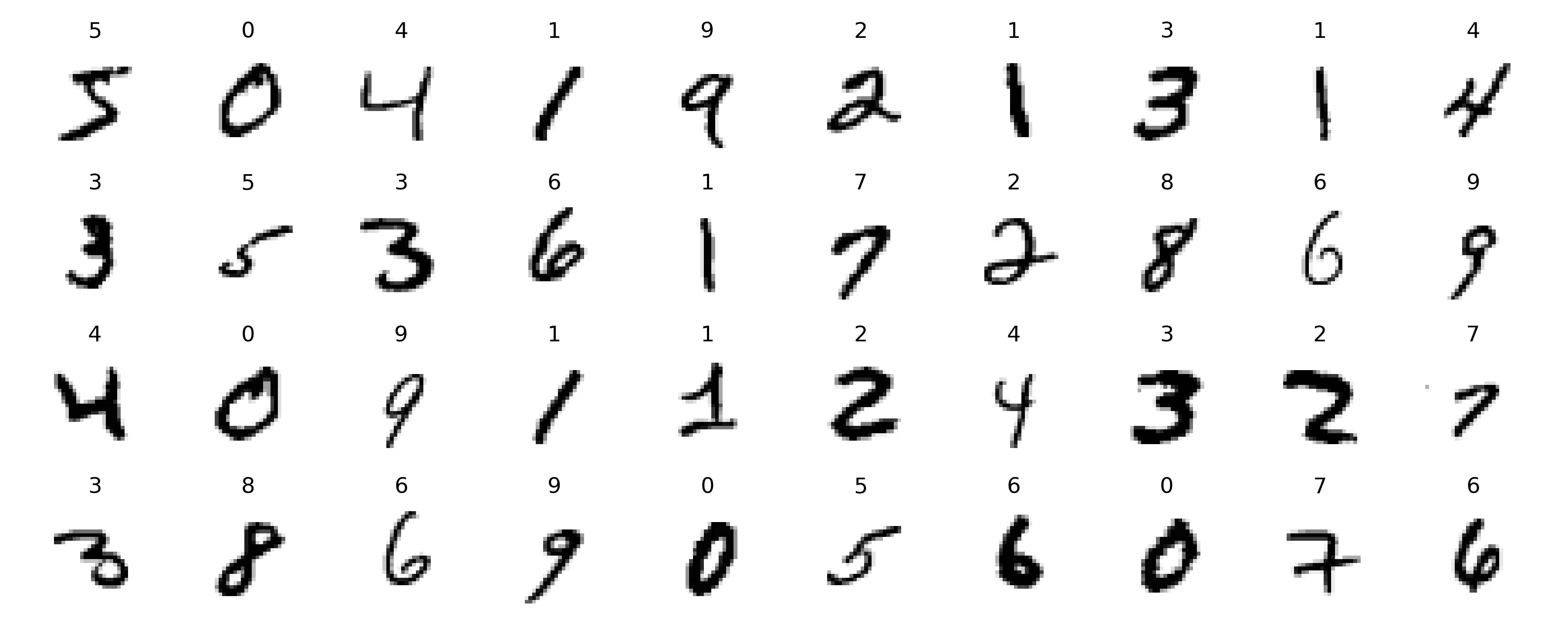

To give you a feel for the complexity of the classification task, the figure below shows a few images from the MNIST dataset:

为了让您感觉到分类任务的复杂性,下图显示了MNIST数据集中的一些图像:

To implement GridSearchCV, we need to define a few things. The first is the set of hyperparameters we want to experiment with and the values we want to try out. Below, we specify this in a dictionary called param_grid.

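A param_grid of this shape might look like the sketch below. The hyperparameter names are the ones this post lists; the candidate values are assumptions chosen only so that there is one value for bootstrap and two for every other entry. `ParameterGrid` is used here just to count the combinations the grid expands to.

```python
from sklearn.model_selection import ParameterGrid

# Hyperparameter names follow the text; the values are illustrative assumptions
param_grid = {
    "bootstrap": [True],
    "max_depth": [6, 10],
    "max_features": ["sqrt", "log2"],
    "min_samples_leaf": [3, 5],
    "min_samples_split": [4, 6],
    "n_estimators": [100, 350],
}

# ParameterGrid expands the dictionary into every combination of values
n_combinations = len(ParameterGrid(param_grid))
print(n_combinations)  # 1 x 2 x 2 x 2 x 2 x 2 = 32
```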

The param_grid tells Scikit-Learn to evaluate 1 x 2 x 2 x 2 x 2 x 2 = 32 combinations of the specified bootstrap, max_depth, max_features, min_samples_leaf, min_samples_split and n_estimators values. Each of these 32 combinations will be trained 5 times (since we are using five-fold cross-validation). In other words, all in all, there will be 32 x 5 = 160 rounds of training! It may take a long time, but when it is done you can get the best combination of hyperparameters lik
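As a minimal sketch of running such a search, the snippet below fits GridSearchCV with a 32-combination grid and five-fold cross-validation. To keep it fast, it assumes the small `load_digits` dataset (cut to 500 samples) in place of MNIST and uses small, illustrative hyperparameter values; none of these choices come from the original notebook.

```python
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Assumption: a small stand-in for MNIST so the search finishes quickly
X, y = load_digits(return_X_y=True)
X, y = X[:500], y[:500]

# Assumption: illustrative values, 1 x 2 x 2 x 2 x 2 x 2 = 32 combinations
param_grid = {
    "bootstrap": [True],
    "max_depth": [6, 10],
    "max_features": ["sqrt", "log2"],
    "min_samples_leaf": [3, 5],
    "min_samples_split": [4, 6],
    "n_estimators": [10, 20],
}

grid_search = GridSearchCV(
    RandomForestClassifier(random_state=42),
    param_grid,
    cv=5,                # five-fold cross-validation
    scoring="accuracy",
)
grid_search.fit(X, y)

# Number of cross-validation fits: combinations x folds
n_fits = len(grid_search.cv_results_["params"]) * grid_search.n_splits_
print(n_fits)  # 32 x 5 = 160

# Best combination found and its mean cross-validated accuracy
print(grid_search.best_params_)
print(grid_search.best_score_)
```

With the default `refit=True`, the model retrained on all of `X` and `y` with the winning combination is available as `grid_search.best_estimator_`.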
