Differences Between Logistic Regression and Linear Regression

Here is some technical information on the regression types and techniques available in machine learning. It is a reference article that gives a little insight into the advantages and disadvantages of each type.

Regression

"Regression" is a term for statistical methods that attempt to fit a model to data in order to find the relationship between the dependent (outcome) variable and the predictor (independent) variable(s).

Assuming it fits the data well, the estimated model may then be used either to describe the relationship between the two groups of variables (explanation) or to predict new values (prediction).

There are many types of regression models; here are a few commonly used ones.

1. Simple Linear Regression

It is a straight-line regression between an outcome variable (Y) and a single explanatory or predictor variable (X).

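Equation:

$$Y = \beta_0 + \beta_1 X + \varepsilon$$

where β0 is the intercept, β1 is the slope, and ε is the error term.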

Uses:

- When we have only one feature variable and one target variable.
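As a minimal sketch of the straight-line fit described above, the least-squares slope and intercept can be computed in closed form. The function name `fit_simple_linear` and the toy data are illustrative assumptions, not part of the original article.

```python
def fit_simple_linear(xs, ys):
    """Return (intercept, slope) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = sum of cross-deviations / sum of squared x-deviations
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical toy data lying exactly on Y = 1 + 2X
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
intercept, slope = fit_simple_linear(xs, ys)
print(intercept, slope)
```

Because the toy data contain no noise, the fitted line recovers the generating intercept and slope; with real data the fit is only the best straight-line approximation.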

2. Multiple Linear Regression

It is the same as simple linear regression, but now with possibly multiple explanatory or predictor variables.

Equation:

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_n X_n + \varepsilon$$

Uses:

- When we want to predict the value of a variable based on the values of two or more other variables.
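With several predictors, the least-squares coefficients can be found by solving the normal equations. The sketch below does this in plain Python with a small Gaussian-elimination helper; the names `solve` and `fit_multiple_linear` and the toy data are assumptions made for illustration, and a numerical library would normally be used instead.

```python
def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_multiple_linear(rows, ys):
    """Least-squares coefficients [b0, b1, ..., bp] via the normal equations."""
    design = [[1.0] + [float(v) for v in r] for r in rows]  # intercept column first
    p = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(p)] for i in range(p)]
    xty = [sum(d[i] * y for d, y in zip(design, ys)) for i in range(p)]
    return solve(xtx, xty)


# Hypothetical noise-free data generated from Y = 1 + 2*X1 - 3*X2
rows = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1)]
ys = [1 + 2 * x1 - 3 * x2 for x1, x2 in rows]
coefs = fit_multiple_linear(rows, ys)
print(coefs)
```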

Advantages of Linear Regression:

- Linear regression performs well when the relationship between the features and the target is approximately linear. We can use it to find the nature of the relationship between the variables.

- Linear regression is easy to implement and interpret, and very efficient to train.

- Linear regression is prone to over-fitting, but this can be mitigated with dimensionality reduction techniques, regularization (L1 and L2), and cross-validation.

Disadvantages of Linear Regression:

- Assumes linearity

- Prone to over-fitting

- Sensitive to outliers

- Prone to multicollinearity (in multiple regression)

3. Polynomial Regression

It is a form of linear regression in which the relationship between the independent variable x and the dependent variable y is modeled as an nth-degree polynomial.

Equation:

$$y = \beta_0 + \beta_1 x + \beta_2 x^2 + \dots + \beta_n x^n + \varepsilon$$

Uses:

- It is used to model a nonlinear relationship between the features and the target.

Advantages:

- A polynomial can provide a good approximation of the relationship between the dependent and independent variables.

- A broad range of functions can be fit under it.

- Polynomials fit a wide range of curvature.

Disadvantages:

- Very sensitive to outliers.
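Polynomial regression counts as a form of linear regression because the model is still linear in its coefficients: each input x is expanded into the features 1, x, x², and so on, and a linear model is applied to those. A minimal sketch of that expansion follows; the function names and the coefficient values are hypothetical, chosen only for illustration.

```python
def polynomial_features(x, degree):
    """Expand a single input x into [1, x, x^2, ..., x^degree]."""
    return [x ** k for k in range(degree + 1)]


def predict(coefs, x):
    """Evaluate a polynomial model whose coefficients are ordered by power."""
    feats = polynomial_features(x, len(coefs) - 1)
    return sum(c * f for c, f in zip(coefs, feats))


# Hypothetical coefficients for y = 1 + 0*x + 2*x^2
coefs = [1.0, 0.0, 2.0]
print(polynomial_features(2, 3))
print(predict(coefs, 3))
```

Once the features are expanded, the coefficients can be estimated with any ordinary linear regression routine.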

4. Logistic Regression

It is a supervised classification algorithm in which the dependent variable is binary.

Equation:

$$P(Y = 1 \mid X) = \frac{1}{1 + e^{-(\beta_0 + \beta_1 X_1 + \dots + \beta_n X_n)}}$$

Uses:

- When the dependent variable is binary.

Advantages:

- Simple and easy to implement.

Disadvantages:

- It cannot solve nonlinear problems because its decision surface is linear.

- It will not perform well if the features are not correlated with the target, or if the features are very similar to (highly correlated with) each other.
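To make the binary-outcome model concrete, here is a minimal sketch that fits a one-feature logistic regression by plain gradient descent on the log-loss. The learning rate, step count, function names, and toy data are all assumptions made for illustration; a library implementation would normally be preferred.

```python
import math


def sigmoid(z):
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.1, steps=2000):
    """Fit P(y=1|x) = sigmoid(b0 + b1*x) by gradient descent on the mean log-loss."""
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        g0 = g1 = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(b0 + b1 * x) - y  # derivative of log-loss w.r.t. the score
            g0 += err
            g1 += err * x
        b0 -= lr * g0 / n
        b1 -= lr * g1 / n
    return b0, b1


# Hypothetical toy data; the classes overlap so the coefficients stay finite
xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
ys = [0, 0, 1, 0, 1, 1]
b0, b1 = fit_logistic(xs, ys)
print(sigmoid(b0 + b1 * -2.0), sigmoid(b0 + b1 * 2.0))
```

The decision boundary is the single point where b0 + b1*x = 0, which illustrates why the decision surface of this model is linear.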

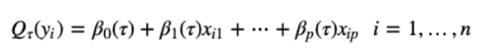

5. Quantile Regression

It models a chosen quantile (for example, the median) of the dependent variable given the predictors, rather than its mean.

Equation:

Uses:

- Quantile regression is an extension of linear regression used when the conditions of linear regression, i.e., linearity and normality, are not met.

Advantages:

- Quantile regression provides greater flexibility than other regression methods to identify differing relationships at different parts of the distribution of the dependent variable.

Disadvantages:

- It is computationally more demanding than ordinary least squares, and estimates at extreme quantiles can be unstable when data are scarce.
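Quantile regression is usually fit by minimizing the "pinball" (check) loss, which penalizes under- and over-predictions asymmetrically according to the target quantile τ. The sketch below is an illustration under that assumption, not the article's own formulation: it shows that, for a constant prediction, the value minimizing the pinball loss at τ = 0.5 is the sample median, which is unaffected by the outlier in the hypothetical data.

```python
def pinball_loss(y_true, y_pred, tau):
    """Check loss for one observation at quantile level tau (0 < tau < 1)."""
    diff = y_true - y_pred
    return tau * diff if diff >= 0 else (tau - 1) * diff


def total_loss(ys, c, tau):
    """Total pinball loss when every observation is predicted by the constant c."""
    return sum(pinball_loss(y, c, tau) for y in ys)


# Hypothetical sample with one large outlier
ys = [1, 2, 3, 4, 100]
best_median = min(ys, key=lambda c: total_loss(ys, c, 0.5))
mean = sum(ys) / len(ys)
print(best_median, mean)
```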

6. Lasso Regression (Least Absolute Shrinkage and Selection Operator)

- It is a regularization technique that uses shrinkage, where estimates are shrunk towards a central point; in lasso, the coefficients are shrunk towards zero.

- It performs L1 regularization, i.e., it adds a penalty equal to the sum of the absolute values of the coefficients.

Equation:

$$\min_{\beta} \left( \text{LS Obj} + \lambda \sum_{j} |\beta_j| \right)$$

Where,

LS Obj stands for Least Squares Objective, which is nothing but the linear regression objective without regularization.

λ is the tuning parameter that controls the amount of regularization. The bias increases as λ increases, and the variance decreases as the amount of shrinkage (λ) increases.

Uses:

- To avoid many of the problems of over-fitting when we have a large number of independent variables.

Advantages:

- Supports a large number of variables.

- It reduces over-fitting (it is a regularization technique).

Disadvantages:

- Feature selection is automatic, which can lead to problems such as the model ignoring important features.
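The way the L1 penalty drives some coefficients exactly to zero can be seen in the soft-thresholding operator, which is the closed-form minimizer of 0.5*(z - b)^2 + λ*|b| for a single coefficient b. The sketch below assumes that one-coefficient setting (full lasso solvers typically apply this step coordinate by coordinate); the function names are illustrative.

```python
def lasso_objective(residuals, coefs, lam):
    """LS Obj plus the L1 penalty: sum of squared residuals + lam * sum(|b|)."""
    ls_obj = sum(r ** 2 for r in residuals)
    return ls_obj + lam * sum(abs(b) for b in coefs)


def soft_threshold(z, lam):
    """Minimizer of 0.5*(z - b)**2 + lam*abs(b) over b."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0  # small inputs are set exactly to zero


for z in (3.0, 0.5, -3.0):
    print(z, soft_threshold(z, 1.0))
```

Inputs whose magnitude is below λ come out as exactly zero, which is the mechanism behind lasso's automatic feature selection.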

7. Ridge Regression

- Ridge regression is a technique for analyzing multiple regression data that suffer from multicollinearity. It accepts a small amount of bias in exchange for reduced standard errors.

- It performs L2 regularization, i.e., it adds a penalty equal to the sum of the squared coefficients.

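Equation:

$$\min_{\beta} \left( \text{LS Obj} + \lambda \sum_{j} \beta_j^2 \right)$$

where LS Obj is the least squares objective and λ controls the amount of regularization.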

Uses:

- When the data suffer from multicollinearity.

Advantages:

- Performs well compared to the ordinary least squares method on large multivariate data where the number of predictors (p) is larger than the number of observations (n).

Disadvantages:

- A ridge model does not perform feature selection.

- Ridge regression shrinks the coefficients towards zero, but it will not set any of them exactly to zero. Lasso regression is an alternative that overcomes this drawback.
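The shrink-but-never-zero behaviour is easy to see in the simplest case: one feature and no intercept, where minimizing sum((y - b*x)^2) + λ*b^2 gives b = Σxy / (Σx² + λ). The sketch below assumes that simplified setting, and the function name and data are hypothetical.

```python
def ridge_slope(xs, ys, lam):
    """Minimizer of sum((y - b*x)**2) + lam * b**2 for one feature, no intercept."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Hypothetical data lying exactly on y = 2x
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]
for lam in (0.0, 1.0, 14.0, 1000.0):
    print(lam, ridge_slope(xs, ys, lam))
```

With λ = 0 the ordinary least-squares slope is recovered; larger values of λ pull the slope towards zero, but it only reaches zero in the limit.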

8. Elastic Net Regression

Elastic net is a regularized regression technique that linearly combines the L1 and L2 penalties of the lasso and ridge methods.

Equation:

$$\min_{\beta} \left( \text{LS Obj} + \lambda \left[ \alpha \sum_{j} |\beta_j| + (1 - \alpha) \sum_{j} \beta_j^2 \right] \right)$$

Where,

- α = 0 corresponds to ridge and α = 1 to lasso. Simply put, if we plug in 0 for alpha, the penalty function reduces to the L2 (ridge) term, and if we set alpha to 1, we get the L1 (lasso) term. Therefore, we can choose an alpha value between 0 and 1 to optimize the elastic net.

Uses:

- Used to build models that capture the generalized trends in the data (its feature selection helps produce a more interpretable model).

Advantages:

- It gives us the benefits of both lasso and ridge regression. It can have better predictive power than lasso while still performing feature selection.

Disadvantages:

- The greater flexibility, while an advantage, also increases the probability of over-fitting.
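The role of alpha can be checked directly on the penalty term. The sketch below uses one common parameterization, λ*(α*L1 + (1 - α)*L2), which is an assumption here; some libraries scale the L2 part differently. The function name and coefficient values are illustrative.

```python
def elastic_net_penalty(coefs, lam, alpha):
    """Blend of the lasso (L1) and ridge (L2) penalties, weighted by alpha."""
    l1 = sum(abs(b) for b in coefs)
    l2 = sum(b * b for b in coefs)
    return lam * (alpha * l1 + (1 - alpha) * l2)


coefs = [1.0, -2.0]  # hypothetical coefficient vector
for alpha in (0.0, 0.5, 1.0):
    print(alpha, elastic_net_penalty(coefs, 0.5, alpha))
```

At alpha = 0 only the squared (ridge) term remains, and at alpha = 1 only the absolute-value (lasso) term remains.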

9. Principal Component Regression

It is a regression analysis technique based on principal component analysis (PCA).

Equation:

Uses:

- PCR is used for estimating the unknown regression coefficients in a standard linear regression model.

Advantages:

- It removes correlated features.

- It reduces over-fitting.

- It improves algorithm performance.

Disadvantages:

- The data must be standardized before PCA (using the mean and standard deviation of each feature).

- Information loss

10. Partial Least Squares Regression

It is a technique that reduces the features to a smaller set of uncorrelated components and performs least squares regression on these components instead of on the original data.

Equation:

$$Y = T_h C_h' + E_h = X W_h^* C_h' + E_h = X W_h (P_h' W_h)^{-1} C_h' + E_h$$

where Y is the matrix of the dependent variables,

X is the matrix of the explanatory variables,

T_h, C_h, W*_h, W_h and P_h are the matrices generated by the PLS algorithm, and

E_h is the matrix of the residuals.

Uses:

- When the number of feature variables is high and the feature variables are likely correlated.

Advantages:

- Able to model multiple dependent as well as multiple independent variables.

- Can handle multicollinearity among the explanatory variables.

- Handles a range of variable types: nominal, ordinal, continuous.

Disadvantages:

- The algorithm is something of a "black box": it can produce good predictions but may not generalize, especially for small samples.

11. Ordinal Regression

It is a supervised classification algorithm used for predicting ordinal variables (variables whose categories have a specific order).

Equation:

$$y^* = \mathbf{x}^{\mathsf{T}} \beta + \varepsilon$$

where y* is the exact but unobserved dependent variable, x is the vector of independent variables, ε is the error term, and β is the vector of regression coefficients, which we wish to estimate. Further, suppose that we cannot observe y* itself and can only observe the categories of response:

$$y = \begin{cases} 0 & \text{if } y^* \le \mu_1, \\ 1 & \text{if } \mu_1 < y^* \le \mu_2, \\ \vdots \\ N & \text{if } \mu_N < y^*, \end{cases}$$

where the parameters µ are the externally imposed endpoints of the observable categories. The ordered logit technique then uses the observations on y, which are a form of censored data on y*, to fit the parameter vector β.

Uses:

- When the target variable has ordered categories.

Advantages:

- Convenient for grouping the variables after ordering them.

Disadvantages:

- It assumes that the error variances are the same for all cases, which may not hold in practice.
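The link between the unobserved y* and the observed category can be sketched as a lookup against the cut points µ. The cut-point values and the function name below are hypothetical; the rule implemented is "category k when µ_k < y* ≤ µ_(k+1)", with category 0 below the first cut point.

```python
from bisect import bisect_left


def observed_category(y_star, cutpoints):
    """Return the ordered category for a latent value, given sorted cut points."""
    # bisect_left counts the cut points that are strictly smaller than y_star
    return bisect_left(cutpoints, y_star)


cutpoints = [0.0, 1.0, 2.0]  # hypothetical endpoints mu_1, mu_2, mu_3
for y_star in (-0.5, 0.0, 0.5, 1.0, 2.5):
    print(y_star, observed_category(y_star, cutpoints))
```

Only the category is observed, so many different latent values map to the same outcome, which is why the observations on y are described as censored data on y*.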

12. Poisson Regression

It is used to model count data. It builds on the Poisson distribution, which gives the probability of a given number of events happening in a fixed interval of time.

Equation:

$$P(x; \mu) = \frac{e^{-\mu} \mu^{x}}{x!}$$

Where,

The symbol "!" is a factorial.

μ is the expected number of occurrences, sometimes written as λ. It is sometimes called the event rate or rate parameter.

Uses:

- To find the average rate at which an event happens per unit, and the probability of a certain number of events happening in a period.

Advantages:

- Well suited to count outcomes, which are non-negative integers.

Disadvantages:

- It assumes that the variance equals the mean, so real data are often over-dispersed (they show more variability than the model allows).

- Results are relative rather than absolute.
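The Poisson probability of observing a given count can be computed directly from the expected number of occurrences μ. This is a minimal sketch using only the standard library; the function name and the value μ = 2 are illustrative assumptions.

```python
import math


def poisson_pmf(k, mu):
    """Probability of exactly k events when the expected count is mu."""
    return math.exp(-mu) * mu ** k / math.factorial(k)


mu = 2.0  # hypothetical event rate
for k in range(5):
    print(k, poisson_pmf(k, mu))
```

In Poisson regression the rate μ is not fixed; it is modeled as a function of the predictors, and this probability then serves as the likelihood of each observed count.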

13. Negative Binomial Regression

It models count outcomes in the same way as Poisson regression, but with an extra dispersion parameter so that the variance can exceed the mean.

Equation:

Uses:

- For count data that are over-dispersed, i.e., where the variance of the counts is larger than their mean.

Advantages:

- Handles over-dispersion.

Disadvantages:

- Negative binomial models are not recommended for small samples.

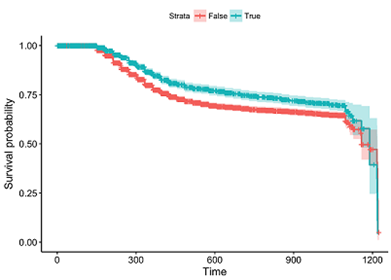

14. Cox Regression

It is a method for investigating the effect of several variables on the time a specified event takes to happen. When the outcome is an event such as death, Cox regression is known as a method for survival analysis.

Equation:

Uses:

- Used in medical research for investigating the association between the survival time of patients and one or more predictor variables.

Advantages:

- It does not require shaky assumptions about the shape of the hazard, such as a constant or linearly increasing hazard.

Disadvantages:

- Less efficient than a correctly specified parametric model.
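In the usual proportional-hazards form, which is assumed here because the article's own equation is not shown, each patient's hazard is a shared baseline hazard multiplied by exp(b1*x1 + ... + bp*xp). The sketch below shows why no assumption about the baseline is needed when comparing two patients: it cancels in the ratio. The coefficient and covariate values are hypothetical.

```python
import math


def relative_hazard(coefs, covariates):
    """exp(b . x): the factor that multiplies the baseline hazard."""
    return math.exp(sum(b * x for b, x in zip(coefs, covariates)))


def hazard_ratio(coefs, patient_a, patient_b):
    """Hazard of patient A relative to patient B; the baseline hazard cancels."""
    return relative_hazard(coefs, patient_a) / relative_hazard(coefs, patient_b)


# Hypothetical model: one binary covariate (treated = 1) with coefficient log(2)
coefs = [math.log(2.0)]
print(hazard_ratio(coefs, [1], [0]))
```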

15. Tobit Regression

It is a regression model in which the observed range of the dependent variable is censored in some way.

Equation:

Uses:

- Used when a continuous dependent variable needs to be regressed but its observed values are censored (piled up at a limit) in one direction.

Advantages:

- Gives consistent estimates where plain OLS on the censored data would not.

Disadvantages:

- Standard errors are more complex to calculate than in OLS regression.
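Censoring can be illustrated with a latent variable that is only observed above a limit. The sketch below assumes left-censoring at zero, a common Tobit setup but an assumption here, and uses hypothetical latent values; it shows how the observed values pile up at the limit and distort a simple average.

```python
def censor(y_star, lower=0.0):
    """Observed value when everything below `lower` is recorded as `lower`."""
    return max(lower, y_star)


latent = [-1.5, -0.2, 0.3, 2.0]  # hypothetical uncensored values
observed = [censor(v) for v in latent]
print(observed)
print(sum(latent) / len(latent), sum(observed) / len(observed))
```

The mean of the observed values is larger than the mean of the latent values, which is the kind of bias a Tobit model is designed to account for.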

Translated from: https://medium.com/@ashwiniashtekar/quick-review-of-various-regression-techniques-e7bddbfcc88d
