Building the corpus

At the time I publish this, we are entering the 9th month since COVID-19 froze the world. Since early January we have all experienced it differently: some of us were lucky and got locked up in our houses, able to do our work remotely and live relatively unchanged lives; some of us were left without such luxury; and some, sadly, passed away.

Coronaviruses have been around for decades, but few, if any, have so far been as deadly and as easily spread as COVID-19. Earlier this year, the Allen Institute for AI (AI2) and a consortium of research institutes, along with the White House, curated a corpus of scientific papers on coronaviruses published since the 19th century and offered a Kaggle competition to analyze it and answer questions about different aspects of the virus, such as how it spreads or how it affects living organisms.

And while I did not compete in the challenge itself, this series is an attempt to improve our understanding of coronaviruses by building a deep learning BERT-EM-SOM model (a BERT EMbedding layer tied to a Self-Organizing Map). As mentioned in the post that announced this series, in this installment we will focus on understanding the corpus of papers and will clean the metadata so we can work from a clean base.

Data

The data can be downloaded from the AI2 S3 repository, which is refreshed almost daily. The code snippet below downloads the data and extracts it into the data directory.

    import os
    import sys
    import tarfile
    import urllib.request

    from tqdm import tqdm

    date = '2020-08-24'
    url = f'https://ai2-semanticscholar-cord-19.s3-us-west-2.amazonaws.com/historical_releases/cord-19_{date}.tar.gz'
    data_dir = 'data'
    tar_file = data_dir + '/cord-19.tar.gz'
    extract = False  # set to True to unpack the archive after downloading

    if not os.path.exists(data_dir):
        os.mkdir(data_dir)

    # download the archive in chunks, showing a progress bar
    if not os.path.exists(tar_file):
        with open(tar_file, 'wb') as file:
            with urllib.request.urlopen(url) as resp:
                length = int(resp.getheader('content-length'))
                blocksize = max(4096, length // 100)
                with tqdm(total=length, file=sys.stdout) as pbar:
                    while True:
                        buff = resp.read(blocksize)
                        if not buff:
                            break
                        file.write(buff)
                        pbar.update(len(buff))

    # unpack the release, then the nested archive with the parsed documents
    if extract:
        tf = tarfile.open(tar_file)
        tf.extractall(path=data_dir)
        doc_parses = tarfile.open(f'{data_dir}/{date}/document_parses.tar.gz')
        doc_parses.extractall(f'{data_dir}/{date}')

In this example we’re using the dataset published on 8/24/2020. Reading the metadata from this day shows that there should be 233,539 papers discussing topics related to coronaviruses. We will test the hypothesis that the dataset is clean, i.e. that all 233,539 papers are found in the JSON files that are part of the archive, that we do not have any duplicates, and that there is no missing information.

But first things first: let’s get familiar with the basics of the dataset.

Basic data exploration

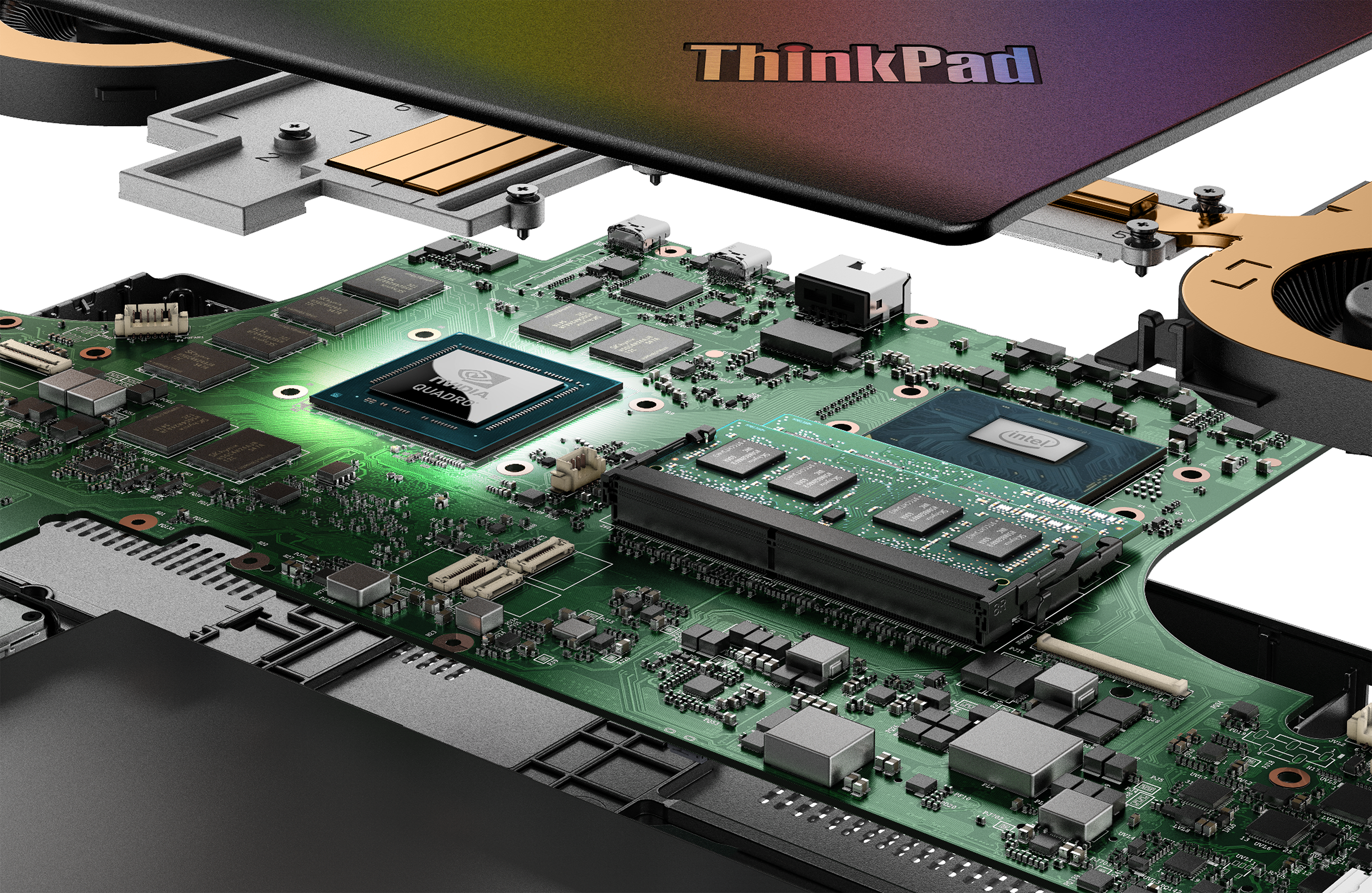

In the remainder of this story we will routinely switch between native cuDF from NVIDIA RAPIDS and SQL using BlazingSQL. Most of the time I am using a TITAN RTX card. However, I was lucky to still be in possession of the loaner Lenovo P53 Mobile Workstation with an NVIDIA Quadro RTX 5000 GPU, so when I was recently camping with my kids (properly socially distanced and wearing masks!) I was still able to work on this story. It is another fantastic piece of hardware that I highly recommend to all GPU enthusiasts who want to use RAPIDS, BlazingSQL or CUDA while traveling or presenting work to a client or a friend. Just look at this beauty!

Full disclosure: this article is not sponsored, nor am I being paid by either NVIDIA or Lenovo. I am simply testing the gear they were kind enough to loan me for this research, and for this I am super grateful!

All the code in this and my other stories can be found on GitHub.

There are 19 columns in the metadata.csv file; you can see an example below.

Most of the columns are IDs, such as cord_uid or doi. The ones that are more interesting to us, and that we will focus on, are:

title, abstract and authors, which we will use to see if we have any duplicates

publish_time, which shows when the article was published

source_x, which shows the source the article originated from

journal, which lists the journal the article was published in

pdf_json_files, which shows the location of the file with the body text of the paper; we will use this to see if we have any missing files so we can exclude them.
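
To make the column selection concrete, here is a minimal CPU-only sketch that uses Python’s standard csv module instead of cuDF. The two sample rows, the SAMPLE_CSV string and the select_columns helper are made up for illustration; only the column names come from the dataset.

```python
import csv
import io

# Hypothetical two-row sample mimicking a few metadata.csv columns (values are made up).
SAMPLE_CSV = """cord_uid,source_x,title,doi,abstract,publish_time,authors,journal,pdf_json_files
aaa11111,PMC,Paper one,10.1000/x1,Some abstract,2003-05-01,"Doe, J.",J Virol,document_parses/pdf_json/a.json
bbb22222,WHO,Paper two,,,2020,,,
"""

COLUMNS_OF_INTEREST = [
    "title", "abstract", "authors", "publish_time",
    "source_x", "journal", "pdf_json_files",
]


def select_columns(csv_text, columns):
    """Return one dict per row, keeping only the requested columns.

    Empty strings are turned into None so that missing values are explicit.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return [{col: (row[col] or None) for col in columns} for row in reader]


rows = select_columns(SAMPLE_CSV, COLUMNS_OF_INTEREST)
for row in rows:
    print(row["source_x"], row["title"], row["pdf_json_files"])
```

Turning empty strings into None up front keeps the later checks for missing values simple.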

First, let’s check which sources the AI2 researchers most commonly collected these articles from.

    (
        metadata
        .groupby(by='source_x')
        .agg({'cord_uid': 'count'})
        .sort_values(by='cord_uid', ascending=False)
        .head(10)
    )

The above snippet simply takes the metadata cuDF DataFrame, groups it by the source_x column, counts the rows in each group, and prints out a table with the 10 most common sources.

So, most often the source was WHO, followed by Medline and PMC. Next we see articles distributed through Elsevier, MedRxiv, ArXiv and BioRxiv. These account for the majority of the papers in the corpus.
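
If you do not have a GPU at hand, the same group, count, sort and take-the-top-n logic can be sketched in plain Python. The source labels below are made up and top_sources is a hypothetical helper, not part of cuDF.

```python
from collections import Counter

# Made-up source labels standing in for the source_x column.
source_x = ["WHO", "PMC", "WHO", "Medline", "WHO", "PMC", "ArXiv"]


def top_sources(values, n=10):
    """Count rows per source and return the n most common, largest first."""
    return Counter(values).most_common(n)


for source, count in top_sources(source_x, n=3):
    print(f"{source:10s}{count}")
```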

As an aside, the same table could be created with SQL using BlazingSQL.

    bc.sql('''
        SELECT source_x
            , COUNT(*) AS cnt
        FROM metadata
        GROUP BY source_x
        ORDER BY cnt DESC
        LIMIT 10
    ''')

This shows the beauty of the RAPIDS ecosystem: if you are familiar with pandas or SQL, you have all the tools you need to unleash the enormous power of GPUs on your data and not even think twice (as you won’t have time to… see this: https://www.linkedin.com/posts/tomekd_blazingsql-notebooks-private-preview-activity-6710308422451126272-wXCb!).
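
The SQL itself is plain enough to run on other engines too. As a rough sketch, here is the same query running against an in-memory SQLite database from Python’s standard library, used as a CPU stand-in for the BlazingSQL context. The rows are made up, and SQLite is my substitution for illustration, not what the article runs on.

```python
import sqlite3

# In-memory SQLite database as a CPU stand-in for the BlazingSQL context.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE metadata (cord_uid TEXT, source_x TEXT)")
conn.executemany(
    "INSERT INTO metadata VALUES (?, ?)",
    [  # made-up rows
        ("a1", "WHO"), ("a2", "WHO"), ("a3", "PMC"),
        ("a4", "Medline"), ("a5", "WHO"), ("a6", "PMC"),
    ],
)

top_sources_sql = conn.execute("""
    SELECT source_x
        , COUNT(*) AS cnt
    FROM metadata
    GROUP BY source_x
    ORDER BY cnt DESC
    LIMIT 10
""").fetchall()

print(top_sources_sql)
```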

Let’s now check where the papers were published.

    bc.sql('''
        SELECT journal
            , COUNT(*) AS CNT
        FROM metadata
        WHERE journal IS NOT NULL
        GROUP BY journal
        ORDER BY CNT DESC
        LIMIT 10
    ''')

So, many of the articles were pre-published on bioRxiv, but we also see a lot of them in the BMJ, PLoS One, Journal of Virology, Lancet and Nature. In other words, as expected.

Alright, how about time? Let’s see when the papers were published.

    # the year is the first run of four digits in publish_time
    metadata['year_published'] = metadata.publish_time.str.extract(r'([0-9]{4})')[0].astype('int16')

    (
        metadata
        .query('year_published < 2020')
        .groupby(by='year_published')
        .agg({'source_x': 'count'})
        .to_pandas()
        .plot(kind='bar', figsize=(18,9))
    )

The above snippet produces the following chart.

Interestingly, but not surprisingly, there is an uptick in the number of papers published around 2002/2003, which is most likely related to the SARS pandemic.
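
The year extraction and the per-year count can also be sketched without a GPU. The snippet below is a minimal illustration on made-up publish_time values; it assumes the field holds either a full date or just a year, and extract_year is a hypothetical helper.

```python
import re
from collections import Counter

YEAR_RE = re.compile(r"[0-9]{4}")


def extract_year(publish_time):
    """Return the first four-digit run in publish_time as an int, or None."""
    if not publish_time:
        return None
    match = YEAR_RE.search(publish_time)
    return int(match.group()) if match else None


# Made-up values: full dates, a bare year, and one missing entry.
publish_times = ["2003-05-01", "2002", "2003-11-23", "2020-03-15", None, "2019-12-31"]

years = [extract_year(p) for p in publish_times]

# Same filter as in the chart: leave out 2020 so it does not dwarf the rest.
per_year = Counter(y for y in years if y is not None and y < 2020)

for year in sorted(per_year):
    print(year, per_year[year])
```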

You may wonder why I excluded 2020… Well, I initially had it on the chart but it was literally dwarfing the rest of the time series. I think the chart below should explain why.

The total number of papers published and reported as part of this corpus from 1816 through 2019 was around 90 thousand. In the almost 9 months of 2020, researchers around the world published over 140 thousand papers and other information on coronaviruses. Hence I decided that excluding the count of reported papers for 2020 would make the time series chart above more legible.

Cleanup

It is now time for us to have a look at the data itself. The first thing I like to do with any dataset is check for missing observations.

Missing observations

Every dataset has some. These can be simple omissions or telemetry issues (which we can then either impute or remove), or they can be what I call valid missing values, i.e. values that simply should not be there. An example of a valid missing observation in this particular dataset would be an arxiv_id when the paper was not published on ArXiv.

It is quite easy to create a table with the percentage of missing values using SQL. Here’s how I do it.

    cols = list(metadata.columns)[2:-3]

    # one 0/1 flag per column: 1 when the value is missing
    query_missing = 'SELECT '
    query_missing += '\n ,'.join([f'CASE WHEN {col} IS NULL THEN 1 ELSE 0 END AS {col}_miss' for col in cols])
    query_missing += '\nFROM metadata'

    # count the rows per flag value, one SELECT per column
    query_unions = '\nUNION ALL \n '.join([f"SELECT '{col}_miss' AS miss_flag, {col}_miss AS FLAG, COUNT(*) AS CNT FROM missing_flags GROUP BY {col}_miss" for col in cols])

    bc.create_table('missing_flags', bc.sql(query_missing))
    bc.create_table('missing_summary', bc.sql(query_unions))

    row_cnt = float(len(metadata))

    bc.sql(f'''
        SELECT *
            , CNT / {row_cnt} * 100.0 AS MISS_PCNT
        FROM missing_summary
        WHERE FLAG = 1
        ORDER BY MISS_PCNT DESC
    ''')

In lieu of a longer explanation of what’s happening here: I am using the two SQL queries built in the query_missing and query_unions variables above. The first of these, if you were to print it out, would read as follows.

    SELECT CASE WHEN source_x IS NULL THEN 1 ELSE 0 END AS source_x_miss
     ,CASE WHEN title IS NULL THEN 1 ELSE 0 END AS title_miss
     ,CASE WHEN doi IS NULL THEN 1 ELSE 0 END AS doi_miss
     ,CASE WHEN pmcid IS NULL THEN 1 ELSE 0 END AS pmcid_miss
     ,CASE WHEN pubmed_id IS NULL THEN 1 ELSE 0 END AS pubmed_id_miss
     ,CASE WHEN license IS NULL THEN 1 ELSE 0 END AS license_miss
     ,CASE WHEN abstract IS NULL THEN 1 ELSE 0 END AS abstract_miss
     ,CASE WHEN publish_time IS NULL THEN 1 ELSE 0 END AS publish_time_miss
     ,CASE WHEN authors IS NULL THEN 1 ELSE 0 END AS authors_miss
     ,CASE WHEN journal IS NULL THEN 1 ELSE 0 END AS journal_miss
     ,CASE WHEN mag_id IS NULL THEN 1 ELSE 0 END AS mag_id_miss
     ,CASE WHEN who_covidence_id IS NULL THEN 1 ELSE 0 END AS who_covidence_id_miss
     ,CASE WHEN arxiv_id IS NULL THEN 1 ELSE 0 END AS arxiv_id_miss
     ,CASE WHEN pdf_json_files IS NULL THEN 1 ELSE 0 END AS pdf_json_files_miss
     ,CASE WHEN pmc_json_files IS NULL THEN 1 ELSE 0 END AS pmc_json_files_miss
     ,CASE WHEN url IS NULL THEN 1 ELSE 0 END AS url_miss
    FROM metadata

The final SQL query simply returns the list of columns with their corresponding missing values percentage.
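
For a quick feel for the result, here is a compact CPU-only sketch of the same idea, again using SQLite from the standard library as a stand-in for BlazingSQL. It folds the flag and the summary step into a single SUM(CASE ...) per column, which is a simplification of mine rather than the two-table approach above; the table contents are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE metadata (title TEXT, abstract TEXT, pdf_json_files TEXT)")
conn.executemany(
    "INSERT INTO metadata VALUES (?, ?, ?)",
    [  # made-up rows; None becomes SQL NULL
        ("Paper one", "Abstract one", "document_parses/pdf_json/a.json"),
        ("Paper two", None, None),
        ("Paper three", "Abstract three", None),
        ("Paper four", None, None),
    ],
)

cols = ["title", "abstract", "pdf_json_files"]

# One SELECT per column, stitched together with UNION ALL.
query = "\nUNION ALL\n".join(
    f"SELECT '{col}' AS col_name, "
    f"100.0 * SUM(CASE WHEN {col} IS NULL THEN 1 ELSE 0 END) / COUNT(*) AS miss_pcnt "
    f"FROM metadata"
    for col in cols
)
query = f"SELECT * FROM (\n{query}\n) ORDER BY miss_pcnt DESC"

missing_pcnt = conn.execute(query).fetchall()
for col_name, pcnt in missing_pcnt:
    print(f"{col_name:16s}{pcnt:6.1f}%")
```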

As expected, many of the ID columns have a lot of missing observations, but we won’t be using these much later in our analysis, so no worries. However, the pdf_json_files column has almost 60% missing values… That is definitely a worry, since we need to be able to find the body of the paper. Thus, we should remove the rows where it is missing.

    bc.create_table('metadata_no_missing', bc.sql('''
        SELECT * FROM metadata WHERE pdf_json_files IS NOT NULL
    '''))

    bc.sql('SELECT COUNT(*) FROM metadata_no_missing')

After the above operation we end up with roughly 94 thousand papers in the corpus. Rerunning the previous query yields the following breakdown of the percentage of missing values per column.

We are still missing some titles, authors and abstracts, but that’s fine: this should not hinder our approach. What is more important is that we can find the file and later link it with the cord_uid to return the relevant paper.

Duplicates

Next, we will turn our eye to finding duplicates, another common problem with any dataset (I have yet to find a raw dataset that had none). Querying the metadata table, I get the following number of duplicated records in each column.
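
The query behind these counts is not shown here, so the following is only a sketch of one way to get such numbers: for each column, subtract the number of distinct values from the number of non-missing values. It runs on SQLite with made-up rows, and both the engine and the formula are my assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE metadata (title TEXT, abstract TEXT, pdf_json_files TEXT)")
conn.executemany(
    "INSERT INTO metadata VALUES (?, ?, ?)",
    [  # made-up rows
        ("Paper one", "Abstract one", "a.json"),
        ("Paper one", "Abstract one", "b.json"),
        ("Paper two", "Abstract two", "c.json"),
        ("Paper two", None, "c.json"),
        ("Paper three", None, "d.json"),
    ],
)

cols = ["title", "abstract", "pdf_json_files"]

# COUNT(col) skips NULLs, so missing values are not counted as duplicates.
select_list = ", ".join(
    f"COUNT({col}) - COUNT(DISTINCT {col}) AS {col}_dups" for col in cols
)
row = conn.execute(f"SELECT {select_list} FROM metadata").fetchone()
duplicates = dict(zip(cols, row))
print(duplicates)
```

Counted this way, a value that appears three times contributes two duplicates, which may differ from how the numbers in the article were tallied.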

So, we have over 1,200 duplicated titles, 282 duplicated abstracts and 6 duplicated links in the pdf_json_files column. Let’s start with the links.

Looking at the table above, we can clearly tell that the first 3 papers are not duplicates: they simply point, mistakenly, to the same JSON file. The remaining files, however, are definitely duplicates. Since there are only 6 duplicates in total in this column, we will drop all of them using the .dropna() functionality from cuDF.
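
The code for this step is not shown, so here is only a rough, CPU-only sketch of what "drop all of them" could look like: every row whose pdf_json_files value occurs more than once is removed, and none of the copies is kept. The records and the drop_all_duplicated helper are hypothetical, and this is plain Python rather than the cuDF call mentioned above.

```python
from collections import Counter

# Made-up records; the two rows pointing at "b.json" share the same file.
records = [
    {"cord_uid": "u1", "pdf_json_files": "a.json"},
    {"cord_uid": "u2", "pdf_json_files": "b.json"},
    {"cord_uid": "u3", "pdf_json_files": "b.json"},
    {"cord_uid": "u4", "pdf_json_files": "c.json"},
]


def drop_all_duplicated(rows, key):
    """Drop every row whose value under `key` occurs more than once (keep none of them)."""
    counts = Counter(row[key] for row in rows)
    return [row for row in rows if counts[row[key]] == 1]


unique_file_rows = drop_all_duplicated(records, "pdf_json_files")
print([row["cord_uid"] for row in unique_file_rows])
```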

Next, let’s look at the duplicated titles. The query below gives us a quick list of the duplicated rows; it is followed by the top 10 records with a duplicated title.

    bc.sql('''
        SELECT A.title
            , A.doi
            , A.abstract
            , A.authors
            , A.journal
            , A.year_published
        FROM metadata_json_clean AS A
        INNER JOIN (
            SELECT title FROM metadata_json_clean GROUP BY title HAVING COUNT(*) > 1
        ) AS B
            ON A.title = B.title
        ORDER BY A.title
        LIMIT 10
    ''')

You can see that these are truly duplicated records: they differ in the doi identifier, but the titles, abstracts and authors mostly match. However, since there are over 1,200 duplicated records, we would not like to drop all of them, so we will use the .drop_duplicates() method from cuDF to retain one record from each group of duplicates.
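
Retaining one record per duplicated title can be sketched in plain Python as follows. Keeping the first occurrence and leaving rows without a title untouched are choices I made for this illustration; the records and the keep_first_per_title helper are made up.

```python
def keep_first_per_title(rows):
    """Keep the first row seen for each title; rows without a title are kept as-is."""
    seen = set()
    kept = []
    for row in rows:
        title = row["title"]
        if title is not None:
            if title in seen:
                continue  # a later copy of a title we already kept
            seen.add(title)
        kept.append(row)
    return kept


# Made-up records: same title under two different DOIs, plus two untitled rows.
records = [
    {"title": "Paper one", "doi": "10.1000/a"},
    {"title": "Paper one", "doi": "10.1000/b"},
    {"title": "Paper two", "doi": "10.1000/c"},
    {"title": None, "doi": "10.1000/d"},
    {"title": None, "doi": "10.1000/e"},
]

deduped = keep_first_per_title(records)
print([row["doi"] for row in deduped])
```

Skipping the untitled rows avoids collapsing unrelated papers that merely share a missing title.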

Missing JSON files in the data folder

Final check: let’s see if we can really find, physically in the data folder, all the files listed in the metadata.csv file. To be as efficient as possible, we will list all the files in the data/2020-08-24/document_parses/pdf_json directory, create a cuDF/BlazingSQL table from them, and then join it with the de-duped dataset we already have.

    from os import listdir
    from os.path import isfile, join

    # list the JSON files that are physically present on disk
    pdf_json = f'{data_dir}/{date}/document_parses/pdf_json'
    files = ['document_parses/pdf_json/' + f for f in listdir(pdf_json) if isfile(join(pdf_json, f))]

    bc.create_table('files', cudf.DataFrame(files, columns=['pdf_json_files']))

    # a full outer join keeps the paths found on only one of the two sides
    bc.create_table('pdf_files_meta', bc.sql('''
        SELECT A.pdf_json_files AS meta_pdf_json
            , B.pdf_json_files AS folder_pdf_json
            , CASE WHEN A.pdf_json_files IS NULL THEN 1 ELSE 0 END meta_pdf_json_missing
            , CASE WHEN B.pdf_json_files IS NULL THEN 1 ELSE 0 END folder_pdf_json_missing
        FROM metadata_title_clean AS A
        FULL OUTER JOIN files AS B
            ON A.pdf_json_files = B.pdf_json_files
    '''))

    bc.sql('''
        SELECT meta_pdf_json_missing
            , folder_pdf_json_missing
            , COUNT(*) AS CNT
        FROM pdf_files_meta
        GROUP BY meta_pdf_json_missing
            , folder_pdf_json_missing
    ''')

So, quite surprisingly, we have 12.5k files that are linked in the metadata.csv file but cannot be found on disk, and 5k files that are present on disk but are not referenced in the metadata.csv file. In this case, I decided to drop all the missing files, thus keeping only the 87,438 files I can find both in the metadata.csv file and on disk.
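
The three groups that come out of the full outer join map directly onto set operations. Here is a minimal sketch with made-up paths; compare_listings is a hypothetical helper, and plain Python sets stand in for the cuDF/BlazingSQL tables.

```python
def compare_listings(meta_paths, disk_paths):
    """Split paths into those only in the metadata, only on disk, and in both."""
    meta, disk = set(meta_paths), set(disk_paths)
    return {
        "meta_only": sorted(meta - disk),  # linked in metadata.csv, not on disk
        "disk_only": sorted(disk - meta),  # on disk, not referenced in metadata.csv
        "both": sorted(meta & disk),       # the files we keep
    }


# Made-up paths for illustration.
meta_paths = [
    "document_parses/pdf_json/a.json",
    "document_parses/pdf_json/b.json",
    "document_parses/pdf_json/c.json",
]
disk_paths = [
    "document_parses/pdf_json/b.json",
    "document_parses/pdf_json/c.json",
    "document_parses/pdf_json/d.json",
]

listing = compare_listings(meta_paths, disk_paths)
for group, paths in listing.items():
    print(group, len(paths))
```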

Guilty until proven innocent

This concludes this part. As I alluded to in the introduction to this series, I treat every dataset I am given with suspicion until I prove to myself that it is clean enough to be used for analysis or for building an ML or DL model.

In the next installment we will be looking at reading the files from the disk and using the subword_tokenizer from RAPIDS to tokenize the text and get ready for training BERT in part 3.

Also, if you want to try any code presented in this series go to https://app.blazingsql.com/ and launch a free cluster!
