A Comprehensive Guide

This is a comprehensive tutorial on using Spark's distributed machine learning framework to build a scalable ML data pipeline. I will cover the basic machine learning algorithms implemented in the Spark MLlib library, and throughout this tutorial I will use PySpark in a Python environment.

Machine learning is becoming popular for solving real-world problems in almost every business domain. It helps solve problems using data that is often unstructured, noisy, and huge in volume. As data sizes and the number of data sources grow, solving machine learning problems with standard techniques poses a big challenge. Spark is a distributed processing engine that generalizes the MapReduce programming model to solve big data processing problems.

The Spark framework has its own machine learning module called MLlib. In this article, I will use PySpark and Spark MLlib to demonstrate machine learning with distributed processing. Readers will learn the following concepts through real examples:

- Setting up Spark in Google Colaboratory
- Machine learning basic concepts
- Preprocessing and data transformation using Spark
- Clustering with PySpark
- Classification with PySpark
- Regression methods with PySpark

A working Google Colab notebook is provided to reproduce the results.

Because this hands-on tutorial covers transformations, clustering, classification, and regression with PySpark in a single session, it is longer than my previous articles. The benefit is that you can go through the basic concepts and their implementation in one go.

What is Apache Spark?

According to Apache Spark and Delta Lake Under the Hood:

Apache Spark is a unified computing engine and a set of libraries for parallel data processing on computer clusters. As of the time of this writing, Spark is the most actively developed open source engine for this task, making it the de facto tool for any developer or data scientist interested in big data. Spark supports multiple widely used programming languages (Python, Java, Scala and R), includes libraries for diverse tasks ranging from SQL to streaming and machine learning, and runs anywhere from a laptop to a cluster of thousands of servers. This makes it an easy system to start with and scale up to big data processing at incredibly large scale.

Setting up Spark 3.0.1 in Google Colaboratory

As a first step, I configure the Google Colab runtime with a Spark installation. For details, readers may refer to my article Getting Started Spark 3.0.0 in Google Colab on Medium.

We will install the following programs: Java 8 (OpenJDK), Spark 3.0.1 prebuilt for Hadoop 3.2, and the findspark Python package.

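You can install Spark 3.0.1, the version used in this tutorial, with the commands below. To install a newer release, change the version in the download URL and paths accordingly.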

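```
# Run the commands below in a Colab cell
!apt-get install openjdk-8-jdk-headless -qq > /dev/null
# If this mirror no longer hosts 3.0.1, older releases are kept at https://archive.apache.org/dist/spark/
!wget -q http://apache.osuosl.org/spark/spark-3.0.1/spark-3.0.1-bin-hadoop3.2.tgz
!tar xf spark-3.0.1-bin-hadoop3.2.tgz
!pip install -q findspark
```

Environment Variables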

After installing Spark and Java, set the environment variables that point to their installation directories.

```python
import os

# Point to the Java and Spark installations created above
os.environ["JAVA_HOME"] = "/usr/lib/jvm/java-8-openjdk-amd64"
os.environ["SPARK_HOME"] = "/content/spark-3.0.1-bin-hadoop3.2"
```

Spark Installation Test

Let us test the Spark installation in our Google Colab environment.

```python
import findspark
findspark.init()

from pyspark.sql import Row, SparkSession

# Start a local Spark session that uses all available cores
spark = SparkSession.builder.master("local[*]").getOrCreate()

# Test Spark with a small DataFrame; Row objects avoid the
# "inferring schema from dict is deprecated" warning
df = spark.createDataFrame([Row(hello="world") for _ in range(1000)])
df.show(3, False)
```

Output:

```text
+-----+
|hello|
+-----+
|world|
|world|
|world|
+-----+
only showing top 3 rows
```

```python
# Make sure of the PySpark version
import pyspark
print(pyspark.__version__)
```

Output:

```text
3.0.1
```

Machine Learning

Once we have set up Spark in Google Colab and made sure it is running the correct version (3.0.1 in this case), we can start exploring the machine learning API built on top of Spark. PySpark is the Python API for Spark. For this tutorial, I assume readers have a basic understanding of machine learning and have used scikit-learn for model building and training. Spark MLlib follows a similar pattern: an estimator's fit() method trains a model, and the fitted model's transform() method plays the role of predict() by adding prediction columns to a DataFrame.

To make the results reproducible, I have uploaded the data to my GitHub, where it can be accessed easily.

Learn by doing: use the Colab notebook to run it yourself.

Data Preparation and Transformations in Spark

This section covers the basic steps involved in transforming input feature data into the format that machine learning algorithms accept. We will cover the transformations that come with the Spark ML library. To learn more about the transformations available in Spark 3.0, see the "Extracting, transforming and selecting features" page of the MLlib documentation.

Normalize Numeric Data

MinMaxScaler is one of the most commonly used classes in machine learning libraries. It rescales each feature to a fixed range, [0, 1] by default.
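Here is a minimal pure-Python sketch of the rescaling MinMaxScaler applies to each feature. It assumes the default target range [0, 1] and uses x' = (x - min) / (max - min). For a constant feature (max == min), Spark's documentation maps the value to the middle of the target range, and the sketch does the same. The helper name min_max_scale is hypothetical and is not part of Spark.

```python
def min_max_scale(rows, new_min=0.0, new_max=1.0):
    """Rescale each column of `rows` (a list of equal-length lists) to [new_min, new_max]."""
    columns = list(zip(*rows))
    mins = [min(c) for c in columns]
    maxs = [max(c) for c in columns]
    scaled = []
    for row in rows:
        out = []
        for x, lo, hi in zip(row, mins, maxs):
            if hi == lo:
                # Constant feature: map it to the middle of the target range
                out.append(0.5 * (new_max + new_min))
            else:
                out.append((x - lo) / (hi - lo) * (new_max - new_min) + new_min)
        scaled.append(out)
    return scaled


data = [[10.0, 10000.0, 1.0], [20.0, 30000.0, 2.0], [30.0, 40000.0, 3.0]]
for row in min_max_scale(data):
    print(row)
```

On the same dummy data used below, the sketch yields [0.0, 0.0, 0.0], [0.5, 0.6666666666666666, 0.5] and [1.0, 1.0, 1.0]. These match the sfeatures column that Spark prints.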

```python
from pyspark.ml.feature import MinMaxScaler
from pyspark.ml.linalg import Vectors

# Create some dummy feature data
features_df = spark.createDataFrame([
    (1, Vectors.dense([10.0, 10000.0, 1.0]),),
    (2, Vectors.dense([20.0, 30000.0, 2.0]),),
    (3, Vectors.dense([30.0, 40000.0, 3.0]),),
], ["id", "features"])

features_df.show()
```

Output:

```text
+---+------------------+
| id|          features|
+---+------------------+
|  1|[10.0,10000.0,1.0]|
|  2|[20.0,30000.0,2.0]|
|  3|[30.0,40000.0,3.0]|
+---+------------------+
```

```python
# Apply the MinMaxScaler transformation
features_scaler = MinMaxScaler(inputCol="features", outputCol="sfeatures")
smodel = features_scaler.fit(features_df)
sfeatures_df = smodel.transform(features_df)

sfeatures_df.show()
```

Output:

```text
+---+------------------+--------------------+
| id|          features|           sfeatures|
+---+------------------+--------------------+
|  1|[10.0,10000.0,1.0]|           (3,[],[])|
|  2|[20.0,30000.0,2.0]|[0.5,0.6666666666...|
|  3|[30.0,40000.0,3.0]|       [1.0,1.0,1.0]|
+---+------------------+--------------------+
```

The first row is displayed as (3,[],[]). This is Spark's sparse notation for a length-3 vector whose entries are all 0, because each feature's minimum is mapped to 0.

Standardize Numeric Data

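StandardScaler is another well-known transformer in machine learning libraries. It standardizes each feature by subtracting the feature's mean (withMean=True) and dividing by its standard deviation (withStd=True), which gives each feature zero mean and unit variance. This does not confine the values to [-1, 1] or make the data bell-shaped; it only shifts and rescales each feature. Note that withMean defaults to False in Spark, so it is set explicitly below.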
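Here is a minimal pure-Python sketch of the standardization formula z = (x - mean) / std. It uses the sample (n - 1) standard deviation, which Spark's documentation says StandardScaler uses. The helper name standardize is hypothetical. On the dummy data below, the second feature has mean 80000/3 and sample standard deviation 10000 * sqrt(7/3), so its standardized values are -5/sqrt(21), 1/sqrt(21) and 4/sqrt(21).

```python
import statistics


def standardize(rows, with_mean=True, with_std=True):
    """Standardize each column of `rows` (a list of equal-length lists)."""
    columns = list(zip(*rows))
    means = [statistics.mean(c) for c in columns]
    # Sample (n - 1) standard deviation
    stds = [statistics.stdev(c) for c in columns]
    result = []
    for row in rows:
        out = []
        for x, mu, sd in zip(row, means, stds):
            value = x - mu if with_mean else x
            if with_std:
                # Guard against division by zero for constant features
                value = value / sd if sd != 0 else 0.0
            out.append(value)
        result.append(out)
    return result


data = [[10.0, 10000.0, 1.0], [20.0, 30000.0, 2.0], [30.0, 40000.0, 3.0]]
for row in standardize(data):
    print([round(v, 4) for v in row])
```

The first and third features become -1, 0 and 1 exactly, and the second becomes about -1.0911, 0.2182 and 0.8729. These values agree with the sfeatures column in the Spark output below.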

```python
from pyspark.ml.feature import StandardScaler
from pyspark.ml.linalg import Vectors

# Create the dummy data
features_df = spark.createDataFrame([
    (1, Vectors.dense([10.0, 10000.0, 1.0]),),
    (2, Vectors.dense([20.0, 30000.0, 2.0]),),
    (3, Vectors.dense([30.0, 40000.0, 3.0]),),
], ["id", "features"])

# Apply the StandardScaler model (center and scale each feature)
features_stand_scaler = StandardScaler(inputCol="features", outputCol="sfeatures", withStd=True, withMean=True)
stmodel = features_stand_scaler.fit(features_df)
stand_sfeatures_df = stmodel.transform(features_df)

stand_sfeatures_df.show()
```

Output:

```text
+---+------------------+--------------------+
| id|          features|           sfeatures|
+---+------------------+--------------------+
|  1|[10.0,10000.0,1.0]|[-1.0,-1.09108945...|
|  2|[20.0,30000.0,2.0]|[0.0,0.2182178902...|
|  3|[30.0,40000.0,3.0]|[1.0,0.8728715609...|
+---+------------------+--------------------+
```

Bucketize Numeric Data

Real data sets come with features on very different ranges, and it is sometimes advisable to transform the data into well-defined buckets before feeding it into machine learning algorithms.

The Bucketizer class is handy for transforming a column of continuous values into bucket indices, given a list of split points.
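The bucket rule is easy to get wrong at the boundaries, so here is a minimal pure-Python sketch of it. For sorted splits, bucket i covers [splits[i], splits[i+1]), and the last bucket also includes its upper bound. Values outside the splits are rejected here. Spark also raises an error by default for invalid values, and its handleInvalid parameter controls how NaN values are treated. The helper name bucketize is hypothetical.

```python
import bisect
import math


def bucketize(value, splits):
    """Return the bucket index of `value` for sorted split points.

    Bucket i is [splits[i], splits[i+1]); the last bucket also includes its upper bound.
    """
    if not splits[0] <= value <= splits[-1]:
        raise ValueError(f"{value} is outside the range of the splits")
    if value == splits[-1]:
        return float(len(splits) - 2)
    return float(bisect.bisect_right(splits, value) - 1)


splits = [-math.inf, -10, 0.0, 10, math.inf]
for v in [-800.0, -10.5, -1.7, 0.0, 8.2, 90.1]:
    print(v, bucketize(v, splits))
```

Because the buckets are closed on the left, 0.0 falls into bucket 2 ([0, 10)) and not bucket 1. The bfeatures column in the Spark output below shows the same result.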

```python
from pyspark.ml.feature import Bucketizer

# Define the splits for buckets: (-inf, -10), [-10, 0), [0, 10), [10, inf)
splits = [-float("inf"), -10, 0.0, 10, float("inf")]
b_data = [(-800.0,), (-10.5,), (-1.7,), (0.0,), (8.2,), (90.1,)]
b_df = spark.createDataFrame(b_data, ["features"])

b_df.show()
```

Output:

```text
+--------+
|features|
+--------+
|  -800.0|
|   -10.5|
|    -1.7|
|     0.0|
|     8.2|
|    90.1|
+--------+
```

```python
# Transform the data into buckets
bucketizer = Bucketizer(splits=splits, inputCol="features", outputCol="bfeatures")
bucketed_df = bucketizer.transform(b_df)

bucketed_df.show()
```

Output:

```text
+--------+---------+
|features|bfeatures|
+--------+---------+
|  -800.0|      0.0|
|   -10.5|      0.0|
|    -1.7|      1.0|
|     0.0|      2.0|
|     8.2|      2.0|
|    90.1|      3.0|
+--------+---------+
```

Tokenize Text Data

Natural language processing (NLP) is one of the main applications of machine learning. One of the first steps in NLP is tokenizing text into words, or tokens. We can use the Tokenizer class in Spark ML for this task; it converts each string to lowercase and splits it on whitespace.
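Here is a rough pure-Python approximation of what Tokenizer does: lowercase the sentence, then split it on whitespace. This sketch drops empty tokens produced by repeated spaces, which Spark's Tokenizer may not do, so treat it as an illustration only. For pattern-based splitting, Spark ML provides RegexTokenizer. The helper name simple_tokenize is hypothetical.

```python
import re


def simple_tokenize(sentence):
    """Lowercase a sentence and split it on runs of whitespace."""
    return [token for token in re.split(r"\s+", sentence.lower()) if token]


print(simple_tokenize("This is an introduction to sparkMlib"))
```

The lowercasing is why "sparkMlib" appears as "sparkmlib" in the tokenized output below.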

```python
from pyspark.ml.feature import Tokenizer

sentences_df = spark.createDataFrame([
    (1, "This is an introduction to sparkMlib"),
    (2, "Mlib incluse libraries fro classfication and regression"),
    (3, "It also incluses support for data piple lines"),
], ["id", "sentences"])

sentences_df.show()
```

Output:

```text
+---+--------------------+
| id|           sentences|
+---+--------------------+
|  1|This is an introd...|
|  2|Mlib incluse libr...|
|  3|It also incluses ...|
+---+--------------------+
```

```python
# Split each sentence into lowercase words
sent_token = Tokenizer(inputCol="sentences", outputCol="words")
sent_tokenized_df = sent_token.transform(sentences_df)

sent_tokenized_df.take(10)
```

Output:

```text
[Row(id=1, sentences='This is an introduction to sparkMlib', words=['this', 'is', 'an', 'introduction', 'to', 'sparkmlib']),
 Row(id=2, sentences='Mlib incluse libraries fro classfication and regression', words=['mlib', 'incluse', 'libraries', 'fro', 'classfication', 'and', 'regression']),
 Row(id=3, sentences='It also incluses support for data piple lines', words=['it', 'also', 'incluses', 'support', 'for', 'data', 'piple', 'lines'])]
```

TF-IDF

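Term frequency-inverse document frequency (TF-IDF) is a feature vectorization method widely used in text mining to reflect the importance of a term to a document in a corpus. Let us apply TF-IDF to the tokenized data from above. HashingTF maps each word to one of numFeatures buckets with a hash function and counts occurrences, and IDF then down-weights buckets that occur in many documents.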
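Here is a minimal pure-Python sketch of TF-IDF using the smoothed IDF formula from the Spark ML documentation, idf(t) = ln((m + 1) / (df(t) + 1)), where m is the number of documents and df(t) is the number of documents containing t. As an assumption for clarity, it works on raw words instead of hash buckets. The helper name tf_idf is hypothetical.

In this tiny corpus no word occurs in more than one sentence, so every word gets ln(4/2) ≈ 0.6931. The smaller weights in the Spark output, 0.2877 = ln(4/3) and 0.0 = ln(4/4), therefore come from hash collisions. With numFeatures=20, words from different sentences land in the same bucket.

```python
import math
from collections import Counter


def tf_idf(documents):
    """TF-IDF weights per document using the smoothed IDF ln((m + 1) / (df + 1))."""
    m = len(documents)
    # Document frequency: in how many documents each term appears
    doc_freq = Counter(term for doc in documents for term in set(doc))
    idf = {term: math.log((m + 1) / (df + 1)) for term, df in doc_freq.items()}
    weights = []
    for doc in documents:
        tf = Counter(doc)
        weights.append({term: count * idf[term] for term, count in tf.items()})
    return weights


docs = [
    ["this", "is", "an", "introduction", "to", "sparkmlib"],
    ["mlib", "incluse", "libraries", "fro", "classfication", "and", "regression"],
    ["it", "also", "incluses", "support", "for", "data", "piple", "lines"],
]
print(tf_idf(docs)[0])
```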

```python
from pyspark.ml.feature import HashingTF, IDF

# Map each word to one of 20 hash buckets and count term frequencies
hashingTF = HashingTF(inputCol="words", outputCol="rawfeatures", numFeatures=20)
sent_fhTF_df = hashingTF.transform(sent_tokenized_df)

sent_fhTF_df.take(1)
```

Output:

```text
[Row(id=1, sentences='This is an introduction to sparkMlib', words=['this', 'is', 'an', 'introduction', 'to', 'sparkmlib'], rawfeatures=SparseVector(20, {6: 2.0, 8: 1.0, 9: 1.0, 10: 1.0, 13: 1.0}))]
```

```python
# Fit the IDF model on the term frequencies and rescale them
idf = IDF(inputCol="rawfeatures", outputCol="idffeatures")
idfModel = idf.fit(sent_fhTF_df)
tfidf_df = idfModel.transform(sent_fhTF_df)

tfidf_df.take(1)
```

Output:

```text
[Row(id=1, sentences='This is an introduction to sparkMlib', words=['this', 'is', 'an', 'introduction', 'to', 'sparkmlib'], rawfeatures=SparseVector(20, {6: 2.0, 8: 1.0, 9: 1.0, 10: 1.0, 13: 1.0}), idffeatures=SparseVector(20, {6: 0.5754, 8: 0.6931, 9: 0.0, 10: 0.6931, 13: 0.2877}))]
```

You can experiment with other transformations depending on the requirements of the problem at hand.

Clustering Using PySpark

Clustering is an unsupervised machine learning technique in which data points are grouped into a reasonable number of clusters based on their input features. In this section, we study basic applications of clustering techniques using the Spark ML framework.
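To make K-means, the first algorithm used below, more concrete, here is a minimal pure-Python sketch of Lloyd's iteration. It alternates between assigning each point to its nearest center and moving each center to the mean of its assigned points. It starts from user-supplied initial centers. Spark's KMeans instead initializes with k-means|| by default (the initMode parameter) and adds a convergence tolerance, so this sketch illustrates the idea rather than Spark's implementation. The function name lloyd_kmeans and the toy points are hypothetical.

```python
import math


def lloyd_kmeans(points, centers, max_iter=20):
    """Run Lloyd's k-means iterations from the given initial centers."""
    centers = [list(c) for c in centers]
    for _ in range(max_iter):
        # Assignment step: index of the nearest center for every point
        labels = [min(range(len(centers)), key=lambda j: math.dist(p, centers[j])) for p in points]
        # Update step: move each center to the mean of its assigned points
        new_centers = []
        for j, center in enumerate(centers):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:
                new_centers.append([sum(coord) / len(members) for coord in zip(*members)])
            else:
                new_centers.append(center)  # keep an empty cluster's center unchanged
        if new_centers == centers:
            break  # converged: assignments no longer move the centers
        centers = new_centers
    return centers, labels


points = [[1.0, 1.0], [1.5, 2.0], [8.0, 8.0], [9.0, 8.5]]
centers, labels = lloyd_kmeans(points, centers=[[0.0, 0.0], [10.0, 10.0]])
print(centers, labels)
```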

```python
from pyspark.ml.feature import VectorAssembler
from pyspark.ml.clustering import KMeans, BisectingKMeans
import pandas as pd  # used later to load the Iris and power plant data

# Download the clustering dataset
!wget -q 'https://raw.githubusercontent.com/amjadraza/blogs-data/master/spark_ml/clustering_dataset.csv'
```

Load the clustering data stored in CSV format using Spark:

```python
# Read the data
clustering_file_name = 'clustering_dataset.csv'
cluster_df = spark.read.csv(clustering_file_name, header=True, inferSchema=True)
```

Convert the tabular data into vector format using VectorAssembler:

```python
# Convert the input columns into a single features column
vectorAssembler = VectorAssembler(inputCols=['col1', 'col2', 'col3'], outputCol="features")
vcluster_df = vectorAssembler.transform(cluster_df)

vcluster_df.show(10)
```

Output:

```text
+----+----+----+--------------+
|col1|col2|col3|      features|
+----+----+----+--------------+
|   7|   4|   1| [7.0,4.0,1.0]|
|   7|   7|   9| [7.0,7.0,9.0]|
|   7|   9|   6| [7.0,9.0,6.0]|
|   1|   6|   5| [1.0,6.0,5.0]|
|   6|   7|   7| [6.0,7.0,7.0]|
|   7|   9|   4| [7.0,9.0,4.0]|
|   7|  10|   6|[7.0,10.0,6.0]|
|   7|   8|   2| [7.0,8.0,2.0]|
|   8|   3|   8| [8.0,3.0,8.0]|
|   4|  10|   5|[4.0,10.0,5.0]|
+----+----+----+--------------+
only showing top 10 rows
```

Once the data is in the format MLlib models expect, we can define and train a clustering algorithm such as K-means. We set the number of clusters and the random seed as follows.

```python
# Apply the k-means algorithm with 3 clusters and a fixed seed
kmeans = KMeans().setK(3)
kmeans = kmeans.setSeed(1)
kmodel = kmeans.fit(vcluster_df)
```

After training has finished, let us print the cluster centers.

```python
centers = kmodel.clusterCenters()
print("The location of centers: {}".format(centers))
```

Output:

```text
The location of centers: [array([35.88461538, 31.46153846, 34.42307692]), array([80.        , 79.20833333, 78.29166667]), array([5.12, 5.84, 4.84])]
```

MLlib implements various clustering algorithms. Bisecting K-means, a hierarchical (divisive) variant of K-means, is another popular method.

```python
# Apply hierarchical (bisecting k-means) clustering
bkmeans = BisectingKMeans().setK(3)
bkmeans = bkmeans.setSeed(1)
bkmodel = bkmeans.fit(vcluster_df)

bkcenters = bkmodel.clusterCenters()
bkcenters
```

Output:

```text
[array([5.12, 5.84, 4.84]),
 array([35.88461538, 31.46153846, 34.42307692]),
 array([80.        , 79.20833333, 78.29166667])]
```

To read more about the clustering methods implemented in MLlib, see the Clustering page of the MLlib documentation.

Classification Using PySpark

Classification is one of the most widely used families of machine learning algorithms, and almost every data engineer and data scientist should know about it. Once the data is loaded and prepared, I will demonstrate three classification algorithms:

- Naive Bayes classification
- Multilayer perceptron classification
- Decision tree classification

We explore supervised classification algorithms using the Iris data set. I have uploaded the data to my GitHub so that you can reproduce the results; you can download it with the command below.

# Downloading the clustering data

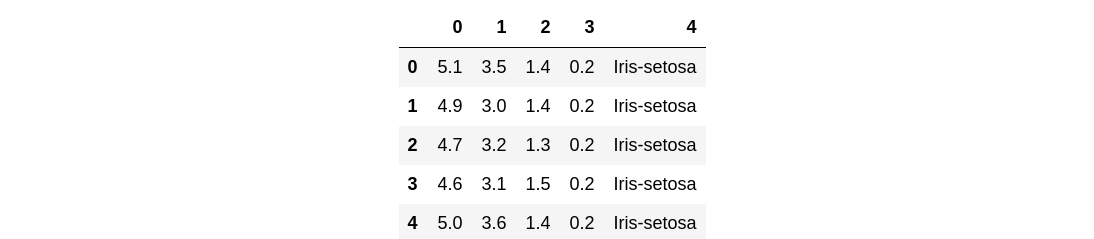

!wget -q "https://raw.githubusercontent.com/amjadraza/blogs-data/master/spark_ml/iris.csv"df = pd.read_csv("https://raw.githubusercontent.com/amjadraza/blogs-data/master/spark_ml/iris.csv", header=None)df.head()

spark.createDataFrame(df, columns)DataFrame[c_0: double, c_1: double, c_2: double, c_3: double, c4 : string]预处理虹膜数据 (Preprocessing the Iris Data)

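In this section, we use the Iris data to understand classification. Before training ML models, we preprocess the input data. We rename the columns, assemble the four measurements into a single feature vector with VectorAssembler, and convert the species names into numeric labels with StringIndexer.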
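StringIndexer assigns indices by label frequency under its default stringOrderType of "frequencyDesc", so the most frequent label gets 0.0. In Spark 3.0, ties are broken alphabetically. Assuming the usual 50 rows per species, the Iris labels therefore end up in alphabetical order, which would explain why Iris-setosa becomes 0.0 in the output below. Here is a minimal pure-Python sketch of that rule. The helper name frequency_desc_index is hypothetical.

```python
from collections import Counter


def frequency_desc_index(labels):
    """Map each distinct label to an index: most frequent first, ties alphabetical."""
    counts = Counter(labels)
    ordered = sorted(counts, key=lambda label: (-counts[label], label))
    return {label: float(i) for i, label in enumerate(ordered)}


species = ["Iris-virginica"] * 50 + ["Iris-setosa"] * 50 + ["Iris-versicolor"] * 50
print(frequency_desc_index(species))
```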

```python
from pyspark.sql.functions import col
from pyspark.ml.feature import VectorAssembler, StringIndexer
import pandas as pd

# Read the Iris data (the CSV file has no header row)
df_iris = pd.read_csv("https://raw.githubusercontent.com/amjadraza/blogs-data/master/spark_ml/iris.csv", header=None)
iris_df = spark.createDataFrame(df_iris)

# Rename the columns (pandas' integer column names become "0" ... "4" in Spark)
iris_df = iris_df.select(col("0").alias("sepal_length"),
                         col("1").alias("sepal_width"),
                         col("2").alias("petal_length"),
                         col("3").alias("petal_width"),
                         col("4").alias("species"))

iris_df.show(5, False)
```

Output:

```text
+------------+-----------+------------+-----------+-----------+
|sepal_length|sepal_width|petal_length|petal_width|species    |
+------------+-----------+------------+-----------+-----------+
|5.1         |3.5        |1.4         |0.2        |Iris-setosa|
|4.9         |3.0        |1.4         |0.2        |Iris-setosa|
|4.7         |3.2        |1.3         |0.2        |Iris-setosa|
|4.6         |3.1        |1.5         |0.2        |Iris-setosa|
|5.0         |3.6        |1.4         |0.2        |Iris-setosa|
+------------+-----------+------------+-----------+-----------+
only showing top 5 rows
```

```python
# Combine the four measurement columns into a single feature vector
vectorAssembler = VectorAssembler(inputCols=["sepal_length", "sepal_width", "petal_length", "petal_width"],
                                  outputCol="features")
viris_df = vectorAssembler.transform(iris_df)

viris_df.show(5, False)
```

Output:

```text
+------------+-----------+------------+-----------+-----------+-----------------+
|sepal_length|sepal_width|petal_length|petal_width|species    |features         |
+------------+-----------+------------+-----------+-----------+-----------------+
|5.1         |3.5        |1.4         |0.2        |Iris-setosa|[5.1,3.5,1.4,0.2]|
|4.9         |3.0        |1.4         |0.2        |Iris-setosa|[4.9,3.0,1.4,0.2]|
|4.7         |3.2        |1.3         |0.2        |Iris-setosa|[4.7,3.2,1.3,0.2]|
|4.6         |3.1        |1.5         |0.2        |Iris-setosa|[4.6,3.1,1.5,0.2]|
|5.0         |3.6        |1.4         |0.2        |Iris-setosa|[5.0,3.6,1.4,0.2]|
+------------+-----------+------------+-----------+-----------+-----------------+
only showing top 5 rows
```

```python
# Convert the species names into numeric labels
indexer = StringIndexer(inputCol="species", outputCol="label")
iviris_df = indexer.fit(viris_df).transform(viris_df)

iviris_df.show(2, False)
```

Output:

```text
+------------+-----------+------------+-----------+-----------+-----------------+-----+
|sepal_length|sepal_width|petal_length|petal_width|species    |features         |label|
+------------+-----------+------------+-----------+-----------+-----------------+-----+
|5.1         |3.5        |1.4         |0.2        |Iris-setosa|[5.1,3.5,1.4,0.2]|0.0  |
|4.9         |3.0        |1.4         |0.2        |Iris-setosa|[4.9,3.0,1.4,0.2]|0.0  |
+------------+-----------+------------+-----------+-----------+-----------------+-----+
only showing top 2 rows
```

Naive Bayes Classification

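Once the data is prepared, we are ready to apply the first classification algorithm. We split the data into training (60%) and test (40%) sets and train a multinomial Naive Bayes model. The multinomial model requires non-negative feature values, which the Iris measurements satisfy.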

```python
from pyspark.ml.classification import NaiveBayes
from pyspark.ml.evaluation import MulticlassClassificationEvaluator

# Create the training and test splits (60/40, seed 1)
splits = iviris_df.randomSplit([0.6, 0.4], 1)
train_df = splits[0]
test_df = splits[1]

# Apply the Naive Bayes classifier
nb = NaiveBayes(modelType="multinomial")
nbmodel = nb.fit(train_df)
predictions_df = nbmodel.transform(test_df)

predictions_df.show(1, False)
```

Output:

```text
+------------+-----------+------------+-----------+-----------+-----------------+-----+------------------------------------------------------------+------------------------------------------------------------+----------+
|sepal_length|sepal_width|petal_length|petal_width|species    |features         |label|rawPrediction                                               |probability                                                 |prediction|
+------------+-----------+------------+-----------+-----------+-----------------+-----+------------------------------------------------------------+------------------------------------------------------------+----------+
|4.3         |3.0        |1.1         |0.1        |Iris-setosa|[4.3,3.0,1.1,0.1]|0.0  |[-9.966434726497221,-11.294595492758821,-11.956012812323921]|[0.7134106367667451,0.18902823898426235,0.09756112424899269]|0.0       |
+------------+-----------+------------+-----------+-----------+-----------------+-----+------------------------------------------------------------+------------------------------------------------------------+----------+
only showing top 1 row
```

Let us evaluate the trained classifier.
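The "accuracy" metric used by MulticlassClassificationEvaluator is the fraction of rows whose predicted label equals the true label. Here is a minimal, unweighted pure-Python sketch of it. The helper name accuracy is hypothetical.

```python
def accuracy(labels, predictions):
    """Fraction of positions where the prediction matches the label."""
    if len(labels) != len(predictions) or not labels:
        raise ValueError("labels and predictions must be non-empty and of equal length")
    correct = sum(1 for y, y_hat in zip(labels, predictions) if y == y_hat)
    return correct / len(labels)


print(accuracy([0.0, 1.0, 2.0, 2.0], [0.0, 1.0, 1.0, 2.0]))  # 3 of 4 correct
```

As a sanity check on the numbers reported in this section, 0.8275862068965517 and 0.9827586206896551 equal 48/58 and 57/58. Both are consistent with a test split of 58 rows.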

```python
# Accuracy = fraction of test rows whose prediction equals the label
evaluator = MulticlassClassificationEvaluator(labelCol="label", predictionCol="prediction", metricName="accuracy")
nbaccuracy = evaluator.evaluate(predictions_df)
nbaccuracy
```

Output:

```text
0.8275862068965517
```

Multilayer Perceptron Classification

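The second classifier we investigate is a multilayer perceptron (MLP). In this tutorial, I do not go into the details of finding the optimal MLP network for this problem; in practice, you should research the network that best suits the problem at hand. The layers parameter gives the size of each layer: 4 inputs (one per feature), two hidden layers of 5 neurons each, and 3 outputs (one per species).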
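To show what the layers list means, here is a minimal pure-Python sketch of a forward pass through a [4, 5, 5, 3] network. It follows the architecture the Spark documentation describes for MultilayerPerceptronClassifier: sigmoid activations in the hidden layers and softmax at the output. The weights are random placeholders, not trained values, and the helper names are hypothetical. The sketch also counts the parameters, weights plus biases: (4 + 1) * 5 + (5 + 1) * 5 + (5 + 1) * 3 = 73.

```python
import math
import random


def init_layers(sizes, seed=1):
    """Create random (weights, biases) for each pair of consecutive layer sizes."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def forward(layers, x):
    """Sigmoid in the hidden layers, softmax at the output layer."""
    for i, (weights, biases) in enumerate(layers):
        z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
        if i < len(layers) - 1:
            x = [1.0 / (1.0 + math.exp(-v)) for v in z]
        else:
            peak = max(z)  # subtract the max for numerical stability
            exps = [math.exp(v - peak) for v in z]
            total = sum(exps)
            x = [e / total for e in exps]
    return x


layers = init_layers([4, 5, 5, 3])
n_params = sum(len(w) * len(w[0]) + len(b) for w, b in layers)
probs = forward(layers, [5.1, 3.5, 1.4, 0.2])
print(n_params, probs)
```

Training adjusts these 73 parameters so that the largest of the three output probabilities falls on the correct species.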

```python
from pyspark.ml.classification import MultilayerPerceptronClassifier

# Define the MLP classifier: 4 inputs, two hidden layers of 5 neurons, 3 output classes
layers = [4, 5, 5, 3]
mlp = MultilayerPerceptronClassifier(layers=layers, seed=1)
mlp_model = mlp.fit(train_df)
mlp_predictions = mlp_model.transform(test_df)

# Evaluate the MLP classifier
mlp_evaluator = MulticlassClassificationEvaluator(labelCol="label", predictionCol="prediction", metricName="accuracy")
mlp_accuracy = mlp_evaluator.evaluate(mlp_predictions)
mlp_accuracy
```

Output:

```text
0.9827586206896551
```

Decision Tree Classification

Another common classifier in the ML family is the decision tree classifier, which we explore in this section. By default, Spark's DecisionTreeClassifier chooses the splits that most reduce Gini impurity.
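At each node, the tree picks the candidate split with the largest impurity decrease. Here is a minimal pure-Python sketch of Gini impurity and of the decrease for one split. The example uses a hypothetical perfect split that isolates one Iris species, assuming 50 rows per species. The helper names are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def split_gain(parent, left, right):
    """Impurity decrease achieved by splitting `parent` into `left` and `right`."""
    n = len(parent)
    weighted = len(left) / n * gini(left) + len(right) / n * gini(right)
    return gini(parent) - weighted


parent = ["setosa"] * 50 + ["versicolor"] * 50 + ["virginica"] * 50
left = ["setosa"] * 50
right = ["versicolor"] * 50 + ["virginica"] * 50
print(gini(parent), split_gain(parent, left, right))
```

The balanced three-class parent has impurity 2/3. Isolating setosa removes half of that (a gain of 1/3), because the remaining two-class node still has impurity 0.5.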

```python
from pyspark.ml.classification import DecisionTreeClassifier

# Define the decision tree classifier
dt = DecisionTreeClassifier(labelCol="label", featuresCol="features")
dt_model = dt.fit(train_df)
dt_predictions = dt_model.transform(test_df)

# Evaluate the decision tree classifier
dt_evaluator = MulticlassClassificationEvaluator(labelCol="label", predictionCol="prediction", metricName="accuracy")
dt_accuracy = dt_evaluator.evaluate(dt_predictions)
dt_accuracy
```

Output:

```text
0.9827586206896551
```

Apart from the three classification algorithms demonstrated above, Spark MLlib implements many other classification algorithms. Details can be found in the classification section of the MLlib documentation (see the links at the end of this article).

I highly recommend trying some of the other classification algorithms to get hands-on experience.

Regression Using PySpark

In this section, we explore machine learning models for regression problems using PySpark. Regression models predict a continuous target value from input features, for example predicting future values from past data.

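We will use the Combined Cycle Power Plant data set to predict the net hourly electrical energy output (PE) from four input features: ambient temperature (AT), exhaust vacuum (V), ambient pressure (AP), and relative humidity (RH). I have uploaded the data to my GitHub so that you can reproduce the results.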

```python
from pyspark.ml.regression import LinearRegression
from pyspark.ml.feature import VectorAssembler
import pandas as pd

# Read the Combined Cycle Power Plant data
df_ccpp = pd.read_csv("https://raw.githubusercontent.com/amjadraza/blogs-data/master/spark_ml/ccpp.csv")
pp_df = spark.createDataFrame(df_ccpp)

pp_df.show(2, False)
```

Output:

```text
+-----+-----+-------+-----+------+
|AT   |V    |AP     |RH   |PE    |
+-----+-----+-------+-----+------+
|14.96|41.76|1024.07|73.17|463.26|
|25.18|62.96|1020.04|59.08|444.37|
+-----+-----+-------+-----+------+
only showing top 2 rows
```

```python
# Create the feature column using the VectorAssembler class
vectorAssembler = VectorAssembler(inputCols=["AT", "V", "AP", "RH"], outputCol="features")
vpp_df = vectorAssembler.transform(pp_df)

vpp_df.show(2, False)
```

Output:

```text
+-----+-----+-------+-----+------+---------------------------+
|AT   |V    |AP     |RH   |PE    |features                   |
+-----+-----+-------+-----+------+---------------------------+
|14.96|41.76|1024.07|73.17|463.26|[14.96,41.76,1024.07,73.17]|
|25.18|62.96|1020.04|59.08|444.37|[25.18,62.96,1020.04,59.08]|
+-----+-----+-------+-----+------+---------------------------+
only showing top 2 rows
```

Linear Regression

We start with the simplest regression technique, linear regression, which models PE as a weighted sum of the four features plus an intercept.
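With its default regParam=0, Spark's LinearRegression fits ordinary least squares, minimizing the squared error. For a single feature the solution has a closed form: slope = Sxy / Sxx and intercept = mean(y) - slope * mean(x). Here is a minimal pure-Python sketch of this one-feature case, plus the RMSE metric reported below. The helper names and toy data are hypothetical. The four-feature Spark model solves the multivariate version of the same problem.

```python
import math


def fit_simple_ols(x, y):
    """Closed-form least-squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true))


x = [1.0, 2.0, 3.0, 4.0]
y = [3.1, 4.9, 7.2, 8.8]
intercept, slope = fit_simple_ols(x, y)
predictions = [intercept + slope * xi for xi in x]
print(intercept, slope, rmse(y, predictions))
```

Here Sxy = 9.7 and Sxx = 5, so the fit is y = 1.15 + 1.94x. Its residuals of 0.01, -0.13, 0.23 and -0.11 give an RMSE of about 0.143.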

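```python
# Define and fit linear regression on the full data set
lr = LinearRegression(featuresCol="features", labelCol="PE")
lr_model = lr.fit(vpp_df)

# Inspect the model (in a notebook, only the last expression is displayed)
lr_model.coefficients
lr_model.intercept
lr_model.summary.rootMeanSquaredError

# lr_model.save(path)  # optionally persist the model to a directory
```

Output:

```text
4.557126016749486
```

Because this model was fit on the full data set, its RMSE is measured on the training data. It is not directly comparable to the test-set RMSE values reported for the tree models below.

Decision Tree Regression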

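In this section, we explore decision tree regression, which is commonly used in machine learning. For regression, Spark's DecisionTreeRegressor chooses each split to maximize the reduction in the variance of the label.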
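Here is a minimal pure-Python sketch of variance-reduction splitting on one feature. It tries every midpoint between consecutive sorted values. Spark does not try every threshold; it discretizes continuous features into at most maxBins candidate splits (32 by default). The helper names and the toy temperature/output pairs are hypothetical.

```python
def variance(values):
    """Population variance, the impurity measure for regression trees."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def best_threshold(x, y):
    """Try each midpoint between sorted distinct x values; return (threshold, variance reduction)."""
    pairs = sorted(zip(x, y))
    n = len(pairs)
    parent = variance([v for _, v in pairs])
    best = (None, 0.0)
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold separates equal x values
        left = [v for _, v in pairs[:i]]
        right = [v for _, v in pairs[i:]]
        gain = parent - (len(left) / n * variance(left) + len(right) / n * variance(right))
        if gain > best[1]:
            best = ((pairs[i - 1][0] + pairs[i][0]) / 2, gain)
    return best


threshold, gain = best_threshold([5.0, 10.0, 25.0, 30.0], [480.0, 470.0, 440.0, 435.0])
print(threshold, gain)
```

The parent variance is 367.1875. Splitting at 17.5 leaves child variances of 25 and 6.25, so the split removes 351.5625 of the variance.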

```python
from pyspark.ml.regression import DecisionTreeRegressor
from pyspark.ml.evaluation import RegressionEvaluator

vpp_df.show(2, False)
```

Output:

```text
+-----+-----+-------+-----+------+---------------------------+
|AT   |V    |AP     |RH   |PE    |features                   |
+-----+-----+-------+-----+------+---------------------------+
|14.96|41.76|1024.07|73.17|463.26|[14.96,41.76,1024.07,73.17]|
|25.18|62.96|1020.04|59.08|444.37|[25.18,62.96,1020.04,59.08]|
+-----+-----+-------+-----+------+---------------------------+
only showing top 2 rows
```

```python
# Define the train and test split (no seed is set, so the split and
# the RMSE values below will vary slightly between runs)
splits = vpp_df.randomSplit([0.7, 0.3])
train_df = splits[0]
test_df = splits[1]

# Define the decision tree model
dt = DecisionTreeRegressor(featuresCol="features", labelCol="PE")
dt_model = dt.fit(train_df)
dt_predictions = dt_model.transform(test_df)

dt_predictions.show(1, False)
```

Output:

```text
+----+-----+-------+-----+------+--------------------------+-----------------+
|AT  |V    |AP     |RH   |PE    |features                  |prediction       |
+----+-----+-------+-----+------+--------------------------+-----------------+
|3.31|39.42|1024.05|84.31|487.19|[3.31,39.42,1024.05,84.31]|486.1117703349283|
+----+-----+-------+-----+------+--------------------------+-----------------+
only showing top 1 row
```

```python
# Evaluate the model on the test split
dt_evaluator = RegressionEvaluator(labelCol="PE", predictionCol="prediction", metricName="rmse")
dt_rmse = dt_evaluator.evaluate(dt_predictions)
print("The RMSE of Decision Tree regression Model is {}".format(dt_rmse))
```

Output:

```text
The RMSE of Decision Tree regression Model is 4.451790078736588
```

Gradient Boosting Decision Tree Regression

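Gradient boosting is another common choice among ML practitioners. Let us try Spark's gradient-boosted tree (GBT) regressor in this section. It builds a sequence of decision trees, each trained to correct the errors of the ensemble built so far.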
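With squared-error loss, Spark's default lossType for GBTRegressor, each boosting round fits a small tree to the current residuals and adds a shrunken copy of it to the ensemble. Here is a minimal pure-Python sketch of that loop using one-split stumps on a single feature, starting from the mean of the labels. Spark uses deeper trees (maxDepth=5 by default) and its own initialization, so this only illustrates the idea. The defaults max_iter=20 and step_size=0.1 mirror Spark's maxIter and stepSize defaults. The function names and data are hypothetical.

```python
def fit_stump(x, residuals):
    """Fit a one-split regression stump to the residuals by minimizing squared error."""
    pairs = sorted(zip(x, residuals))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold separates equal x values
        left = [r for _, r in pairs[:i]]
        right = [r for _, r in pairs[i:]]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, (pairs[i - 1][0] + pairs[i][0]) / 2, left_mean, right_mean)
    if best is None:
        mean = sum(residuals) / len(residuals)
        return lambda v: mean  # all x equal: fall back to a constant
    _, threshold, left_value, right_value = best
    return lambda v: left_value if v <= threshold else right_value


def fit_boosted_stumps(x, y, max_iter=20, step_size=0.1):
    """Gradient boosting for squared loss: each stump is fit to the current residuals."""
    base = sum(y) / len(y)
    stumps = []

    def predict(v):
        return base + sum(step_size * stump(v) for stump in stumps)

    for _ in range(max_iter):
        residuals = [yi - predict(xi) for xi, yi in zip(x, y)]
        stumps.append(fit_stump(x, residuals))
    return predict


x = [5.0, 10.0, 25.0, 30.0]
y = [480.0, 470.0, 440.0, 435.0]
model = fit_boosted_stumps(x, y)
print([round(model(v), 2) for v in x])
```

For squared loss, the negative gradient is exactly the residual, so "fit the next tree to the residuals" is gradient descent in function space. The small step size trades more iterations for a smoother fit.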

```python
from pyspark.ml.regression import GBTRegressor

# Define the GBT model
gbt = GBTRegressor(featuresCol="features", labelCol="PE")
gbt_model = gbt.fit(train_df)
gbt_predictions = gbt_model.transform(test_df)

# Evaluate the GBT model on the same test split
gbt_evaluator = RegressionEvaluator(labelCol="PE", predictionCol="prediction", metricName="rmse")
gbt_rmse = gbt_evaluator.evaluate(gbt_predictions)
print("The RMSE of GBT Tree regression Model is {}".format(gbt_rmse))
```

Output:

```text
The RMSE of GBT Tree regression Model is 4.035802933864555
```

Apart from the regression algorithms demonstrated above, Spark MLlib implements many other regression algorithms. Details can be found in the regression section of the MLlib documentation (see the links at the end of this article).

I highly recommend trying some of these regression algorithms to get hands-on experience and to experiment with their parameters.

A Working Google Colab Notebook

Conclusions

In this tutorial, I have tried to give readers an opportunity to learn and implement basic machine learning algorithms using PySpark. Spark not only provides the benefits of distributed processing but can also handle large amounts of data. To summarize, we have covered the following topics and algorithms:

- Setting up Spark 3.0.1 in Google Colab
- Overview of data transformations using PySpark
- Clustering algorithms using PySpark
- Classification problems using PySpark
- Regression problems using PySpark

References / Further Reading

https://spark.apache.org/docs/3.0.1/ml-classification-regression.html#regression

https://spark.apache.org/docs/3.0.1/ml-classification-regression.html#classification

Source: https://medium.com/@amjadraza24/machine-learning-with-spark-f1dbc1363986
