When a web page is opened in a browser, the browser automatically executes its JavaScript and generates dynamic HTML content. Scrapers commonly retrieve web pages with HTTP requests. However, if a page is generated dynamically by JavaScript, an HTTP request only returns the page's source code, without the content that JavaScript adds afterwards. Many websites use Ajax to send information to and retrieve data from a server without reloading the page. To scrape Ajax-enabled web pages without losing any data, one solution is to execute the JavaScript from Python and scrape the page once it has fully loaded. Selenium is a powerful tool for automating browsers: it loads web pages in a real browser, which executes their JavaScript.

1. Start Selenium with a WebDriver

Selenium does not contain a web browser. Instead, it calls the API of a WebDriver, which opens and controls a browser. Both Firefox and Chrome have their own WebDrivers that interact with Selenium. If you do not need a browser UI, you can run Chrome or Firefox in headless mode, which loads web pages and executes JavaScript in the background. PhantomJS used to be a popular choice for this, but it is no longer maintained. In the following example, I will use the Chrome WebDriver.

Before starting Selenium with a WebDriver, install Selenium with `pip install selenium` and download ChromeDriver (the Chrome WebDriver) in the version that matches your installed Chrome.

Running the following code starts Selenium with ChromeDriver and opens a Chrome window.

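```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service

# Point Selenium at the downloaded ChromeDriver executable (Selenium 4 style)
driver = webdriver.Chrome(service=Service('./chromedriver'))
```

2. Dynamic HTML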

Let’s take this web page as an example: https://www.u-optic.com/plano-convex-spherical-lens/en.html. This page makes Ajax requests to retrieve data and then generates the page content dynamically. Suppose we are interested in the data listed in the HTML table. These records are not present in the original HTML source code, so a simple HTTP request retrieves only the page source, without the data.

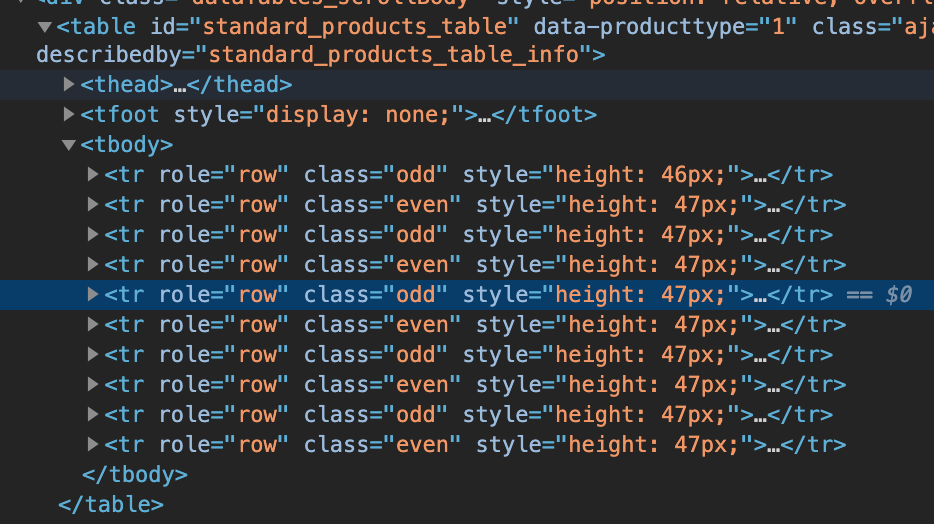

A closer look at the table generated by JavaScript in a browser:

3. Start scraping

There are two ways to scrape dynamic HTML. The more obvious way is to load the page in Selenium WebDriver. The WebDriver automatically executes the Ajax requests and then generates the full web page. Once the page has loaded completely, use Selenium to get the page source (`driver.page_source`), which now contains the data. The other way is to find the Ajax requests that deliver the data and request them directly.
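
After the page has loaded, `driver.page_source` is a plain HTML string. Below is a minimal sketch of pulling the table cells out of that string with the standard library's `html.parser`. It assumes the data sits in ordinary `<table>`/`<tr>`/`<td>` markup without nested tables. The names `TableRowParser` and `extract_rows` are hypothetical helpers, not part of Selenium.

```python
from html.parser import HTMLParser


class TableRowParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows, each a list of cell strings
        self._row = None   # cells of the row currently being parsed
        self._cell = None  # text fragments of the cell currently being parsed

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:  # skip rows that contained no cells
                self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        # Only keep text that appears inside a table cell
        if self._cell is not None:
            self._cell.append(data)


def extract_rows(page_source):
    """Return every table row found in an HTML string as a list of cell texts."""
    parser = TableRowParser()
    parser.feed(page_source)
    parser.close()
    return parser.rows
```

Usage with Selenium would be `rows = extract_rows(driver.page_source)`, called after the table has finished loading.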

However, the table on the example page is paginated and shows only 10 records at a time. Multiple Ajax requests therefore have to be made to retrieve all records.

Inspecting the web page in the browser's developer tools, we find two Ajax requests under the Network tab. The page loads the data for its tables from these requests.

Copying and pasting these URLs into a browser, or requesting them with the Python Requests library, returns 10 records in JSON:

```
{"draw":1,"recordsTotal":1564,"recordsFiltered":1564,"data":[{"id":66,"material_code":"4001010101","model":..."}]}
```

The returned JSON data indicates there are 1564 records in total. A closer look at the Ajax URL reveals that the number of records returned is set by the parameter “length” in the URL.
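
Below is a minimal sketch of reading such a response. It assumes the keys shown above: `recordsTotal` holds the full table size and `data` holds only the records in this response. The function names `parse_records` and `is_complete` are hypothetical.

```python
import json


def parse_records(response_text):
    """Split an Ajax JSON response into (total record count, records in this response)."""
    payload = json.loads(response_text)
    # 'recordsTotal' counts every row in the table; 'data' holds only the rows returned now
    return payload["recordsTotal"], payload["data"]


def is_complete(response_text):
    """True if the response already contains every record in the table."""
    total, records = parse_records(response_text)
    return len(records) >= total
```

With Requests, this would be called as `parse_records(requests.get(url).text)`. A response like the one above, with 10 of 1564 records, is not complete.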

The first table has 62 items and the second table has 1564 items. We therefore set the parameter “length” in each URL to the corresponding total, so that a single request returns every record.
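
Below is a minimal sketch of changing only the “length” parameter with the standard library's `urllib.parse`, leaving the other query parameters as they are. The helper name `with_length` is hypothetical. The query string is re-encoded, so characters such as `[` and `]` come back percent-encoded; servers normally treat that form as equivalent, but this is an assumption.

```python
from urllib.parse import parse_qs, urlencode, urlsplit, urlunsplit


def with_length(url, length):
    """Return `url` with its 'length' query parameter set to `length` (hypothetical helper)."""
    parts = urlsplit(url)
    # keep_blank_values=True preserves parameters that are present but empty
    query = parse_qs(parts.query, keep_blank_values=True)
    query["length"] = [str(length)]
    # doseq=True expands the list values back into key=value pairs
    return urlunsplit(parts._replace(query=urlencode(query, doseq=True)))
```

For example, `with_length(first_table_url, 62)` and `with_length(second_table_url, 1564)` would request both tables in full, where the two URL variables stand for the Ajax URLs found above.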

Requesting the data directly is much more convenient than parsing it out of the rendered page with XPath or CSS selectors.

4. Search for Ajax request URLs in WebDriver logs

The Ajax request URLs are buried in the page's JavaScript code. Instead of reading that code, we can search WebDriver’s performance log, which records the network events of the Ajax requests. To retrieve performance logs from WebDriver, we must enable them through the `goog:loggingPrefs` capability when creating the WebDriver object:

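```python
from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.chrome.service import Service

# Selenium 4: capabilities are set on ChromeOptions (Selenium 3 used DesiredCapabilities)
options = Options()
# Ask ChromeDriver to record the browser's performance (network) log
options.set_capability('goog:loggingPrefs', {'performance': 'ALL'})

driver = webdriver.Chrome(service=Service('./chromedriver'), options=options)
driver.get('https://www.u-optic.com/plano-convex-spherical-lens/en.html')

# Each entry is a dict with 'level', 'message' (a JSON string) and 'timestamp'
log = driver.get_log('performance')
```

The performance log records the network activity that the WebDriver performed while loading the web page.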

```
[{'level': 'INFO', 'message': '{"message":{"method":"Network.responseReceivedExtraInfo","params":{"..."}', 'timestamp': 1596881833630}, {'level': 'INFO', 'message': '{"message":{"method":"Network.responseReceived","params":{"..."}'' ]
```

The value of the key “message” is a JSON string. Parsing the string with Python's json module shows that the Ajax requests that retrieve the data are logged under the method “Network.requestWillBeSent”. Their URLs have the path “/api/diy/get_product_by_type”.

```
{
  'method': 'Network.requestWillBeSent',
  'params': {
    ....
    'request': {
      ...
      'url': 'https://www.u-optic.com/api/diy/get_product_by_type?...start=0&length=10...'
    },
    ...
  }
}
```

We use a regular expression to find these URLs.

```python
import json
import re

# Match the Ajax endpoint that returns the table data
pattern = r'https://www\.u-optic\.com/api/diy/get_product_by_type.+'
urls = []  # a list to store Ajax urls

for entry in log:
    # 'message' is itself a JSON string; the event is under its inner 'message' key
    message = json.loads(entry['message'])['message']
    if message['method'] == 'Network.requestWillBeSent':
        url = message['params']['request']['url']
        if re.search(pattern, url):
            urls.append(url)
```

Additional notes:

When the WebDriver loads the web page, it may take a few seconds for the WebDriver to make the Ajax requests and then generate the page content. It is therefore recommended to configure the WebDriver to wait until the section we intend to scrape has loaded completely. In this example, we want to scrape the data in the table, which is placed in elements with the class “text-bold”. We therefore set the WebDriver to wait up to 5 seconds for an element with the class “text-bold” to become visible. If the section does not load within 5 seconds, a TimeoutException is raised.

```python
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.common.by import By

# Wait for an <a> element whose class is 'text-bold' to become visible
wait_elementid = "//a[@class='text-bold']"
wait_time = 5  # seconds

# Raises selenium.common.exceptions.TimeoutException if not visible within wait_time
WebDriverWait(driver, wait_time).until(
    EC.visibility_of_element_located((By.XPATH, wait_elementid))
)
```

5. Conclusion

The content of dynamically generated web pages differs from their source code, so a plain HTTP request for the page cannot capture it. Executing JavaScript with Selenium is one way to scrape such pages without losing any data. Furthermore, if the data to be scraped is retrieved via Ajax requests, we can search for the request URLs in the WebDriver's performance log and then retrieve the data directly with HTTP requests.

Original article: https://medium.com/@zhangw1.2011/scraping-dynamic-html-in-python-with-selenium-f2ce7afcded5