This demo runs an application in Kubernetes behind a cloud-managed public load balancer (an HTTP(S) load balancer), which in Kubernetes terminology is configured through an Ingress resource. For this demo, I will be using Google Kubernetes Engine (GKE). Instead of the default ingress controller that GCP provides, I will deploy an NGINX ingress controller for the Ingress resource to use. Using this NGINX ingress controller, we will allow specific IP addresses and block all others from accessing our application running in GKE. Before we start with the implementation, let us review some prerequisites.

What is Ingress in Kubernetes?

In Kubernetes, an Ingress is an object (resource) that allows access to your Kubernetes services from outside the cluster. You configure access by creating a collection of rules that define which inbound connections can reach which services. In GKE, when we specify kind: Ingress in the resource manifest, GKE creates the Ingress resource and makes the appropriate Google Cloud API calls to create an external HTTP(S) load balancer. The load balancer's URL map uses host rules and path matchers to refer to one or more backend services, where each backend service corresponds to a GKE Service of type NodePort, as referenced in the Ingress.
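To make the idea of host and path rules concrete, here is a minimal sketch of a rule-based Ingress manifest, written out by a small shell function. The Ingress name `example-ingress`, the host `app.example.com`, and the function name are placeholders of mine; the backend reuses the `web` service on port 8080 that this demo creates later. The sketch assumes the `networking.k8s.io/v1` API found on current clusters, which differs from the older `extensions/v1beta1` API used in this article's own manifest.

```shell
# Write a minimal rule-based Ingress manifest to the file given as $1.
# All names (example-ingress, app.example.com) are illustrative placeholders.
write_example_ingress() {
  cat > "$1" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
spec:
  rules:
  - host: app.example.com        # host rule
    http:
      paths:
      - path: /                  # path match
        pathType: Prefix
        backend:
          service:
            name: web            # backend Service
            port:
              number: 8080
EOF
}

# Example:
#   write_example_ingress example-ingress.yaml
#   kubectl apply -f example-ingress.yaml
```

Each entry under `rules` pairs a host with one or more paths, and each path names the Service that should receive matching requests.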

But then…..

What is an Ingress Controller in Kubernetes?

For the Ingress resource to work, the cluster must have an ingress controller running. Multiple ingress controllers are available and can be used with the Ingress resource, e.g. NGINX Ingress Controller, HAProxy Ingress Controller, Traefik, Contour, etc.

We will be using the NGINX Ingress Controller for the demo.

So now…..

What is NGINX Ingress Controller?

We know that users who need to provide external access to their Kubernetes services create an Ingress resource that defines rules, including the URI path, backing service name, and other information. The Ingress controller can then automatically program a front‑end load balancer to enable Ingress configuration. The NGINX Ingress Controller for Kubernetes is what enables Kubernetes to configure NGINX and NGINX Plus for load balancing Kubernetes services. The NGINX Ingress Controller for Kubernetes provides enterprise‑grade delivery services for Kubernetes applications, with benefits for users of both NGINX Open Source and NGINX Plus. With the NGINX Ingress Controller for Kubernetes, you get basic load balancing, SSL/TLS termination, support for URI rewrites, and upstream SSL/TLS encryption. NGINX Plus users additionally get session persistence for stateful applications and JSON Web Token (JWT) authentication for APIs.

Let’s visualize everything described so far:

Architecture:

Let us now start with the actual demo where I will be allowing only specific IPs to access the application via the NGINX ingress controller.

Step 1: Create the Kubernetes Cluster

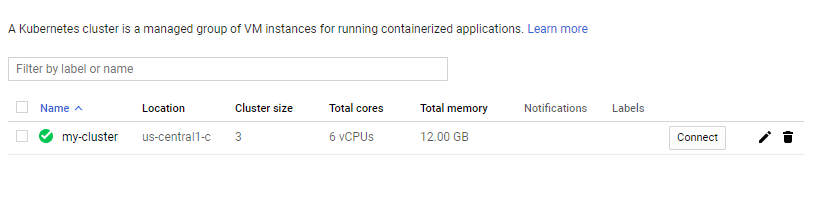

Create a Kubernetes cluster in any cloud provider of your choice. I am using Google Kubernetes Engine (GKE).

Step 2: Connect to the cluster

We need to connect to the newly created cluster so that we can deploy our resources in it. I am using the Cloud Shell here. A VM instance or a local machine where the project is configured can also be used. Run the following command to connect to the cluster.

$ gcloud container clusters get-credentials [CLUSTER_NAME] --zone us-central1-c --project [PROJECT_ID]

Replace the placeholders with the name of your cluster and your GCP project ID.
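Before deploying anything, it can help to confirm that `kubectl` now points at the intended cluster. A minimal sketch, assuming `kubectl` is installed and the credentials command above succeeded; the function name `check_cluster_connection` is my own.

```shell
# Print the active kubectl context and list the cluster nodes.
# Returns non-zero if no context is set or the cluster cannot be reached.
check_cluster_connection() {
  ctx=$(kubectl config current-context) || return 1
  echo "Active context: $ctx"
  kubectl get nodes
}

# Example:
#   check_cluster_connection
```

If the context shown does not belong to the cluster you just created, re-run the `get-credentials` command before continuing.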

Step 3: Deploy the sample application

We will now start deploying our resources inside the cluster, beginning with the application. I am deploying a simple Node.js application, which I have dockerized and pushed to my Docker Hub account. The image is publicly available, so anyone can pull it for practice. The following command creates a Pod from my Docker image inside the GKE cluster.

$ kubectl run sample-app --image=anm237/helloworldnode --port=8080
$ kubectl get pods
NAME         READY   STATUS    RESTARTS   AGE
sample-app   1/1     Running   0          3m58s

Step 4: Expose the Pod with NodePort Service

We will now expose our Pod through a NodePort service. A NodePort is an open port on every node of your cluster. Kubernetes transparently routes incoming traffic on the NodePort to your service, even if your application is running on a different node. The NodePort is assigned from the cluster-configured NodePort range (typically 30000–32767).

$ kubectl expose pod sample-app --type=NodePort --name=web
service/web exposed
$ kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)          AGE
kubernetes   ClusterIP   10.79.0.1    <none>        443/TCP          63m
web          NodePort    10.79.7.29   <none>        8080:30629/TCP   56s

We can see that our application is exposed on node port 30629 as a NodePort service. I have named the service ‘web’. This service will act as our backend service in further steps.
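The assigned node port can also be read programmatically instead of from the table. A small sketch, assuming the service is named `web` as above; the helper names are mine, and the range check hard-codes the typical default range of 30000–32767 mentioned earlier, which a cluster administrator may have changed.

```shell
# Print the node port assigned to the first port of a Service (default: web).
get_node_port() {
  kubectl get svc "${1:-web}" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Succeed if the given port lies in the typical default NodePort range.
in_default_nodeport_range() {
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Example:
#   port=$(get_node_port web)
#   in_default_nodeport_range "$port" && echo "port $port is in the default range"
```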

Step 5: Deploy the NGINX Ingress Controller

This is probably one of the most important steps of the demo. As mentioned, we will be using the NGINX ingress controller instead of the default ingress controller. The Ingress resource (our HTTP(S) entry point) will be served by the NGINX ingress controller, which is itself exposed through a Layer 4 load balancer. There are several ways to deploy the NGINX ingress controller; the one I used is as follows.

$ kubectl create clusterrolebinding cluster-admin-binding \
    --clusterrole cluster-admin \
    --user $(gcloud config get-value account)

[OUTPUT OF THE ABOVE COMMAND]
Your active configuration is: [cloudshell-21469]
clusterrolebinding.rbac.authorization.k8s.io/cluster-admin-binding created

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.34.1/deploy/static/provider/cloud/deploy.yaml

[OUTPUT OF THE ABOVE COMMAND]
namespace/ingress-nginx created
serviceaccount/ingress-nginx created
configmap/ingress-nginx-controller created
clusterrole.rbac.authorization.k8s.io/ingress-nginx created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
role.rbac.authorization.k8s.io/ingress-nginx created
rolebinding.rbac.authorization.k8s.io/ingress-nginx created
service/ingress-nginx-controller-admission created
service/ingress-nginx-controller created
deployment.apps/ingress-nginx-controller created
validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created
clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
job.batch/ingress-nginx-admission-create created
job.batch/ingress-nginx-admission-patch created
role.rbac.authorization.k8s.io/ingress-nginx-admission created
rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
serviceaccount/ingress-nginx-admission created

We have now created the NGINX ingress controller. Its resources were created in a separate namespace called ‘ingress-nginx’. This can be verified using the following command.

$ kubectl get svc -n ingress-nginx
NAME                                 TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)                      AGE
ingress-nginx-controller             LoadBalancer   10.79.1.218    34.67.217.146   80:30336/TCP,443:31777/TCP   16m
ingress-nginx-controller-admission   ClusterIP      10.79.11.244   <none>          443/TCP                      16m

Note: Deploying the NGINX Ingress controller has created a Layer 4 load balancer (a Network Load Balancer) in GCP.
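The controller pods can take a little while to start, and the load balancer's external IP may show as pending at first. The sketch below waits for the controller and then prints the external IP. The `kubectl wait` invocation follows the one given in the ingress-nginx deployment guide; the function names and the reuse of its 120-second timeout are my own choices.

```shell
# Block until the ingress-nginx controller pod reports Ready (or time out).
wait_for_ingress_controller() {
  kubectl wait --namespace ingress-nginx \
    --for=condition=ready pod \
    --selector=app.kubernetes.io/component=controller \
    --timeout=120s
}

# Print the external IP of the controller's LoadBalancer Service.
# Prints nothing while the address is still pending.
get_controller_external_ip() {
  kubectl get svc ingress-nginx-controller --namespace ingress-nginx \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Example:
#   wait_for_ingress_controller && get_controller_external_ip
```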

Step 6: Deploying the Ingress

Now it's time to deploy the Ingress, which defines how HTTP(S) traffic reaches our application. The following is the Ingress manifest file. The kind is specified as Ingress, and the backend is set to the name of the NodePort service we created in Step 4.

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: web-ingress
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/whitelist-source-range: 27.4.4.187/32 # the IP to be allowed
spec:
  backend:
    serviceName: web # name of the NodePort service
    servicePort: 8080

If you look carefully, the annotations contain two settings. The first tells our Ingress resource to use the NGINX ingress controller instead of the default. The second, nginx.ingress.kubernetes.io/whitelist-source-range, is the allowlist of IP addresses (in CIDR notation) permitted to access our application. Multiple entries can be added by separating them with commas.
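Since the annotation value is just a comma-separated list, it is easy to build from a list of addresses. The following is a rough sketch with helper names of my own (`is_ipv4_cidr`, `build_allowlist`). The validation is a loose IPv4-only shape check rather than a full parser, and it insists on an explicit prefix length, which is stricter than the annotation itself requires. The second address in the usage example is a documentation-only range, not one from this demo.

```shell
# Loose check that the argument looks like an IPv4 CIDR such as 27.4.4.187/32.
# Runs in a subshell so the IFS change does not leak into the caller.
is_ipv4_cidr() (
  echo "$1" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}/[0-9]{1,2}$' || exit 1
  IFS='./'
  set -- $1                      # split into four octets and the prefix
  for part in "$1" "$2" "$3" "$4"; do
    [ "$part" -le 255 ] || exit 1
  done
  [ "$5" -le 32 ]
)

# Join the given CIDRs with commas; fail on the first invalid entry.
build_allowlist() {
  result=""
  for cidr in "$@"; do
    is_ipv4_cidr "$cidr" || { echo "invalid CIDR: $cidr" >&2; return 1; }
    result="${result:+$result,}$cidr"
  done
  echo "$result"
}

# Example:
#   ranges=$(build_allowlist 27.4.4.187/32 203.0.113.0/24)
#   kubectl annotate ingress web-ingress --overwrite \
#     "nginx.ingress.kubernetes.io/whitelist-source-range=$ranges"
```

Validating the entries before applying them helps avoid a typo that would silently lock out a legitimate client.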

Note: The IP address I have mentioned is the IP of my local machine.

Now, let us deploy this Ingress using the following command:

$ kubectl apply -f ingress-allowip.yaml
ingress.extensions/web-ingress created

It can take a few minutes for the Ingress to be assigned an address. Because the Ingress uses the nginx class, the address it reports is the external IP of the NGINX controller's load balancer from Step 5. We check the status by using the following command.

$ kubectl get ingress
NAME          HOSTS   ADDRESS         PORTS   AGE
web-ingress   *       34.67.217.146   80      2m50s

When I open the load balancer's public IP in a browser on my local machine, I can access the application, because my machine’s IP is in the allowlist.

However, when I curl it from the Cloud Shell, which is a different machine, the request is rejected because the Cloud Shell's IP address is not in the allowlist.

$ curl -I 34.67.217.146
HTTP/1.1 403 Forbidden
Server: nginx/1.19.1
Date: Tue, 25 Aug 2020 17:08:21 GMT
Content-Type: text/html
Content-Length: 153
Connection: keep-alive

As the 403 Forbidden response shows, the Cloud Shell is blocked from accessing the application.
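The same check can be scripted, which is handy when verifying the allowlist from several machines. A minimal sketch, assuming `curl` is available; the function names are my own, and the mapping assumes the application answers allowed clients with 200, which this article does not show explicitly.

```shell
# Print only the HTTP status code returned by the given URL.
http_status() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# Translate a status code into what it means for the allowlist in this demo.
describe_access() {
  case "$1" in
    200) echo "allowed" ;;
    403) echo "blocked by allowlist" ;;
    *)   echo "unexpected status: $1" ;;
  esac
}

# Example:
#   describe_access "$(http_status http://34.67.217.146)"
```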

That’s it…we achieved what was needed. We secured our application by allowing only the desired IP address to access it.

Conclusion:

When we use NGINX or any third-party Ingress controller, it is important that the controller is security hardened. Security hardening can include adding SSL certificates, proper authentication, blocking or allowing ranges of IP addresses, enforcing strong ciphers, etc. Critical applications in production should not be exposed through an Ingress while the controller is in its default state. In this demo, we implemented one of these hardening features on the NGINX ingress controller. Suggestions are most welcome. See you soon in another use case implementation.
