用TensorFlow实现简单的AND和XOR函数,是一个很好的多层神经网络的例子。因为XOR函数无法用单层神经网络实现。

先上代码(此段代码计算XOR函数,AND函数只需修改OUTPUT_TRAIN的值即可)

#!/usr/bin/env python

import tensorflow as tf

import math

import numpy as np

INPUT_COUNT = 2

OUTPUT_COUNT = 2

HIDDEN_COUNT = 2

LEARNING_RATE = 0.1

MAX_STEPS = 5000

# For every training loop we are going to provide the same input and expected output data

INPUT_TRAIN = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])

OUTPUT_TRAIN = np.array([[1, 0], [0, 1], [0, 1], [1, 0]])

# Nodes are created in Tensorflow using placeholders. Placeholders are values that we will input when we ask Tensorflow to run a computation.

# Create inputs x consisting of a 2d tensor of floating point numbers

inputs_placeholder = tf.placeholder("float",

shape=[None, INPUT_COUNT])

labels_placeholder = tf.placeholder("float",

shape=[None, OUTPUT_COUNT])

# We need to create a python dictionary object with placeholders as keys and feed tensors as values

feed_dict = {

inputs_placeholder: INPUT_TRAIN,

labels_placeholder: OUTPUT_TRAIN,

}

# Define weights and biases from input layer to hidden layer

WEIGHT_HIDDEN = tf.Variable(tf.truncated_normal([INPUT_COUNT, HIDDEN_COUNT]))

BIAS_HIDDEN = tf.Variable(tf.zeros([HIDDEN_COUNT]))

# Define an activation function for the hidden layer. Here we are using the Sigmoid function, but you can use other activation functions offered by Tensorflow.

AF_HIDDEN = tf.nn.sigmoid(tf.matmul(inputs_placeholder, WEIGHT_HIDDEN) + BIAS_HIDDEN)

# Define weights and biases from hidden layer to output layer. The biases are initialized with tf.zeros to make sure they start with zero values.

WEIGHT_OUTPUT = tf.Variable(tf.truncated_normal([HIDDEN_COUNT, OUTPUT_COUNT]))

BIAS_OUTPUT = tf.Variable(tf.zeros([OUTPUT_COUNT]))

# With one line of code we can calculate the logits tensor that will contain the output that is returned

logits = tf.matmul(AF_HIDDEN, WEIGHT_OUTPUT) + BIAS_OUTPUT

# We then compute the softmax probabilities that are assigned to each class

y = tf.nn.softmax(logits)

# The tf.nn.softmax_cross_entropy_with_logits op is added to compare the output logits to expected output

#cross_entropy = tf.nn.softmax_cross_entropy_with_logits(logits, y)

cross_entropy = -tf.reduce_sum(labels_placeholder * tf.log(y))

# It then uses tf.reduce_mean to average the cross entropy values across the batch dimension as the total loss

loss = tf.reduce_mean(cross_entropy)

# Next, we instantiate a tf.train.GradientDescentOptimizer that applies gradients with the requested learning rate. Since Tensorflow has access to the entire computation graph, it can find the gradients of the cost of all the variables.

train_step = tf.train.GradientDescentOptimizer(LEARNING_RATE).minimize(loss)

# Next we create a tf.Session () to run the graph

init = tf.global_variables_initializer()

with tf.Session() as sess:

# Then we run the session

sess.run(init)

# The following code fetch two values [train_step, loss] in its run call. Because there are two values to fetch, sess.run() returns a tuple with two items. We also print the loss and outputs every 100 steps.

for step in range(MAX_STEPS):

loss_val = sess.run([train_step, loss], feed_dict)

if step % 100 == 0:

print ("Step:", step, "loss: ", loss_val)

for input_value in INPUT_TRAIN:

print (input_value, sess.run(y,

feed_dict={inputs_placeholder: [input_value]}))

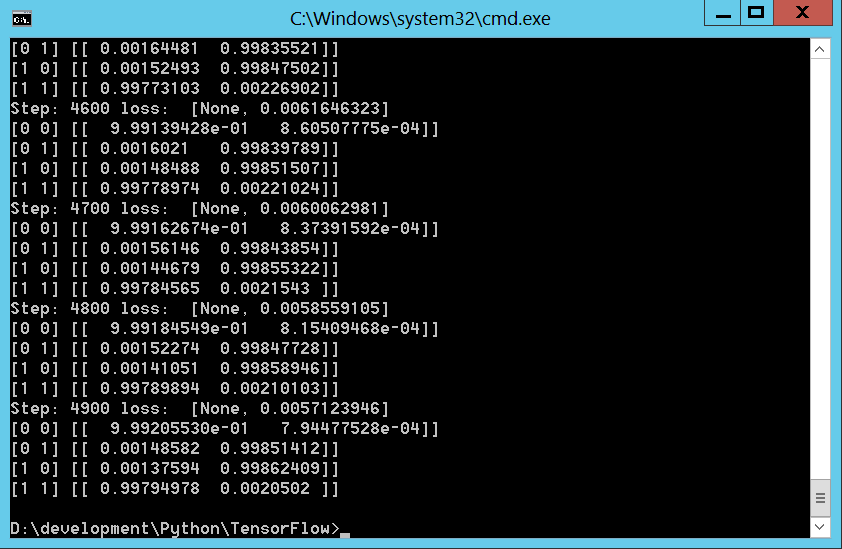

运行结果如下

虽然英文注释已经写得很清楚,不过接下来还是逐条说明一下。

import tensorflow as tf

import math

import numpy as np

INPUT_COUNT = 2

OUTPUT_COUNT = 2

HIDDEN_COUNT = 2

LEARNING_RATE = 0.1

MAX_STEPS = 5000

# For every training loop we are going to provide the same input and expected output data

INPUT_TRAIN = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])

OUTPUT_TRAIN = np.array([[1, 0], [0, 1], [0, 1], [1, 0]])这段代码导入了必要的库,并定义了基本的常数,比如输入变量的个数INPUT_COUNT,输出变量的个数OUTPUT_COUNT,隐藏层神经元个数HIDDEN_COUNT,学习速率LEARNING_RATE,以及最多训练的次数MAX_STEPS。值得一提的是训练集,对于XOR函数,有 XOR(0, 0) = 0, XOR(0, 1) = 1, XOR(1, 0) = 1, XOR(1, 1) = 0。那么训练集应该是输入集[[0,0], [0,1], [1,0], [1,1]],输出集[0, 1, 1, 0],为何此处定义看起来很奇怪?原因是tensorflow的输出默认使用了one hot encoding(独热码),类似前面MNIST例子中用到的那样,对每个状态做了区分。此处输出包含两个状态0和1,因此分别使用[1,0]和[0,1]代表(也可以反过来用[0,1]代表0,[1,0]代表1,只要一开始规定好就行)。关于one hot encoding的基本信息,可参阅如下网址或自行百度

http://baike.baidu.com/link?url=7Qw-sLrFRegdtYgJPpPXR687n-PwbpczZ7lmPwskwHi0YtUDHE4PLwNfTftnBT0ZIsq-glmxkqp01VDK4NTU6qU5Kwe_4p99wtjWwdLGa_A4EysH219Sh6oZjXDkNQ3s

对于AND函数,可以将输出训练集改为OUTPUT_TRAIN=np.array([[1, 0], [1, 0], [1, 0], [0, 1])。(或者OUTPUT_TRAIN=np.array([[0, 1], [0, 1], [0, 1], [1, 0]]),取决于如何定义,每次计算都可以独立定义。)

# Nodes are created in Tensorflow using placeholders. Placeholders are values that we will input when we ask Tensorflow to run a computation.

# Create inputs x consisting of a 2d tensor of floating point numbers

inputs_placeholder = tf.placeholder("float",

shape=[None, INPUT_COUNT])

labels_placeholder = tf.placeholder("float",

shape=[None, OUTPUT_COUNT])

# We need to create a python dictionary object with placeholders as keys and feed tensors as values

feed_dict = {

inputs_placeholder: INPUT_TRAIN,

labels_placeholder: OUTPUT_TRAIN,

}接下来这几句定义了输入和输出的占位符,并将这个占位符和前面定义的输入输出变量矩阵关联起来。feed_dict的定义纯粹是为了方便,也可以类似MNIST例子中那样直接放在session.run()中。

# Define weights and biases from input layer to hidden layer

WEIGHT_HIDDEN = tf.Variable(tf.truncated_normal([INPUT_COUNT, HIDDEN_COUNT]))

BIAS_HIDDEN = tf.Variable(tf.zeros([HIDDEN_COUNT]))

# Define an activation function for the hidden layer. Here we are using the Sigmoid function, but you can use other activation functions offered by Tensorflow.

AF_HIDDEN = tf.nn.sigmoid(tf.matmul(inputs_placeholder, WEIGHT_HIDDEN) + BIAS_HIDDEN)

# Define weights and biases from hidden layer to output layer. The biases are initialized with tf.zeros to make sure they start with zero values.

WEIGHT_OUTPUT = tf.Variable(tf.truncated_normal([HIDDEN_COUNT, OUTPUT_COUNT]))

BIAS_OUTPUT = tf.Variable(tf.zeros([OUTPUT_COUNT]))接下来定义了两层网络的权重WEIGHT_HIDDEN,WEIGHT_OUTPUT和偏置BIAS_HIDDEN,BIAS_OUTPUT。AF_HIDDEN是第一层的输出,用到了经典的sigmoid函数。

# With one line of code we can calculate the logits tensor that will contain the output that is returned

logits = tf.matmul(AF_HIDDEN, WEIGHT_OUTPUT) + BIAS_OUTPUT

# We then compute the softmax probabilities that are assigned to each class

y = tf.nn.softmax(logits)

# The tf.nn.softmax_cross_entropy_with_logits op is added to compare the output logits to expected output

#cross_entropy = tf.nn.softmax_cross_entropy_with_logits(logits, y)

cross_entropy = -tf.reduce_sum(labels_placeholder * tf.log(y))

# It then uses tf.reduce_mean to average the cross entropy values across the batch dimension as the total loss

loss = tf.reduce_mean(cross_entropy)与MNIST的例子类似,上面的几句定义了交叉熵(符号使用略有差异),之所以不使用tf.nn.softmax_cross_entropy_with_logits函数(注释掉的cross_entropy那句),是因为在此例中,和MNIST中不同,这个方法收敛性差。

# Next, we instantiate a tf.train.GradientDescentOptimizer that applies gradients with the requested learning rate. Since Tensorflow has access to the entire computation graph, it can find the gradients of the cost of all the variables.

train_step = tf.train.GradientDescentOptimizer(LEARNING_RATE).minimize(loss)

# Next we create a tf.Session () to run the graph

init = tf.global_variables_initializer()

with tf.Session() as sess:

# Then we run the session

sess.run(init)

# The following code fetch two values [train_step, loss] in its run call. Because there are two values to fetch, sess.run() returns a tuple with two items. We also print the loss and outputs every 100 steps.

for step in range(MAX_STEPS):

loss_val = sess.run([train_step, loss], feed_dict)

if step % 100 == 0:

print ("Step:", step, "loss: ", loss_val)

for input_value in INPUT_TRAIN:

print (input_value, sess.run(y,

feed_dict={inputs_placeholder: [input_value]}))最后定义了优化算法,然后初始化session,开始运行,并且每100次训练就给出一个训练结果。

MNIST的例子可参照如下地址:

https://my.oschina.net/propagator/blog/851912

3186

3186

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?