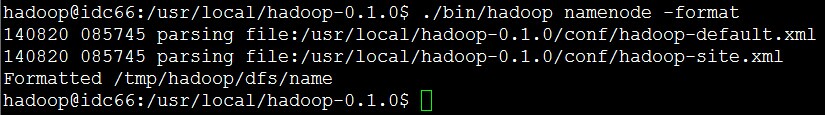

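Online videos and books all say the same thing: format HDFS before starting it for the first time. This section explains what happens behind the HDFS format command. Let's first look at the result of running it.

Now let's study how it works.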

=====================

First we need to look at how the ./bin/hadoop script runs.

THIS="$0"

while [ -h "$THIS" ]; do

ls=`ls -ld "$THIS"`

link=`expr "$ls" : '.*-> \(.*\)$'`

if expr "$link" : '.*/.*' > /dev/null; then

THIS="$link"

else

THIS=`dirname "$THIS"`/"$link"

fi

done

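This loop checks whether ./bin/hadoop is a symbolic link and, if so, follows the link to the real file. Here it is not a symbolic link, so this code needs little attention.

The value of $0 is ./bin/hadoop

------------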
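To see what the symlink loop does when the script really is a link, here is a minimal sketch. The temporary directory layout and the `resolve` function name are assumptions made for the demo; only the loop body comes from the script.

```shell
# Hypothetical layout: a real file plus a symlink that points to it.
tmp=$(mktemp -d)
mkdir "$tmp/real" "$tmp/links"
touch "$tmp/real/hadoop"
ln -s "$tmp/real/hadoop" "$tmp/links/hadoop"

# Same loop as in bin/hadoop, wrapped in a function for the demo.
resolve() {
  THIS="$1"
  while [ -h "$THIS" ]; do
    ls=$(ls -ld "$THIS")                     # e.g. "lrwxrwxrwx ... link -> target"
    link=$(expr "$ls" : '.*-> \(.*\)$')      # keep only the text after "-> "
    if expr "$link" : '.*/.*' > /dev/null; then
      THIS="$link"                           # target contains a slash: use it as is
    else
      THIS=$(dirname "$THIS")/"$link"        # bare name: resolve next to the link
    fi
  done
  echo "$THIS"
}

resolved=$(resolve "$tmp/links/hadoop")
echo "$resolved"
rm -rf "$tmp"
```

When the argument is not a link, the loop body never runs and the path comes back unchanged, which is the case described in this walkthrough.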

# if no args specified, show usage

if [ $# = 0 ]; then

echo "Usage: hadoop COMMAND"

echo "where COMMAND is one of:"

echo " namenode -format format the DFS filesystem"

echo " namenode run the DFS namenode"

echo " datanode run a DFS datanode"

echo " dfs run a DFS admin client"

echo " fsck run a DFS filesystem checking utility"

echo " jobtracker run the MapReduce job Tracker node"

echo " tasktracker run a MapReduce task Tracker node"

echo " job manipulate MapReduce jobs"

echo " jar <jar> run a jar file"

echo " or"

echo " CLASSNAME run the class named CLASSNAME"

echo "Most commands print help when invoked w/o parameters."

exit 1

fi

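Next the script checks how many arguments follow ./bin/hadoop. If there are none, the user presumably does not yet know how to use the command, so the script prints the usage text and exits.

Simple!

---------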
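The argument-count check can be tried in isolation. This is a small sketch, assuming a hypothetical `check_args` function that stands in for the top of the script and returns instead of exiting.

```shell
# Hypothetical stand-in for the usage check at the top of bin/hadoop.
check_args() {
  if [ $# = 0 ]; then
    echo "Usage: hadoop COMMAND"
    return 1          # the real script calls "exit 1" here
  fi
  echo "COMMAND is $1"
}

no_args_status=0
no_args_output=$(check_args) || no_args_status=$?
with_args_output=$(check_args namenode -format)

echo "$no_args_output (status $no_args_status)"
echo "$with_args_output"
```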

# get arguments

COMMAND=$1

shift

This reads the arguments. If you run ./bin/hadoop namenode -format, COMMAND is namenode, and after shift runs, $1 becomes -format.
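The effect of shift is easy to confirm with `set --`, which simulates the command line inside a plain shell session. The variable names `first_remaining` and `remaining_count` are made up for this sketch.

```shell
# Simulate: ./bin/hadoop namenode -format
set -- namenode -format

COMMAND=$1
shift                      # drop "namenode"; the rest moves down one position

first_remaining=$1         # what $1 holds after the shift
remaining_count=$#         # how many arguments are left

echo "COMMAND=$COMMAND \$1=$first_remaining count=$remaining_count"
```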

----------

# some directories

THIS_DIR=`dirname "$THIS"`

HADOOP_HOME=`cd "$THIS_DIR/.." ; pwd`

Next, two variables are set. Their values are, respectively:

./bin

/usr/local/hadoop-0.1.0

---
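The two assignments can be reproduced in a scratch directory. In this sketch the directory name `hadoop-demo` is an assumption standing in for the real install path.

```shell
# Hypothetical install tree: <tmp>/hadoop-demo/bin
tmp=$(mktemp -d)
mkdir -p "$tmp/hadoop-demo/bin"
cd "$tmp/hadoop-demo" || exit 1

THIS=./bin/hadoop                          # what $0 holds when run from HADOOP_HOME
THIS_DIR=$(dirname "$THIS")                # strips the last path component
HADOOP_HOME=$(cd "$THIS_DIR/.." ; pwd)     # cd runs in a subshell, so the caller's
                                           # working directory does not change

echo "THIS_DIR=$THIS_DIR"
echo "HADOOP_HOME=$HADOOP_HOME"

cd - > /dev/null
rm -rf "$tmp"
```

The `cd ... ; pwd` pair is what turns the relative `./bin/..` into an absolute path.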

# Allow alternate conf dir location.

HADOOP_CONF_DIR="${HADOOP_CONF_DIR:-$HADOOP_HOME/conf}"

Next the script sets HADOOP_CONF_DIR. Its value here is the default under HADOOP_HOME:

/usr/local/hadoop-0.1.0/conf

=============
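The `${VAR:-default}` expansion keeps an existing value and falls back to the default only when the variable is unset or empty. A short sketch of both cases follows; `/etc/hadoop-demo` is a made-up override path.

```shell
HADOOP_HOME=/usr/local/hadoop-0.1.0

# Case 1: nothing set beforehand, so the default is used.
unset HADOOP_CONF_DIR
HADOOP_CONF_DIR="${HADOOP_CONF_DIR:-$HADOOP_HOME/conf}"
default_case=$HADOOP_CONF_DIR

# Case 2: a value is already present (hypothetical path), so it is kept.
HADOOP_CONF_DIR=/etc/hadoop-demo
HADOOP_CONF_DIR="${HADOOP_CONF_DIR:-$HADOOP_HOME/conf}"
override_case=$HADOOP_CONF_DIR

echo "default:  $default_case"
echo "override: $override_case"
```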

if [ -f "${HADOOP_CONF_DIR}/hadoop-env.sh" ]; then

source "${HADOOP_CONF_DIR}/hadoop-env.sh"

fi

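This imports the environment variables defined in hadoop-env.sh. So if you need to change the environment later, you can edit hadoop-env.sh instead of the hadoop script itself, which is convenient.

Note: the only line I added to hadoop-env.sh is

export JAVA_HOME=/usr/lib/jvm/jdk1.7.0_21

===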
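The sourcing step can be tried against a throwaway conf directory. This sketch assumes a temporary `conf` folder and writes the single export line mentioned above into it; it uses `.`, the portable spelling of `source`.

```shell
# Hypothetical conf directory containing a one-line hadoop-env.sh.
tmp=$(mktemp -d)
HADOOP_CONF_DIR="$tmp/conf"
mkdir -p "$HADOOP_CONF_DIR"
echo 'export JAVA_HOME=/usr/lib/jvm/jdk1.7.0_21' > "$HADOOP_CONF_DIR/hadoop-env.sh"

unset JAVA_HOME
if [ -f "${HADOOP_CONF_DIR}/hadoop-env.sh" ]; then
  . "${HADOOP_CONF_DIR}/hadoop-env.sh"     # runs in the current shell, so the
fi                                         # variable survives after the file ends

sourced_java_home=$JAVA_HOME
echo "JAVA_HOME=$sourced_java_home"
rm -rf "$tmp"
```

Because the file is sourced rather than executed, its variables land in the calling script's environment.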

# some Java parameters

if [ "$JAVA_HOME" != "" ]; then

#echo "run java in $JAVA_HOME"

JAVA_HOME=$JAVA_HOME

fi

This assigns JAVA_HOME to itself, so the value is unchanged:

/usr/lib/jvm/jdk1.7.0_21

==========

if [ "$JAVA_HOME" = "" ]; then

echo "Error: JAVA_HOME is not set."

exit 1

fi

This verifies that JAVA_HOME is configured.

=======

JAVA=$JAVA_HOME/bin/java

JAVA_HEAP_MAX=-Xmx1000m

This sets two variables, whose values are:

/usr/lib/jvm/jdk1.7.0_21/bin/java

-Xmx1000m

---

# check envvars which might override default args

if [ "$HADOOP_HEAPSIZE" != "" ]; then

#echo "run with heapsize $HADOOP_HEAPSIZE"

JAVA_HEAP_MAX="-Xmx""$HADOOP_HEAPSIZE""m"

#echo $JAVA_HEAP_MAX

fi

Here, echo shows that $HADOOP_HEAPSIZE is empty, so this block is not executed either.

===
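For comparison, here is what the heap flag looks like when HADOOP_HEAPSIZE is set. The `heap_flag` function and the value 2000 are assumptions for the sketch; the two assignments inside it are the ones from the script.

```shell
# Hypothetical wrapper: $1 plays the role of HADOOP_HEAPSIZE.
heap_flag() {
  JAVA_HEAP_MAX=-Xmx1000m                  # default from the script
  if [ "$1" != "" ]; then
    JAVA_HEAP_MAX="-Xmx""$1""m"            # adjacent strings concatenate
  fi
  echo "$JAVA_HEAP_MAX"
}

default_flag=$(heap_flag "")
custom_flag=$(heap_flag 2000)

echo "unset: $default_flag"
echo "2000:  $custom_flag"
```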

# CLASSPATH initially contains $HADOOP_CONF_DIR

CLASSPATH="${HADOOP_CONF_DIR}"

CLASSPATH=${CLASSPATH}:$JAVA_HOME/lib/tools.jar

At this point the value of CLASSPATH is

/usr/local/hadoop-0.1.0/conf:/usr/lib/jvm/jdk1.7.0_21/lib/tools.jar

# for developers, add Hadoop classes to CLASSPATH

if [ -d "$HADOOP_HOME/build/classes" ]; then

CLASSPATH=${CLASSPATH}:$HADOOP_HOME/build/classes

fi

if [ -d "$HADOOP_HOME/build/webapps" ]; then

CLASSPATH=${CLASSPATH}:$HADOOP_HOME/build

fi

if [ -d "$HADOOP_HOME/build/test/classes" ]; then

CLASSPATH=${CLASSPATH}:$HADOOP_HOME/build/test/classes

fi

After these additions, the value of CLASSPATH is

/usr/local/hadoop-0.1.0/conf

:

/usr/lib/jvm/jdk1.7.0_21/lib/tools.jar

:

/usr/local/hadoop-0.1.0/build/classes

:

/usr/local/hadoop-0.1.0/build

:

/usr/local/hadoop-0.1.0/build/test/classes

This pulls in all the classes from the build directories.

===

# so that filenames w/ spaces are handled correctly in loops below

IFS=

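This one is simple: with IFS set to empty, the shell does no word splitting on the unquoted $HADOOP_HOME expansions in the loops below.

---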
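The effect of the empty IFS shows up as soon as the install path contains a space. This is a sketch under that assumption; the path `hadoop demo` and the `count_jars` helper are invented for the demo.

```shell
# Hypothetical install path with a space in it, holding two jar files.
tmp=$(mktemp -d)
HADOOP_HOME="$tmp/hadoop demo"
mkdir -p "$HADOOP_HOME/lib"
touch "$HADOOP_HOME/lib/a.jar" "$HADOOP_HOME/lib/b.jar"

# Count how many loop items are real files, using the same unquoted
# glob form as the script.
count_jars() {
  n=0
  for f in $HADOOP_HOME/lib/*.jar; do
    if [ -f "$f" ]; then n=$((n + 1)); fi
  done
  echo "$n"
}

with_default_ifs=$(count_jars)             # path is split at the space, glob misses
with_empty_ifs=$(IFS=; count_jars)         # no splitting, glob finds both jars

echo "default IFS: $with_default_ifs jars, empty IFS: $with_empty_ifs jars"
rm -rf "$tmp"
```

Quoting the variable part (`"$HADOOP_HOME"/lib/*.jar`) would be another way to get the same result without touching IFS.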

# for releases, add hadoop jars & webapps to CLASSPATH

if [ -d "$HADOOP_HOME/webapps" ]; then

CLASSPATH=${CLASSPATH}:$HADOOP_HOME

fi

for f in $HADOOP_HOME/hadoop-*.jar; do

CLASSPATH=${CLASSPATH}:$f;

done

# add libs to CLASSPATH

for f in $HADOOP_HOME/lib/*.jar; do

CLASSPATH=${CLASSPATH}:$f;

done

for f in $HADOOP_HOME/lib/jetty-ext/*.jar; do

CLASSPATH=${CLASSPATH}:$f;

done

The final value of CLASSPATH is

/usr/local/hadoop-0.1.0/conf

:

/usr/lib/jvm/jdk1.7.0_21/lib/tools.jar

:

/usr/local/hadoop-0.1.0/build/classes

:

/usr/local/hadoop-0.1.0/build

:

/usr/local/hadoop-0.1.0/build/test/classes

:

/usr/local/hadoop-0.1.0

:

/usr/local/hadoop-0.1.0/hadoop-0.1.0-examples.jar

:

/usr/local/hadoop-0.1.0/hadoop-0.1.0.jar

:

/usr/local/hadoop-0.1.0/lib/commons-logging-api-1.0.4.jar

:

/usr/local/hadoop-0.1.0/lib/jetty-5.1.4.jar

:

/usr/local/hadoop-0.1.0/lib/junit-3.8.1.jar

:

/usr/local/hadoop-0.1.0/lib/lucene-core-1.9.1.jar

:

/usr/local/hadoop-0.1.0/lib/servlet-api.jar

:

/usr/local/hadoop-0.1.0/lib/jetty-ext/ant.jar

:

/usr/local/hadoop-0.1.0/lib/jetty-ext/commons-el.jar

:

/usr/local/hadoop-0.1.0/lib/jetty-ext/jasper-compiler.jar

:

/usr/local/hadoop-0.1.0/lib/jetty-ext/jasper-runtime.jar

:

/usr/local/hadoop-0.1.0/lib/jetty-ext/jsp-api.jar

===
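A long classpath is easier to inspect one entry per line. One way to print it like that, sketched here with a shortened three-entry value rather than the full list:

```shell
# Shortened example value; the real CLASSPATH has many more entries.
CLASSPATH=/usr/local/hadoop-0.1.0/conf:/usr/lib/jvm/jdk1.7.0_21/lib/tools.jar:/usr/local/hadoop-0.1.0/hadoop-0.1.0.jar

entries=$(echo "$CLASSPATH" | tr ':' '\n')             # one entry per line
entry_count=$(echo "$entries" | wc -l | tr -d ' ')     # strip padding some wc add

echo "$entries"
echo "entries: $entry_count"
```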

# figure out which class to run

if [ "$COMMAND" = "namenode" ] ; then

CLASS='org.apache.hadoop.dfs.NameNode'

elif [ "$COMMAND" = "datanode" ] ; then

CLASS='org.apache.hadoop.dfs.DataNode'

elif [ "$COMMAND" = "dfs" ] ; then

CLASS=org.apache.hadoop.dfs.DFSShell

elif [ "$COMMAND" = "fsck" ] ; then

CLASS=org.apache.hadoop.dfs.DFSck

elif [ "$COMMAND" = "jobtracker" ] ; then

CLASS=org.apache.hadoop.mapred.JobTracker

elif [ "$COMMAND" = "tasktracker" ] ; then

CLASS=org.apache.hadoop.mapred.TaskTracker

elif [ "$COMMAND" = "job" ] ; then

CLASS=org.apache.hadoop.mapred.JobClient

elif [ "$COMMAND" = "jar" ] ; then

JAR="$1"

shift

CLASS=`"$0" org.apache.hadoop.util.PrintJarMainClass "$JAR"`

if [ $? != 0 ]; then

echo "Error: Could not find main class in jar file $JAR"

exit 1

fi

CLASSPATH=${CLASSPATH}:${JAR}

else

CLASS=$COMMAND

fi

Clearly, when running ./bin/hadoop namenode -format, CLASS is

org.apache.hadoop.dfs.NameNode

===
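The command-to-class mapping can be exercised on its own. The sketch below rewrites the if/elif chain as a `case` statement inside a hypothetical `class_for` function; that is a stylistic substitute, not the script's own form, and the `jar` branch is left out because it needs a real jar file.

```shell
# Hypothetical helper: same mapping as the script, minus the "jar" branch.
class_for() {
  case "$1" in
    namenode)    echo org.apache.hadoop.dfs.NameNode ;;
    datanode)    echo org.apache.hadoop.dfs.DataNode ;;
    dfs)         echo org.apache.hadoop.dfs.DFSShell ;;
    fsck)        echo org.apache.hadoop.dfs.DFSck ;;
    jobtracker)  echo org.apache.hadoop.mapred.JobTracker ;;
    tasktracker) echo org.apache.hadoop.mapred.TaskTracker ;;
    job)         echo org.apache.hadoop.mapred.JobClient ;;
    *)           echo "$1" ;;               # anything else is taken as a class name
  esac
}

namenode_class=$(class_for namenode)
custom_class=$(class_for com.example.Demo)   # made-up class name

echo "$namenode_class"
echo "$custom_class"
```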

# cygwin path translation

if expr `uname` : 'CYGWIN*' > /dev/null; then

CLASSPATH=`cygpath -p -w "$CLASSPATH"`

fi

I am running on Linux, so this can be ignored.

---

Finally, the command is executed!
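The Cygwin test relies on `expr STRING : REGEX`, which matches from the start of the string and returns a non-zero status when nothing matches. A small sketch with sample `uname` outputs; the `is_cygwin` name and the sample strings are assumptions.

```shell
# Hypothetical wrapper: takes the uname output as an argument so both
# branches can be tried on any machine.
is_cygwin() {
  expr "$1" : 'CYGWIN*' > /dev/null
}

if is_cygwin "CYGWIN_NT-10.0"; then cygwin_result=yes; else cygwin_result=no; fi
if is_cygwin "Linux"; then linux_result=yes; else linux_result=no; fi

echo "CYGWIN_NT-10.0: $cygwin_result"
echo "Linux:          $linux_result"
```

As a regular expression, `CYGWIN*` means "CYGWI followed by any number of N", which still works as a prefix test because the match is anchored at the start.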

# run it

exec "$JAVA" $JAVA_HEAP_MAX $HADOOP_OPTS -classpath "$CLASSPATH" $CLASS "$@"

The relevant values are as follows:

/usr/lib/jvm/jdk1.7.0_21/bin/java

-Xmx1000m

-classpath

/usr/local/hadoop-0.1.0/conf

:

/usr/lib/jvm/jdk1.7.0_21/lib/tools.jar

:

/usr/local/hadoop-0.1.0/build/classes

:

/usr/local/hadoop-0.1.0/build

:

/usr/local/hadoop-0.1.0/build/test/classes

:

/usr/local/hadoop-0.1.0

:

/usr/local/hadoop-0.1.0/hadoop-0.1.0-examples.jar

:

/usr/local/hadoop-0.1.0/hadoop-0.1.0.jar

:

/usr/local/hadoop-0.1.0/lib/commons-logging-api-1.0.4.jar

:

/usr/local/hadoop-0.1.0/lib/jetty-5.1.4.jar

:

/usr/local/hadoop-0.1.0/lib/junit-3.8.1.jar

:

/usr/local/hadoop-0.1.0/lib/lucene-core-1.9.1.jar

:

/usr/local/hadoop-0.1.0/lib/servlet-api.jar

:

/usr/local/hadoop-0.1.0/lib/jetty-ext/ant.jar

:

/usr/local/hadoop-0.1.0/lib/jetty-ext/commons-el.jar

:

/usr/local/hadoop-0.1.0/lib/jetty-ext/jasper-compiler.jar

:

/usr/local/hadoop-0.1.0/lib/jetty-ext/jasper-runtime.jar

:

/usr/local/hadoop-0.1.0/lib/jetty-ext/jsp-api.jar

org.apache.hadoop.dfs.NameNode

-format

And with that, it runs!

Simple!
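To check what the final line expands to without actually launching a JVM, the `exec` can be swapped for `echo`. This sketch uses a shortened CLASSPATH and hard-coded values taken from the walkthrough.

```shell
JAVA=/usr/lib/jvm/jdk1.7.0_21/bin/java
JAVA_HEAP_MAX=-Xmx1000m
HADOOP_OPTS=                                # empty here, so it vanishes when unquoted
CLASSPATH=/usr/local/hadoop-0.1.0/conf:/usr/local/hadoop-0.1.0/hadoop-0.1.0.jar  # shortened
CLASS=org.apache.hadoop.dfs.NameNode
set -- -format                              # arguments left after the earlier shift

# echo instead of exec: print the command line rather than run it.
cmdline=$(echo "$JAVA" $JAVA_HEAP_MAX $HADOOP_OPTS -classpath "$CLASSPATH" $CLASS "$@")
echo "$cmdline"
```

With `exec`, the shell process is replaced by the Java process, so nothing after that line in the script would run.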
