Introduction: see Artificial Intelligence: A Modern Approach, Chapter 21, Reinforcement Learning, p. 703.

The softmax function is a generalization of the logistic function to multiple variables.
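To make the relationship concrete, here is a minimal sketch in plain Python (not the MATLAB used by the exercise). The function names `softmax` and `logistic` are chosen for illustration only. With two classes and scores `[z, 0]`, the first softmax output equals the logistic function of `z`.

```python
import math

def softmax(scores):
    """Return softmax probabilities for a list of real-valued scores."""
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def logistic(z):
    """Standard logistic (sigmoid) function."""
    return 1.0 / (1.0 + math.exp(-z))

# Two-class case: e^z / (e^z + e^0) is the same value as 1 / (1 + e^-z).
z = 1.5
two_class = softmax([z, 0.0])
```

The outputs always sum to 1, which is what lets them be read as class probabilities.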

Why use softmax: backpropagation and the parameter update are simple, direct, and intuitive.

Exercise: Softmax Regression

Softmax regression

In this problem set, you will use softmax regression to classify MNIST images. The goal of this exercise is to build a softmax classifier that you will be able to reuse in the future exercises and also on other classification problems that you might encounter.


In the file softmax_exercise.zip, we have provided some starter code. You should write your code in the places indicated by "YOUR CODE HERE" in the files.

In the starter code, you will need to modify softmaxCost.m and softmaxPredict.m for this exercise.

We have also provided softmaxExercise.m that will help walk you through the steps in this exercise.

The code download link provides some starter preprocessing code.

Dependencies

The following additional files are required for this exercise:

You will also need:

computeNumericalGradient.m from Exercise: Sparse Autoencoder

If you have not completed the exercises listed above, we strongly suggest you complete them first.

Step 0: Initialize constants and parameters

We've provided the code for this step in softmaxExercise.m.

Two constants, inputSize and numClasses, corresponding to the size of each input vector and the number of class labels, have been defined in the starter code. This will allow you to reuse your code on a different data set in a later exercise. We also initialize lambda, the weight decay parameter, here.

Step 1: Load data

The starter code loads the MNIST images and labels into inputData and labels respectively. The images are pre-processed to scale the pixel values to the range [0,1], and the label 0 is remapped to 10 for convenience of implementation, so that the labels take values in $\{1, \ldots, 10\}$. You will not need to change any code in this step for this exercise, but note that your code should be general enough to operate on data of arbitrary size belonging to any number of classes.
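The label remapping can be illustrated with a short sketch in plain Python. The helper `remap_labels` is hypothetical and is not part of the starter code, which already performs this step in MATLAB. The remapping is presumably convenient because MATLAB indices start at 1, so a label can be used directly as a row index.

```python
def remap_labels(labels, num_classes=10):
    """Map label 0 to num_classes so that labels fall in 1..num_classes."""
    return [num_classes if y == 0 else y for y in labels]

raw = [0, 1, 9, 0, 5]
remapped = remap_labels(raw)  # every 0 becomes 10, other labels are unchanged
```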

Step 2: Implement softmaxCost (the softmax regression cost function)

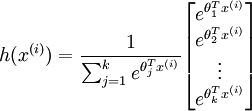

In softmaxCost.m, implement code to compute the softmax cost function J(θ). Remember to include the weight decay term in the cost as well. Your code should also compute the appropriate gradients, as well as the predictions for the input data (which will be used in the cross-validation step later).
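As a rough illustration of what such a function computes, here is a sketch in plain Python rather than MATLAB. It assumes the usual form of the cost: the average negative log-probability of the correct class plus a weight decay term (lambda / 2) times the sum of squared parameters. The name `softmax_cost` and its argument layout are assumptions for this sketch, and it uses explicit loops for readability instead of the vectorized form the exercise asks for.

```python
import math

def softmax_cost(theta, data, labels, lam):
    """Return (cost, grad) for softmax regression with weight decay.

    theta  : list of num_classes rows, each of length input_size
    data   : list of m examples, each of length input_size
    labels : list of m labels in 1..num_classes
    lam    : weight decay strength (assumed to scale 0.5 * sum(theta^2))
    """
    m, k, n = len(data), len(theta), len(theta[0])
    cost = 0.0
    grad = [[0.0] * n for _ in range(k)]
    for x, y in zip(data, labels):
        # Class scores theta_j . x, shifted by their maximum for numerical safety.
        scores = [sum(t * xi for t, xi in zip(row, x)) for row in theta]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        probs = [e / total for e in exps]
        cost -= math.log(probs[y - 1])
        for j in range(k):
            # Predicted probability minus the 0/1 indicator for the true class.
            diff = probs[j] - (1.0 if j == y - 1 else 0.0)
            for d in range(n):
                grad[j][d] += diff * x[d]
    cost = cost / m + 0.5 * lam * sum(t * t for row in theta for t in row)
    grad = [[g / m + lam * t for g, t in zip(g_row, t_row)]
            for g_row, t_row in zip(grad, theta)]
    return cost, grad
```

A finite-difference check, in the spirit of computeNumericalGradient.m, is a reasonable way to confirm that the returned gradient matches the cost.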

It is important to vectorize your code so that it runs quickly. We also provide several implementation tips below:

Note: In the provided starter code, theta is a matrix where the jth row is $\theta_j^T$, the parameter vector for class j.

Implementation Tip: Computing the ground truth matrix - In your code, you may need to compute the ground truth matrix M, such that M(r, c) is 1 if y(c) = r and 0 otherwise. This can be done quickly, without a loop, using the MATLAB functions sparse and full. Specifically, the command M = sparse(r, c, v) creates a sparse matrix such that M(r(i), c(i)) = v(i) for all i. That is, the vectors r and c give the positions of the elements whose values we wish to set, and v the corresponding values of the elements. Running full on a sparse matrix gives a "full" representation of the matrix for use (meaning that MATLAB will no longer try to represent it as a sparse matrix in memory). The code for using sparse and full to compute the ground truth matrix has already been included in softmaxCost.m.
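To show the layout of the ground truth matrix, here is a small sketch in plain Python. The helper `ground_truth_matrix` is hypothetical; unlike the MATLAB sparse/full approach described above, it uses an explicit loop, and its indices are 0-based, so row r holds class r + 1.

```python
def ground_truth_matrix(labels, num_classes):
    """Build M with M[r][c] = 1 if labels[c] == r + 1, else 0.

    Rows correspond to classes and columns to examples.
    """
    m = [[0] * len(labels) for _ in range(num_classes)]
    for c, y in enumerate(labels):
        m[y - 1][c] = 1
    return m

# Four examples with labels 2, 1, 3, 2 and three classes.
M = ground_truth_matrix([2, 1, 3, 2], 3)
```

Each column contains exactly one 1, in the row of that example's class.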

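Implementation Tip: Preventing overflows - in softmax regression, you will have to compute the hypothesis

$$h(x^{(i)}) = \frac{1}{\sum_{j=1}^{k} e^{\theta_j^T x^{(i)}}} \begin{bmatrix} e^{\theta_1^T x^{(i)}} \\ \vdots \\ e^{\theta_k^T x^{(i)}} \end{bmatrix}$$

where $k$ is the number of classes. When the products $\theta_j^T x^{(i)}$ are large, the exponential function $e^{\theta_j^T x^{(i)}}$ will become very large and possibly overflow. When this happens, you will not be able to compute your hypothesis. However, there is an easy solution - observe that we can multiply the top and bottom of the hypothesis by some constant without changing the output:

$$h(x^{(i)}) = \frac{e^{-\alpha}}{e^{-\alpha} \sum_{j=1}^{k} e^{\theta_j^T x^{(i)}}} \begin{bmatrix} e^{\theta_1^T x^{(i)}} \\ \vdots \\ e^{\theta_k^T x^{(i)}} \end{bmatrix} = \frac{1}{\sum_{j=1}^{k} e^{\theta_j^T x^{(i)} - \alpha}} \begin{bmatrix} e^{\theta_1^T x^{(i)} - \alpha} \\ \vdots \\ e^{\theta_k^T x^{(i)} - \alpha} \end{bmatrix}$$

Hence, to prevent overflow, simply subtract some large constant value $\alpha$ from each of the $\theta_j^T x^{(i)}$ terms before computing the exponential. In practice, for each example, you can use the maximum of the $\theta_j^T x^{(i)}$ terms as the constant.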
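The overflow tip can be tried out with a short sketch in plain Python (not the exercise's MATLAB). The name `stable_softmax` is chosen for illustration. It subtracts the maximum score before exponentiating, so the largest exponent is 0 and the result is unchanged up to rounding.

```python
import math

def stable_softmax(scores):
    """Softmax that subtracts the maximum score before exponentiating."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Exponentiating a score of 1000 directly overflows a double in Python.
try:
    math.exp(1000.0)
    naive_overflows = False
except OverflowError:
    naive_overflows = True

# The shifted version handles the same scores without trouble.
big = [1000.0, 1001.0, 1002.0]
probs = stable_softmax(big)
```

Shifting every score by the same constant leaves the probabilities unchanged, so `stable_softmax([0.0, 1.0, 2.0])` gives the same result as the call above.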
