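Approach:

1. Extract the horizontal lines.

2. Extract the vertical lines.

3. Get the intersection points and remove the false ones. When two intersection coordinates are less than 10 px apart, keep only one of them (the code keeps the last, i.e. largest, value of each run). This gives the table's rows and columns.

4. Run PaddleOCR on each cell to extract its text.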
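Step 3 boils down to collapsing runs of nearby pixel coordinates into one position per ruling line. A minimal NumPy sketch, assuming the same 10 px gap as the script below; `merge_close` is a hypothetical name, and like the script it keeps the largest value of each run:

```python
import numpy as np


def merge_close(coords, gap=10):
    """Collapse pixel coordinates into one value per ruling line.

    Neighbouring values at most `gap` px apart belong to the same line;
    the last (largest) value of each run is kept.
    """
    values = np.unique(np.asarray(coords))  # sorted, duplicates removed
    if values.size == 0:
        return []
    kept = [int(a) for a, b in zip(values[:-1], values[1:]) if b - a > gap]
    kept.append(int(values[-1]))  # the final run has no successor, so close it here
    return kept


# x-coordinates of intersection pixels on three vertical rules, each a few px thick
print(merge_close([10, 11, 12, 50, 51, 90, 91]))  # [12, 51, 91]
```

The gap has to stay below the smallest cell width (for x) or height (for y), otherwise two real rules get merged into one.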

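Compared with the code in the original article, two things were changed:

1. pytesseract's recognition accuracy was low, so PaddleOCR replaces pytesseract.

2. Some detected intersections are not real intersections. False x/y coordinates are dropped by counting the pixels on that row (or column).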
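Change 2 can be illustrated on a toy intersection mask. A coordinate is kept only if enough intersection pixels lie within a few rows of it. The ±4 px band and the threshold of 5 mirror the script below. `on_ruling_line` and the mask contents are hypothetical:

```python
import numpy as np


def on_ruling_line(mask, y, half=4, min_pixels=5):
    """True if rows y-half..y+half of `mask` hold at least `min_pixels` nonzero pixels."""
    band = mask[max(y - half, 0):y + half + 1, :]
    return int(np.count_nonzero(band)) >= min_pixels


mask = np.zeros((100, 100), dtype=np.uint8)
mask[20:22, [10, 40, 70]] = 255  # a real rule: three 2x1-pixel intersection blobs at y = 20..21
mask[60, 33] = 255               # a stray pixel left by noise or text
print(on_ruling_line(mask, 21), on_ruling_line(mask, 60))  # True False
```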

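```python
import cv2
import numpy as np
import pandas as pd
# import pytesseract  # replaced by PaddleOCR
# import re
from paddleocr import PaddleOCR

src = 'image.png'
raw = cv2.imread(src, cv2.IMREAD_COLOR)
if raw is None:
    raise FileNotFoundError(src)

# Grayscale image
gray = cv2.cvtColor(raw, cv2.COLOR_BGR2GRAY)

# Binarize so the result is white lines/text on a black background
binary = cv2.adaptiveThreshold(~gray, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY, 35, -5)
# src: the grayscale image (inverted with ~ so lines and text become bright)
# maxValue: value given to pixels that pass the threshold
# adaptiveMethod: ADAPTIVE_THRESH_MEAN_C or ADAPTIVE_THRESH_GAUSSIAN_C
# thresholdType: THRESH_BINARY or THRESH_BINARY_INV
# blockSize: size of the neighbourhood used for each local threshold; must be odd
# C: constant subtracted from the local (weighted) mean to get the final threshold; may be negative
# dst: optional output image

# Show the image
# cv2.imshow("binary_picture", binary)
# cv2.waitKey()
```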

```python
def recognize_bgkx(binary):
    rows, cols = binary.shape

    # Kernel sizes adapt to the image size
    # Horizontal lines: the smaller the scale, the longer the kernel and the fewer lines survive (40 is another option)
    scale = 30
    kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (cols // scale, 1))  # rectangular kernel
    eroded = cv2.erode(binary, kernel, iterations=1)  # erosion
    dilated_col = cv2.dilate(eroded, kernel, iterations=1)  # dilation
    # cv2.imshow("excel_horizontal_line", dilated_col)
    # cv2.waitKey()

    # Vertical lines:
    scale = 20
    kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (1, rows // scale))
    eroded = cv2.erode(binary, kernel, iterations=1)
    dilated_row = cv2.dilate(eroded, kernel, iterations=1)
    # cv2.imshow("excel_vertical_line", dilated_row)
    # cv2.waitKey()

    # AND the two line masks: only the intersections remain
    bitwise_and = cv2.bitwise_and(dilated_col, dilated_row)
    # cv2.imshow("excel_bitwise_and", bitwise_and)
    # cv2.waitKey()

    # Optional debug views: the full table outline, and the image with the ruling lines subtracted
    # merge = cv2.add(dilated_col, dilated_row)
    # merge2 = cv2.subtract(binary, merge)
    # new_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (2, 2))
    # erode_image = cv2.morphologyEx(merge2, cv2.MORPH_OPEN, new_kernel)
    # merge3 = cv2.add(erode_image, bitwise_and)

    # Coordinates of the intersection pixels
    ys, xs = np.where(bitwise_and > 0)

    # Sort and merge nearby coordinates, keeping the last value of each run.
    # The 10 px gap is not fixed: tune it per image; it must stay below the
    # smallest cell height (y jumps) and width (x jumps).
    def merge_close(values, gap=10):
        values = np.unique(values)  # sorted, duplicates removed
        merged = [int(a) for a, b in zip(values[:-1], values[1:]) if b - a > gap]
        merged.append(int(values[-1]))  # the last point closes the final run
        return merged

    x_point_arr = merge_close(xs)
    y_point_arr = merge_close(ys)

    # Drop coordinates that are not on a ruling line: count the intersection pixels
    # within +-4 px of the candidate row/column; fewer than 5 means a false point
    for y in list(y_point_arr):
        y_dot_num = int(np.count_nonzero(bitwise_and[max(y - 4, 0):y + 5, :]))
        if y_dot_num < 5:
            print('y = %s is not on a ruling line, dropped; its row band has only %s pixels' % (y, y_dot_num))
            y_point_arr.remove(y)

    for x in list(x_point_arr):
        x_dot_num = int(np.count_nonzero(bitwise_and[:, max(x - 4, 0):x + 5]))
        if x_dot_num < 5:
            print('x = %s is not on a ruling line, dropped; its column band has only %s pixels' % (x, x_dot_num))
            x_point_arr.remove(x)

    print('The table has %s rows and %s columns' % (len(y_point_arr) - 1, len(x_point_arr) - 1))
    return x_point_arr, y_point_arr
```

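```python
x_point_arr, y_point_arr = recognize_bgkx(binary)

ocr = PaddleOCR(use_angle_cls=True, lang="ch")


def recognize_text_by_loop(gray, x_point_arr, y_point_arr):
    # Step back: move each y coordinate up 3 px (not below 0) so the crop starts just above the rule
    y_point_arr = [max(y - 3, 0) for y in y_point_arr]
    # Walk the y and x coordinates to cut the table into cells
    data = [[] for _ in range(len(y_point_arr))]
    for i in range(len(y_point_arr) - 1):
        for j in range(len(x_point_arr) - 1):
            # When slicing, the first index is y (rows) and the second is x (columns)
            cell = gray[y_point_arr[i]:y_point_arr[i + 1],
                        x_point_arr[j]:x_point_arr[j + 1]]
            # cv2.imshow("sub_pic" + str(i) + str(j), cell)
            # cv2.waitKey()
            # cv2.destroyAllWindows()
            # Save the cell and pass its path to PaddleOCR
            img_path = "cell_image_" + str(i) + '_' + str(j) + ".png"
            cv2.imwrite(img_path, cell)
            result = ocr.ocr(img_path, cls=True)
            # PaddleOCR >= 2.6 returns one list per input image (None when no text is found);
            # on older 2.x releases iterate over `result` itself instead of `result[0]`
            lines = result[0] or []
            text1 = ''.join(line[1][0] for line in lines)
            print(text1)
            data[i].append(text1)
    print(data)
    # The first row is the header; the last list stays empty (no cells below the last rule)
    table_coordinate = pd.DataFrame(data[1:-1], columns=data[0])
    table_coordinate.to_excel('saomiaojian.xlsx', index=False)
    return table_coordinate


recognize_text_by_loop(gray, x_point_arr, y_point_arr)
```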
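The cell loop and the header handling (`data[0]` becomes the column names and `data[1:-1]` drops the trailing empty list) can be checked without PaddleOCR. In this sketch `fake_ocr` and `cells_to_dataframe` are hypothetical. The stand-in recognizer just reports each crop's height and width:

```python
import numpy as np
import pandas as pd


def fake_ocr(cell):
    # Hypothetical stand-in for PaddleOCR: describe the crop instead of reading it
    return '%dx%d' % cell.shape


def cells_to_dataframe(gray, x_point_arr, y_point_arr, recognize=fake_ocr):
    data = [[] for _ in range(len(y_point_arr))]
    for i in range(len(y_point_arr) - 1):
        for j in range(len(x_point_arr) - 1):
            # rows (y) first, then columns (x)
            cell = gray[y_point_arr[i]:y_point_arr[i + 1], x_point_arr[j]:x_point_arr[j + 1]]
            data[i].append(recognize(cell))
    # data[0] is the header row; data[-1] stays empty because no cells lie below the last rule
    return pd.DataFrame(data[1:-1], columns=data[0])


gray = np.zeros((90, 120), dtype=np.uint8)
df = cells_to_dataframe(gray, x_point_arr=[0, 40, 120], y_point_arr=[0, 20, 50, 90])
print(df)
```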

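To be improved:

- In real photos the cells do not sit on exactly one horizontal or vertical coordinate, so cells defined by the ruling-line intersection coordinates come out incomplete. A later improvement is to get the coordinates of each cell individually.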

Optimization code added:

1. Find the four corner points of the table.

2. Apply a perspective transform.

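Because the table is already rectified by the perspective transform, some code was removed (the false-intersection filter in `recognize_bgkx`). The full optimized code: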

```python
import cv2
import numpy as np
import pandas as pd
from paddleocr import PaddleOCR

ocr = PaddleOCR(use_angle_cls=True, lang="ch")


class TableOCR(object):

    def __init__(self, result_file=''):
        self.img_file = ''
        # Use the default name only when no result file is given
        self.result_file = result_file or 'result.xlsx'

    def get_sorted_rect(self, rect):
        '''
        Sort the four corner points
        @param rect: four (x, y) points
        @return: points ordered top-left, top-right, bottom-right, bottom-left
        '''
        # The two points with the smallest y form the top edge, the other two the bottom edge;
        # each pair is then ordered by x
        pts = sorted(rect, key=lambda p: (p[1], p[0]))
        top = sorted(pts[:2], key=lambda p: (p[0], p[1]))
        bottom = sorted(pts[2:], key=lambda p: (p[0], p[1]))
        return top[0], top[1], bottom[1], bottom[0]

    def get_table(self, gray, min_table_area=0):
        '''
        Get table coordinates from a grayscale image: [[bottom-right, bottom-left, top-left, top-right], ...]
        Edge detection + dilation -> outermost contours, filtered by area -> sorted by area, largest first
        -> four corner points of each contour's minimum-area rectangle
        @param gray: grayscale image (passing a binary image raises an error)
        @return: list of tables, each a list of four [x, y] points
        '''
        canny = cv2.Canny(gray, 200, 255)  # first and second hysteresis thresholds
        kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (3, 3))
        canny = cv2.dilate(canny, kernel)
        # OpenCV 3.x returns (image, contours, hierarchy), 4.x returns (contours, hierarchy)
        contours = cv2.findContours(canny, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]
        if not min_table_area:
            min_table_area = gray.shape[0] * gray.shape[1] * 0.01  # minimum table area (previously a fixed 50000)
        candidate_table = [cnt for cnt in contours if cv2.contourArea(cnt) > min_table_area]
        candidate_table = sorted(candidate_table, key=cv2.contourArea, reverse=True)
        table = []
        for cnt in candidate_table:  # each contour is a point set
            rect = cv2.minAreaRect(cnt)  # smallest enclosing rectangle, possibly rotated
            # Its four corners (np.int0 no longer exists in NumPy 2.x, so cast with astype)
            box = cv2.boxPoints(rect).astype(np.intp)
            sorted_box = self.get_sorted_rect(box)
            result = [sorted_box[2], sorted_box[3], sorted_box[0], sorted_box[1]]  # bottom-right, bottom-left, top-left, top-right
            table.append([x.tolist() for x in result])
        return table

    def perTran(self, image, rect):
        '''
        Perspective transform
        image: input image
        rect: four corner points: top-left, top-right, bottom-right, bottom-left
        '''
        tl, tr, br, bl = rect
        # Width: the longer of the bottom and top edges
        widthA = np.sqrt(((br[0] - bl[0]) ** 2) + ((br[1] - bl[1]) ** 2))
        widthB = np.sqrt(((tr[0] - tl[0]) ** 2) + ((tr[1] - tl[1]) ** 2))
        maxWidth = max(int(widthA), int(widthB))
        # Height: the longer of the right and left edges
        heightA = np.sqrt(((tr[0] - br[0]) ** 2) + ((tr[1] - br[1]) ** 2))
        heightB = np.sqrt(((tl[0] - bl[0]) ** 2) + ((tl[1] - bl[1]) ** 2))
        maxHeight = max(int(heightA), int(heightB))
        # Corners of the output image
        dst = np.array([[0, 0], [maxWidth - 1, 0], [maxWidth - 1, maxHeight - 1],
                        [0, maxHeight - 1]], dtype=np.float32)
        # Transformation matrix
        M = cv2.getPerspectiveTransform(np.array(rect, dtype=np.float32), dst)
        # Warp
        return cv2.warpPerspective(image, M, (maxWidth, maxHeight))

    def recognize_bgkx(self, binary):
        rows, cols = binary.shape
        # Horizontal lines (kernel size adapts to the image): the smaller the scale, the fewer lines survive (40 is another option)
        scale = 30
        kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (cols // scale, 1))
        eroded = cv2.erode(binary, kernel, iterations=1)  # erosion
        dilated_col = cv2.dilate(eroded, kernel, iterations=1)  # dilation
        # Vertical lines
        scale = 20
        kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (1, rows // scale))
        eroded = cv2.erode(binary, kernel, iterations=1)
        dilated_row = cv2.dilate(eroded, kernel, iterations=1)
        # AND the two line masks: only the intersections remain
        bitwise_and = cv2.bitwise_and(dilated_col, dilated_row)
        # cv2.imshow("excel_bitwise_and", bitwise_and)
        # cv2.waitKey()
        ys, xs = np.where(bitwise_and > 0)
        # Merge coordinates closer than 10 px, keeping the last value of each run.
        # The 10 px gap is image-dependent; it must stay below the smallest cell height/width.
        x_point_arr, y_point_arr = [], []
        for coords, out in ((xs, x_point_arr), (ys, y_point_arr)):
            sort_point = np.sort(list(set(coords)))
            for a, b in zip(sort_point[:-1], sort_point[1:]):
                if b - a > 10:
                    out.append(int(a))
            out.append(int(sort_point[-1]))  # the last point closes the final run
        return x_point_arr, y_point_arr

    def recognize_text_by_loop(self, gray, x_point_arr, y_point_arr):
        # y_point_arr = [max(y - 3, 0) for y in y_point_arr]
        # Walk the y and x coordinates to cut the table into cells
        data = [[] for _ in range(len(y_point_arr))]
        for i in range(len(y_point_arr) - 1):
            for j in range(len(x_point_arr) - 1):
                # When slicing, the first index is y (rows) and the second is x (columns)
                cell = gray[y_point_arr[i]:y_point_arr[i + 1],
                            x_point_arr[j]:x_point_arr[j + 1]]
                # The cell array is passed to PaddleOCR directly instead of being saved to disk
                result = ocr.ocr(cell)
                # PaddleOCR >= 2.6 nests results per image (None when no text is found);
                # on older 2.x releases iterate over `result` itself
                lines = result[0] or []
                text1 = ''.join(line[1][0] for line in lines)
                print(text1)
                data[i].append(text1)
        print(data)
        # The first row is the header; the last list stays empty
        return pd.DataFrame(data[1:-1], columns=data[0])

    def ocr(self, img_file):
        self.img_file = img_file
        img = cv2.imread(img_file)
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        rect = self.get_table(gray)
        if not rect:
            raise ValueError('no table found in %s' % img_file)
        sorted_rect = self.get_sorted_rect(rect[0])  # largest table; loop over rect to handle several tables
        gray_z = self.perTran(gray, sorted_rect)
        cv2.imwrite('gray_z.jpg', gray_z)
        binary_z = cv2.adaptiveThreshold(~gray_z, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C,
                                         cv2.THRESH_BINARY, 15, -5)
        x_point_arr, y_point_arr = self.recognize_bgkx(binary_z)
        df = self.recognize_text_by_loop(gray_z, x_point_arr, y_point_arr)
        df.to_excel(self.result_file, index=False)
        return df


tableOCR = TableOCR()
tableOCR.ocr('table.png')
```

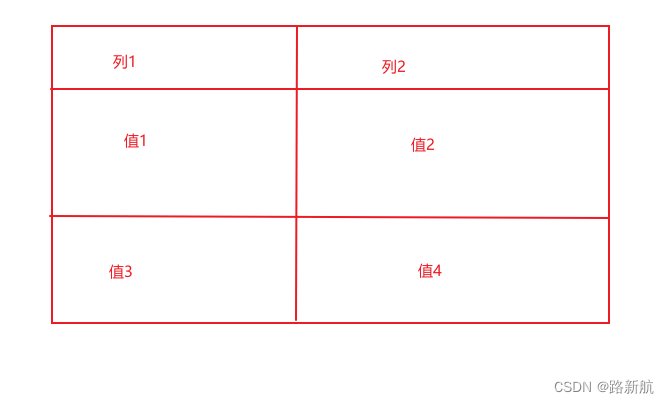

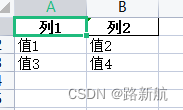

效果:

结果:
