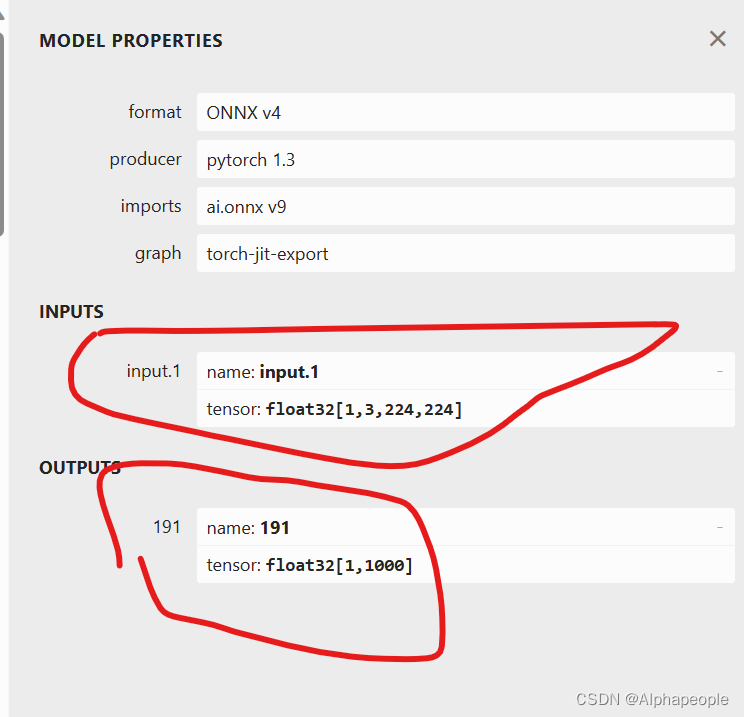

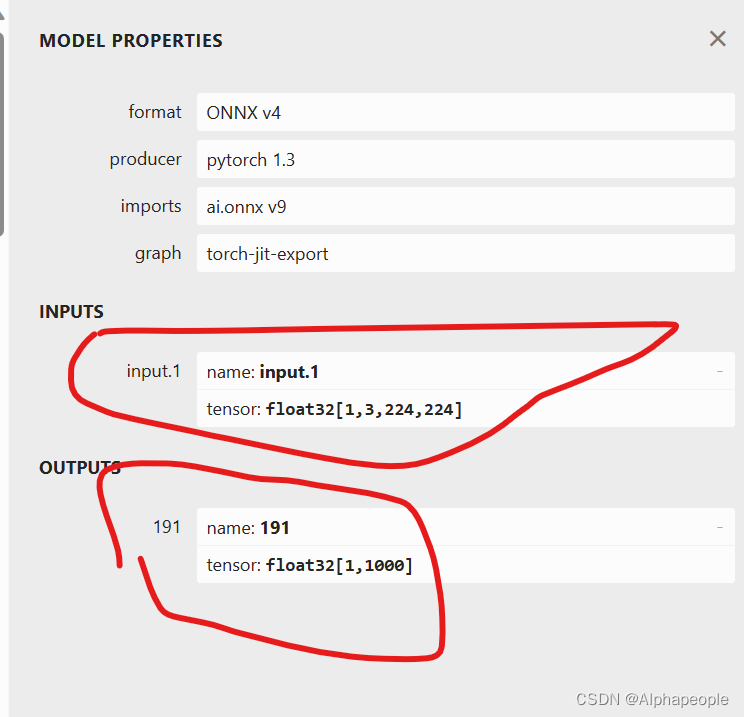

Open the ONNX model in Netron; the input and output tensor names are shown in the panel on the right:

int input_index = engine->getBindingIndex("inout1.1");

int output_index = engine->getBindingIndex("191");
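If a name is mistyped or does not match what Netron shows, `getBindingIndex` returns -1 rather than failing loudly, so it may be worth checking the result before using it. The following is a minimal sketch, not part of TensorRT: `requireBindingIndex` is a hypothetical helper, and `FakeEngine` is a stand-in so the example compiles without TensorRT installed. Note also that newer TensorRT releases deprecate the binding-index API in favour of name-based tensor access, so this applies to the older API used above.

```cpp
#include <stdexcept>
#include <string>

// Hypothetical helper (not a TensorRT function): looks up a binding by name
// and throws if the engine does not know it. It works with any engine-like
// type exposing getBindingIndex(const char*) that returns -1 on failure,
// which is how nvinfer1::ICudaEngine::getBindingIndex reports a missing name.
template <typename Engine>
int requireBindingIndex(const Engine& engine, const std::string& name) {
    const int index = engine.getBindingIndex(name.c_str());
    if (index < 0) {
        throw std::runtime_error("binding not found: " + name);
    }
    return index;
}

// Stand-in for a real engine, for illustration only. The names and indices
// mirror the two lookups in the text; a real engine decides its own indices.
struct FakeEngine {
    int getBindingIndex(const char* name) const {
        const std::string n(name);
        if (n == "inout1.1") return 0;
        if (n == "191") return 1;
        return -1;  // unknown tensor name
    }
};
```

With a real engine pointer, the call would look like `int input_index = requireBindingIndex(*engine, "inout1.1");`, and a wrong name then surfaces as an exception instead of a silent -1.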
