Literature Digest | GAN Medical Image Synthesis: Bidirectional Mapping Generative Adversarial Networks for Brain MR-to-PET Synthesis

01

Introduction

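As a cornerstone of precision medicine, multi-modality medical imaging has become indispensable.

Manuscript received June 26, 2021; revised August 6, 2021; accepted August 13, 2021. Date of publication August 24, 2021; date of current version December 30, 2021. This work was supported in part by the National Natural Science Foundation of China (Grant Nos. 62172403 and 61872351), the International Science and Technology Cooperation Project of Guangdong Province (Grant No. 2019A050510030), the Guangdong Distinguished Young Scholars Fund (Grant No. 2021B1515020019), the Shenzhen Distinguished Young Scholars Program (Grant No. RCYX20200714114641211), and the Shenzhen Key Basic Research Project (Grant No. JCYJ20200109115641762). (Shengye Hu and Baiying Lei contributed equally to this work.) (Corresponding author: Shuqiang Wang.) Shengye Hu is with the Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen 518055, China, and also with the Department of Computer Science, University of Chinese Academy of Sciences, Beijing 100049, China (e-mail: sy.hu@siat.ac.cn). Baiying Lei is with the School of Biomedical Engineering, Shenzhen University, Shenzhen 518060, China (e-mail: leiby@szu.edu.cn). Shuqiang Wang and Yanyan Shen are with the Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen 518055, China (e-mail: sq.wang@siat.ac.cn; yy.shen@siat.ac.cn). Yong Wang is with the School of Automation, Central South University, Changsha 410083, China (e-mail: ywang@csu.edu.cn). Zhiguang Feng is with the College of Intelligent Systems Science and Engineering, Harbin Engineering University, Harbin 150001, China (e-mail: fengzhiguang@hrbeu.edu.cn). Digital Object Identifier 10.1109/TMI.2021.3107013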

Title

Bidirectional Mapping Generative Adversarial Networks for Brain MR to PET Synthesis

Abstract

Fusing multi-modality medical images, such as magnetic resonance (MR) imaging and positron emission tomography (PET), can provide both anatomical and functional information about the human body. However, PET data are not always available because of high cost, radiation exposure, and other limitations. This paper proposes a 3D end-to-end synthesis network called Bidirectional Mapping Generative Adversarial Networks (BMGAN), which effectively exploits image context and latent vectors for brain MR-to-PET synthesis. Specifically, a bidirectional mapping mechanism is designed to embed the semantic information of PET images into a high-dimensional latent space. Moreover, a 3D Dense-UNet generator architecture and hybrid loss functions are constructed to further improve the visual quality of the cross-modality synthetic images. Most notably, the proposed method can synthesize perceptually realistic PET images while preserving the diverse brain structures of different subjects. Experimental results demonstrate that the proposed method outperforms other competitive methods in terms of quantitative measures, qualitative displays, and classification-based evaluation metrics.

Index Terms: Medical image synthesis, generative adversarial network, bidirectional mapping mechanism.

Methods

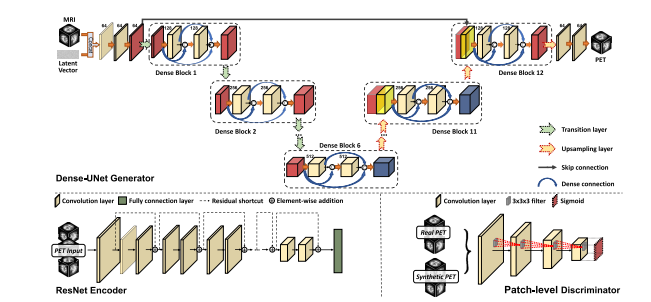

A. Overview

Assume a dataset of paired brain MR images $x \in \mathbb{R}^{h \times w \times d}_{\mathrm{MR}}$ and PET images $y \in \mathbb{R}^{h \times w \times d}_{\mathrm{PET}}$. The goal is to learn a cross-modality mapping $f: x \rightarrow y$, where $f$ denotes the complex non-linear mapping from brain MR images to PET images. However, brain anatomy varies considerably in geometry across subjects, which poses a major challenge to the generative capability of cross-modality networks. To address this challenge, we design a 3D generative adversarial network with a bidirectional mapping mechanism. The main framework for training the proposed BMGAN is illustrated in Fig. 1. It consists of three components: a generator network, a discriminator network, and an encoder network. First, a 3D Dense-UNet generator that combines the architectures of U-Net [36] and DenseNet [37] is constructed to synthesize the target PET images from the corresponding brain MR images. Notably, 3D convolutions are adopted to better model the spatial structure of PET images and to eliminate the slice discontinuity caused by 2D networks. Second, an adversarial learning strategy is applied by adding a patch-level discriminator network. Third, a bidirectional mapping mechanism is introduced by adding an encoder network that embeds the semantic information of PET images into the latent space, which encourages the generator to preserve the diverse details of brain structures in the synthetic PET images. Finally, to improve the visual quality of the synthetic images, the generator is trained with a hybrid loss that combines the adversarial loss, the reconstruction loss, and the perceptual loss.
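
The hybrid generator objective described above can be sketched as a weighted sum of its three terms. This is a minimal NumPy sketch under stated assumptions, not the authors' implementation: the least-squares form of the adversarial term, the loss weights `lam_adv`, `lam_rec` and `lam_perc`, and the `toy_features` stand-in for a pretrained perceptual network are all hypothetical choices made for illustration. The discriminator output is treated as a grid of per-patch realness scores, in the spirit of the patch-level discriminator. Any latent-space terms tied to the encoder are left out because their exact form is not given in this section.

```python
import numpy as np


def reconstruction_loss(fake, real):
    """Voxel-wise L1 distance between synthetic and real PET volumes."""
    return float(np.mean(np.abs(fake - real)))


def adversarial_loss_g(d_fake_patches):
    """Generator-side adversarial term for a patch-level discriminator.

    ASSUMPTION: least-squares GAN form. Each entry of `d_fake_patches` is the
    discriminator's realness score for one 3D patch of the synthetic volume;
    the generator is pushed to make every patch score 1 ("real").
    """
    return float(np.mean((d_fake_patches - 1.0) ** 2))


def toy_features(volume):
    """HYPOTHETICAL stand-in for a pretrained feature extractor: the spatial
    gradients along the three axes, stacked into one array."""
    return np.stack(np.gradient(volume))


def perceptual_loss(fake, real, feature_fn=toy_features):
    """Mean squared distance between the feature maps of `fake` and `real`."""
    return float(np.mean((feature_fn(fake) - feature_fn(real)) ** 2))


def hybrid_generator_loss(fake, real, d_fake_patches,
                          lam_adv=1.0, lam_rec=100.0, lam_perc=10.0):
    """Weighted sum of adversarial, reconstruction and perceptual terms.
    The default weights are illustrative, not values from the paper."""
    return (lam_adv * adversarial_loss_g(d_fake_patches)
            + lam_rec * reconstruction_loss(fake, real)
            + lam_perc * perceptual_loss(fake, real))


# Usage on a random 16x16x16 "PET" volume and a 2x2x2 grid of patch scores.
rng = np.random.default_rng(0)
real = rng.random((16, 16, 16))
fake = real + 0.05 * rng.standard_normal(real.shape)
print(hybrid_generator_loss(fake, real, d_fake_patches=np.full((2, 2, 2), 0.8)))
```

The L1 term keeps voxel intensities close to the ground truth. The perceptual term compares structure in feature space, and the adversarial term penalizes patches that the discriminator can still tell apart from real PET.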

Conclusions

In this work, a novel 3D BMGAN is proposed for synthesizing brain PET images from MR images. To bridge the gap between synthetic and real medical images, a bidirectional mapping mechanism is introduced that encourages the generator to synthesize perceptually realistic PET images while preserving the diverse details of brain structures across subjects. A 3D Dense-UNet generator architecture and hybrid loss functions are constructed to improve the visual quality of the synthetic PET images. Both qualitative and quantitative experiments suggest that the proposed method can effectively synthesize PET images from MR images, outperforming the baseline and competing synthesis methods. We also conduct classification and ablation experiments to study the performance of the proposed method. The proposed method can also be used in wider image synthesis applications, such as MR-to-CT synthesis. In the future, we will further investigate the possibility of incorporating multi-modality information for better synthetic results.

Figure

Fig. 1. The main framework of training the proposed BMGAN to synthesize brain PET from corresponding MR images.

Fig. 2. The network architecture of the proposed BMGAN. It consists of a Dense-UNet generator, a patch-level discriminator, and a ResNet encoder.
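
The Dense-UNet generator borrows its dense blocks from DenseNet [37]. In that design, each layer inside a dense block receives the concatenation of all earlier feature maps in the block and adds `growth_rate` new ones, so the channel width grows linearly with depth. The sketch below does only this channel bookkeeping. The input width, growth rate and layer count in the example are hypothetical, because this section does not give the generator's actual configuration.

```python
def dense_block_widths(in_channels, growth_rate, num_layers):
    """Return (input width of each layer, output width of the dense block).

    Layer i sees in_channels + i * growth_rate channels because its input is the
    concatenation of the block input and the outputs of all i earlier layers.
    """
    widths = []
    channels = in_channels
    for _ in range(num_layers):
        widths.append(channels)   # width of the concatenated input to this layer
        channels += growth_rate   # this layer contributes growth_rate new maps
    return widths, channels


# Hypothetical example: 64 input channels, growth rate 16, 4 layers.
layer_inputs, block_output = dense_block_widths(64, 16, 4)
print(layer_inputs, block_output)  # [64, 80, 96, 112] 128
```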

Fig. 3. Comparison of generation quality between the 2D variant and the 3D BMGAN model. The red arrow in the real image points to a region with a significant difference. The synthetic results of the 3D BMGAN look more similar to the ground-truth PET images than those of the 2D variant.
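
Fig. 3 contrasts 2D slice-wise processing with 3D processing. The toy NumPy sketch below shows the underlying reason. It uses a separable box filter, not the paper's learned convolutions. A 3×3 kernel applied within each slice never sees neighbouring slices, whereas a 3×3×3 kernel mixes information across them. Treating axis 2 as the slice axis is an assumption of the example.

```python
import numpy as np


def box_filter(volume, axes):
    """Separable 3-tap mean filter along each axis in `axes`, with edge padding.

    axes=(0, 1) acts like a 3x3 kernel inside each slice (2D, slice by slice);
    axes=(0, 1, 2) acts like a 3x3x3 kernel that also spans adjacent slices.
    """
    out = volume.astype(np.float64)
    for ax in axes:
        pad = [(1, 1) if a == ax else (0, 0) for a in range(out.ndim)]
        padded = np.pad(out, pad, mode="edge")
        n = out.shape[ax]
        out = (np.take(padded, np.arange(0, n), axis=ax)
               + np.take(padded, np.arange(1, n + 1), axis=ax)
               + np.take(padded, np.arange(2, n + 2), axis=ax)) / 3.0
    return out


# A volume whose only non-zero content lies in slice 2 along axis 2.
vol = np.zeros((4, 4, 5))
vol[:, :, 2] = 1.0

in_plane = box_filter(vol, axes=(0, 1))        # 2D-style, per slice
volumetric = box_filter(vol, axes=(0, 1, 2))   # 3D-style

# Slice 1 stays 0 with in-plane filtering but receives 1/3 with the 3D kernel.
print(in_plane[0, 0, 1], volumetric[0, 0, 1])
```

Because the slice-wise filter cannot pass information between slices, adjacent slices are processed independently, which is one source of the slice discontinuity that the 3D design is meant to remove.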

Fig. 4. Error maps between the synthetic PET and ground-truth PET in the axial plane. The synthetic result of the 2D variant shows larger error regions than that of the proposed model.

Fig. 5. Qualitative comparison between the proposed BMGAN (Ours) and the simplified model without the discriminator (Remove D). Without the adversarial training strategy, the synthetic images tend to be over-smoothed and blurry. With adversarial training, the results are sharper and have better visual quality.

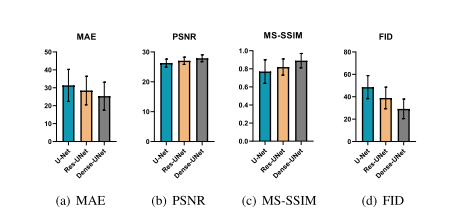

Fig. 6. Quantitative comparison of three generator architectures: the original U-Net, Res-UNet, and Dense-UNet. (a) MAE of the different generators. (b) PSNR of the different generators. (c) MS-SSIM of the different generators. (d) FID of the different generators. The original U-Net generator performs worst, and the Dense-UNet generator achieves the best performance on all metrics.
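
Two of the four metrics in Fig. 6, MAE and PSNR, have simple closed forms, sketched below in NumPy. The sketch assumes intensities are normalised to [0, 1] (hence `data_range=1.0`); the paper's exact normalisation is not stated in this section. MS-SSIM needs multi-scale structural comparisons, and FID is defined on features from a pretrained Inception network, so neither is sketched here.

```python
import numpy as np


def mae(synthetic, real):
    """Mean absolute error over all voxels (lower is better)."""
    return float(np.mean(np.abs(synthetic - real)))


def psnr(synthetic, real, data_range=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range**2 / MSE) (higher is better)."""
    mse = float(np.mean((synthetic - real) ** 2))
    if mse == 0.0:
        return float("inf")  # identical volumes
    return float(10.0 * np.log10(data_range ** 2 / mse))


real = np.zeros((8, 8, 8))
synthetic = real + 0.1          # uniform error of 0.1 per voxel
print(mae(synthetic, real))     # about 0.1
print(psnr(synthetic, real))    # about 20 dB, since MSE = 0.01
```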

Fig. 7. Visual comparison with existing MR-to-PET synthesis models on the ADNI dataset. The columns from left to right are the synthetic images of FCN, U-Net [52], GAN, Cycle-GAN [53], RIED-Net [14], pGAN [26], and the proposed BMGAN, followed by the ground-truth FDG-PET images. The red arrow in the real image points to a region with a significant difference. The proposed BMGAN achieves the best synthetic performance among the compared models: the synthetic images of the other models differ more in appearance from the real images than those of BMGAN.

Fig. 8. Representative absolute error maps between the synthetic PET and the real FDG-PET images in the ADNI dataset. The proposed BMGAN achieves better performance than the other synthesis methods under the same conditions.
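
Absolute error maps such as those in Figs. 8 and 12 can be computed voxel by voxel from co-registered volumes and then displayed one slice at a time. The NumPy sketch below assumes the two volumes are already aligned. It also assumes that axis 2 indexes axial slices, which is an assumption about array layout, not something stated in the paper. Averaging the map over all voxels gives the MAE.

```python
import numpy as np


def absolute_error_map(synthetic, real):
    """Voxel-wise |synthetic - real| for two co-registered volumes."""
    if synthetic.shape != real.shape:
        raise ValueError("volumes must be co-registered and have the same shape")
    return np.abs(synthetic.astype(np.float64) - real.astype(np.float64))


def axial_slice(volume, index):
    """ASSUMPTION: axis 2 is the superior-inferior axis, so fixing it gives an axial slice."""
    return volume[:, :, index]


real = np.zeros((4, 4, 3))
synthetic = real.copy()
synthetic[1, 2, 0] = 0.5        # one voxel with an error of 0.5
err = absolute_error_map(synthetic, real)
print(axial_slice(err, 0).max(), err.mean())
```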

Fig. 9. Quantitative comparison between the existing synthesis methods and the proposed method in terms of four metrics. (a) MAE of the different methods. (b) PSNR of the different methods. (c) MS-SSIM of the different methods. (d) FID of the different methods. The synthetic results of BMGAN have the best image quality on all four classic evaluation metrics.

Fig. 10. Evaluation of classification results on different synthetic images. (a): The performance of four traditional classification metrics. (b): The performance of the AUC metric. The proposed BMGAN achieves the best performance compared to other synthesis methods in terms of five classic metrics.
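
Fig. 10 evaluates classification on the synthetic images with four traditional metrics and AUC. This section does not name the four metrics. The sketch below therefore assumes accuracy, sensitivity, specificity and F1-score as common choices, computed from binary labels and thresholded predictions. AUC is computed as the probability that a randomly chosen positive case scores higher than a randomly chosen negative one, with ties counting one half. That quantity equals the area under the ROC curve. The 0.5 threshold in the usage lines is also an assumption.

```python
def confusion_metrics(labels, predictions):
    """Accuracy, sensitivity, specificity and F1 for binary labels (1 = positive)."""
    tp = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
    tn = sum(1 for y, p in zip(labels, predictions) if y == 0 and p == 0)
    fp = sum(1 for y, p in zip(labels, predictions) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(labels),
        "sensitivity": tp / (tp + fn) if tp + fn else 0.0,
        "specificity": tn / (tn + fp) if tn + fp else 0.0,
        "f1": 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0,
    }


def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise ranking (normalised Mann-Whitney U)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]      # classifier outputs, e.g. P(disease)
preds = [int(s >= 0.5) for s in scores]
print(confusion_metrics(labels, preds), roc_auc(labels, scores))
```

Unlike the four thresholded metrics, AUC depends only on how the scores rank the cases, so it does not change with the choice of threshold.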

Fig. 11. Qualitative comparison of synthetic AV45-PET images on the OASIS-3 dataset. From left to right: images synthesized by FCN, U-Net [52], GAN, Cycle-GAN [53], RIED-Net [14], pGAN [26], and the proposed BMGAN, followed by the real full-dose PET images (ground truth). The red arrow in the real image points to a region with a significant difference. The proposed BMGAN again achieves the best synthetic performance among the compared synthesis models.

Fig. 12. Representative absolute error maps between the synthetic PET and the real AV45-PET images in the OASIS-3 dataset. The proposed BMGAN achieves better performance than the other synthesis methods under the same conditions.

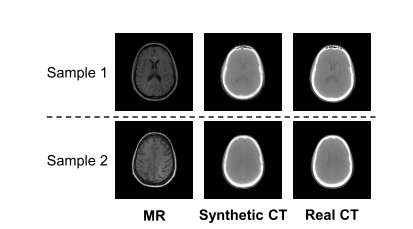

Fig. 13. Example results of the proposed BMGAN on MR-to-CT synthesis. BMGAN can be applied to another cross-modality mapping without radical modifications to the network architecture.

Fig. 14. Typical failure case of our method. The red arrow in the real image points to the region with a significant difference.

Table

TABLE I Quantitative comparison between 2D BMGAN and 3D BMGAN

TABLE II Quantitative comparison between the proposed model (Ours) and the simplified model without the discriminator network (Remove D)

TABLE III Quantitative comparison between different loss functions

TABLE IV Quantitative comparison between different adversarial losses

TABLE V Effect of several hyperparameters
