1. Import the packages

```python
import numpy as np
import torch
from torch.utils import data
```

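2. Generate 1,000 synthetic examples

```python
# Generate data following y = Xw + b + Gaussian noise
def synthetic_data(w, b, num_examples):
    X = torch.normal(0, 1, (num_examples, len(w)))  # features drawn from N(0, 1)
    y = torch.matmul(X, w) + b
    y += torch.normal(0, 0.01, y.shape)             # add noise with std 0.01
    return X, y.reshape((-1, 1))                    # labels as a column vector

true_w = torch.tensor([2, -3.4])
true_b = 4.2
features, labels = synthetic_data(true_w, true_b, 1000)
```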
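As an optional sanity check that is not part of the original walkthrough, the generated data can be fitted in closed form with ordinary least squares. If the generator is correct, the estimates should land very close to `true_w` and `true_b`. The sketch copies `synthetic_data` so it runs on its own. The seed and the column-of-ones trick for the bias are illustrative choices.

```python
import torch

# Same generator as above, copied so this snippet is self-contained
def synthetic_data(w, b, num_examples):
    X = torch.normal(0, 1, (num_examples, len(w)))
    y = torch.matmul(X, w) + b
    y += torch.normal(0, 0.01, y.shape)
    return X, y.reshape((-1, 1))

torch.manual_seed(0)  # arbitrary seed, for reproducibility only
true_w = torch.tensor([2, -3.4])
true_b = 4.2
features, labels = synthetic_data(true_w, true_b, 1000)

# Append a column of ones so the bias is estimated as an extra weight
X_aug = torch.cat([features, torch.ones(features.shape[0], 1)], dim=1)
solution = torch.linalg.lstsq(X_aug, labels).solution  # shape (3, 1)
w_hat, b_hat = solution[:2, 0], solution[2, 0]
print(features.shape, labels.shape)
print(w_hat, b_hat)
```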

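3. Write a PyTorch data iterator

```python
def load_array(data_array, batch_size, is_train=True):
    """Construct a PyTorch data iterator."""
    data_set = data.TensorDataset(*data_array)  # pairs features[i] with labels[i]
    # shuffle=True reorders the examples every epoch (used during training)
    return data.DataLoader(data_set, batch_size, shuffle=is_train)
```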

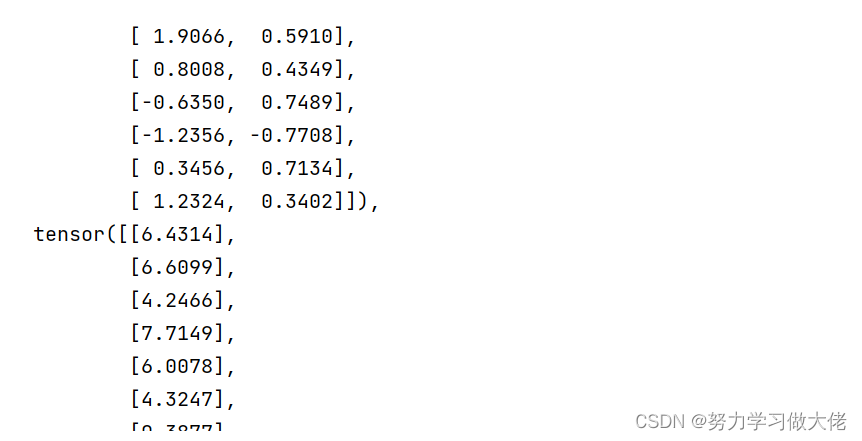
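Here is a minimal sketch of what the iterator yields. It uses placeholder random tensors in place of the real `features` and `labels`. With 1,000 examples and `batch_size=10`, calling `len()` on the `DataLoader` should report 100 minibatches, and one pass should visit every example exactly once.

```python
import torch
from torch.utils import data

def load_array(data_array, batch_size, is_train=True):
    # Wrap the tensors in a dataset; DataLoader then yields minibatches
    data_set = data.TensorDataset(*data_array)
    return data.DataLoader(data_set, batch_size, shuffle=is_train)

features = torch.randn(1000, 2)  # placeholder data for illustration
labels = torch.randn(1000, 1)
data_iter = load_array((features, labels), batch_size=10)

num_batches = len(data_iter)                  # 1000 / 10 minibatches
seen = sum(X.shape[0] for X, y in data_iter)  # examples visited in one epoch
print(num_batches, seen)
```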

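4. Read a minibatch from the iterator

```python
batch_size = 10
data_iter = load_array((features, labels), batch_size)
# Returns a list [X, y]: X has shape (10, 2) and y has shape (10, 1)
next(iter(data_iter))
```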
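The sketch below makes the minibatch structure visible with a small toy dataset of 20 rows, which is hypothetical and used only for illustration. It sets `shuffle=False`, so the first batch should simply be the first 10 rows. The result of `next(iter(...))` unpacks into a feature tensor `X` and a label tensor `y`.

```python
import torch
from torch.utils import data

features = torch.arange(40, dtype=torch.float32).reshape(20, 2)  # toy features
labels = features.sum(dim=1, keepdim=True)                        # toy labels
dataset = data.TensorDataset(features, labels)

# shuffle=False keeps the original order, which makes the batch easy to check
X, y = next(iter(data.DataLoader(dataset, batch_size=10, shuffle=False)))
print(X.shape, y.shape)
```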

5. Define the linear regression model


```python
from torch import nn

# A single fully connected layer: 2 input features -> 1 output
net = nn.Sequential(nn.Linear(2, 1))
```

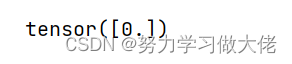
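`nn.Linear(2, 1)` stores a weight of shape `(1, 2)` and a bias of shape `(1,)`, and it computes `X @ W.T + b`. The small check below uses random inputs, chosen only for illustration, to compare the layer's output with that affine map written out by hand.

```python
import torch
from torch import nn

net = nn.Sequential(nn.Linear(2, 1))
layer = net[0]
print(layer.weight.shape, layer.bias.shape)  # torch.Size([1, 2]) torch.Size([1])

X = torch.randn(5, 2)                        # random inputs for the check
manual = X @ layer.weight.T + layer.bias     # the affine map nn.Linear computes
print(torch.allclose(net(X), manual))
```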

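6. Initialize the model parameters (weights drawn from a normal distribution, bias set to zero)

```python
net[0].weight.data.normal_(0, 0.01)  # weights ~ N(0, 0.01^2)
net[0].bias.data.fill_(0)            # bias starts at 0
```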
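The `.data` writes above bypass autograd. `torch.nn.init` provides in-place initializers that achieve the same thing and read a little more clearly. This is an alternative, not what the original code uses.

```python
import torch
from torch import nn

net = nn.Sequential(nn.Linear(2, 1))
# Same initialization as above, expressed with torch.nn.init
nn.init.normal_(net[0].weight, mean=0.0, std=0.01)
nn.init.zeros_(net[0].bias)
print(net[0].weight, net[0].bias)
```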

7. Define the loss function (mean squared error)

```python
loss = nn.MSELoss()
```
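By default, `nn.MSELoss()` averages the squared error over all elements (`reduction='mean'`). Unlike the hand-written squared loss in some from-scratch versions, it has no factor of 1/2. The check below uses made-up numbers for illustration.

```python
import torch
from torch import nn

loss = nn.MSELoss()  # default reduction='mean'
y_hat = torch.tensor([[1.0], [2.0], [3.0]])
y = torch.tensor([[1.5], [2.0], [2.0]])

builtin = loss(y_hat, y)
manual = ((y_hat - y) ** 2).mean()  # no 1/2 factor, averaged over all elements
print(builtin.item(), manual.item())
```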

8. Define the optimizer (minibatch stochastic gradient descent)

```python
trainer = torch.optim.SGD(net.parameters(), lr=0.03)  # learning rate 0.03
```

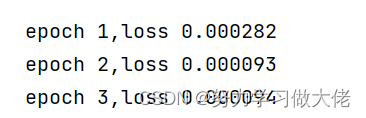
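For plain SGD with the default `momentum=0` and `weight_decay=0`, `trainer.step()` should update each parameter as `p = p - lr * p.grad`. The sketch below uses random data, an assumption made only for the check, and compares the weight after one step with that rule computed by hand.

```python
import torch
from torch import nn

torch.manual_seed(0)  # arbitrary seed, for reproducibility only
net = nn.Linear(2, 1)
trainer = torch.optim.SGD(net.parameters(), lr=0.03)

X, y = torch.randn(10, 2), torch.randn(10, 1)
l = nn.MSELoss()(net(X), y)
trainer.zero_grad()
l.backward()

# Snapshot the weight and its gradient before the update
w_before = net.weight.detach().clone()
grad_w = net.weight.grad.detach().clone()

trainer.step()                       # plain SGD update
expected = w_before - 0.03 * grad_w  # the same rule applied by hand
print(torch.allclose(net.weight.detach(), expected))
```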

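9. Train

```python
num_epoch = 3
for epoch in range(num_epoch):
    for X, y in data_iter:
        l = loss(net(X), y)   # loss on the current minibatch
        trainer.zero_grad()   # clear gradients left over from the previous step
        l.backward()          # backpropagate
        trainer.step()        # update the parameters
    # Loss on the full dataset after each epoch
    l = loss(net(features), labels)
    print(f'epoch {epoch + 1}, loss {l:f}')
```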

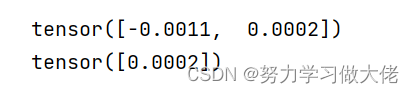
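The loop above computes the full-dataset loss with autograd still tracking operations. Below is a self-contained variant of the whole pipeline. It wraps that evaluation in `torch.no_grad()` and uses `nn.init` for initialization, both adjustments that are not in the original code. The fixed seed is an arbitrary choice.

```python
import torch
from torch import nn
from torch.utils import data

torch.manual_seed(0)  # arbitrary seed, for reproducibility only

def synthetic_data(w, b, num_examples):
    X = torch.normal(0, 1, (num_examples, len(w)))
    y = torch.matmul(X, w) + b
    y += torch.normal(0, 0.01, y.shape)
    return X, y.reshape((-1, 1))

true_w, true_b = torch.tensor([2, -3.4]), 4.2
features, labels = synthetic_data(true_w, true_b, 1000)
data_iter = data.DataLoader(data.TensorDataset(features, labels),
                            batch_size=10, shuffle=True)

net = nn.Sequential(nn.Linear(2, 1))
nn.init.normal_(net[0].weight, std=0.01)
nn.init.zeros_(net[0].bias)
loss = nn.MSELoss()
trainer = torch.optim.SGD(net.parameters(), lr=0.03)

for epoch in range(3):
    for X, y in data_iter:
        l = loss(net(X), y)
        trainer.zero_grad()
        l.backward()
        trainer.step()
    with torch.no_grad():  # evaluation only: no computation graph is built
        l = loss(net(features), labels)
    print(f'epoch {epoch + 1}, loss {l:f}')
```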

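10. Compare the learned parameters with the true ones

```python
w = net[0].weight.data
b = net[0].bias.data
print(true_w - w.reshape(true_w.shape))  # error in the weights
print(true_b - b)                        # error in the bias
```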
