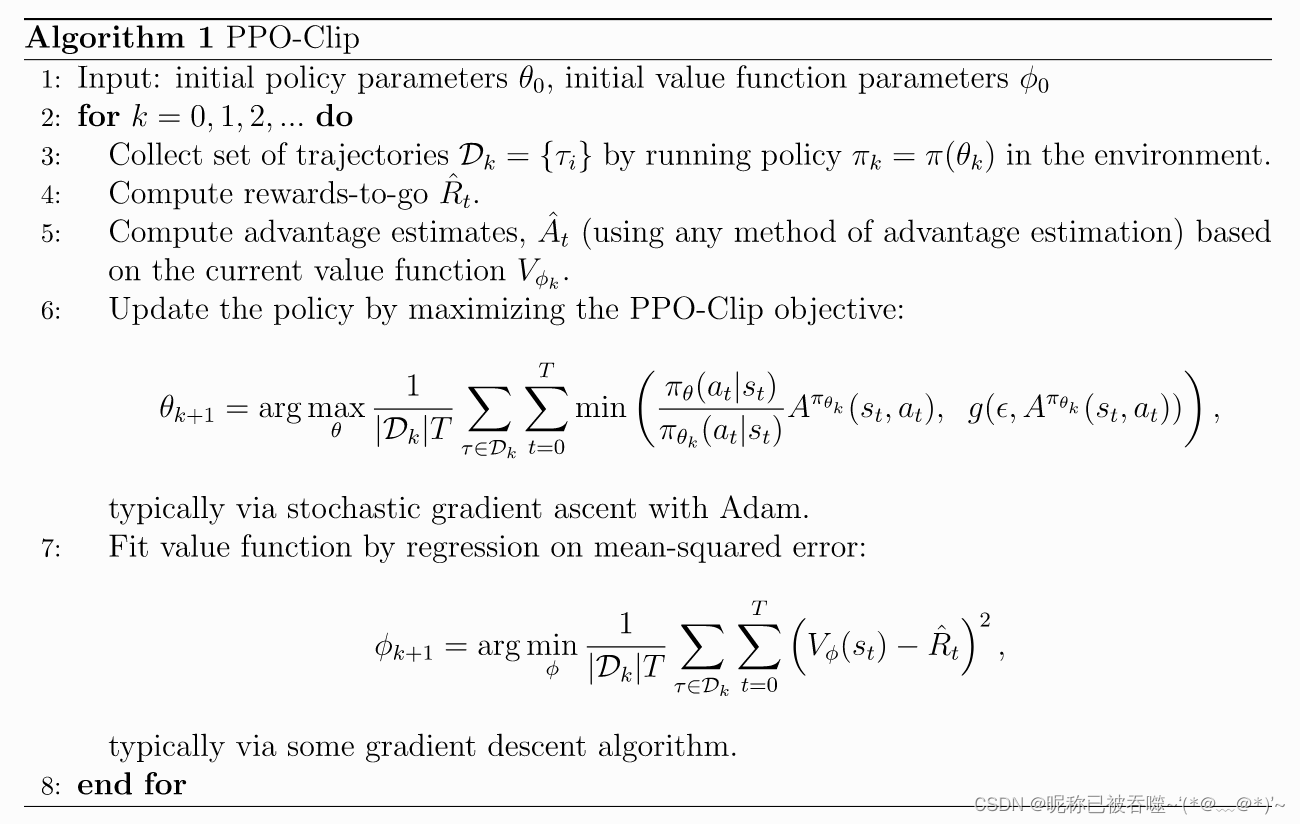

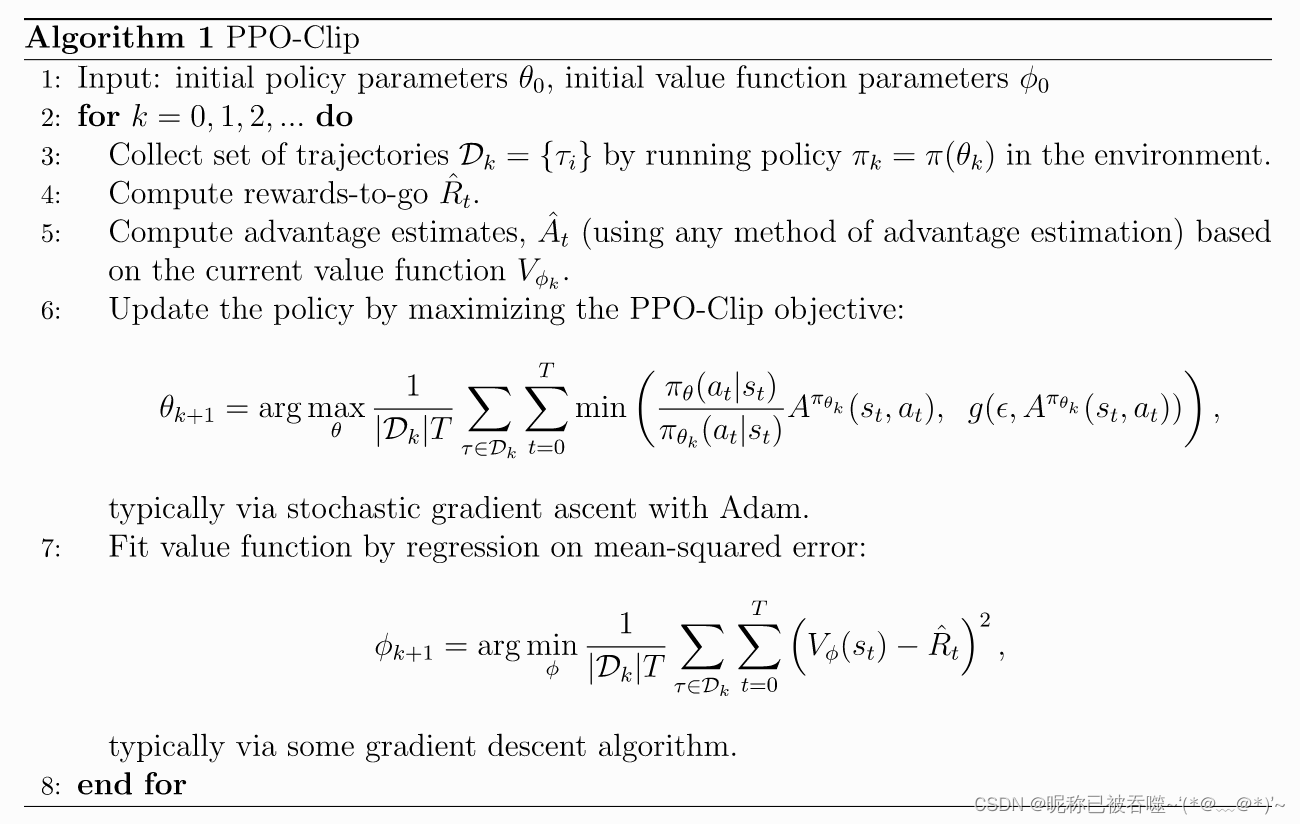

算法流程

代码

import tensorflow as tf

import numpy as np

import gym

import copy

physical_devices = tf.config.experimental.list_physical_devices('GPU')

tf.config.experimental.set_memory_growth(physical_devices[0], True)

def build_model(action_dim, l2=1e-4):

feature_input = tf.keras.Input(shape=(210, 160, 3))

x = tf.keras.layers.Conv2D(64, 7, 3, padding='same', use_bias=False,

kernel_regularizer=tf.keras.regularizers.l2(l2))(feature_input)

x = tf.keras.layers.BatchNormalization()(x)

x = tf.keras.layers.Activation('relu')(x)

x = tf.keras.layers.Conv2D(128, 7, 3, padding='same', use_bias=False,

kernel_regularizer=tf.keras.regularizers.l2(l2))(x)

x = tf.keras.layers.BatchNormalization()(x)

x = tf.keras.layers.Activation('relu')(x)

x = tf.keras.layers.Conv2D(256, 7, 3, padding='same', use_bias=False,

kernel_regularizer=tf.keras.regularizers.l2(l2))(x)

x = tf.keras.layers.BatchNormalization()(x)

x = tf.keras.layers.Activation('relu')(x)

x = tf.keras.layers.Conv2D(512, 5, 2, padding='same', use_bias=False,

kernel_regularizer=tf.keras.regularizers.l2(l2))(x)

x = tf.keras.layers.BatchNormalization()(x)

x = tf.keras.layers.Activation('relu')(x)

x = tf.keras.layers.Conv2D(1024, 5, 2, padding='same', use_bias=False,

kernel_regularizer=tf.keras.regularizers.l2(l2))(x)

x = tf.keras.layers.BatchNormalization()(x)

x = tf.keras.layers.Activation('relu')(x)

feature_output = tf.keras.layers.GlobalAvgPool2D()(x)

x_a = tf.keras.layers.Dense(units=512, activation='relu',

kernel_regularizer=tf.keras.regularizers.l2(l2))(feature_output)

x_a = tf.keras.layers.Dense(units=512, activation='relu',

kernel_regularizer=tf.keras.regularizers.l2(l2))(x_a)

actor_output = tf.keras.layers.Dense(units=action_dim, activation='softmax',

kernel_regularizer=tf.keras.regularizers.l2(l2))(x_a)

x_c = tf.keras.layers.Dense(units=512, activation='relu',

kernel_regularizer=tf.keras.regularizers.l2(l2))(feature_output)

x_c = tf.keras.layers.Dense(units=512, activation='relu',

kernel_regularizer=tf.keras.regularizers.l2(l2))(x_c)

critic_output = tf.keras.layers.Dense(units=1, activation='linear',

kernel_regularizer=tf.keras.regularizers.l2(l2))(x_c)

AC_model = tf.keras.Model(inputs=feature_input, outputs=[actor_output, critic_output])

AC_model.summary()

return AC_model

def choice_action(model, state):

policy, value = model(np.array([state], dtype=np.float))

policy = tf.stop_gradient(policy).numpy()

return np.random.choice(

len(policy[0]),

p=policy[0]

), policy[0]

def get_advantage(model, state, reward, batch_size):

policy, value = model.predict(state, batch_size=batch_size, verbose=0)

return reward - value

def get_value(model, state, batch_size):

policy, value = model.predict(state, batch_size=batch_size, verbose=0)

return value

def PPO_train(model, optimizer, batch_state, batch_action, batch_policy, advantage, batch_raward, K_epochs, batch_size,

epsilon=0.2, T=1e-2):

losses = 0.0

ent = 0.0

advantage = np.reshape(advantage, newshape=(-1))

batch_action = tf.stack([tf.range(tf.shape(batch_action)[0], dtype=tf.int32), batch_action], axis=1)

data_length = len(state)

mini_epo = int(data_length / batch_size) + 1

for _ in range(K_epochs):

for i in range(mini_epo):

start = i * batch_size

end = min((i + 1) * batch_size, data_length)

b_s = batch_state[start:end]

b_a = batch_action[start:end]

b_p = batch_policy[start:end]

adv = advantage[start:end]

b_r = batch_raward[start:end]

with tf.GradientTape() as tape:

new_policy, value_predict = model(b_s)

pi_prob = tf.gather_nd(params=new_policy, indices=b_a)

oldpi_prob = tf.gather_nd(params=b_p, indices=b_a)

entropy = - tf.reduce_sum(new_policy * tf.math.log(new_policy + 1e-6), axis=-1)

ratio = pi_prob / (oldpi_prob + 1e-6)

surr1 = ratio * adv

surr2 = tf.clip_by_value(ratio, clip_value_min=1.0 - epsilon, clip_value_max=1.0 + epsilon) * adv

loss = - tf.minimum(surr1, surr2) - T * entropy + tf.keras.losses.mean_squared_error(b_r, value_predict)

grad = tape.gradient(loss, model.trainable_variables)

optimizer.apply_gradients(zip(grad, model.trainable_variables))

losses += np.mean(loss.numpy())

ent += np.mean(entropy.numpy())

return losses / (K_epoch * mini_epo), ent / (K_epochs * mini_epo)

if __name__ == '__main__':

episodes = 1000000

env_name = "ALE/SpaceInvaders-v5"

render = False

full_action_space = False

training = True

if render:

env = gym.make(env_name, render_mode="human", full_action_space=full_action_space)

else:

env = gym.make(env_name, render_mode="rgb_array", full_action_space=full_action_space)

env.seed(0)

action_dim = env.action_space.n

learning_rate = 2.5 * 1e-4

batch_size = 256

model = build_model(action_dim=action_dim)

optimizer = tf.keras.optimizers.Adam(learning_rate=learning_rate)

try:

print("load models at ./{}".format(env_name))

model.load_weights("./{}.h5".format(env_name))

print("load model success!")

except:

print("No checkpoint, Init new model!")

print("Action Space dimension: {}".format(action_dim))

gamma = 0.99

lam = 0.95

assert lam >= 0.0 and lam <= 1.0, "lammbda必须介于[0, 1]"

K_epoch = 8

assert K_epoch > 1, "K_epoch必须大于1,不然计算的重要性采样没有意义"

epsilon = 0.2

assert epsilon > 0.0 and epsilon < 1.0, "epsilon必须介于(0, 1)"

temperature = 1e-2

if training:

for e in range(episodes):

state = env.reset()

S, A, P, R, nS = [], [], [], [], []

score = 0.0

while True:

action, policy = choice_action(model, state)

next_state, reward, done, info = env.step(action)

score += reward

S.append(state)

A.append(action)

P.append(policy)

R.append(reward)

nS.append(next_state)

state = copy.deepcopy(next_state)

if done:

discounted_r = []

tmp_r = 0.0

v_nS = get_value(model, np.array(nS, dtype=np.float), batch_size)

v_nS[-1] = 0

for r, vs in zip(R[::-1], v_nS[::-1]):

tmp_r = r + gamma * (lam * tmp_r + (1.0 - lam) * vs[0])

discounted_r.append(np.array([tmp_r]))

discounted_r.reverse()

bs = np.array(S, dtype=np.float)

ba = np.array(A)

bp = np.array(P)

br = np.array(discounted_r, dtype=np.float)

advantage = get_advantage(model, bs, br, batch_size)

advantage = (advantage - advantage.mean()) / (advantage.std() + 1e-6)

losses, entropy = PPO_train(

model=model,

optimizer=optimizer,

batch_state=bs,

batch_action=ba,

batch_policy=bp,

advantage=advantage,

batch_raward=br,

K_epochs=K_epoch,

batch_size=batch_size,

epsilon=epsilon,

T=temperature

)

print("episode: {}/{}, score: {}, losses: {:.4f}, entropy: {:.4f}".format(

e + 1, episodes, score, losses, entropy))

break

if (e + 1) % 50 == 0:

model.save_weights("./{}.h5".format(env_name))

print("Model Saved!")

else:

env = gym.make(env_name, render_mode="human", full_action_space=full_action_space)

env.seed(0)

state = env.reset()

while True:

action, _ = choice_action(model, state)

next_state, reward, done, info = env.step(action)

state = copy.deepcopy(next_state)

if done: break

2837

2837

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?