First, the function signature:

```python
tf.nn.dynamic_rnn(
    cell,
    inputs,
    sequence_length=None,
    initial_state=None,
    dtype=None,
    parallel_iterations=None,
    swap_memory=False,
    time_major=False,
    scope=None
)
```

batch_size: the number of examples in the input batch, usually the size of the first dimension of the input tensor.

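max_time: the length of the longest sequence in the batch. For example, if the input consists of three sentences, max_time is the number of words in the longest sentence.

cell.output_size: the number of units (neurons) in the RNN cell.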

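outputs: a tensor. If time_major is True, outputs has shape [max_time, batch_size, cell.output_size], and the inputs must then also be time-major ([max_time, batch_size, input_size]) so that the input and output layouts agree. If time_major is False (the default), outputs has shape [batch_size, max_time, cell.output_size].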

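state: the final state, i.e. the state produced at the last step of each sequence. For cells such as BasicRNNCell or GRUCell, state has shape [batch_size, cell.output_size]. For BasicLSTMCell (with the default state_is_tuple=True), state is an LSTMStateTuple (c, h) whose two tensors each have shape [batch_size, cell.output_size]; taken together they form a [2, batch_size, cell.output_size] array, where the 2 corresponds to the LSTM's cell state and hidden state.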
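To make these shapes concrete, here is a small NumPy sketch (not TensorFlow code) of how a batch of three sentences might be padded into an inputs array. The token ids, the 50-entry embedding table and input_size = 6 are made-up values for illustration; the collected lengths are what you would feed as sequence_length.

```python
import numpy as np

# Hypothetical token ids for three "sentences" of different lengths
sentences = [
    [4, 8, 15],         # 3 words
    [16, 23],           # 2 words
    [42, 7, 9, 1, 3],   # 5 words (the longest)
]

batch_size = len(sentences)                  # first dimension of the inputs
max_time = max(len(s) for s in sentences)    # length of the longest sentence
seq_length = np.array([len(s) for s in sentences], dtype=np.int32)

# Hypothetical embedding table: vocabulary of 50 ids, input_size = 6
input_size = 6
embedding = np.random.default_rng(0).normal(size=(50, input_size)).astype(np.float32)

# Batch-major layout (time_major=False): [batch_size, max_time, input_size]
inputs_batch_major = np.zeros((batch_size, max_time, input_size), dtype=np.float32)
for i, s in enumerate(sentences):
    inputs_batch_major[i, :len(s)] = embedding[s]   # remaining steps stay zero vectors

# Time-major layout (time_major=True): [max_time, batch_size, input_size]
inputs_time_major = np.transpose(inputs_batch_major, (1, 0, 2))

print(batch_size, max_time, seq_length)                   # 3 5 [3 2 5]
print(inputs_batch_major.shape, inputs_time_major.shape)  # (3, 5, 6) (5, 3, 6)
```

The zero rows play the same role as the "padded with zero vectors" instance in the examples further down.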

What state contains

First question: why does the shape of state change?

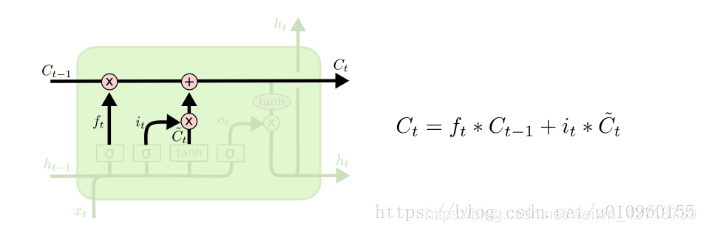

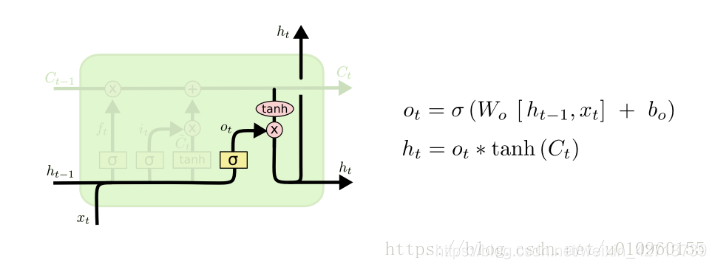

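Take LSTM and GRU as the cell passed to tf.nn.dynamic_rnn. When cell is an LSTM, state has shape [2, batch_size, cell.output_size]; when cell is a GRU, state has shape [batch_size, cell.output_size]. The difference comes from the structure of the two cells. The two figures above show the LSTM cell: each step produces two outputs, C_t and H_t. The first figure shows C_t (the cell state), which controls what information is remembered and what is forgotten; the second shows H_t (the hidden state), which is the cell's final output. An LSTM's state consists of both C_t and H_t.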
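The (C_t, H_t) pair can be sketched in NumPy as a single LSTM step. This is a textbook LSTM written for illustration, assuming one fused weight matrix W and the gate order input, candidate, forget, output; it is not TensorFlow's BasicLSTMCell code (which, for example, also adds a forget_bias).

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, c_prev, h_prev, W, b):
    """One LSTM step. x: [batch, input_size]; c_prev, h_prev: [batch, units];
    W: [input_size + units, 4 * units]; b: [4 * units]. Returns (c, h)."""
    z = np.concatenate([x, h_prev], axis=1) @ W + b
    i, j, f, o = np.split(z, 4, axis=1)                # input gate, candidate, forget gate, output gate
    c = sigmoid(f) * c_prev + sigmoid(i) * np.tanh(j)  # C_t: what to keep and what to forget
    h = sigmoid(o) * np.tanh(c)                        # H_t: the cell's output
    return c, h

rng = np.random.default_rng(0)
batch_size, input_size, units = 4, 3, 5
W = rng.normal(scale=0.1, size=(input_size + units, 4 * units))
b = np.zeros(4 * units)
x = rng.normal(size=(batch_size, input_size))
c0 = np.zeros((batch_size, units))
h0 = np.zeros((batch_size, units))

c1, h1 = lstm_step(x, c0, h0, W, b)
state = np.stack([c1, h1])   # (c, h) stacked -> [2, batch_size, units]
print(state.shape)           # (2, 4, 5)
```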

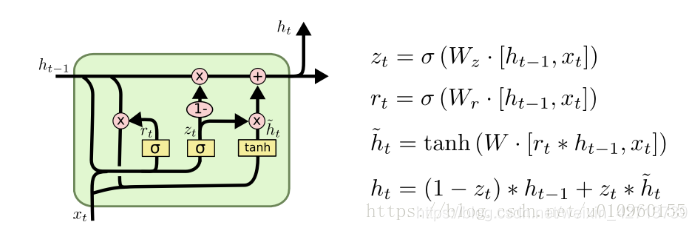

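When cell is a GRU, there is only one state, because the GRU simplifies C_t and H_t by merging them into H_t. As the figure above shows, the GRU combines the forget gate and the input gate into a single update gate, and the cell no longer has a separate cell state, only a hidden state.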
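For comparison, a minimal NumPy sketch of one GRU step. The weight names Wz, Wr and Wh are hypothetical and biases are omitted; the update h_t = z * h_prev + (1 - z) * h_tilde is one common formulation. The point is that the function returns a single array, so the state is just H_t.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x, h_prev, Wz, Wr, Wh):
    """One GRU step; returns only the new hidden state h_t ([batch, units])."""
    xh = np.concatenate([x, h_prev], axis=1)
    z = sigmoid(xh @ Wz)   # update gate (takes over from LSTM's forget + input gates)
    r = sigmoid(xh @ Wr)   # reset gate
    h_tilde = np.tanh(np.concatenate([x, r * h_prev], axis=1) @ Wh)
    return z * h_prev + (1.0 - z) * h_tilde   # no separate cell state

rng = np.random.default_rng(0)
batch_size, input_size, units = 4, 3, 5
Wz, Wr, Wh = (rng.normal(scale=0.1, size=(input_size + units, units)) for _ in range(3))
x = rng.normal(size=(batch_size, input_size))

h1 = gru_step(x, np.zeros((batch_size, units)), Wz, Wr, Wh)
print(h1.shape)   # (4, 5): the state is just H_t
```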

Second question: how are outputs and state related?

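In short: if cell is an LSTM, state is a tuple (C_t, H_t), and H_t equals the output at the last time step in outputs. With state of shape [2, batch_size, cell.output_size] and outputs of shape [batch_size, max_time, cell.output_size], this means state[1, :, :] == outputs[:, -1, :]. The same holds for a GRU, whose state is just H_t: state == outputs[:, -1, :] (or outputs[-1] when time_major=True). This assumes every sequence runs the full max_time steps; when sequence_length is given, a shorter sequence's state equals its output at its last valid step, because outputs past the sequence length are filled with zeros.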
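The indexing can be checked with a toy NumPy RNN. This assumes a plain tanh cell written by hand (the same kind of update as a basic RNN cell, with random weights) and no sequence_length, so every sequence runs all max_time steps.

```python
import numpy as np

def run_rnn(inputs, Wx, Wh, b):
    """Unroll a basic tanh RNN over batch-major inputs [batch, max_time, input_size].
    Returns outputs [batch, max_time, units] and the final state [batch, units]."""
    batch_size, max_time, _ = inputs.shape
    h = np.zeros((batch_size, Wh.shape[0]))
    steps = []
    for t in range(max_time):
        h = np.tanh(inputs[:, t, :] @ Wx + h @ Wh + b)  # for this cell, output == new state
        steps.append(h)
    return np.stack(steps, axis=1), h

rng = np.random.default_rng(1)
inputs = rng.normal(size=(4, 2, 3))   # [batch_size, max_time, input_size]
Wx = rng.normal(scale=0.5, size=(3, 5))
Wh = rng.normal(scale=0.5, size=(5, 5))
b = np.zeros(5)

outputs, state = run_rnn(inputs, Wx, Wh, b)
print(np.array_equal(state, outputs[:, -1, :]))  # True: last time step of every instance
print(outputs[-1].shape)                         # (2, 5): outputs[-1] is the last *instance*
outputs_tm = np.transpose(outputs, (1, 0, 2))    # time-major view [max_time, batch, units]
print(np.array_equal(state, outputs_tm[-1]))     # True: outputs[-1] works only when time-major
```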

Code examples

Single hidden layer

```python
import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder, tf.contrib, tf.Session)
import numpy as np

n_steps = 2
n_inputs = 3
n_neurons = 5  # number of units (neurons) in the cell

X = tf.placeholder(tf.float32, [None, n_steps, n_inputs])
basic_cell = tf.contrib.rnn.BasicRNNCell(num_units=n_neurons)
seq_length = tf.placeholder(tf.int32, [None])
outputs, states = tf.nn.dynamic_rnn(basic_cell, X, dtype=tf.float32,
                                    sequence_length=seq_length)

init = tf.global_variables_initializer()

X_batch = np.array([
    # step 0     step 1
    [[0, 1, 2], [9, 8, 7]],  # instance 1
    [[3, 4, 5], [0, 0, 0]],  # instance 2 (padded with zero vectors)
    [[6, 7, 8], [6, 5, 4]],  # instance 3
    [[9, 0, 1], [3, 2, 1]],  # instance 4
])
print(X_batch.shape)  # (4, 2, 3)

# Must be 1-D, with one length per instance (len == X_batch.shape[0])
seq_length_batch = np.array([2, 1, 2, 2])

with tf.Session() as sess:
    init.run()
    outputs_val, states_val = sess.run(
        [outputs, states], feed_dict={X: X_batch, seq_length: seq_length_batch})
    print("outputs_val.shape:", outputs_val.shape)
    print("states_val.shape:", states_val.shape)
    print("outputs_val:", outputs_val)
    print("states_val:", states_val)
```

Output:

```
(4, 2, 3)
outputs_val.shape: (4, 2, 5)
states_val.shape: (4, 5)
outputs_val: [[[ 0.50321156 -0.41240135 -0.84440345 -0.02686742 -0.8964557 ]
  [ 0.9573825 -0.99886006 -0.9999997 0.991583 -1. ]]

 [[ 0.92471015 -0.93656176 -0.9996478 0.5771828 -0.9999348 ]
  [ 0. 0. 0. 0. 0. ]]

 [[ 0.99078256 -0.9948545 -0.99999946 0.87246966 -0.99999994]
  [ 0.58877105 -0.9849114 -0.9999669 0.9589857 -0.99999225]]

 [[-0.0043383 -0.9785873 -0.99291325 -0.7907419 -0.99364054]
  [-0.48218966 -0.94694626 -0.8577129 0.88309634 -0.9994416 ]]]
states_val: [[ 0.9573825 -0.99886006 -0.9999997 0.991583 -1. ]
 [ 0.92471015 -0.93656176 -0.9996478 0.5771828 -0.9999348 ]
 [ 0.58877105 -0.9849114 -0.9999669 0.9589857 -0.99999225]
 [-0.48218966 -0.94694626 -0.8577129 0.88309634 -0.9994416 ]]
```

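The input X is a [batch_size, step, input_size] = [4, 2, 3] tensor. Note that we use BasicRNNCell here (like GRUCell, it keeps a single state vector) with only one recurrent layer. After tf.nn.dynamic_rnn, outputs has shape [4, 2, 5] and state has shape [4, 5]. Each row of state equals the output at that instance's last valid step: for instances 1, 3 and 4 (length 2) this is the last row of outputs, while for instance 2 (sequence_length = 1) it is the step-0 output, since its step-1 output is zero-filled.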
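What dynamic_rnn does with sequence_length can be approximated in NumPy: past each instance's length it writes zero outputs and keeps copying the last state. The masking loop below is a hand-written sketch of that behaviour on the same X_batch and seq_length_batch, with small random weights instead of TensorFlow's initializer, so the numbers differ from the printout above; gathering outputs[b, seq_length[b] - 1] then recovers the state.

```python
import numpy as np

def run_rnn_masked(inputs, seq_length, Wx, Wh, b):
    """Basic tanh RNN that mimics dynamic_rnn's sequence_length handling:
    outputs past a sequence's length are zero and its state stops updating."""
    batch_size, max_time, _ = inputs.shape
    units = Wh.shape[0]
    h = np.zeros((batch_size, units))
    outputs = np.zeros((batch_size, max_time, units))
    for t in range(max_time):
        new_h = np.tanh(inputs[:, t, :] @ Wx + h @ Wh + b)
        active = (t < seq_length)[:, None]          # [batch, 1]: sequences still running
        h = np.where(active, new_h, h)              # finished sequences keep their last state
        outputs[:, t, :] = np.where(active, new_h, 0.0)
    return outputs, h

X_batch = np.array([
    [[0, 1, 2], [9, 8, 7]],
    [[3, 4, 5], [0, 0, 0]],
    [[6, 7, 8], [6, 5, 4]],
    [[9, 0, 1], [3, 2, 1]],
], dtype=np.float64)
seq_length_batch = np.array([2, 1, 2, 2])

rng = np.random.default_rng(0)
outputs, state = run_rnn_masked(X_batch, seq_length_batch,
                                rng.normal(scale=0.1, size=(3, 5)),
                                rng.normal(scale=0.1, size=(5, 5)),
                                np.zeros(5))

# Gather each instance's output at its last valid step
last_valid = outputs[np.arange(len(seq_length_batch)), seq_length_batch - 1]
print(np.array_equal(state, last_valid))          # True
print(np.array_equal(state, outputs[:, -1, :]))   # False: instance 2 has a zero row at step 1
```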

Multiple hidden layers

```python
import tensorflow as tf  # TensorFlow 1.x API
import numpy as np

tf.reset_default_graph()  # start from an empty graph if the previous example ran in the same process

n_steps = 2
n_inputs = 3
n_neurons = 5
n_layers = 3

X = tf.placeholder(tf.float32, [None, n_steps, n_inputs])
seq_length = tf.placeholder(tf.int32, [None])

layers = [tf.contrib.rnn.BasicRNNCell(num_units=n_neurons,
                                      activation=tf.nn.relu)
          for layer in range(n_layers)]
multi_layer_cell = tf.contrib.rnn.MultiRNNCell(layers)
outputs, states = tf.nn.dynamic_rnn(multi_layer_cell, X, dtype=tf.float32,
                                    sequence_length=seq_length)

init = tf.global_variables_initializer()

X_batch = np.array([
    # step 0     step 1
    [[0, 1, 2], [9, 8, 7]],  # instance 1
    [[3, 4, 5], [0, 0, 0]],  # instance 2 (padded with zero vectors)
    [[6, 7, 8], [6, 5, 4]],  # instance 3
    [[9, 0, 1], [3, 2, 1]],  # instance 4
])
seq_length_batch = np.array([2, 1, 2, 2])

with tf.Session() as sess:
    init.run()
    outputs_val, states_val = sess.run(
        [outputs, states], feed_dict={X: X_batch, seq_length: seq_length_batch})
    # The next two lines print the symbolic tensors (static shapes), not evaluated values
    print("outputs_val.shape:", outputs)
    print("states_val.shape:", states)
    print("outputs_val:", outputs_val)
    print("states_val:", states_val)
```

Output:

```
outputs_val.shape: Tensor("rnn/transpose_1:0", shape=(?, 2, 5), dtype=float32)
states_val.shape: (<tf.Tensor 'rnn/while/Exit_3:0' shape=(?, 5) dtype=float32>,
 <tf.Tensor 'rnn/while/Exit_4:0' shape=(?, 5) dtype=float32>,
 <tf.Tensor 'rnn/while/Exit_5:0' shape=(?, 5) dtype=float32>)
outputs_val: [[[0. 0. 0.2550385 0.12092578 0.40954068]
  [0. 0. 1.896489 0.6294941 3.8094907 ]]

 [[0. 0. 1.0293288 0.4813519 1.824276 ]
  [0. 0. 0. 0. 0. ]]

 [[0. 0. 1.803619 0.84177816 3.2390108 ]
  [0. 0.78937817 0. 0. 4.249421 ]]

 [[0. 0. 2.3075228 0.9682029 3.3698235 ]
  [0. 2.1534934 0. 0. 2.5193954 ]]]
states_val: (array([[0. , 5.516548 , 5.836305 , 0. , 1.5091596 ],
       [0. , 2.882547 , 2.6163056 , 0. , 0.77435017],
       [0. , 1.4631326 , 1.4441929 , 0. , 2.7281032 ],
       [3.9093783 , 0. , 0. , 0. , 3.2143312 ]],
      dtype=float32),
 array([[0. , 1.8190625 , 2.4112606 , 0. , 2.3922706 ],
       [0. , 1.048449 , 1.0651987 , 0. , 1.2866479 ],
       [2.540713 , 0. , 2.0854363 , 0. , 0.15023205],
       [1.324711 , 0. , 0. , 0. , 0. ]],
      dtype=float32),
 array([[0. , 0. , 1.896489 , 0.6294941 , 3.8094907 ],
       [0. , 0. , 1.0293288 , 0.4813519 , 1.824276 ],
       [0. , 0.78937817, 0. , 0. , 4.249421 ],
       [0. , 2.1534934 , 0. , 0. , 2.5193954 ]],
      dtype=float32))
```

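Here outputs holds only the output of the last (top) layer, with shape [batch_size, step, n_neurons] = [4, 2, 5].

states holds the final state of every layer: a tuple of three tensors, each of shape [batch_size, n_neurons] = [4, 5].

Looking at the values, the last array in states equals the top layer's output at each instance's last valid step: the step-1 row of outputs for instances 1, 3 and 4, and the step-0 row for instance 2, whose sequence_length is 1.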
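A NumPy sketch of the same stacking, assuming ReLU layers as in the example above, random weights and no sequence_length. It runs each layer over the whole sequence before moving on to the next, which gives the same values as MultiRNNCell's step-by-step stacking because each layer depends only on its own previous state and the layer below at the same step.

```python
import numpy as np

def run_stacked_rnn(inputs, layers):
    """layers: list of (Wx, Wh, b) for a stack of ReLU RNN layers (like MultiRNNCell).
    Returns the top layer's outputs and a tuple with each layer's final state."""
    x = inputs
    final_states = []
    for Wx, Wh, b in layers:
        batch_size, max_time, _ = x.shape
        h = np.zeros((batch_size, Wh.shape[0]))
        steps = []
        for t in range(max_time):
            h = np.maximum(0.0, x[:, t, :] @ Wx + h @ Wh + b)   # ReLU activation
            steps.append(h)
        x = np.stack(steps, axis=1)   # this layer's outputs feed the next layer
        final_states.append(h)
    return x, tuple(final_states)

rng = np.random.default_rng(0)
n_inputs, n_neurons, n_layers = 3, 5, 3
layers = []
for k in range(n_layers):
    in_dim = n_inputs if k == 0 else n_neurons
    layers.append((rng.normal(size=(in_dim, n_neurons)),
                   rng.normal(size=(n_neurons, n_neurons)),
                   np.zeros(n_neurons)))

outputs, states = run_stacked_rnn(rng.normal(size=(4, 2, n_inputs)), layers)
print(outputs.shape)                                   # (4, 2, 5): top layer only
print([s.shape for s in states])                       # [(4, 5), (4, 5), (4, 5)]
print(np.array_equal(states[-1], outputs[:, -1, :]))   # True
```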

Multiple hidden layers with BasicLSTMCell

```python
import tensorflow as tf  # TensorFlow 1.x API
import numpy as np

tf.reset_default_graph()  # start from an empty graph if the previous examples ran in the same process

n_steps = 2
n_inputs = 3
n_neurons = 5
n_layers = 3

X = tf.placeholder(tf.float32, [None, n_steps, n_inputs])
seq_length = tf.placeholder(tf.int32, [None])

# state_is_tuple=True is the default, so each layer's state is an LSTMStateTuple(c, h)
layers = [tf.contrib.rnn.BasicLSTMCell(num_units=n_neurons,
                                       activation=tf.nn.relu)
          for layer in range(n_layers)]
multi_layer_cell = tf.contrib.rnn.MultiRNNCell(layers)
outputs, states = tf.nn.dynamic_rnn(multi_layer_cell, X, dtype=tf.float32,
                                    sequence_length=seq_length)

init = tf.global_variables_initializer()

X_batch = np.array([
    # step 0     step 1
    [[0, 1, 2], [9, 8, 7]],  # instance 1
    [[3, 4, 5], [0, 0, 0]],  # instance 2 (padded with zero vectors)
    [[6, 7, 8], [6, 5, 4]],  # instance 3
    [[9, 0, 1], [3, 2, 1]],  # instance 4
])
seq_length_batch = np.array([2, 1, 2, 2])

with tf.Session() as sess:
    init.run()
    outputs_val, states_val = sess.run(
        [outputs, states], feed_dict={X: X_batch, seq_length: seq_length_batch})
    # The next two lines print the symbolic tensors (static shapes), not evaluated values
    print("outputs_val.shape:", outputs)
    print("states_val.shape:", states)
    print("outputs_val:", outputs_val)
    print("states_val:", states_val)
```

Output:

```
outputs_val.shape: Tensor("rnn/transpose_1:0", shape=(?, 2, 5), dtype=float32)
states_val.shape: (LSTMStateTuple(c=<tf.Tensor 'rnn/while/Exit_3:0' shape=(?, 5) dtype=float32>,
  h=<tf.Tensor 'rnn/while/Exit_4:0' shape=(?, 5) dtype=float32>),
 LSTMStateTuple(c=<tf.Tensor 'rnn/while/Exit_5:0' shape=(?, 5) dtype=float32>,
  h=<tf.Tensor 'rnn/while/Exit_6:0' shape=(?, 5) dtype=float32>),
 LSTMStateTuple(c=<tf.Tensor 'rnn/while/Exit_7:0' shape=(?, 5) dtype=float32>,
  h=<tf.Tensor 'rnn/while/Exit_8:0' shape=(?, 5) dtype=float32>))
outputs_val: [[[0.00209509 0.00182596 0. 0.00067289 0.00347673]
  [0.04328459 0.07312859 0. 0.01560373 0.06793488]]

 [[0.01704519 0.02663972 0. 0.00636475 0.02475912]
  [0. 0. 0. 0. 0. ]]

 [[0.03605811 0.0760659 0. 0.01464115 0.04976365]
  [0.09704518 0.3786289 0. 0.05542209 0.04016687]]

 [[0. 0. 0. 0. 0. ]
  [0.01460283 0.05219102 0. 0.00475517 0. ]]]
states_val: (LSTMStateTuple(c=array([[4.2300582e+00, 6.1886315e-03, 1.9541669e+00, 4.7516477e-01,
        7.1493559e+00],
       [2.2236171e+00, 0.0000000e+00, 4.6114996e-01, 0.0000000e+00,
        2.5368762e+00],
       [3.3151534e+00, 0.0000000e+00, 9.3500906e-01, 1.7955723e+00,
        9.6494026e+00],
       [1.9809818e+00, 0.0000000e+00, 3.0963078e+00, 1.6501746e+00,
        4.6886072e+00]], dtype=float32), h=array([[4.2053738e+00, 3.8800889e-03, 1.9386519e+00, 4.7059658e-01,
        3.4508269e+00],
       [2.0354471e+00, 0.0000000e+00, 4.3142736e-01, 0.0000000e+00,
        1.7041360e+00],
       [3.1316614e+00, 0.0000000e+00, 7.4865651e-01, 1.7757093e+00,
        6.9275556e+00],
       [1.4433841e+00, 0.0000000e+00, 2.3309155e+00, 1.5163686e+00,
        2.4487319e+00]], dtype=float32)),
 LSTMStateTuple(c=array([[0. , 0.89624435, 1.9348668 , 0.699529 , 0. ],
       [0. , 0.41140077, 0.85579544, 0.31884393, 0. ],
       [0. , 2.424478 , 3.900877 , 3.1388435 , 0. ],
       [0. , 0.4629493 , 0.251346 , 0.54938227, 0. ]],
      dtype=float32), h=array([[0. , 0.5113401 , 0.8787495 , 0.3594259 , 0. ],
       [0. , 0.21974793, 0.37868136, 0.14333102, 0. ],
       [0. , 1.6213391 , 1.3959492 , 1.379813 , 0. ],
       [0. , 0.27001444, 0.14599895, 0.3638716 , 0. ]],
      dtype=float32)),
 LSTMStateTuple(c=array([[0.07640141, 0.12756428, 0. , 0.02928707, 0.11843267],
       [0.03226396, 0.0501599 , 0. , 0.01238208, 0.04656338],
       [0.14331082, 0.5718589 , 0. , 0.09317783, 0.0627808 ],
       [0.02764782, 0.0983929 , 0. , 0.0092742 , 0. ]],
      dtype=float32), h=array([[0.04328459, 0.07312859, 0. , 0.01560373, 0.06793488],
       [0.01704519, 0.02663972, 0. , 0.00636475, 0.02475912],
       [0.09704518, 0.3786289 , 0. , 0.05542209, 0.04016687],
       [0.01460283, 0.05219102, 0. , 0.00475517, 0. ]],
      dtype=float32)))
```

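Here states contains three LSTMStateTuples, one per layer, each holding that layer's state after its last valid step. Each tuple has two parts: h, the hidden state (short-term memory), and c, the cell state (long-term memory), both of shape [batch_size, n_neurons] = [4, 5]. The h of the last LSTMStateTuple equals each instance's last valid output in outputs: the step-1 row for instances 1, 3 and 4, and the step-0 row for instance 2, whose sequence_length is 1.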
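The LSTM variant can be sketched the same way. LSTMState below is a local namedtuple that only mirrors the c/h field names of TensorFlow's LSTMStateTuple; the cell uses tanh instead of the example's ReLU activation and omits forget_bias and sequence_length, so it illustrates the structure of states rather than reproducing the printed numbers.

```python
from collections import namedtuple

import numpy as np

# Local stand-in that mirrors LSTMStateTuple's field names; not the TF class
LSTMState = namedtuple("LSTMState", ["c", "h"])

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def run_stacked_lstm(inputs, weights):
    """weights: list of (W, b) per layer, W: [in_dim + units, 4 * units].
    Returns the top layer's outputs and a tuple of per-layer LSTMState(c, h)."""
    x = inputs
    states = []
    for W, b in weights:
        batch_size, max_time, _ = x.shape
        units = W.shape[1] // 4
        c = np.zeros((batch_size, units))
        h = np.zeros((batch_size, units))
        steps = []
        for t in range(max_time):
            z = np.concatenate([x[:, t, :], h], axis=1) @ W + b
            i, j, f, o = np.split(z, 4, axis=1)
            c = sigmoid(f) * c + sigmoid(i) * np.tanh(j)   # long-term memory
            h = sigmoid(o) * np.tanh(c)                    # short-term memory / output
            steps.append(h)
        x = np.stack(steps, axis=1)   # feeds the next layer
        states.append(LSTMState(c=c, h=h))
    return x, tuple(states)

rng = np.random.default_rng(0)
n_inputs, n_neurons, n_layers = 3, 5, 3
weights = []
for k in range(n_layers):
    in_dim = n_inputs if k == 0 else n_neurons
    weights.append((rng.normal(scale=0.5, size=(in_dim + n_neurons, 4 * n_neurons)),
                    np.zeros(4 * n_neurons)))

outputs, states = run_stacked_lstm(rng.normal(size=(4, 2, n_inputs)), weights)
print(len(states), states[0].c.shape, states[0].h.shape)   # 3 (4, 5) (4, 5)
print(np.array_equal(states[-1].h, outputs[:, -1, :]))     # True
```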

