Parameter explanation

The function signature is:

tf.nn.dynamic_rnn(

cell,#RNNCell的一个实例.

inputs,#RNN的一个输入

#如果time_major == False(默认), 则是一个shape为[batch_size, max_time, input_size]的Tensor,或者这些元素的嵌套元组。

#如果time_major == True,则是一个shape为[max_time, batch_size, input_size]的Tensor,或这些元素的嵌套元组。

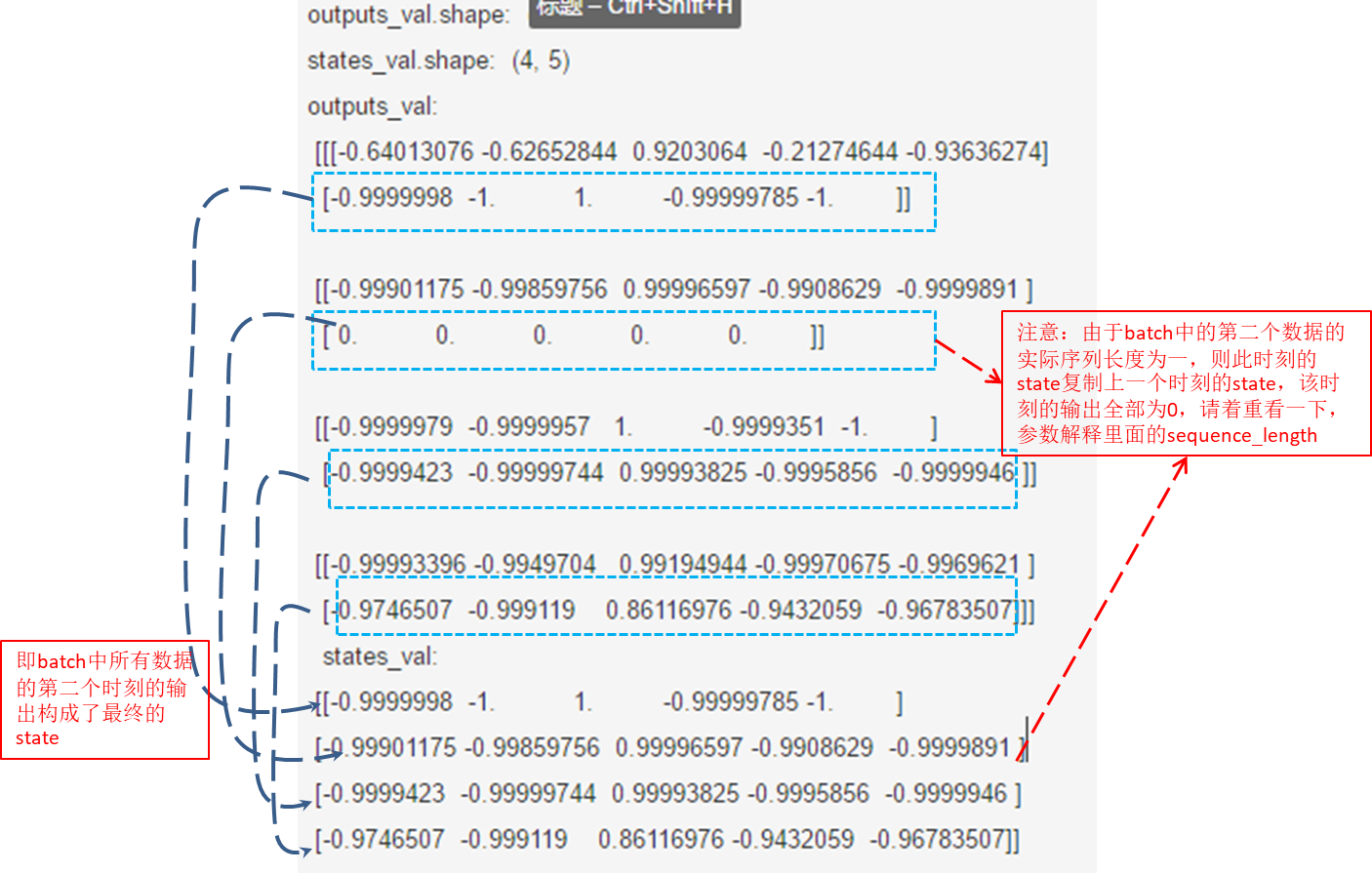

sequence_length=None,# (可选)大小为[batch_size],数据的类型是int32/int64向量。如果当前时间步的index超过该序列的实际长度时,则该时间步不进行计算,RNN的state复制上一个时间步的,同时该时间步的输出全部为零。

initial_state=None,#(可选)RNN的初始state(状态)。如果cell.state_size(一层的RNNCell)是一个整数,那么它必须是一个具有适当类型和形状的张量[batch_size,cell.state_size]。如果cell.state_size是一个元组(多层的RNNCell,如MultiRNNCell),那么它应该是一个张量元组,每个元素的形状为[batch_size,s] for s in cell.state_size。

dtype=None,

parallel_iterations=None,

swap_memory=False,

time_major=False,#time_major: inputs 和outputs 张量的形状格式。如果为True,则这些张量都应该是(都会是)[max_time, batch_size, depth]。如果为false,则这些张量都应该是(都会是)[batch_size,max_time, depth]。time_major=true说明输入和输出tensor的第一维是max_time。否则为batch_size。

#使用time_major =True更有效,因为它避免了RNN计算开始和结束时的转置.但是,大多数TensorFlow数据都是batch-major,因此默认情况下,此函数接受输入并以batch-major形式发出输出.

scope=None

)
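As a rough illustration of what time_major controls, the following NumPy sketch converts a batch-major batch (the same shape as the X_batch used later in this post, with arbitrary contents) into time-major layout. NumPy arrays stand in for TensorFlow tensors here. With the default time_major=False, dynamic_rnn performs equivalent transposes on its inputs and outputs internally, which is the overhead mentioned in the time_major comment.

```python
import numpy as np

# Toy batch-major input: batch_size=4, max_time=2, input_size=3.
batch_major = np.arange(4 * 2 * 3, dtype=np.float32).reshape(4, 2, 3)

# time_major=True expects [max_time, batch_size, input_size]:
# swapping the first two axes converts between the two layouts.
time_major = np.transpose(batch_major, (1, 0, 2))

print(batch_major.shape)  # (4, 2, 3)
print(time_major.shape)   # (2, 4, 3)

# In time-major layout, step t of every sequence is one [batch_size, input_size] slice,
# which is what a per-time-step loop consumes.
t = 1
assert np.array_equal(time_major[t], batch_major[:, t, :])

# Transposing back recovers the batch-major tensor.
assert np.array_equal(np.transpose(time_major, (1, 0, 2)), batch_major)
```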

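Return value:

A pair (outputs, state), where:

outputs: the RNN output Tensor.

If time_major == False (default), it is a Tensor of shape [batch_size, max_time, cell.output_size].

If time_major == True, it is a Tensor of shape [max_time, batch_size, cell.output_size].

state: the final state (for each sequence, the state at its last valid time step).

If cell.state_size is an integer (e.g. BasicRNNCell, where state_size equals output_size), state has shape [batch_size, cell.state_size].

If cell is an LSTM cell, state is an LSTMStateTuple (c, h) whose two members each have shape [batch_size, num_units]; stacking them gives the [2, batch_size, num_units] shape that is sometimes quoted. If cell is a MultiRNNCell of LSTM cells, state is a tuple containing one LSTMStateTuple per layer.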
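To make the nesting of state (and of a matching initial_state) concrete, here is a NumPy-only sketch. `LSTMStateTuple` is a local namedtuple standing in for TensorFlow's class, and `zeros_like_state_size` is a hypothetical helper that imitates the structure of what `cell.zero_state(batch_size, dtype)` returns; it does not call TensorFlow.

```python
from collections import namedtuple

import numpy as np

# Local stand-in for TensorFlow's LSTMStateTuple (a namedtuple with fields c and h).
LSTMStateTuple = namedtuple("LSTMStateTuple", ["c", "h"])


def zeros_like_state_size(state_size, batch_size):
    """Hypothetical helper: build an all-zero state whose nested structure mirrors
    state_size, turning every integer s into a [batch_size, s] array."""
    if isinstance(state_size, int):
        return np.zeros((batch_size, state_size), dtype=np.float32)
    if isinstance(state_size, LSTMStateTuple):
        return LSTMStateTuple(*(zeros_like_state_size(s, batch_size) for s in state_size))
    return tuple(zeros_like_state_size(s, batch_size) for s in state_size)


batch_size, num_units, n_layers = 4, 5, 3

# BasicRNNCell(5): state_size is the integer 5 -> one [4, 5] array.
rnn_state = zeros_like_state_size(num_units, batch_size)

# BasicLSTMCell(5): state_size is LSTMStateTuple(c=5, h=5) -> (c, h), each [4, 5].
lstm_state = zeros_like_state_size(LSTMStateTuple(num_units, num_units), batch_size)

# MultiRNNCell of 3 LSTM cells: a tuple with one LSTMStateTuple per layer.
multi_state = zeros_like_state_size(
    tuple(LSTMStateTuple(num_units, num_units) for _ in range(n_layers)), batch_size
)

print(rnn_state.shape)                                  # (4, 5)
print(np.stack(lstm_state).shape)                       # (2, 4, 5)
print(len(multi_state), type(multi_state[0]).__name__)  # 3 LSTMStateTuple
```

The [2, batch_size, num_units] view only appears once c and h are stacked; dynamic_rnn itself returns them as two separate tensors inside the tuple.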

Code walkthrough:

Single-layer RNN

```python
# Written for TensorFlow 1.x (tf.placeholder, tf.Session and tf.contrib are not available in TF 2.x)
import tensorflow as tf
import numpy as np

n_steps = 2
n_inputs = 3
n_neurons = 5  # i.e., hidden_size

X = tf.placeholder(tf.float32, [None, n_steps, n_inputs])
basic_cell = tf.contrib.rnn.BasicRNNCell(num_units=n_neurons)
seq_length = tf.placeholder(tf.int32, [None])
outputs, states = tf.nn.dynamic_rnn(basic_cell, X, dtype=tf.float32,
                                    sequence_length=seq_length)

init = tf.global_variables_initializer()

X_batch = np.array([
    [[0, 1, 2], [9, 8, 7]],  # instance 1
    [[3, 4, 5], [0, 0, 0]],  # instance 2
    [[6, 7, 8], [6, 5, 4]],  # instance 3
    [[9, 0, 1], [3, 2, 1]],  # instance 4
])
# i.e., batch=4, step=2, n_inputs=3
seq_length_batch = np.array([2, 1, 2, 2])  # actual sequence length of each sample

with tf.Session() as sess:
    init.run()
    outputs_val, states_val = sess.run(
        [outputs, states], feed_dict={X: X_batch, seq_length: seq_length_batch})
    print('outputs_val.shape: ', outputs_val.shape, "states_val.shape: ", states_val.shape)
    print('outputs_val: ', outputs_val, "states_val:", states_val)
```

The result is as follows:

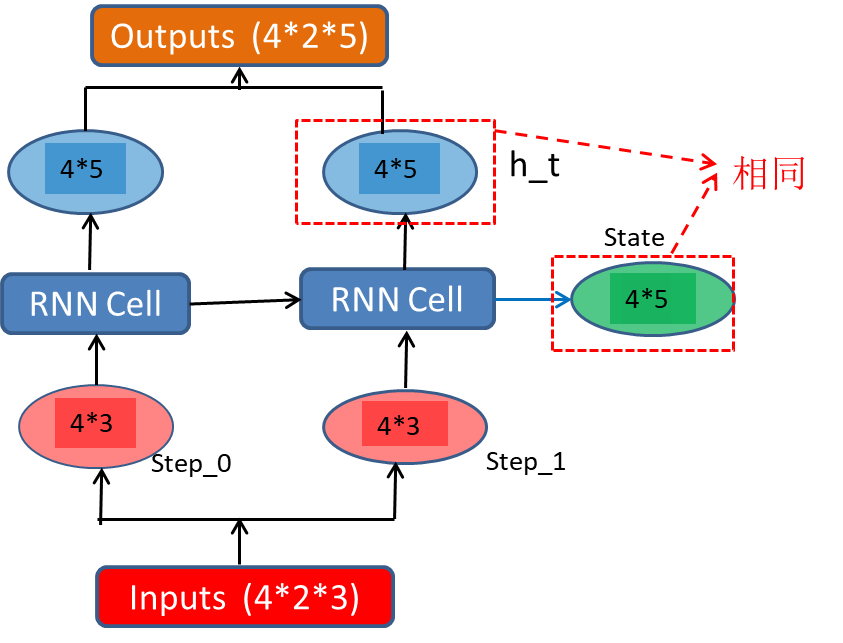

The data flow is as follows:
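The single-layer data flow can be sketched in NumPy as below. This is an illustration under stated assumptions, not TensorFlow's implementation: `basic_rnn` is a hypothetical helper, the weights are random (so the numbers will not match the TensorFlow output), and the cell follows BasicRNNCell's update h_t = tanh(W·[x_t, h_{t-1}] + b). It shows how sequence_length acts: once t reaches a sequence's length, that step's output is zero and the state is carried over unchanged.

```python
import numpy as np


def basic_rnn(x, seq_len, num_units, seed=0):
    """Hypothetical sketch of one BasicRNNCell layer unrolled like dynamic_rnn.

    x: [batch_size, max_time, input_size] (batch-major); seq_len: [batch_size].
    Returns (outputs [batch_size, max_time, num_units], state [batch_size, num_units]).
    """
    rng = np.random.default_rng(seed)
    batch_size, max_time, input_size = x.shape
    # Random weights over the concatenation [x_t, h_{t-1}], zero bias.
    w = rng.normal(scale=0.1, size=(input_size + num_units, num_units))
    b = np.zeros(num_units)

    h = np.zeros((batch_size, num_units))  # zero initial state
    outputs = np.zeros((batch_size, max_time, num_units))
    for t in range(max_time):
        h_new = np.tanh(np.concatenate([x[:, t, :], h], axis=1) @ w + b)
        valid = (t < seq_len)[:, None]                   # [batch_size, 1] mask
        h = np.where(valid, h_new, h)                    # past the end: keep old state
        outputs[:, t, :] = np.where(valid, h_new, 0.0)   # past the end: zero output
    return outputs, h


X_batch = np.array([
    [[0, 1, 2], [9, 8, 7]],  # instance 1
    [[3, 4, 5], [0, 0, 0]],  # instance 2
    [[6, 7, 8], [6, 5, 4]],  # instance 3
    [[9, 0, 1], [3, 2, 1]],  # instance 4
], dtype=np.float64)
seq_length_batch = np.array([2, 1, 2, 2])

outputs, state = basic_rnn(X_batch, seq_length_batch, num_units=5)
print(outputs.shape, state.shape)            # (4, 2, 5) (4, 5)
print(np.allclose(outputs[1, 1], 0.0))       # True: instance 2 has length 1
print(np.allclose(state[1], outputs[1, 0]))  # True: its state is its step-1 output
```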

Multi-layer RNN

The example above is a single-layer RNN. When the RNN has more than one layer, it looks like this.

Code:

```python
# Written for TensorFlow 1.x (tf.placeholder, tf.Session and tf.contrib are not available in TF 2.x)
import tensorflow as tf
import numpy as np

n_steps = 2
n_inputs = 3
n_neurons = 5  # i.e., hidden_size
n_layers = 3   # number of hidden layers

X = tf.placeholder(tf.float32, [None, n_steps, n_inputs])
seq_length = tf.placeholder(tf.int32, [None])

# One independent cell per layer, using ReLU instead of the default tanh
layers = [tf.contrib.rnn.BasicRNNCell(num_units=n_neurons, activation=tf.nn.relu)
          for _ in range(n_layers)]
multi_layer_cell = tf.contrib.rnn.MultiRNNCell(layers)
outputs, states = tf.nn.dynamic_rnn(multi_layer_cell, X,
                                    dtype=tf.float32, sequence_length=seq_length)

init = tf.global_variables_initializer()

X_batch = np.array([
    [[0, 1, 2], [9, 8, 7]],  # instance 1
    [[3, 4, 5], [0, 0, 0]],  # instance 2
    [[6, 7, 8], [6, 5, 4]],  # instance 3
    [[9, 0, 1], [3, 2, 1]],  # instance 4
])
# i.e., batch=4, step=2, n_inputs=3
seq_length_batch = np.array([2, 1, 2, 2])  # actual sequence length of each sample

with tf.Session() as sess:
    init.run()
    outputs_val, states_val = sess.run(
        [outputs, states], feed_dict={X: X_batch, seq_length: seq_length_batch})
    # outputs/states are the symbolic tensors, so this line prints their static shapes
    print('outputs_val.shape: ', outputs, "states_val.shape: ", states)
    print('outputs_val: ', outputs_val, "states_val:", states_val)
```

Result:

```
outputs_val.shape: Tensor("rnn/transpose_1:0", shape=(?, 2, 5), dtype=float32)
states_val.shape: (<tf.Tensor 'rnn/while/Exit_3:0' shape=(?, 5) dtype=float32>, <tf.Tensor 'rnn/while/Exit_4:0' shape=(?, 5) dtype=float32>, <tf.Tensor 'rnn/while/Exit_5:0' shape=(?, 5) dtype=float32>)
outputs_val:
[[[0.16912684 0. 0.08588259 0. 0. ]
  [0.5697968 0.15893483 0. 0.34489262 0. ]]

 [[0.31041974 0. 0.11882807 0. 0. ]
  [0. 0. 0. 0. 0. ]]

 [[0.49713948 0. 0.04162228 0.04990477 0. ]
  [0.16998267 0. 0. 0.10351204 0.03232437]]

 [[0.76482284 0.12405375 0. 0.43402794 0. ]
  [0.4364532 0. 0.21812947 0.31527176 0.10070064]]]
states_val:
(array([[4.4701347 , 1.4318902 , 0. , 0. , 0.6220417 ],
       [0.26799655, 1.5918089 , 0. , 0. , 0. ],
       [3.3658004 , 0.3127355 , 0. , 0. , 0.17700624],
       [0. , 0. , 1.9166557 , 0. , 0. ]],
      dtype=float32), array([[0.5263379 , 0.8757478 , 0. , 0. , 0.09927309],
       [0.7071353 , 0.19394904, 0.5685146 , 0. , 0. ],
       [0.499615 , 0.2132711 , 0. , 0. , 0.20847398],
       [1.625023 , 0.28453514, 0. , 0.33261997, 0.0146603 ]],
      dtype=float32), array([[0.5697968 , 0.15893483, 0. , 0.34489262, 0. ],
       [0.31041974, 0. , 0.11882807, 0. , 0. ],
       [0.16998267, 0. , 0. , 0.10351204, 0.03232437],
       [0.4364532 , 0. , 0.21812947, 0.31527176, 0.10070064]],
      dtype=float32))
```

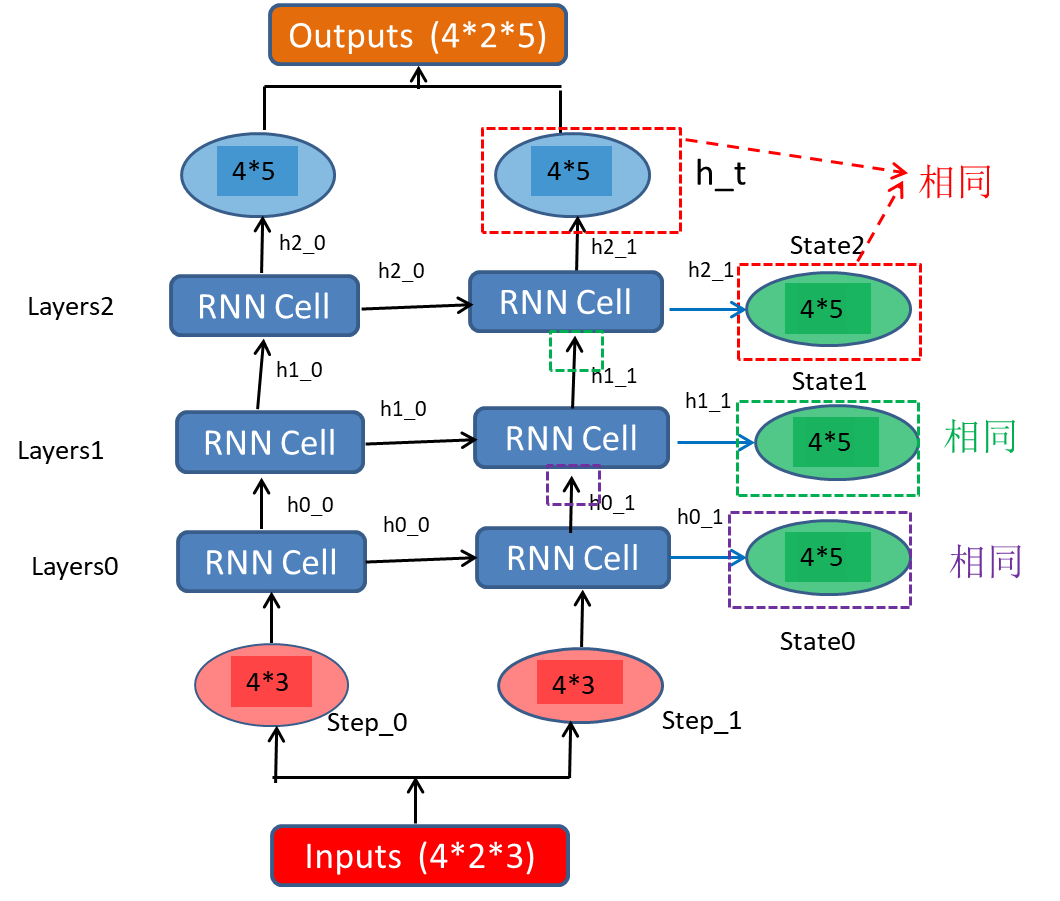

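From the results above, the output is still a single tensor of size [4,2,5] (note that "?" generally stands for the batch size, which is only fixed when data is fed in). The state, however, becomes a tuple of length n_layers, where each element is the final state of one layer (that layer's output at each sequence's last valid time step), of size [4,5]. That is, the state has the form ([4,5],[4,5],[4,5]), and the last [4,5] element equals the output at each sequence's last valid time step. For example, instance 2 has sequence length 1, so its row in the last state equals its first-step output [0.31041974, 0, 0.11882807, 0, 0], not the all-zero second-step output.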

The data flow is as follows:
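A NumPy sketch of the multi-layer data flow, as a toy model rather than TensorFlow's implementation: `multi_rnn` is a hypothetical helper, the weights are random, and each layer applies a BasicRNNCell-style update with ReLU activation, as in the code above. At each time step the input passes through all layers in turn, past-the-end steps keep every layer's old state and emit zeros, and the returned state holds one entry per layer, the last of which matches the output at each sequence's last valid step.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def multi_rnn(x, seq_len, num_units, n_layers, seed=0):
    """Hypothetical sketch of dynamic_rnn over a MultiRNNCell of n_layers ReLU RNN cells.

    Returns the top layer's outputs [batch, max_time, num_units] and a tuple holding
    one final state [batch, num_units] per layer."""
    rng = np.random.default_rng(seed)
    batch_size, max_time, input_size = x.shape
    in_sizes = [input_size] + [num_units] * (n_layers - 1)
    weights = [rng.normal(scale=0.5, size=(n + num_units, num_units)) for n in in_sizes]
    biases = [np.zeros(num_units) for _ in in_sizes]

    state = tuple(np.zeros((batch_size, num_units)) for _ in in_sizes)
    outputs = np.zeros((batch_size, max_time, num_units))
    for t in range(max_time):
        # One MultiRNNCell step: each layer's new state is also the next layer's input.
        layer_in, new_state = x[:, t, :], []
        for w, b, h in zip(weights, biases, state):
            layer_in = relu(np.concatenate([layer_in, h], axis=1) @ w + b)
            new_state.append(layer_in)
        valid = (t < seq_len)[:, None]
        # Past the end of a sequence, every layer keeps its previous state ...
        state = tuple(np.where(valid, hn, h) for hn, h in zip(new_state, state))
        # ... and the (top-layer) output is zero.
        outputs[:, t, :] = np.where(valid, layer_in, 0.0)
    return outputs, state


X_batch = np.array([
    [[0, 1, 2], [9, 8, 7]],
    [[3, 4, 5], [0, 0, 0]],
    [[6, 7, 8], [6, 5, 4]],
    [[9, 0, 1], [3, 2, 1]],
], dtype=np.float64)
seq_length_batch = np.array([2, 1, 2, 2])

outputs, states = multi_rnn(X_batch, seq_length_batch, num_units=5, n_layers=3)
print(outputs.shape, len(states), states[0].shape)  # (4, 2, 5) 3 (4, 5)
last_valid = outputs[np.arange(4), seq_length_batch - 1]
print(np.allclose(states[-1], last_valid))          # True
```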

Multi-layer LSTM

The code is as follows (in fact, just replace BasicRNNCell in the code above with BasicLSTMCell):

```python
# Written for TensorFlow 1.x (tf.placeholder, tf.Session and tf.contrib are not available in TF 2.x)
import tensorflow as tf
import numpy as np

n_steps = 2
n_inputs = 3
n_neurons = 5  # i.e., hidden_size
n_layers = 3   # number of hidden layers

X = tf.placeholder(tf.float32, [None, n_steps, n_inputs])
seq_length = tf.placeholder(tf.int32, [None])

# One independent BasicLSTMCell per layer
layers = [tf.contrib.rnn.BasicLSTMCell(num_units=n_neurons)
          for _ in range(n_layers)]
multi_layer_cell = tf.contrib.rnn.MultiRNNCell(layers)
outputs, states = tf.nn.dynamic_rnn(multi_layer_cell, X,
                                    dtype=tf.float32, sequence_length=seq_length)

init = tf.global_variables_initializer()

X_batch = np.array([
    [[0, 1, 2], [9, 8, 7]],  # instance 1
    [[3, 4, 5], [0, 0, 0]],  # instance 2
    [[6, 7, 8], [6, 5, 4]],  # instance 3
    [[9, 0, 1], [3, 2, 1]],  # instance 4
])
# i.e., batch=4, step=2, n_inputs=3
seq_length_batch = np.array([2, 1, 2, 2])  # actual sequence length of each sample

with tf.Session() as sess:
    init.run()
    outputs_val, states_val = sess.run(
        [outputs, states], feed_dict={X: X_batch, seq_length: seq_length_batch})
    # outputs/states are the symbolic tensors, so these two lines print their static shapes
    print('outputs_val.shape: \n', outputs)
    print("states_val.shape: \n", states)
    print('outputs_val: \n', outputs_val)
    print("states_val:\n", states_val)
```

Results:

```
outputs_val.shape:
Tensor("rnn/transpose_1:0", shape=(?, 2, 5), dtype=float32)
states_val.shape:
(LSTMStateTuple(c=<tf.Tensor 'rnn/while/Exit_3:0' shape=(?, 5) dtype=float32>, h=<tf.Tensor 'rnn/while/Exit_4:0' shape=(?, 5) dtype=float32>), LSTMStateTuple(c=<tf.Tensor 'rnn/while/Exit_5:0' shape=(?, 5) dtype=float32>, h=<tf.Tensor 'rnn/while/Exit_6:0' shape=(?, 5) dtype=float32>), LSTMStateTuple(c=<tf.Tensor 'rnn/while/Exit_7:0' shape=(?, 5) dtype=float32>, h=<tf.Tensor 'rnn/while/Exit_8:0' shape=(?, 5) dtype=float32>))
outputs_val:
[[[ 3.6594199e-03 -2.3809972e-03 3.6295355e-04 -1.8459697e-04
   -8.4387854e-04]
  [ 1.7839899e-02 -5.6135282e-03 6.7316908e-03 2.8012830e-03
   -1.9292714e-03]]

 [[ 5.0514797e-03 -2.5350808e-03 -1.4125259e-04 -3.9734138e-04
   -3.8697536e-04]
  [ 0.0000000e+00 0.0000000e+00 0.0000000e+00 0.0000000e+00
    0.0000000e+00]]

 [[ 6.1530811e-03 -2.5830804e-03 -1.3008754e-04 -4.9706007e-04
   -4.8092019e-04]
  [ 2.1675983e-02 -4.6836347e-03 7.2841253e-03 3.3016382e-03
   -1.4338250e-03]]

 [[ 6.4758253e-03 2.3308340e-03 7.8704627e-03 4.4720336e-03
   -6.1070466e-05]
  [ 1.3698395e-02 4.9990271e-03 1.8609043e-02 1.1145807e-02
    3.5309794e-03]]]
states_val:
(LSTMStateTuple(c=array([[-0.15694004, 0.20655824, 0.02140534, 1.290602 , 1.0829494 ],
       [-0.092046 , -0.17215544, 0.03073704, 0.7301587 , 0.868821 ],
       [-0.17743632, 0.24454583, -0.11202757, 1.4110444 , 0.99785495],
       [-0.7480043 , 0.54532003, -0.33524883, -0.13812238, -0.7433575 ]],
      dtype=float32), h=array([[-0.00346211, 0.20228496, 0.01092053, 0.596373 , 0.00237695],
       [-0.02712807, -0.16213426, 0.01270847, 0.47801512, 0.02886685],
       [-0.01196137, 0.22819318, -0.05507968, 0.5266304 , 0.01994273],
       [-0.11182017, 0.3645902 , -0.18546359, -0.05767139, -0.14662874]],
      dtype=float32)), LSTMStateTuple(c=array([[-0.1239864 , -0.15757737, -0.05463322, -0.05578279, -0.0355193 ],
       [-0.06876241, -0.05659953, 0.0066802 , 0.00191028, -0.00357829],
       [-0.12697479, -0.15305266, -0.07750382, -0.01697125, -0.06576221],
       [ 0.04750019, -0.03268681, -0.19806221, -0.02590359, -0.05825719]],
      dtype=float32), h=array([[-0.06023093, -0.08405985, -0.0287365 , -0.02379826, -0.01778038],
       [-0.03298169, -0.02795991, 0.00357828, 0.00089366, -0.00178973],
       [-0.06152573, -0.08337288, -0.03940198, -0.00737659, -0.03257041],
       [ 0.02453705, -0.01760371, -0.09145316, -0.01300842, -0.02788338]],
      dtype=float32)), LSTMStateTuple(c=array([[ 0.03627876, -0.0112713 , 0.01375618, 0.00563769, -0.00391612],
       [ 0.01014269, -0.00506173, -0.00028427, -0.00079693, -0.00077602],
       [ 0.0440302 , -0.0094044 , 0.01487851, 0.0066558 , -0.00289687],
       [ 0.02767749, 0.01008328, 0.03725405, 0.02220093, 0.0070855 ]],
      dtype=float32), h=array([[ 0.0178399 , -0.00561353, 0.00673169, 0.00280128, -0.00192927],
       [ 0.00505148, -0.00253508, -0.00014125, -0.00039734, -0.00038698],
       [ 0.02167598, -0.00468363, 0.00728413, 0.00330164, -0.00143383],
       [ 0.0136984 , 0.00499903, 0.01860904, 0.01114581, 0.00353098]],
      dtype=float32)))
```

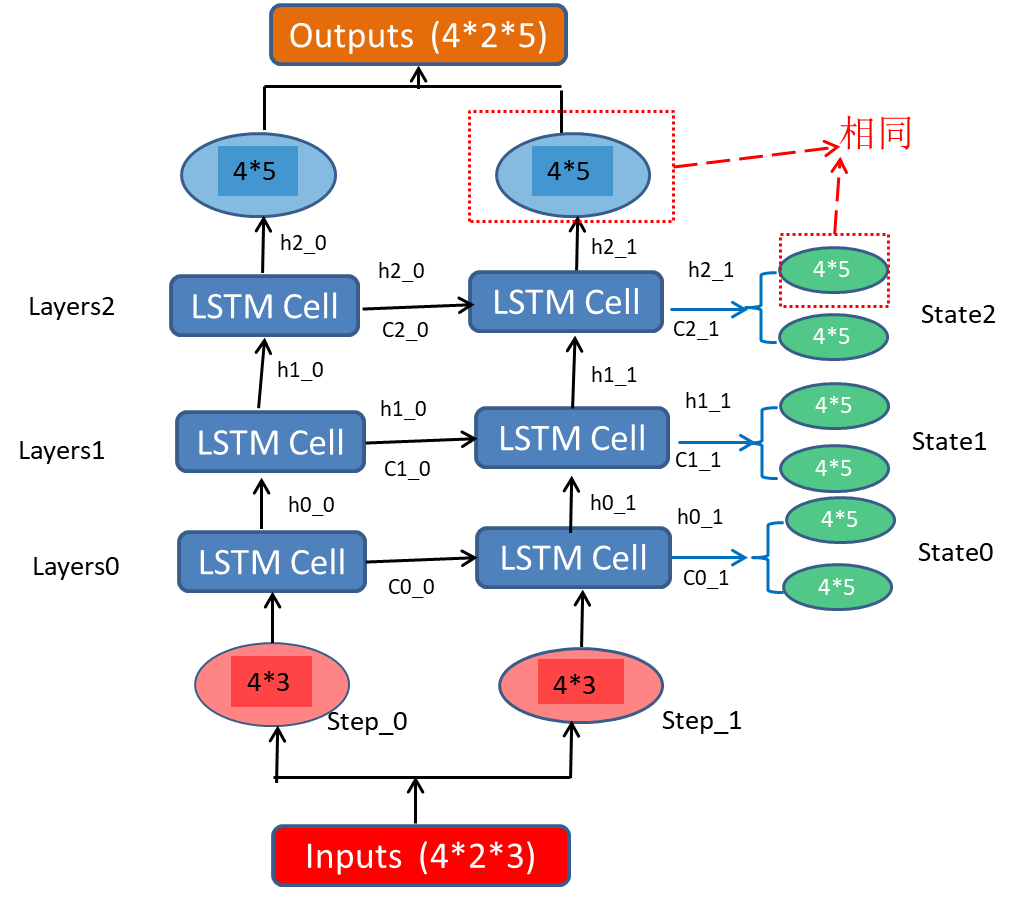

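From the results above, the output is still a tensor of size [4,2,5], and the state is still a tuple of length n_layers. However, each element is no longer a [4,5] array but an LSTMStateTuple containing two items (c, h), i.e. the cell state c_t and the hidden state h_t, each of size [4,5]. That is, the state has the form (LSTMStateTuple, LSTMStateTuple, LSTMStateTuple), where LSTMStateTuple = (c_t, h_t). The h of the last LSTMStateTuple equals the output at each sequence's last valid time step (for instance 2, the first-step output).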

The data flow is as follows:
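The LSTM data flow can be sketched in NumPy as well. Assumptions: `multi_lstm` and `lstm_step` are hypothetical helpers, `LSTMStateTuple` is a local namedtuple standing in for TensorFlow's class, the weights are random (values will not match the output above), and each step follows BasicLSTMCell's gate equations with the default forget_bias=1.0. Compared with the plain RNN, every layer now carries a (c, h) pair, and only h feeds the next layer and the output.

```python
from collections import namedtuple

import numpy as np

# Local stand-in for TensorFlow's LSTMStateTuple (a namedtuple with fields c and h).
LSTMStateTuple = namedtuple("LSTMStateTuple", ["c", "h"])


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, state, w, b, forget_bias=1.0):
    """One BasicLSTMCell-style step: returns (output h, new LSTMStateTuple(c, h))."""
    z = np.concatenate([x_t, state.h], axis=1) @ w + b
    # Gate pre-activations in the order input (i), candidate (j), forget (f), output (o).
    i, j, f, o = np.split(z, 4, axis=1)
    c = state.c * sigmoid(f + forget_bias) + sigmoid(i) * np.tanh(j)
    h = np.tanh(c) * sigmoid(o)
    return h, LSTMStateTuple(c, h)


def multi_lstm(x, seq_len, num_units, n_layers, seed=0):
    """Hypothetical sketch of dynamic_rnn over a MultiRNNCell of n_layers LSTM cells."""
    rng = np.random.default_rng(seed)
    batch_size, max_time, input_size = x.shape
    in_sizes = [input_size] + [num_units] * (n_layers - 1)
    weights = [rng.normal(scale=0.1, size=(n + num_units, 4 * num_units)) for n in in_sizes]
    biases = [np.zeros(4 * num_units) for _ in in_sizes]

    zeros = np.zeros((batch_size, num_units))
    state = tuple(LSTMStateTuple(zeros, zeros) for _ in in_sizes)
    outputs = np.zeros((batch_size, max_time, num_units))
    for t in range(max_time):
        layer_in, new_state = x[:, t, :], []
        for w, b, s in zip(weights, biases, state):
            layer_in, s_new = lstm_step(layer_in, s, w, b)  # h feeds the next layer
            new_state.append(s_new)
        valid = (t < seq_len)[:, None]
        # Past the end of a sequence, both c and h of every layer are carried over.
        state = tuple(
            LSTMStateTuple(np.where(valid, new.c, old.c), np.where(valid, new.h, old.h))
            for new, old in zip(new_state, state)
        )
        outputs[:, t, :] = np.where(valid, layer_in, 0.0)  # top layer's h, zero past the end
    return outputs, state


X_batch = np.array([
    [[0, 1, 2], [9, 8, 7]],
    [[3, 4, 5], [0, 0, 0]],
    [[6, 7, 8], [6, 5, 4]],
    [[9, 0, 1], [3, 2, 1]],
], dtype=np.float64)
seq_length_batch = np.array([2, 1, 2, 2])

outputs, state = multi_lstm(X_batch, seq_length_batch, num_units=5, n_layers=3)
print(outputs.shape)                        # (4, 2, 5)
print([type(s).__name__ for s in state])    # ['LSTMStateTuple', 'LSTMStateTuple', 'LSTMStateTuple']
print(np.allclose(state[-1].h, outputs[np.arange(4), seq_length_batch - 1]))  # True
```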

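References

tf.nn.dynamic_rnn 详解 (tf.nn.dynamic_rnn explained)

The last part of that blog post, where the dimensions are analyzed, is worth reading; it is very clear.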
