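ResNet (residual network) is built from residual blocks. You can think of each block as a small sub-network; stacking these blocks yields a very deep network. Let's first take a quick look at the ResNet structure, and then we'll go through it in detail.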

Why ResNet?

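We know that the deeper a network is, the more information it can capture and the richer its features become. Experiments show, however, that as a network gets deeper, optimization actually gets worse: accuracy drops on both the training data and the test data. A major obstacle is that deeper networks suffer from vanishing and exploding gradients.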

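There is already a remedy for this: normalizing the input data and the data of the intermediate layers (normalized initialization and intermediate normalization layers such as batch normalization). This lets networks trained with stochastic gradient descent (SGD) and backpropagation converge. But it only works for networks with a few dozen layers. Go deeper still and accuracy saturates and then degrades, even on the training set, so the drop is not caused by overfitting. The ResNet paper calls this the degradation problem.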

To get good results from even deeper networks, the great Kaiming He and his colleagues proposed a new architecture: ResNet.

ResNet in detail

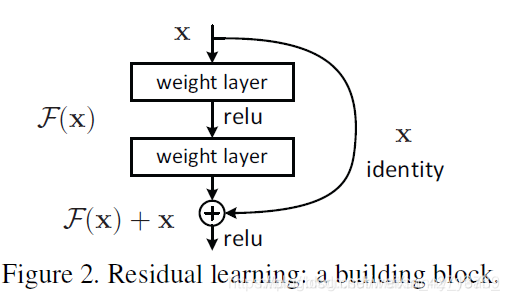

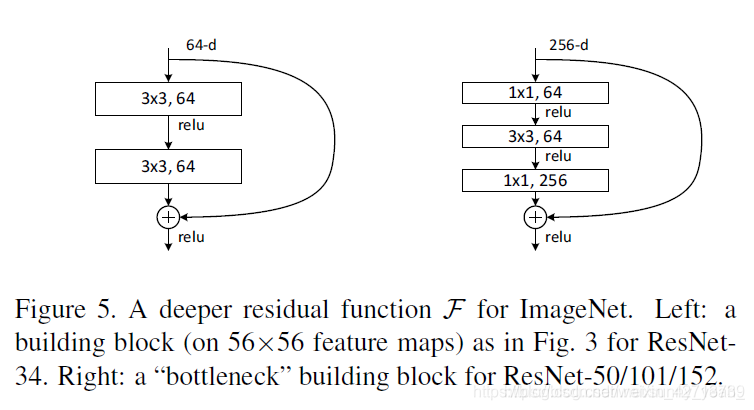

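Here is the ResNet structure diagram again. This diagram is the core of everything we're about to cover! (There are two kinds of ResNet block: a two-layer version and a three-layer version.)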
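The two block types are the two-layer "basic" block (two 3x3 convolutions) and the three-layer "bottleneck" block (1x1 to reduce channels, 3x3, 1x1 to restore them) from the ResNet paper, where the bottleneck version is used in the deeper models. A quick way to see why is to count convolution weights. This is a minimal sketch that ignores biases and BatchNormalization parameters; the function names are hypothetical helpers written for this post.

```python
def conv_params(k, c_in, c_out):
    """Weights of a k x k convolution mapping c_in to c_out channels (bias and BN ignored)."""
    return k * k * c_in * c_out


def basic_block_params(channels):
    """Two-layer block: two 3x3 convolutions, channels -> channels."""
    return 2 * conv_params(3, channels, channels)


def bottleneck_params(channels, width):
    """Three-layer block: 1x1 reduce to `width`, 3x3 at `width`, 1x1 restore to `channels`."""
    return (conv_params(1, channels, width)
            + conv_params(3, width, width)
            + conv_params(1, width, channels))


print(basic_block_params(64))      # 73728
print(bottleneck_params(256, 64))  # 69632
print(basic_block_params(256))     # 1179648
```

A bottleneck block on a 256-channel input with a 64-channel middle costs about as many weights as a basic block on 64 channels, while a basic block working directly on 256 channels would need roughly 17 times more.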

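The mapping we want to learn is H(x).

Instead of learning it directly, we turn the problem into learning the network's residual mapping F(x), where F(x) = H(x) - x.

Residual: the difference between an observed value and an estimated value.

Here H(x) is the observed value and x is the estimate (that is, the feature map output by the previous ResNet block).

The branch that passes x through unchanged is usually called the identity mapping; it is implemented as a skip connection (shortcut). F(x) is called the residual function.

So the problem we solve becomes H(x) = F(x) + x.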

Some of you might wonder: why go through F(x) before getting to H(x)? Why make it so complicated?

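Let's reason from the diagram. In an ordinary convolutional network, the stacked layers have to produce H(x) directly, i.e. H(x) = F(x), right? Now suppose that at some depth the network has already reached its optimum, meaning the error rate is as low as it gets, and that going any deeper causes degradation (the error rate goes up). Updating the weights of the next layer then becomes tricky: they have to be exactly the weights that keep the next layer optimal as well, that is, the added layers have to reproduce their input. Right?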

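A residual network handles this nicely. Again assume that the current depth gives the lowest error rate. If we add another ResNet block, all we need to keep the next layer optimal is F(x) = 0! Since x is already the optimal output, and we want it to stay optimal after the next layer, i.e. we want the output H(x) = x, simply setting F(x) = 0 does the job, right?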

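Of course, that is the ideal case. In practice x will hardly ever be exactly optimal, but at some point it can get very close to the optimum. With ResNet we then only need small updates to the weights of the F(x) part, instead of overhauling the layers as in a plain convolutional network. The intuition given in the ResNet paper is that pushing a residual toward zero is easier than fitting an identity mapping with a stack of nonlinear layers.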
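To make the "just set F(x) = 0" argument concrete, here is a minimal NumPy sketch. It assumes fully connected layers instead of convolutions and leaves out BatchNormalization, purely to keep it short; `plain_block` and `residual_block` are illustrative names, not part of the code later in this post.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def plain_block(x, w1, w2):
    """Two stacked layers that must produce H(x) directly."""
    return relu(x @ w1) @ w2


def residual_block(x, w1, w2):
    """The same two layers used as F(x), plus the identity shortcut: H(x) = F(x) + x."""
    f = relu(x @ w1) @ w2
    return f + x


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))  # 4 samples, 8 features
w1 = np.zeros((8, 8))        # all-zero weights make F(x) = 0
w2 = np.zeros((8, 8))

print(np.allclose(residual_block(x, w1, w2), x))  # True: F(x) = 0 gives H(x) = x
print(np.allclose(plain_block(x, w1, w2), x))     # False: the plain block outputs all zeros
```

With zero weights the residual block already is the identity, while the plain block collapses to zero and would need specific non-zero weights to reproduce its input.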

Now the code:

```python
import os

# Must be set before TensorFlow is imported, otherwise it has no effect
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import numpy as np
import tensorflow as tf
from tensorflow import keras

"""ResNet is a residual network built from residual blocks; each block is a small sub-network,
and stacking the blocks yields a very deep network.
H(x) = F(x) + x. x: block input. H(x): desired output. F(x) = H(x) - x: the residual."""

# In[1]:

tf.random.set_seed(22)
np.random.seed(22)

assert tf.__version__.startswith('2.')

(x_train, y_train), (x_test, y_test) = keras.datasets.fashion_mnist.load_data()
# Scale pixel values from [0, 255] to [0, 1]
x_train, x_test = x_train.astype(np.float32) / 255., x_test.astype(np.float32) / 255.
# print(x_train.shape, y_train.shape)  # (60000, 28, 28) (60000,)

"""
np.expand_dims inserts a new axis of length 1; axis is the position of the new axis. For example:
axis=0: [60000, 28, 28] => [1, 60000, 28, 28]
axis=1: [60000, 28, 28] => [60000, 1, 28, 28], and so on.
"""
x_train, x_test = np.expand_dims(x_train, axis=3), np.expand_dims(x_test, axis=3)  # [60000, 28, 28] => [60000, 28, 28, 1]

"""One-hot encode the labels: (60000,) --> (60000, 10)"""
y_train_ohe = tf.one_hot(y_train, depth=10).numpy()
y_test_ohe = tf.one_hot(y_test, depth=10).numpy()
```
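If you want to check the two preprocessing steps (adding the channel axis and one-hot encoding) without downloading the dataset, here is a NumPy-only sketch on a few dummy images and labels, which are made up. `np.eye(10)[labels]` is a common NumPy idiom that gives the same one-hot rows as `tf.one_hot(labels, depth=10)` for valid class indices.

```python
import numpy as np

# Stand-in for the Fashion-MNIST arrays: 5 grayscale 28x28 images and their labels (dummy data)
images = np.zeros((5, 28, 28), dtype=np.float32)
labels = np.array([0, 3, 9, 3, 1])

# Add a trailing channel axis: (5, 28, 28) -> (5, 28, 28, 1)
images = np.expand_dims(images, axis=3)

# One-hot encoding: row i of the 10x10 identity matrix is the one-hot vector for class i
one_hot = np.eye(10, dtype=np.float32)[labels]  # (5,) -> (5, 10)

print(images.shape)   # (5, 28, 28, 1)
print(one_hot.shape)  # (5, 10)
print(one_hot[1])     # [0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]
```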

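```python
# In[2]:

print(x_train.shape, y_train.shape)
print(x_test.shape, y_test.shape)

"""
Input layout: [batch, height, width, channels]

The first dimension, batch, is the number of samples in one batch.
The second and third dimensions are the number of rows and columns of a single sample.
The fourth dimension is the number of channels of the input image, e.g. 1 for grayscale images such as Fashion-MNIST.
Note: for an input of shape [1, 5, 5, 3], the 3 means the image is a three-channel (color) image.

Conv2D(): 2-D convolution layer. For an input [batch, height, width, channels] with kernel size K, stride S and padding P:
Without dilation_rate: height_out = floor((height - K + 2P) / S) + 1, width_out = floor((width - K + 2P) / S) + 1
    padding='valid' means P = 0.
    padding='same' pads so that height_out = ceil(height / S); with K = 3 and S = 1 this is P = 1.
With dilation_rate D: first compute the effective kernel size K = K + (K - 1) * (D - 1), then plug it into the formula above.

So the output tensor is [batch, height_out, width_out, filters], where filters is the filters argument of Conv2D.
"""


def conv3x3(channels, stride=1, kernel=(3, 3)):
    """Conv2D with 'same' padding and no bias (the convolutions are used together with BatchNormalization)."""
    return keras.layers.Conv2D(channels, kernel, strides=stride, padding='same',
                               use_bias=False,
                               kernel_initializer=tf.random_normal_initializer())
```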
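The Conv2D size rules described above can be checked with a small helper. This is a sketch of TensorFlow's `'same'`/`'valid'` padding conventions written for this post; `conv_output_size` is a hypothetical name, not a Keras API.

```python
import math


def conv_output_size(size, kernel, stride=1, padding='same', dilation=1):
    """Spatial output size of a Conv2D layer along one dimension (height or width)."""
    if padding == 'same':
        return math.ceil(size / stride)  # kernel size and dilation do not affect the size here
    if padding == 'valid':
        k_eff = kernel + (kernel - 1) * (dilation - 1)  # effective kernel size with dilation
        return (size - k_eff) // stride + 1
    raise ValueError("padding must be 'same' or 'valid'")


print(conv_output_size(28, 3))                               # 28: 3x3, stride 1, 'same'
print(conv_output_size(28, 3, stride=2))                     # 14: first conv of a downsampling block
print(conv_output_size(28, 3, padding='valid'))              # 26
print(conv_output_size(28, 3, padding='valid', dilation=2))  # 24: effective kernel size 5
```

The stride-2 case is what the downsampling ResnetBlocks rely on: with `padding='same'`, both the 3x3 conv and the 1x1 shortcut conv halve height and width (rounding up).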

```python
class ResnetBlock(keras.Model):

    def __init__(self, channels, strides=1, residual_path=False):
        super(ResnetBlock, self).__init__()
        self.channels = channels            # number of output channels
        self.strides = strides              # stride of the first conv (2 halves height and width)
        self.residual_path = residual_path  # True: project the shortcut with a 1x1 conv

        self.conv1 = conv3x3(channels, strides)       # 3x3 convolution
        self.bn1 = keras.layers.BatchNormalization()  # batch normalization
        self.conv2 = conv3x3(channels)
        self.bn2 = keras.layers.BatchNormalization()

        if residual_path:
            # 1x1 conv so that the shortcut matches the new shape when strides or channels change
            self.down_conv = conv3x3(channels, strides, kernel=(1, 1))
            self.down_bn = keras.layers.BatchNormalization()

    def call(self, inputs, training=None):
        residual = inputs  # shortcut branch, (batch, height, width, in_channels)

        # Residual function F(x): BN -> ReLU -> conv -> BN -> ReLU -> conv
        x = self.bn1(inputs, training=training)  # (batch, height, width, in_channels)
        x = tf.nn.relu(x)                        # set negative values to 0, shape unchanged
        x = self.conv1(x)                        # (batch, height_out, width_out, channels), see the Conv2D notes above
        x = self.bn2(x, training=training)       # (batch, height_out, width_out, channels)
        x = tf.nn.relu(x)                        # shape unchanged
        x = self.conv2(x)                        # (batch, height_out, width_out, channels)

        # Shortcut: identity by default, or a projection when the shape changes
        if self.residual_path:
            residual = self.down_bn(inputs, training=training)
            residual = tf.nn.relu(residual)      # (batch, height, width, in_channels)
            residual = self.down_conv(residual)  # (batch, height_out, width_out, channels)

        x = x + residual  # H(x) = F(x) + shortcut(x), shape (batch, height_out, width_out, channels)
        print("x:", x.shape, 'residual_path:', self.residual_path)
        return x
```

```python
class ResNet(keras.Model):

    def __init__(self, block_list, num_classes, initial_filters=16, **kwargs):
        super(ResNet, self).__init__(**kwargs)
        self.num_blocks = len(block_list)
        self.block_list = block_list
        self.in_channels = initial_filters
        self.out_channels = initial_filters
        self.conv_initial = conv3x3(self.out_channels)

        self.blocks = keras.models.Sequential(name='dynamic_blocks')  # stacks the residual blocks in order
        print(block_list)

        # build all the blocks
        for block_id in range(len(block_list)):  # e.g. block_list = [2, 2, 2]
            for layer_id in range(block_list[block_id]):
                print('block_id:', block_id, 'layer_id:', layer_id)
                if block_id != 0 and layer_id == 0:
                    # first block of every later stage: halve height/width and project the shortcut
                    block = ResnetBlock(self.out_channels, strides=2, residual_path=True)
                else:
                    residual_path = self.in_channels != self.out_channels
                    block = ResnetBlock(self.out_channels, residual_path=residual_path)
                self.in_channels = self.out_channels
                self.blocks.add(block)
            self.out_channels *= 2  # double the channels for the next stage

        self.final_bn = keras.layers.BatchNormalization()      # batch normalization
        self.avg_pool = keras.layers.GlobalAveragePooling2D()  # global average pooling
        self.fc = keras.layers.Dense(num_classes)              # fully connected layer, outputs logits

    def call(self, inputs, training=None):
        out = self.conv_initial(inputs)               # (batch_size=32, 28, 28, 1) -> (32, 28, 28, channels=16)
        out = self.blocks(out, training=training)     # (32, 28, 28, 16) -> (32, 7, 7, channels=64)
        out = self.final_bn(out, training=training)   # (32, 7, 7, 64)
        out = tf.nn.relu(out)                         # set negative values to 0, (32, 7, 7, 64)
        out = self.avg_pool(out)                      # (32, 7, 7, 64) -> (32, channels=64)
        out = self.fc(out)                            # (32, num_classes=10), raw logits
        return out
```
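To double-check the shape comments in `call` (28x28x16 going into `self.blocks`, 7x7x64 coming out), the sketch below replays the block-building loop of `ResNet.__init__` with plain integers. It assumes a square input and `padding='same'`; `trace_resnet_shapes` is a hypothetical helper, not part of the model.

```python
import math


def trace_resnet_shapes(block_list, size=28, initial_filters=16):
    """Return (height, width, channels) after each ResnetBlock, mirroring ResNet.__init__."""
    shapes = []
    channels = initial_filters
    for block_id, num_layers in enumerate(block_list):
        for layer_id in range(num_layers):
            if block_id != 0 and layer_id == 0:
                size = math.ceil(size / 2)  # strides=2 with padding='same'
            shapes.append((size, size, channels))
        channels *= 2  # the next stage uses twice as many channels
    return shapes


for shape in trace_resnet_shapes([2, 2, 2]):
    print(shape)
# (28, 28, 16)
# (28, 28, 16)
# (14, 14, 32)
# (14, 14, 32)
# (7, 7, 64)
# (7, 7, 64)
```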

```python
# In[3]:

def main():
    num_classes = 10
    batch_size = 32
    epochs = 1

    # build model and optimizer
    model = ResNet([2, 2, 2], num_classes)  # output: (batch_size, num_classes)
    model.compile(optimizer=keras.optimizers.Adam(0.001),
                  loss=keras.losses.CategoricalCrossentropy(from_logits=True),  # the fc layer outputs logits
                  metrics=['accuracy'])
    model.build(input_shape=(None, 28, 28, 1))
    print("Number of variables in the model :", len(model.variables))
    model.summary()

    # train
    model.fit(x_train, y_train_ohe, batch_size=batch_size, epochs=epochs,
              validation_data=(x_test, y_test_ohe), verbose=1)

    # evaluate on test set
    scores = model.evaluate(x_test, y_test_ohe, batch_size=batch_size, verbose=1)
    print("Final test loss and accuracy :", scores)


if __name__ == '__main__':
    main()
```
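The model's last layer is `Dense(num_classes)` without an activation, so it outputs raw scores (logits). That is why the loss is built with `CategoricalCrossentropy(from_logits=True)`, which makes Keras apply softmax inside the loss. The NumPy sketch below reproduces that computation by hand on made-up numbers; it illustrates the math and is not Keras's actual implementation.

```python
import numpy as np


def softmax(logits):
    z = logits - logits.max(axis=-1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def categorical_crossentropy(y_true, probs):
    """Batch mean of -sum(y_true * log(probs))."""
    return float(np.mean(-np.sum(y_true * np.log(probs), axis=-1)))


logits = np.array([[2.0, 1.0, 0.1],
                   [0.5, 2.5, 0.3]])  # raw Dense outputs for 2 samples, 3 classes (made up)
y_true = np.array([[1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0]])  # one-hot labels

# from_logits=True: softmax is applied to the logits inside the loss
loss = categorical_crossentropy(y_true, softmax(logits))
print(loss)
```

Putting the softmax inside the loss instead of in the last layer lets the framework use a numerically stable combined formula.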
