import os
import time

import numpy as np
import pandas as pd

import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import Dataset
from torch.utils.data import DataLoader

from torchvision import datasets
from torchvision import transforms

import matplotlib.pyplot as plt
from PIL import Image

DEVICE = torch.device("cuda" if torch.cuda.is_available() else "cpu")

Dataset: CelebA, http://mmlab.ie.cuhk.edu.hk/projects/CelebA.html

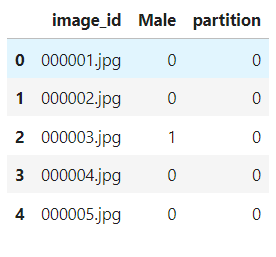

df1 = pd.read_csv('../input/celeba-dataset/list_attr_celeba.csv', usecols=['Male', 'image_id'])
# CelebA encodes attributes as 1 / -1; map them to class labels 1 / 0
df1.loc[df1['Male'] == -1, 'Male'] = 0
df1.head()

t = df1['Male'].values
print(t)

[0 0 1 ... 1 0 0]
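CelebA's attribute file stores each binary attribute as 1 or -1. That is why the `Male` column is remapped to 0/1 above, so it can serve as a class index for `F.cross_entropy`. The same mapping can be written arithmetically as (v + 1) // 2.

The pure-Python sketch below uses a made-up toy list, not real CelebA data. It also counts the class balance, which is worth checking before reading too much into an accuracy figure.

```python
from collections import Counter

def remap_attribute(values):
    """Map CelebA-style {-1, 1} attribute values to {0, 1} class indices."""
    # (-1 + 1) // 2 == 0 and (1 + 1) // 2 == 1
    return [(v + 1) // 2 for v in values]

# Toy values for illustration only (not read from the real CSV).
raw = [-1, -1, 1, 1, -1, 1, -1]
labels = remap_attribute(raw)
print(labels)           # [0, 0, 1, 1, 0, 1, 0]
print(Counter(labels))  # Counter({0: 4, 1: 3})
```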

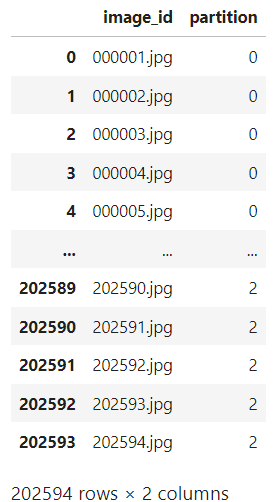

df2 = pd.read_csv('../input/celeba-dataset/list_eval_partition.csv')
df2.head()

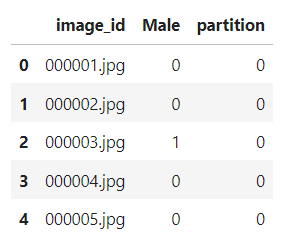

df = pd.merge(df1, df2, on='image_id')
df.head()

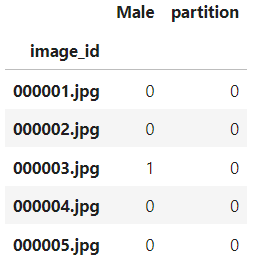

df = df.set_index('image_id')
df.head()
df.to_csv('celeba-gender-partitions.csv')

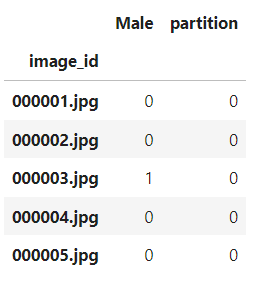

tmp = pd.read_csv('./celeba-gender-partitions.csv', index_col=0)
tmp.head()

# Partition codes: 0 = train, 1 = validation, 2 = test
df.loc[df['partition'] == 0].to_csv('celeba-gender-train.csv')
df.loc[df['partition'] == 1].to_csv('celeba-gender-valid.csv')
df.loc[df['partition'] == 2].to_csv('celeba-gender-test.csv')

t1 = pd.read_csv('celeba-gender-train.csv')
t1.head()
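`list_eval_partition.csv` assigns each image to exactly one split: 0 for training, 1 for validation, 2 for testing. The three `df.loc[...]` filters above rely on this.

The same bookkeeping in plain Python makes it easy to confirm that no image ends up in two splits. The rows and the helper name `split_by_partition` are hypothetical.

```python
SPLIT_NAMES = {0: "train", 1: "valid", 2: "test"}  # CelebA partition codes

def split_by_partition(rows):
    """Group (image_id, label, partition) tuples into train/valid/test lists."""
    splits = {name: [] for name in SPLIT_NAMES.values()}
    for image_id, label, partition in rows:
        splits[SPLIT_NAMES[partition]].append((image_id, label))
    return splits

# Hypothetical rows shaped like the merged DataFrame: (image_id, Male, partition).
rows = [("img_a.jpg", 0, 0), ("img_b.jpg", 1, 0), ("img_c.jpg", 1, 1), ("img_d.jpg", 0, 2)]
splits = split_by_partition(rows)
print({name: len(items) for name, items in splits.items()})  # {'train': 2, 'valid': 1, 'test': 1}

# Every image lands in exactly one split
all_ids = [image_id for items in splits.values() for image_id, _ in items]
assert sorted(all_ids) == sorted(r[0] for r in rows)
```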

class CelebaDataset(Dataset):
    """Custom Dataset for loading CelebA face images"""

    def __init__(self, csv_path, img_dir, transform=None):
        df = pd.read_csv(csv_path, index_col=0)
        self.img_dir = img_dir
        self.csv_path = csv_path
        self.img_names = df.index.values  # image file names (CSV index)
        self.y = df['Male'].values        # 0 = not male, 1 = male
        self.transform = transform

    def __getitem__(self, index):
        img = Image.open(os.path.join(self.img_dir, self.img_names[index]))
        if self.transform is not None:
            img = self.transform(img)
        label = self.y[index]
        return img, label

    def __len__(self):
        return self.y.shape[0]

tmp = CelebaDataset(csv_path='./celeba-gender-train.csv',
                    img_dir='../input/celeba-dataset/img_align_celeba/img_align_celeba')
x, y = tmp[0]
print(x.size)  # PIL reports (width, height)
print(y)

(178, 218)
0

BATCH_SIZE = 64

custom_transform = transforms.Compose([transforms.CenterCrop((178, 178)),
                                       transforms.Resize((128, 128)),
                                       transforms.ToTensor()])
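PIL reports the aligned CelebA images as 178 × 218 (width × height). So `CenterCrop((178, 178))` keeps the full width and trims 20 pixels from the top and bottom. `Resize((128, 128))` then scales the square down.

The arithmetic is shown below as a hypothetical helper. It assumes the offsets are computed as round((size - crop) / 2), which is exact here because both differences are even.

```python
def center_crop_box(width, height, crop_w, crop_h):
    """Return (left, top, right, bottom) of a centred crop window.

    Assumes offsets are rounded from (size - crop) / 2; for the even
    differences used here the rounding does not matter.
    """
    left = int(round((width - crop_w) / 2.0))
    top = int(round((height - crop_h) / 2.0))
    return left, top, left + crop_w, top + crop_h

box = center_crop_box(178, 218, 178, 178)
print(box)  # (0, 20, 178, 198)

# Resize((128, 128)) then scales each side of the 178 x 178 square by 128 / 178.
print(round(128 / 178, 4))  # 0.7191
```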

train_dataset = CelebaDataset(csv_path='./celeba-gender-train.csv',
                              img_dir='../input/celeba-dataset/img_align_celeba/img_align_celeba',
                              transform=custom_transform)

valid_dataset = CelebaDataset(csv_path='./celeba-gender-valid.csv',
                              img_dir='../input/celeba-dataset/img_align_celeba/img_align_celeba',
                              transform=custom_transform)

test_dataset = CelebaDataset(csv_path='./celeba-gender-test.csv',
                             img_dir='../input/celeba-dataset/img_align_celeba/img_align_celeba',
                             transform=custom_transform)

train_loader = DataLoader(dataset=train_dataset,
                          batch_size=BATCH_SIZE,
                          shuffle=True,
                          num_workers=4)

valid_loader = DataLoader(dataset=valid_dataset,
                          batch_size=BATCH_SIZE,
                          shuffle=False,
                          num_workers=4)

test_loader = DataLoader(dataset=test_dataset,
                         batch_size=BATCH_SIZE,
                         shuffle=False,
                         num_workers=4)
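The training log reports 2544 batches per epoch. With `DataLoader`'s default `drop_last=False`, the number of batches is ceil(N / batch_size), and the final batch holds the remainder.

A small sketch follows. The helper `num_batches` is hypothetical, and the sketch assumes the standard CelebA training partition of 162,770 images.

```python
import math

def num_batches(num_examples, batch_size, drop_last=False):
    """Batches per epoch for a map-style dataset and a fixed batch size."""
    if drop_last:
        return num_examples // batch_size  # incomplete last batch is discarded
    return math.ceil(num_examples / batch_size)

n_train = 162_770  # size of the standard CelebA training partition
print(num_batches(n_train, 64))                  # 2544
print(num_batches(n_train, 64, drop_last=True))  # 2543
print(n_train - 2543 * 64)                       # 18 images in the last batch
```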

def conv3x3(in_planes, out_planes, stride=1):
    """3x3 convolution with padding"""
    return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,
                     padding=1, bias=False)

class BasicBlock(nn.Module):
    expansion = 1

    def __init__(self, inplanes, planes, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = conv3x3(inplanes, planes, stride)
        self.bn1 = nn.BatchNorm2d(planes)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(planes, planes)
        self.bn2 = nn.BatchNorm2d(planes)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        residual = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        # Project the shortcut when stride or channel count changes
        if self.downsample is not None:
            residual = self.downsample(x)

        out += residual
        out = self.relu(out)

        return out

class ResNet(nn.Module):

    def __init__(self, block, layers, num_classes, grayscale):
        super(ResNet, self).__init__()
        self.inplanes = 64
        if grayscale:
            in_dim = 1
        else:
            in_dim = 3
        self.conv1 = nn.Conv2d(in_dim, 64, kernel_size=7, stride=2, padding=3,
                               bias=False)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, layers[0])
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2)
        # Pool the last feature maps to a fixed 7x7 grid regardless of input size
        self.avgpool = nn.AdaptiveAvgPool2d(7)
        # 512 channels * 7 * 7 = 25088 features after flattening
        self.fc = nn.Linear(512 * block.expansion * 7 * 7, num_classes)

    def _make_layer(self, block, planes, blocks, stride=1):
        downsample = None
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.inplanes, planes * block.expansion,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(planes * block.expansion),
            )

        layers = []
        layers.append(block(self.inplanes, planes, stride, downsample))
        self.inplanes = planes * block.expansion
        for i in range(1, blocks):
            layers.append(block(self.inplanes, planes))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        x = self.avgpool(x)
        x = x.view(x.size(0), -1)
        logits = self.fc(x)
        probas = F.softmax(logits, dim=1)
        return logits, probas
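To see where the 25,088 input features of `fc` come from, follow one spatial side through the network. Each layer uses the output-size formula floor((size + 2·padding - kernel) / stride) + 1.

The plain-Python sketch below uses helper names made up for illustration. It assumes the 128×128 inputs produced by the transform pipeline. Layer4 ends up with 4×4 maps, so `AdaptiveAvgPool2d(7)` here enlarges them to 7×7 rather than shrinking them, and 512 × 7 × 7 = 25,088.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of a conv/pool layer along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1

def trace_resnet18(size):
    """Follow one spatial side through the ResNet defined above."""
    sizes = {"input": size}
    size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1
    sizes["conv1"] = size
    size = conv_out(size, kernel=3, stride=2, padding=1)  # maxpool
    sizes["maxpool"] = size
    sizes["layer1"] = size  # stride 1, padded 3x3 convs keep the size
    for name in ("layer2", "layer3", "layer4"):
        size = conv_out(size, kernel=3, stride=2, padding=1)  # first conv of stage
        sizes[name] = size
    return sizes

print(trace_resnet18(128))
# {'input': 128, 'conv1': 64, 'maxpool': 32, 'layer1': 32, 'layer2': 16, 'layer3': 8, 'layer4': 4}
print(512 * 7 * 7)  # 25088
```

A common alternative is `AdaptiveAvgPool2d(1)` followed by `nn.Linear(512 * block.expansion, num_classes)`. For two classes that shrinks the classifier from 50,178 to 1,026 parameters.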

def resnet18(num_classes):
    """Constructs a ResNet-18 model."""
    model = ResNet(block=BasicBlock,
                   layers=[2, 2, 2, 2],
                   num_classes=num_classes,
                   grayscale=False)
    return model
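In `BasicBlock.forward`, `out += residual` only works when both tensors have the same shape. `_make_layer` therefore attaches a 1×1 convolution plus batch norm (`downsample`) to a stage's first block whenever its stride is not 1 or its channel count changes.

As a sanity check, the pure-Python sketch below replays that rule for `layers=[2, 2, 2, 2]`. The helper `downsample_plan` is hypothetical and not part of the model code. It should report a downsample branch only for layer2.0, layer3.0 and layer4.0, matching the structure printed for `net`.

```python
def downsample_plan(layers, widths=(64, 128, 256, 512), expansion=1):
    """Replay ResNet._make_layer's rule for attaching a 1x1 downsample branch.

    Returns a list of (block_name, has_downsample) pairs.
    """
    inplanes = 64  # channels coming out of conv1/maxpool
    plan = []
    for stage, (planes, blocks) in enumerate(zip(widths, layers)):
        stride = 1 if stage == 0 else 2  # layer1 keeps resolution, layer2-4 halve it
        for i in range(blocks):
            # Only the first block of a stage can change stride or channel count
            needs = i == 0 and (stride != 1 or inplanes != planes * expansion)
            plan.append((f"layer{stage + 1}.{i}", needs))
            inplanes = planes * expansion
    return plan

for name, needs in downsample_plan([2, 2, 2, 2]):
    print(name, needs)
```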

net = resnet18(num_classes=2)
print(net)
print(net(torch.randn([1, 3, 256, 256])))

ResNet(
  (conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)
  (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (relu): ReLU(inplace=True)
  (maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
  (layer1): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (1): BasicBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer2): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer3): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer4): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (avgpool): AdaptiveAvgPool2d(output_size=7)
  (fc): Linear(in_features=25088, out_features=2, bias=True)
)

(tensor([[0.5787, -0.0013]], grad_fn=<AddmmBackward>), tensor([[0.6411, 0.3589]], grad_fn=<SoftmaxBackward>))
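`forward` returns both the raw logits and `F.softmax(logits, dim=1)`. The training loss uses the logits, since `F.cross_entropy` applies log-softmax internally. `probas` is only used for predictions. Because softmax preserves the ordering of the logits, taking the max over `probas` or over `logits` picks the same class.

A pure-Python, numerically stable softmax reproduces the probabilities printed above for the random 256×256 input.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)  # subtract the max so exp() cannot overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

probas = softmax([0.5787, -0.0013])
print([round(p, 4) for p in probas])  # [0.6411, 0.3589]
```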

NUM_EPOCHS = 3

# Binary task (male / not male), so the output layer needs two units
model = resnet18(num_classes=2)
model = model.to(DEVICE)

optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

def compute_accuracy_and_loss(model, data_loader, device):
    correct_pred, num_examples = 0, 0
    cross_entropy = 0.
    for i, (features, targets) in enumerate(data_loader):
        features = features.to(device)
        targets = targets.to(device)
        logits, probas = model(features)
        # Sum (not average) per-example losses so dividing by num_examples gives the mean
        cross_entropy += F.cross_entropy(logits, targets, reduction='sum').item()
        _, predicted_labels = torch.max(probas, 1)
        num_examples += targets.size(0)
        correct_pred += (predicted_labels == targets).sum()
    return correct_pred.float() / num_examples * 100, cross_entropy / num_examples
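Adding up per-batch mean losses and then dividing by the number of examples underestimates the average loss by roughly a factor of the batch size. Summing per-example losses (`reduction='sum'`) and dividing once gives the true per-example mean.

The toy numbers below are made up. Both helper names are hypothetical.

```python
def dataset_mean_loss(batches):
    """Per-example mean: sum every loss, divide by the total number of examples."""
    total, count = 0.0, 0
    for losses in batches:
        total += sum(losses)  # like F.cross_entropy(..., reduction='sum')
        count += len(losses)
    return total / count

def batch_means_over_n(batches):
    """Add each batch's mean loss, then divide by the number of examples."""
    n = sum(len(b) for b in batches)
    return sum(sum(b) / len(b) for b in batches) / n

# Two full batches of 4 and a final partial batch of 2 (toy numbers).
batches = [[0.2, 0.4, 0.6, 0.8], [0.1, 0.1, 0.1, 0.1], [1.0, 1.0]]
print(round(dataset_mean_loss(batches), 4))   # 0.44
print(round(batch_means_over_n(batches), 4))  # 0.16
```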

start_time = time.time()
train_acc_lst, valid_acc_lst = [], []
train_loss_lst, valid_loss_lst = [], []

for epoch in range(NUM_EPOCHS):

    model.train()
    for batch_idx, (features, targets) in enumerate(train_loader):

        features = features.to(DEVICE)
        targets = targets.to(DEVICE)

        # Forward and backward pass
        logits, probas = model(features)
        cost = F.cross_entropy(logits, targets)
        optimizer.zero_grad()
        cost.backward()

        # Update model parameters
        optimizer.step()

        # Log every 500 batches
        if not batch_idx % 500:
            print(f'Epoch: {epoch+1:03d}/{NUM_EPOCHS:03d} | '
                  f'Batch {batch_idx:04d}/{len(train_loader):04d} |'
                  f' Cost: {cost:.4f}')

    model.eval()
    with torch.set_grad_enabled(False):  # no gradients needed for evaluation
        train_acc, train_loss = compute_accuracy_and_loss(model, train_loader, device=DEVICE)
        valid_acc, valid_loss = compute_accuracy_and_loss(model, valid_loader, device=DEVICE)
        train_acc_lst.append(train_acc)
        valid_acc_lst.append(valid_acc)
        train_loss_lst.append(train_loss)
        valid_loss_lst.append(valid_loss)
        print(f'Epoch: {epoch+1:03d}/{NUM_EPOCHS:03d} Train Acc.: {train_acc:.2f}%'
              f' | Validation Acc.: {valid_acc:.2f}%')

    elapsed = (time.time() - start_time) / 60
    print(f'Time elapsed: {elapsed:.2f} min')

elapsed = (time.time() - start_time) / 60
print(f'Total Training Time: {elapsed:.2f} min')

Epoch: 001/003 | Batch 0000/2544 | Cost: 0.8401
Epoch: 001/003 | Batch 0500/2544 | Cost: 0.1133
Epoch: 001/003 | Batch 1000/2544 | Cost: 0.1819
Epoch: 001/003 | Batch 1500/2544 | Cost: 0.1938
Epoch: 001/003 | Batch 2000/2544 | Cost: 0.0334
Epoch: 001/003 | Batch 2500/2544 | Cost: 0.0738
Epoch: 001/003 Train Acc.: 96.16% | Validation Acc.: 95.70%
Time elapsed: 11.19 min
Epoch: 002/003 | Batch 0000/2544 | Cost: 0.0383
Epoch: 002/003 | Batch 0500/2544 | Cost: 0.0661
Epoch: 002/003 | Batch 1000/2544 | Cost: 0.1381
Epoch: 002/003 | Batch 1500/2544 | Cost: 0.1923
Epoch: 002/003 | Batch 2000/2544 | Cost: 0.0851
Epoch: 002/003 | Batch 2500/2544 | Cost: 0.1290
Epoch: 002/003 Train Acc.: 97.19% | Validation Acc.: 96.65%
Time elapsed: 19.65 min
Epoch: 003/003 | Batch 0000/2544 | Cost: 0.0455
Epoch: 003/003 | Batch 0500/2544 | Cost: 0.0671
Epoch: 003/003 | Batch 1000/2544 | Cost: 0.0431
Epoch: 003/003 | Batch 1500/2544 | Cost: 0.0403
Epoch: 003/003 | Batch 2000/2544 | Cost: 0.1455
Epoch: 003/003 | Batch 2500/2544 | Cost: 0.1445
Epoch: 003/003 Train Acc.: 97.77% | Validation Acc.: 97.24%
Time elapsed: 28.05 min
Total Training Time: 28.05 min

model.eval()
with torch.set_grad_enabled(False):
    test_acc, test_loss = compute_accuracy_and_loss(model, test_loader, DEVICE)
    print(f'Test accuracy: {test_acc:.2f}%')

Test accuracy: 97.24%

# Take one batch from the training loader
for features, targets in train_loader:
    break

with torch.no_grad():
    _, probas = model(features[:8].to(DEVICE))
predictions = torch.argmax(probas, dim=1)
print(predictions)

features = features[:7]
fig = plt.figure()
for i in range(6):
    plt.subplot(2, 3, i + 1)
    # ToTensor() yields (C, H, W); imshow expects (H, W, C)
    tmp = np.transpose(features[i].numpy(), (1, 2, 0))
    plt.imshow(tmp)
    plt.title("Actual value: {}".format(targets[i].item()) + '\n' +
              "Prediction value: {}".format(predictions[i].item()), size=10)
plt.tight_layout()
plt.show()
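`transforms.ToTensor()` produces images in channels-first (C, H, W) order with values in [0, 1]. `plt.imshow` expects channels-last (H, W, C), hence the axis reordering before plotting.

The minimal numpy sketch below uses a synthetic array rather than CelebA data.

```python
import numpy as np

# A fake batch in PyTorch's (N, C, H, W) layout: 8 RGB images of 128 x 128.
batch = np.zeros((8, 3, 128, 128), dtype=np.float32)
batch[0, 0] = 1.0  # make the first image pure red (channel 0 = R)

img = np.transpose(batch[0], (1, 2, 0))  # (C, H, W) -> (H, W, C)
print(img.shape)  # (128, 128, 3)
print(img[0, 0])  # [1. 0. 0.]
```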
