1.Fine-tuning global model via data-free knowledge distillation for non iid federated learning

challenge: simple model aggregation ignores local knowledge incompatibility and induces knowledge forgetting in the global model.

Aggregation without finetuning will degrade the performance.

2.Federated evaluation and tuning for on-device personlization :system design and application

From system perspective, the system can be used in commercial applications.

Ground truth generation is hard in specific scenarios, like ASR.

3.Semi-supervised learning based on generative adversarial network: a

comparison between good GAN and bad GAN approach

GAN can be applied to Semi-supervised learning.

However, many works have not adopted GAN in SSL recently.

There may be some issues in GAN.

self-supervised is a good direction to solve the FSSL problem.

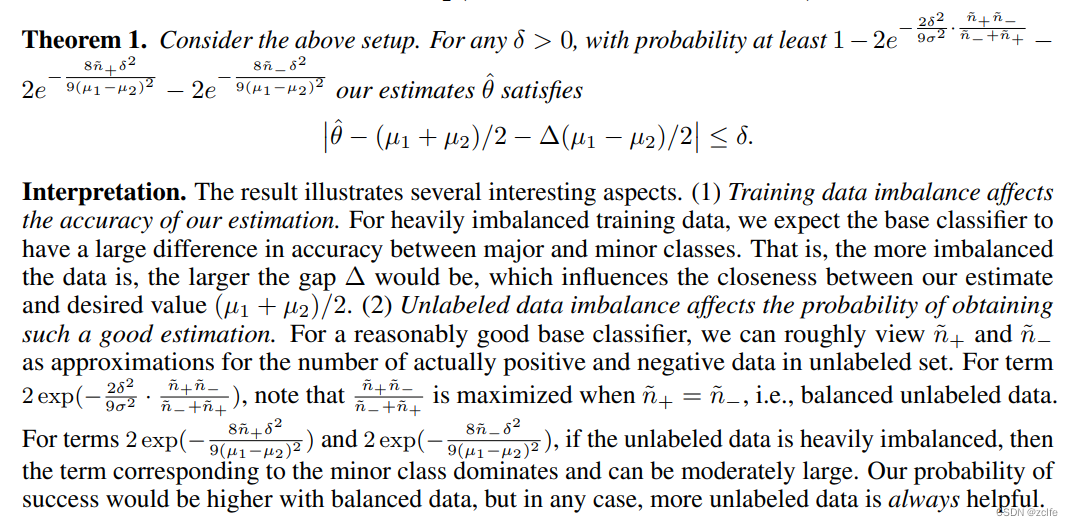

4.Rethinking the Value of Labels for Improving Class-Imbalanced Learning

We identify a persisting dilemma on the value of labels in the context of imbalanced learning: on the one hand, supervision from labels typically leads to better results than its unsupervised counterparts; on the other hand, heavily imbalanced data naturally incurs “label bias” in the classifier, where the decision boundary can be drastically altered by the majority classes.

Imbalanced Learning with Unlabeled Data

With the help of unlabeled data, we show that while all

classes can obtain certain improvements, the minority classes tend to exhibit larger gains.

5.Virtual Homogeneity Learning: Defending against Data Heterogeneity in Federated Learning

Is it possible to defend against data heterogeneity in FL

systems by sharing data containing no private information?

The key challenge of VHL is how to generate the virtual dataset to benefit model performance. (DA) utilizes domain adaption to mitigate the distribution shift.

6.Spherical Space Domain Adaptation with Robust Pseudo-label Loss

7.Rethinking Pseudo Labels for Semi-supervised Object Detection

8.RETHINKING DATA AUGMENTATION:

SELF-SUPERVISION AND SELF-DISTILLATION

9.Rethinking Re-Sampling in Imbalanced Semi-Supervised Learning

10.ABC: Auxiliary Balanced Classifier for

Class-Imbalanced Semi-Supervised Learning

11.Disentangling Label Distribution for Long-tailed Visual Recognition

12.CReST: A Class-Rebalancing Self-Training Framework

for Imbalanced Semi-Supervised Learning

13.Rethinking Pre-training and Self-training

three similar paper for generate pseudo feature to train federated models.

(1) No Fear of Heterogeneity: Classifier Calibration for Federated Learning with Non-IID Data

(2) Fine-tuning global model via data-free knowledge distillation for non-iid federated learning

(3) Data-Free Knowledge Distillation for Heterogeneous Federated Learning.

Similarity:

1.three methods use the virtual representation to

2. targets of three methods are to solve the non iid problem.

3. (1)(2) have the training process in server. (1) is to retrain classifiers. (2) is to aggregate the local model.

difference:

1 . (2)(3) utilize the GAN to generate pseudo samples and the generators are updated in servers. But, (2) adopts pseudo samples to aggregate the local models and use hard sampling, label sampling and class level ensemble to optimize the pseudo sampling steps. (3) uses pseudo samples to regularize the local training steps and still use fedavg to aggregate local models. (1) ues mixture guassian distribution to generate virtual samples to retrain the classifier to debias.

2 . (3) share the prediction layer, which is different with previous works. Many works share the feature extraction layer and localize the prediction layer. The purpose is to reduce communication workload.

2862

2862

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?