目录

前言

平台搭建可以参考以往的文章。

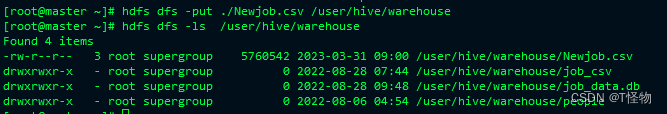

一、上传CSV文件

上传文件到HDFS中

[root@master ~]# hdfs dfs -put ./Newjob.csv /user/hive/warehouse

[root@master ~]# hdfs dfs -ls /user/hive/warehouse

登录网页查看

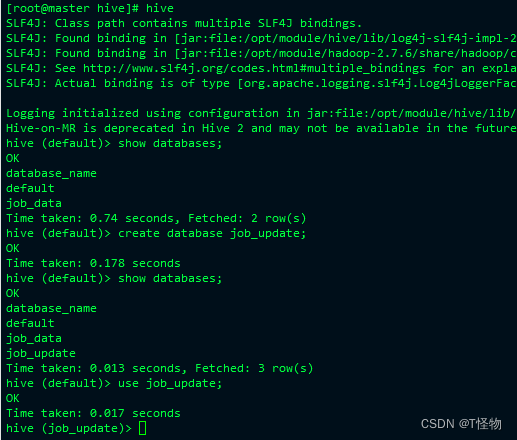

二、Hive部分

1.创建数据库,将文件Load到Hive中

代码如下:

[root@master hive]# hive

hive (default)> create database job_update;

hive (default)> use job_update;

2.创建Job总表

代码如下:

创建总表

hive >

create external table job(

post string,

wages string,

city string,

experience string,

education string,

company string,

skill string)

row format serde 'org.apache.hadoop.hive.serde2.OpenCSVSerde' with serdeproperties

('separatorChar' = ',','quoteChar'= '\"','escapeChar'= '\\') STORED AS TEXTFILE TBLPROPERTIES ('skip.header.line.count'='1');从hdfs导入csv数据到hive表:

hive (job_update)> load data inpath '上传的csv文件的路径' into table job;

3.创建分表

职位数量分布情况--

CREATE TABLE job_city as SELECT city ,count(city) AS bigdata FROM job group by city order by bigdata desc;

职位工作方向--

CREATE TABLE job_post AS SELECT post,count(post) AS bigdata FROM job group by post order by bigdata desc;

公司招聘数量--

CREATE TABLE job_company AS SELECT company ,count(company) AS bigdata FROM job group by company order by bigdata desc;

技能需求--

CREATE TABLE job_skill AS SELECT skill ,count(skill) AS bigdata FROM job group by skill order by bigdata desc;

学历要求--

CREATE TABLE job_education AS SELECT education ,count(education) AS bigdata FROM job GROUP BY education order by bigdata desc;

工作经验--

CREATE TABLE job_experience AS SELECT experience ,count(experience) AS bigdata FROM job GROUP BY experience order by bigdata desc;

不同工作经验对应薪资--

create TABLE job_experience_wages AS select experience,wages from job group by experience,wages order by experience desc;

不同学历对应薪资--

create table job_education_wages as select education,wages from job group by education,wages order by education desc;

职位薪资TOP10--

create TABLE job_top_wages AS select post,wages from job group by post,wages order by wages desc limit 10;三、Sqoop部分

将Hive中数据利用Sqoop导入MySQL

sqoop-export --connect "jdbc:mysql://192.168.61.130:3306/job_data?useUnicode=true&characterEncoding=utf-8" --username root --password 000000 --table job --export-dir /user/hive/warehouse/job_data.db/job

================================================================================

sqoop-export --connect "jdbc:mysql://192.168.61.130:3306/job_data?useUnicode=true&characterEncoding=utf-8" --username root --password 000000 --table job_city --export-dir /user/hive/warehouse/job_data.db/job_city --input-fields-terminated-by '\001' -m 1

================================================================================

sqoop-export --connect "jdbc:mysql://192.168.61.130:3306/job_data?useUnicode=true&characterEncoding=utf-8" --username root --password 000000 --table job_post --export-dir /user/hive/warehouse/job_data.db/job_post --input-fields-terminated-by '\001' -m 1

================================================================================

sqoop-export --connect "jdbc:mysql://192.168.61.130:3306/job_data?useUnicode=true&characterEncoding=utf-8" --username root --password 000000 --table job_experience --export-dir /user/hive/warehouse/job_data.db/job_experience --input-fields-terminated-by '\001' -m 1

================================================================================

sqoop-export --connect "jdbc:mysql://192.168.61.130:3306/job_data?useUnicode=true&characterEncoding=utf-8" --username root --password 000000 --table job_education --export-dir /user/hive/warehouse/job_data.db/job_education --input-fields-terminated-by '\001' -m 1

6697

6697

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?