(This week: 4 assignments in total, plus one extension assignment.)

- Using Lena as the source image, apply mean filtering, Gaussian filtering, and median filtering with OpenCV, and compare the filtering results.

import cv2 as cv

filename = r'D:\opencv\opencv\sources\samples\data\lena.jpg'
img = cv.imread(filename)

# Mean (box), Gaussian and median filters, all with a 5x5 window
Mean = cv.blur(img, (5, 5))
Gaussian = cv.GaussianBlur(img, (5, 5), 0)
Median = cv.medianBlur(img, 5)

# getStructuringElement returns a structuring element of the given shape and size
kernel = cv.getStructuringElement(cv.MORPH_ELLIPSE, (5, 5))

# morphologyEx applies a morphological transformation: opening, then closing
Morphology = cv.morphologyEx(img, cv.MORPH_OPEN, kernel)
Morphology = cv.morphologyEx(Morphology, cv.MORPH_CLOSE, kernel)

# Mean filtering handles noise poorly, while median and Gaussian filtering handle it well
cv.imshow('Blur', Mean)
cv.imshow('GaussianBlur', Gaussian)
cv.imshow('MedianBlur', Median)
cv.imshow('MorphologyEx', Morphology)
cv.waitKey()
cv.destroyAllWindows()
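The difference between mean and median filtering on impulse (salt-and-pepper) noise can be seen on a tiny patch. The following is a dependency-free sketch in plain Python, not OpenCV's implementation; the helper names `window3x3`, `mean_at`, and `median_at` and the 5x5 test patch are made up for illustration.

```python
from statistics import mean, median

def window3x3(image, row, col):
    """Return the nine values of the 3x3 neighbourhood centred on (row, col)."""
    return [image[r][c]
            for r in range(row - 1, row + 2)
            for c in range(col - 1, col + 2)]

def mean_at(image, row, col):
    """3x3 mean (box) filter response at one interior pixel."""
    return mean(window3x3(image, row, col))

def median_at(image, row, col):
    """3x3 median filter response at one interior pixel."""
    return median(window3x3(image, row, col))

# A flat 5x5 patch of gray level 100 with one "salt" pixel in the centre.
patch = [[100] * 5 for _ in range(5)]
patch[2][2] = 255

mean_value = mean_at(patch, 2, 2)      # the outlier is smeared into the average
median_value = median_at(patch, 2, 2)  # the outlier is discarded entirely

print(mean_value, median_value)
```

The median returns the clean background level, while the mean is pulled above it by the single outlier. This is one reason median filtering is usually preferred for impulse noise.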

- Using Lena as the source image, run Sobel and Canny edge detection with OpenCV and compare the edge detection results.

import cv2 as cv
import numpy as np

filename = r'D:\opencv\opencv\sources\samples\data\lena.jpg'
img = cv.imread(filename)
img = cv.cvtColor(img, cv.COLOR_BGR2GRAY)

# Sobel operator: (dx=1, dy=0) is the x-gradient, (dx=0, dy=1) is the y-gradient
x0 = cv.Sobel(img, cv.CV_16S, 1, 0)
y0 = cv.Sobel(img, cv.CV_16S, 0, 1)
absX = cv.convertScaleAbs(x0)
absY = cv.convertScaleAbs(y0)
Sobel = cv.addWeighted(absX, 0.5, absY, 0.5, 0)

# Roberts operator
def robert_suanzi(img):
    # Work on a signed copy so the input is not modified and uint8 does not overflow
    src = img.astype(np.int32)
    out = np.zeros_like(src)
    # Diagonal differences over each 2x2 neighbourhood (Roberts cross)
    gx = src[:-1, :-1] - src[1:, 1:]
    gy = src[:-1, 1:] - src[1:, :-1]
    out[:-1, :-1] = np.abs(gx) + np.abs(gy)  # sum of absolute values
    return np.clip(out, 0, 255).astype(np.uint8)

Robert = robert_suanzi(img)

# Laplacian filter, converted to 8-bit for display
Laplacian = cv.convertScaleAbs(cv.Laplacian(img, cv.CV_16S, ksize=3))

# Canny operator
Canny = cv.Canny(img, 10, 70)

# Canny gives clearly cleaner edges than Sobel and Roberts. The Laplacian result looked
# poor in the original run and parameter tuning did not help; a likely cause is that the
# CV_64F output was shown directly (imshow multiplies floating-point images by 255),
# so it is converted to 8-bit here.
cv.imshow('Robert', Robert)
# cv.imshow('absX', absX)
# cv.imshow('absY', absY)
cv.imshow('Sobel', Sobel)
cv.imshow('Laplacian', Laplacian)
cv.imshow('Canny', Canny)
cv.waitKey()
cv.destroyAllWindows()
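For reference, the Roberts cross operator takes two diagonal differences over each 2x2 neighbourhood and combines their absolute values. Below is a minimal plain-Python sketch under that definition; the function name `roberts_cross` and the 4x4 step-edge test image are illustrative assumptions, and the result is written to a separate output so the input is never modified.

```python
def roberts_cross(image):
    """Approximate gradient magnitude |gx| + |gy| from 2x2 diagonal differences.

    `image` is a list of rows of gray levels; the last row and column of the
    result stay 0 because they have no complete 2x2 neighbourhood.
    """
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows - 1):
        for c in range(cols - 1):
            gx = image[r][c] - image[r + 1][c + 1]   # kernel [[1, 0], [0, -1]]
            gy = image[r][c + 1] - image[r + 1][c]   # kernel [[0, 1], [-1, 0]]
            out[r][c] = min(255, abs(gx) + abs(gy))  # clamp to the 8-bit range
    return out

# Vertical step edge: columns 0-1 are dark (0), columns 2-3 are bright (200).
step = [[0, 0, 200, 200] for _ in range(4)]
edges = roberts_cross(step)
for row in edges:
    print(row)
```

Only the column where the 2x2 window straddles the step responds; flat regions stay at 0.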

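- Find the course demo images in the OpenCV installation directory (install directory\sources\samples\data). First compute the grayscale histogram, then segment the image with Otsu's method, and compare and analyze the segmentation results.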

import cv2 as cv
import matplotlib.pyplot as plt

img = cv.imread(r'D:\opencv\opencv\sources\samples\data\lena.jpg')

def threshold_binary(src):
    # Convert the BGR image to grayscale
    gray = cv.cvtColor(src, cv.COLOR_BGR2GRAY)

    # Plot the grayscale histogram to help choose a thresholding method
    plt.hist(gray.ravel(), 256, [0, 256])
    plt.show()

    # Several binarization methods
    ret, binary = cv.threshold(gray, 50, 255, cv.THRESH_BINARY)  # fixed threshold of 50
    print("binary threshold: %s" % ret)
    print(binary)
    # cv.imshow("threshold_binary", binary)

    ret_otsu, binary_otsu = cv.threshold(gray, 0, 255, cv.THRESH_BINARY | cv.THRESH_OTSU)
    print("binary threshold_otsu: %s" % ret_otsu)
    cv.imshow("threshold_binary_otsu", binary_otsu)
    cv.waitKey()
    cv.destroyAllWindows()

    ret_tri, binary_tri = cv.threshold(gray, 0, 255, cv.THRESH_BINARY | cv.THRESH_TRIANGLE)
    print("binary threshold_tri: %s" % ret_tri)

threshold_binary(img)

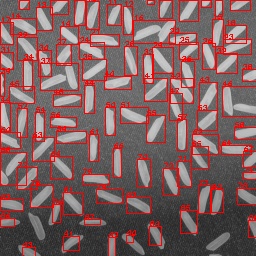
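Otsu's method picks the threshold that maximizes the between-class variance of the two pixel groups it creates, and it works directly on the grayscale histogram. The sketch below is a plain-Python illustration of that criterion, not OpenCV's code; the function name `otsu_threshold` and the synthetic bimodal histogram are assumptions made for the example.

```python
def otsu_threshold(hist):
    """Return the threshold t maximizing the between-class variance.

    `hist` is a list of 256 pixel counts. Pixels with value <= t form
    class 0 and pixels with value > t form class 1.
    """
    total = sum(hist)
    total_sum = sum(level * count for level, count in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels in class 0
    sum0 = 0  # sum of gray levels in class 0
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, nothing to compare
        mu0 = sum0 / w0
        mu1 = (total_sum - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2  # proportional to the variance
        if between > best_var:
            best_t, best_var = t, between
    return best_t

# Synthetic bimodal histogram: a dark cluster near 60 and a bright one near 180.
hist = [0] * 256
for level, count in [(58, 30), (60, 50), (62, 30), (178, 20), (180, 40), (182, 20)]:
    hist[level] = count

t = otsu_threshold(hist)
print(t)
```

On a clearly bimodal histogram the threshold lands in the gap between the two clusters. On a histogram without two distinct modes, Otsu's threshold is less meaningful, which is worth keeping in mind when comparing segmentation results.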

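- Using the rice image, segment the individual grains. First compute the area, length and similar measurements of each region (grain), then compute the mean and variance of the areas and lengths, and analyze how many grains fall within the 3-sigma range.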

import cv2 as cv
import copy

filename = r"D:\opencv\Rice.jpg"
img = cv.imread(filename)
gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)
_, bw = cv.threshold(gray, 0, 0xff, cv.THRESH_OTSU)
element = cv.getStructuringElement(cv.MORPH_CROSS, (3, 3))
bw = cv.morphologyEx(bw, cv.MORPH_OPEN, element)
seg = copy.deepcopy(bw)

# findContours returns (image, contours, hierarchy) in OpenCV 3 and
# (contours, hierarchy) in OpenCV 4; taking the last two values works for both
cnts, hier = cv.findContours(seg, cv.RETR_EXTERNAL, cv.CHAIN_APPROX_SIMPLE)[-2:]
print(cnts)

count = 0
for i in range(len(cnts), 0, -1):
    c = cnts[i - 1]
    area = cv.contourArea(c)
    if area < 10:
        continue  # skip tiny blobs that are most likely noise
    count = count + 1
    print('blob', i, ':', area)
    x, y, w, h = cv.boundingRect(c)
    cv.rectangle(img, (x, y), (x + w, y + h), (0, 0, 0xff), 1)
    cv.putText(img, str(count), (x, y), cv.FONT_HERSHEY_PLAIN, 0.5, (0, 0, 0xff))

print("Number of rice grains:", count)
cv.imshow("Source image", img)
cv.imshow("Thresholded image", bw)

# 93 grains were detected. Two grains were still missed, and in some cases two touching
# grains were detected as one. Area, length and similar measurements come from the contours in cnts.
cv.waitKey()
cv.destroyAllWindows()
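The assignment also asks for the mean, the variance, and the number of grains within the 3-sigma range, which the script does not compute. One way to do it is sketched below with the standard library only. The function name `three_sigma_count` and the area values are hypothetical; in the script the list could be filled with the `area` values collected in the contour loop, and the same function applies to lengths.

```python
from statistics import mean, pstdev

def three_sigma_count(values):
    """Return (mean, std, count), where count is the number of values
    lying within mean +/- 3 * std (population standard deviation)."""
    mu = mean(values)
    sigma = pstdev(values)
    lower, upper = mu - 3 * sigma, mu + 3 * sigma
    inside = sum(1 for v in values if lower <= v <= upper)
    return mu, sigma, inside

# Hypothetical contour areas in pixels: twenty single grains plus one large
# blob, such as two touching grains merged into a single contour.
areas = [150.0] * 10 + [160.0] * 10 + [1000.0]

mu, sigma, inside = three_sigma_count(areas)
print("mean:", mu, "variance:", sigma ** 2)
print("within 3 sigma:", inside, "of", len(areas))
```

Values outside the 3-sigma band are good candidates for merged or fragmented grains, so this count doubles as a rough check on the segmentation quality.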

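Output image:

Extension assignment:

5. Using a checkerboard image and a landscape image of your choice, detect corners with the SIFT, FAST and ORB operators, and compare and analyze the detection results.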

import cv2 as cv

filename1 = r'D:checkerboard.jpg'
img0 = cv.imread(filename1)
img0 = cv.cvtColor(img0, cv.COLOR_BGR2GRAY)

# SIFT was requested; this code actually creates a SURF detector (needs opencv-contrib)
minHessian = 1000
detector = cv.xfeatures2d.SURF_create(minHessian)
# Detect keypoints on the clean grayscale image and draw them on a new image
keyPoint1 = detector.detect(img0)
img1 = cv.drawKeypoints(img0, keyPoint1, None, (0, 0, 0xff))

# ORB (the first argument is the maximum number of features to keep)
detector = cv.ORB_create(1000)
keyPoint2 = detector.detect(img0)
img2 = cv.drawKeypoints(img0, keyPoint2, None, (0, 0, 0xff))

# FAST
fast = cv.FastFeatureDetector_create(threshold=40,
                                     nonmaxSuppression=True,
                                     type=cv.FAST_FEATURE_DETECTOR_TYPE_9_16)
kp = fast.detect(img0, None)
img3 = cv.drawKeypoints(img0, kp, None, color=(0, 0, 255))

# In the original run the ORB and SIFT (here SURF) results looked almost the same, but that
# run drew keyPoint1 on the ORB image too, so the comparison should be rechecked.
# FAST detected more corners. All three still produced many false points.
cv.imshow("FAST", img3)
cv.imshow("SURF keypoints", img1)
cv.imshow("ORB keypoints", img2)
cv.waitKey()
print("keypoint1.size = ", len(keyPoint1))
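To see why FAST tends to fire on many pixels, it helps to look at its core segment test: a pixel is a corner candidate if at least 9 contiguous pixels on a 16-pixel circle around it are all clearly brighter or all clearly darker than the centre. The sketch below illustrates only that test in plain Python. It leaves out the high-speed pre-test and non-maximum suppression that real implementations use, and the names `CIRCLE`, `longest_circular_run`, and `is_fast_corner` as well as the synthetic image are assumptions for the example.

```python
# Offsets (dx, dy) of the 16 pixels on a radius-3 circle, in circular order.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]

def longest_circular_run(flags):
    """Length of the longest run of True values, treating the list as a ring."""
    if all(flags):
        return len(flags)
    best = run = 0
    for flag in flags + flags:  # doubling the list handles wrap-around
        run = run + 1 if flag else 0
        best = max(best, run)
    return best

def is_fast_corner(image, x, y, threshold, n=9):
    """Segment test: at least n contiguous circle pixels are all brighter than
    centre + threshold or all darker than centre - threshold."""
    centre = image[y][x]
    ring = [image[y + dy][x + dx] for dx, dy in CIRCLE]
    brighter = [v > centre + threshold for v in ring]
    darker = [v < centre - threshold for v in ring]
    return max(longest_circular_run(brighter), longest_circular_run(darker)) >= n

# 20x20 test image: a bright quadrant (x >= 10 and y >= 10) on a dark background.
image = [[100 if (x >= 10 and y >= 10) else 0 for x in range(20)]
         for y in range(20)]

at_corner = is_fast_corner(image, 10, 10, threshold=40)  # tip of the quadrant
on_edge = is_fast_corner(image, 10, 15, threshold=40)    # straight vertical edge
in_flat = is_fast_corner(image, 5, 5, threshold=40)      # flat dark region
print(at_corner, on_edge, in_flat)
```

Because the test only compares intensities on a small ring, textured regions in a landscape photo can pass it at many pixels, which may explain part of the larger keypoint count seen with FAST.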

(Optional) Detect the checkerboard corners with the Harris corner detector and compare with the results above.

import cv2 as cv
import numpy as np

filename = r'D:checkerboard.jpg'
img = cv.imread(filename)
gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)

class Cornerdetect():
    def __init__(self, neighbourhood=2, aperture=3, k=0.04):
        self.neighbourhood = neighbourhood
        self.aperture = aperture
        self.k = k

    def detect(self, img):
        # Harris response for every pixel
        self.cornerStrength = cv.cornerHarris(img, self.neighbourhood, self.aperture, self.k)
        # cv.imshow('ini', self.cornerStrength)
        _, self.maxStrength, _, _ = cv.minMaxLoc(self.cornerStrength)
        # A pixel is a local maximum if dilation leaves its value unchanged
        dilated = cv.dilate(self.cornerStrength, cv.getStructuringElement(cv.MORPH_RECT, (3, 3)))
        self.localMax = cv.compare(self.cornerStrength, dilated, cv.CMP_EQ)

    def getCornerMap(self, qualitylevel):  # qualitylevel is usually between 0.03 and 0.05
        # Keep responses above qualitylevel * maximum response that are also local maxima
        self.threshold = qualitylevel * self.maxStrength
        _, self.cornerTH = cv.threshold(self.cornerStrength, self.threshold, 255, cv.THRESH_BINARY)
        self.cornerMap = cv.bitwise_and(self.cornerTH.astype(np.uint8), self.localMax)
        return self.cornerMap

    def drawOnImage(self, image, points, color, radius, thickness):
        print(len(points))
        for x, y in points:
            cv.circle(image, (int(x), int(y)), radius, color, thickness)

Parament = Cornerdetect(neighbourhood=2, aperture=3, k=0.04)
Parament.detect(gray)
cornerMap = Parament.getCornerMap(qualitylevel=0.1)

# np.nonzero returns (rows, cols), while cv.circle expects (x, y) = (col, row)
rows, cols = np.nonzero(cornerMap)
pos = list(zip(cols, rows))
print(pos)

Parament.drawOnImage(img, pos, (0, 0, 255), 3, 1)
cv.imshow('image', img)
cv.waitKey()
cv.destroyAllWindows()

# The Harris result is essentially correct and clearly better than the three detectors above. The exact reason is not yet clear and needs further study.
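One possible piece of the explanation is the Harris score itself, R = det(M) - k * trace(M)^2, where M is the structure tensor built from windowed gradient products. The score is large and positive only when the gradient is strong in two directions, which is exactly the situation at a checkerboard crossing. The sketch below evaluates that formula on hand-picked tensor entries; the function name `harris_response` and the sample values are assumptions for illustration, not output of `cv.cornerHarris`.

```python
def harris_response(sxx, syy, sxy, k=0.04):
    """Harris score R = det(M) - k * trace(M)^2 for the structure tensor
    M = [[sxx, sxy], [sxy, syy]] of windowed gradient products."""
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return det - k * trace * trace

corner = harris_response(10.0, 10.0, 0.0)  # strong gradients in both directions
edge = harris_response(10.0, 0.0, 0.0)     # gradient in one direction only
flat = harris_response(0.0, 0.0, 0.0)      # no gradient at all
print(corner, edge, flat)
```

Corners score positive, straight edges score negative, and flat regions score zero, so thresholding the score and keeping local maxima rejects the long black-white edges of the board.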
