Case 1

Scenario

[root@linux01 logs]# start-all.sh

starting org.apache.spark.deploy.master.Master, logging to /opt/servers/spark-2.4.5/logs/spark-root-org.apache.spark.deploy.master.Master-1-linux01.out

failed to launch: nice -n 0 /opt/servers/spark-2.4.5/bin/spark-class org.apache.spark.deploy.master.Master --host linux01 --port 7077 --webui-port 8080

Spark Command: /opt/servers/jdk1.8/bin/java -cp /opt/servers/spark-2.4.5/conf/:/opt/servers/spark-2.4.5/jars/*:/opt/servers/hadoop-2.9.2/etc/hadoop/:/opt/servers/hadoop-2.9.2/share/hadoop/common/lib/*:/opt/seroop/common/*:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/*:/opt/servers/hadoop-2.9.2/share/hadoop/yarn/:/opt/serversyarn/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/yarn/*:/opt/servers/hadoop-2.9.2/share/hadoop/mapreduce/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/mapreduce/*:/opt/servers/hadoop-2.9.2/contrib/capacity-schedecoveryMode=ZOOKEEPER - Dspark.deploy.zookeeper.url=linux01,linux02,linux03 -Dspark.deploy.zookeeper.dir=/spark -Xmx1g org.apache.spark.deploy.master.Master --host linux01 --port 7077 --webui-port 8080

========================================

Unrecognized option: -

Error: Could not create the Java Virtual Machine.

Error: A fatal exception has occurred. Program will exit.

full log in /opt/servers/spark-2.4.5/logs/spark-root-org.apache.spark.deploy.master.Master-1-linux01.out

linux03: starting org.apache.spark.deploy.worker.Worker, logging to /opt/servers/spark-2.4.5/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-linux03.out

linux01: starting org.apache.spark.deploy.worker.Worker, logging to /opt/servers/spark-2.4.5/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-linux01.out

linux02: starting org.apache.spark.deploy.worker.Worker, logging to /opt/servers/spark-2.4.5/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-linux02.out

linux01: failed to launch: nice -n 0 /opt/servers/spark-2.4.5/bin/spark-class org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://linux01:7077

linux03: failed to launch: nice -n 0 /opt/servers/spark-2.4.5/bin/spark-class org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://linux01:7077

linux02: failed to launch: nice -n 0 /opt/servers/spark-2.4.5/bin/spark-class org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://linux01:7077

linux01: Spark Command: /opt/servers/jdk1.8/bin/java -cp /opt/servers/spark-2.4.5/conf/:/opt/servers/spark-2.4.5/jars/*:/opt/servers/hadoop-2.9.2/etc/hadoop/:/opt/servers/hadoop-2.9.2/share/hadoop/common/lib/*share/hadoop/common/*:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/*:/opt/servers/hadoop-2.9.2/share/hadoop/yarn/:/ope/hadoop/yarn/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/yarn/*:/opt/servers/hadoop-2.9.2/share/hadoop/mapreduce/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/mapreduce/*:/opt/servers/hadoop-2.9.2/contrib/capac.deploy.recoveryMode=ZOOKEEPER - Dspark.deploy.zookeeper.url=linux01,linux02,linux03 -Dspark.deploy.zookeeper.dir=/spark -Xmx1g org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://linux01:7077

linux01: ========================================

linux01: Unrecognized option: -

linux01: Error: Could not create the Java Virtual Machine.

linux01: Error: A fatal exception has occurred. Program will exit.

linux03: Spark Command: /opt/servers/jdk1.8/bin/java -cp /opt/servers/spark-2.4.5/conf/:/opt/servers/spark-2.4.5/jars/*:/opt/servers/hadoop-2.9.2/etc/hadoop/:/opt/servers/hadoop-2.9.2/share/hadoop/common/lib/*share/hadoop/common/*:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/*:/opt/servers/hadoop-2.9.2/share/hadoop/yarn/:/ope/hadoop/yarn/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/yarn/*:/opt/servers/hadoop-2.9.2/share/hadoop/mapreduce/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/mapreduce/*:/opt/servers/hadoop-2.9.2/contrib/capac.deploy.recoveryMode=ZOOKEEPER - Dspark.deploy.zookeeper.url=linux01,linux02,linux03 -Dspark.deploy.zookeeper.dir=/spark -Xmx1g org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://linux01:7077

linux03: ========================================

linux03: Unrecognized option: -

linux03: Error: Could not create the Java Virtual Machine.

linux03: Error: A fatal exception has occurred. Program will exit.

linux02: Spark Command: /opt/servers/jdk1.8/bin/java -cp /opt/servers/spark-2.4.5/conf/:/opt/servers/spark-2.4.5/jars/*:/opt/servers/hadoop-2.9.2/etc/hadoop/:/opt/servers/hadoop-2.9.2/share/hadoop/common/lib/*share/hadoop/common/*:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/*:/opt/servers/hadoop-2.9.2/share/hadoop/yarn/:/ope/hadoop/yarn/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/yarn/*:/opt/servers/hadoop-2.9.2/share/hadoop/mapreduce/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/mapreduce/*:/opt/servers/hadoop-2.9.2/contrib/capac.deploy.recoveryMode=ZOOKEEPER - Dspark.deploy.zookeeper.url=linux01,linux02,linux03 -Dspark.deploy.zookeeper.dir=/spark -Xmx1g org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://linux01:7077

linux02: ========================================

linux02: Unrecognized option: -

linux02: Error: Could not create the Java Virtual Machine.

linux02: Error: A fatal exception has occurred. Program will exit.

linux02: full log in /opt/servers/spark-2.4.5/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-linux02.out

linux01: full log in /opt/servers/spark-2.4.5/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-linux01.out

linux03: full log in /opt/servers/spark-2.4.5/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-linux03.out

Logs

[root@linux01 logs]# pwd

/opt/servers/spark-2.4.5/logs

[root@linux01 logs]#

[root@linux01 logs]#

[root@linux01 logs]# ls

spark-root-org.apache.spark.deploy.history.HistoryServer-1-linux01.out spark-root-org.apache.spark.deploy.master.Master-1-linux01.out.3 spark-root-org.apache.spark.deploy.worker.Worker-1-linux01.out.2

spark-root-org.apache.spark.deploy.history.HistoryServer-1-linux01.out.1 spark-root-org.apache.spark.deploy.master.Master-1-linux01.out.4 spark-root-org.apache.spark.deploy.worker.Worker-1-linux01.out.3

spark-root-org.apache.spark.deploy.master.Master-1-linux01.out spark-root-org.apache.spark.deploy.master.Master-1-linux01.out.5 spark-root-org.apache.spark.deploy.worker.Worker-1-linux01.out.4

spark-root-org.apache.spark.deploy.master.Master-1-linux01.out.1 spark-root-org.apache.spark.deploy.worker.Worker-1-linux01.out spark-root-org.apache.spark.deploy.worker.Worker-1-linux01.out.5

spark-root-org.apache.spark.deploy.master.Master-1-linux01.out.2 spark-root-org.apache.spark.deploy.worker.Worker-1-linux01.out.1

[root@linux01 logs]#

[root@linux01 logs]# cat spark-root-org.apache.spark.deploy.worker.Worker-1-linux01.out

Spark Command: /opt/servers/jdk1.8/bin/java -cp /opt/servers/spark-2.4.5/conf/:/opt/servers/spark-2.4.5/jars/*:/opt/servers/hadoop-2.9.2/etc/hadoop/:/opt/servers/hadoop-2.9.2/share/hadoop/common/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/common/*:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/hdfs/*:/opt/servers/hadoop-2.9.2/share/hadoop/yarn/:/opt/servers/hadoop-2.9.2/share/hadoop/yarn/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/yarn/*:/opt/servers/hadoop-2.9.2/share/hadoop/mapreduce/lib/*:/opt/servers/hadoop-2.9.2/share/hadoop/mapreduce/*:/opt/servers/hadoop-2.9.2/contrib/capacity-scheduler/*.jar -Dspark.deploy.recoveryMode=ZOOKEEPER - Dspark.deploy.zookeeper.url=linux01,linux02,linux03 -Dspark.deploy.zookeeper.dir=/spark -Xmx1g org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://linux01:7077

========================================

Unrecognized option: -

Error: Could not create the Java Virtual Machine.

Error: A fatal exception has occurred. Program will exit.

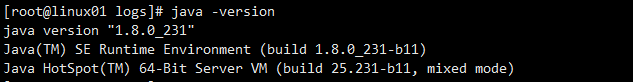
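Why does one stray space produce "Unrecognized option: -"? Below is a minimal sketch, assuming the three -D flags were set in a single whitespace-separated string, as Spark's standalone HA documentation does with SPARK_DAEMON_JAVA_OPTS. OPTS is a hypothetical variable name. The shell's word splitting here is only an analogy for how the string ends up as separate java arguments: "- Dspark..." becomes a bare "-" plus "Dspark...".

```shell
#!/usr/bin/env bash
# Hypothetical reproduction: OPTS mirrors the flags printed in the
# "Spark Command:" line above, including the stray space after "-".
OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER - Dspark.deploy.zookeeper.url=linux01,linux02,linux03 -Dspark.deploy.zookeeper.dir=/spark"

# Unquoted expansion splits the string on whitespace, roughly what happens
# when the options are spliced into the java command line.
set -- $OPTS

# Show each resulting argument on its own line, bracketed.
for arg in "$@"; do
  printf '[%s]\n' "$arg"
done
# Prints:
# [-Dspark.deploy.recoveryMode=ZOOKEEPER]
# [-]
# [Dspark.deploy.zookeeper.url=linux01,linux02,linux03]
# [-Dspark.deploy.zookeeper.dir=/spark]
```

The bare "-" reaches java before the main class, so the JVM tries to parse it as an option and exits before any Spark code runs. Even without that error, "Dspark.deploy.zookeeper.url=..." has lost its leading dash and would not be set as a system property.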

Environment check

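- Java: OK

- ZooKeeper cluster: OK

  - Processes running normally

  - Starts normally

  - Node creation works (tested with create -s /zk-test 123)

- Spark: check spark-env.sh. The log already points there: the JVM options contain "- Dspark.deploy.zookeeper.url=linux01,linux02,linux03", with a space between "-" and "D". The JVM therefore receives a bare "-", rejects it with "Unrecognized option: -", and neither the Master nor any Worker can start. The fix is to remove that space so the flag reads -Dspark.deploy.zookeeper.url=linux01,linux02,linux03.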

PS: I'm so done with this!!!!
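Here is a sketch of the corrected setting. It assumes the HA options are set through SPARK_DAEMON_JAVA_OPTS, the variable Spark's standalone high-availability docs use, since the original spark-env.sh is not reproduced here. The values are the ones visible in the log. Every flag is written -D<key>=<value> with no space after the dash.

```shell
# conf/spark-env.sh (excerpt): ZooKeeper-based standalone HA.
# Assumption: these options live in SPARK_DAEMON_JAVA_OPTS.
# Each flag is "-D<key>=<value>"; there is no space between "-" and "D".
export SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=linux01,linux02,linux03 -Dspark.deploy.zookeeper.dir=/spark"
```

The Master and all three Workers (linux01, linux02, linux03) printed the same broken option, so every node's copy of spark-env.sh needs the fix before start-all.sh is run again. One way is to copy the corrected file to the other nodes with scp.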

Summary

Whichever framework won't start, check that framework's own configuration file (here, spark-env.sh).
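For this particular typo, that check can be automated with a small scan. The sketch below looks for a "-" separated from a following "D" by whitespace. check_spark_env is a hypothetical helper and is not part of Spark. It ignores comment lines, returns 1 when it finds a split flag, and returns 2 if the file cannot be read.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark): report non-comment lines in a
# spark-env.sh where "-" is separated from a following "D" by whitespace,
# i.e. a -D<key>=<value> flag split in two.
# Returns 0 if clean, 1 if a split flag is found, 2 if the file is unreadable.
check_spark_env() {
  local conf="$1"
  local matches
  if [ ! -r "$conf" ]; then
    echo "cannot read $conf" >&2
    return 2
  fi
  # -n prefixes each hit with its line number; "--" ends grep's options.
  # The second grep drops hits on comment lines ("N:   # ...").
  matches=$(grep -nE -- '(^|[[:space:]"=])-[[:space:]]+D' "$conf" \
    | grep -vE '^[0-9]+:[[:space:]]*#' || true)
  if [ -n "$matches" ]; then
    printf '%s\n' "$matches"
    echo "split -D flag found in $conf" >&2
    return 1
  fi
  echo "no split -D flags in $conf"
  return 0
}
```

For example, run check_spark_env /opt/servers/spark-2.4.5/conf/spark-env.sh on each node before start-all.sh. The check is deliberately narrow. It catches this one class of typo and does not validate spark-env.sh in general.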
