1. Installing geopandas

See the first comment on https://zhuanlan.zhihu.com/p/137628480

pip install wheel

pip install pipwin

pipwin install numpy

pipwin install pandas

pipwin install shapely

pipwin install gdal

pipwin install fiona

pipwin install pyproj

pipwin install six

pipwin install rtree

pipwin install geopandas

2. Difference between pip install and conda install

https://blog.csdn.net/chanmufeng/article/details/107415218

- Enabling matplotlib's interactive plotting mode in Jupyter

Use %matplotlib widget

Installation: see https://www.sohu.com/a/244095207_717210

Install ipympl with conda:

conda install -c conda-forge ipympl

# If using the Notebook (this is the one I used)

conda install -c conda-forge widgetsnbextension

# If using JupyterLab

conda install nodejs

jupyter labextension install @jupyter-widgets/jupyterlab-manager

Install ipympl with pip:

pip install ipympl

# If using JupyterLab

# Install nodejs: https://nodejs.org/en/download/

jupyter labextension install @jupyter-widgets/jupyterlab-manager

For a development installation (requires Node.js):

git clone https://github.com/matplotlib/jupyter-matplotlib.git

cd jupyter-matplotlib

pip install -e .

jupyter nbextension install --py --symlink --sys-prefix ipympl

jupyter nbextension enable --py --sys-prefix ipympl

jupyter labextension install @jupyter-widgets/jupyterlab-manager --no-build

jupyter labextension link ./js

cd js && npm run watch

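import geopandas as gpd
from shapely import geometry

# Create a GeoSeries that mixes points, lines and polygons. The hole of the fifth
# geometry (a polygon with a hole) is built with [::-1] to reverse its coordinate order.
# This works around a bug in GeoSeries.plot() when drawing polygons with holes: if the
# exterior ring and the interior hole are created with the same orientation (both
# clockwise or both counterclockwise), the hole is filled in. For details see
# https://github.com/geopandas/geopandas/issues/951
s = gpd.GeoSeries([geometry.Polygon([(0, 0), (0.5, 0.5), (1, 0), (0.5, -0.5)]),
                   geometry.Polygon([(1, 1), (1.5, 1.5), (2, 1), (1.5, -1.5)]),
                   geometry.Point(3, 3),
                   geometry.LineString([(2, 2), (0, 3)]),
                   geometry.Polygon([(4, 4), (8, 4), (8, 8), (4, 8)],
                                    [[(5, 5), (7, 5), (7, 7), (5, 7)][::-1]])])

# Enable matplotlib's interactive plotting mode in Jupyter
%matplotlib widget

s.plot()  # quick visualization of s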
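The ring-orientation issue described in the comments can be checked by hand. The following is a minimal sketch, assuming plain lists of (x, y) tuples; the helper names `signed_area` and `is_ccw` are made up for illustration and are not part of geopandas or shapely. It uses the shoelace formula, whose sign tells whether a ring runs counterclockwise or clockwise.

```python
def signed_area(ring):
    """Shoelace formula: positive for counterclockwise rings, negative for clockwise."""
    total = 0.0
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]  # wrap around to close the ring
        total += x1 * y2 - x2 * y1
    return total / 2.0


def is_ccw(ring):
    """True when the ring's vertices are listed counterclockwise."""
    return signed_area(ring) > 0


# Same coordinates as the fifth geometry in the GeoSeries
exterior = [(4, 4), (8, 4), (8, 8), (4, 8)]
hole = [(5, 5), (7, 5), (7, 7), (5, 7)]

print(is_ccw(exterior))    # orientation of the outer ring
print(is_ccw(hole))        # same orientation as the outer ring
print(is_ccw(hole[::-1]))  # reversed, so opposite to the outer ring
```

Reversing the hole with `[::-1]` flips the sign of its area, which is why the two rings end up with opposite orientations in the snippet that builds the GeoSeries.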

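4. Difference between df.loc and df.iloc

.loc is label-based: rows can be selected with a boolean mask (or index labels), and columns are selected by column name

.iloc is position-based: both rows and columns are selected by integer position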

world.loc[world['pop_est']>1000000000,['pop_est','name']]

world.iloc[[98,139],[0,2]]
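To make the difference concrete, here is a small sketch on a hypothetical three-row DataFrame standing in for `world` (the column values and the non-default index are invented for illustration). It shows that `.loc` filters by a boolean mask and column names, while `.iloc` ignores labels and uses positions only.

```python
import pandas as pd

# Hypothetical stand-in for the `world` GeoDataFrame
df = pd.DataFrame(
    {"name": ["A", "B", "C"], "pop_est": [5, 50, 500], "area": [1.0, 2.0, 3.0]},
    index=[10, 20, 30],  # labels deliberately differ from positions
)

# Label-based: boolean mask for rows, column names for columns
by_label = df.loc[df["pop_est"] > 10, ["pop_est", "name"]]

# Position-based: first and third row, first and third column
by_position = df.iloc[[0, 2], [0, 2]]

print(by_label)
print(by_position)
```

Because the index labels here are 10, 20, 30, `df.iloc[[0, 2]]` returns the rows labelled 10 and 30, whereas `df.loc[[0, 2]]` would raise a KeyError.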

5. geopandas tutorial

https://www.cnblogs.com/feffery/p/11898190.html

6. folium

Installation

conda install -c conda-forge folium

Usage: https://blog.csdn.net/weixin_38169413/article/details/104806257

https://www.cnblogs.com/wlfya/p/14462071.html

https://www.cnblogs.com/feffery/p/9282808.html

https://blog.csdn.net/kevin_7july/article/details/109600927

https://www.cnblogs.com/feffery/p/9288138.html

https://www.jianshu.com/p/1ce378278847

7. kepler

Tutorials: https://www.cnblogs.com/feffery/p/12987968.html

https://sspai.com/post/55655

Installation: pip install keplergl

To display it in the notebook:

jupyter nbextension install --py --sys-prefix keplergl

jupyter nbextension enable --py --sys-prefix keplergl

import matplotlib.pyplot as plt

plt.rcParams['font.sans-serif'] = ['SimSun']  # set the default font to SimSun so Chinese text renders

plt.rcParams['axes.unicode_minus'] = False  # stop the minus sign '-' from showing as a box (and raising errors) when saving figures

Model building

For GBDT, see https://www.bilibili.com/video/BV1Ca4y1t7DS, which covers the topic very thoroughly.

from sklearn import datasets
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import f1_score

# Load the dataset
iris = datasets.load_iris()
feature = iris.feature_names
X = iris.data
y = iris.target

# Random forest
clf = RandomForestClassifier(n_estimators=200)
train_X, test_X, train_y, test_y = train_test_split(X, y, test_size=0.1, random_state=5)
clf.fit(train_X, train_y)
test_pred = clf.predict(test_X)

2.

# Inspect the feature importances
print(str(feature) + '\n' + str(clf.feature_importances_))

3. F1-score for model evaluation

# For a binary classification problem, use average='binary'
# To account for class imbalance by weighting each class's F1 by its support, use 'weighted'
# To ignore class imbalance and take the unweighted (macro) mean over classes, use 'macro'
score = f1_score(test_y, test_pred, average='macro')
print("Random forest - macro:", score)
score = f1_score(test_y, test_pred, average='weighted')
print("Random forest - weighted:", score)
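The difference between 'macro' and 'weighted' is easiest to see on an imbalanced toy example. The sketch below recomputes both averages by hand in plain Python; the labels are invented, the helper names are hypothetical, and it assumes every predicted label also occurs in `y_true`.

```python
from collections import Counter


def per_class_f1(y_true, y_pred):
    """Return {label: F1} from true-positive, false-positive and false-negative counts."""
    scores = {}
    for label in sorted(set(y_true)):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    scores = per_class_f1(y_true, y_pred)
    return sum(scores.values()) / len(scores)


def weighted_f1(y_true, y_pred):
    """Mean of the per-class F1 scores weighted by each class's support."""
    scores = per_class_f1(y_true, y_pred)
    support = Counter(y_true)
    return sum(scores[c] * support[c] for c in scores) / len(y_true)


# Class 0 dominates; the single class-1 sample is misclassified as class 2
y_true = [0, 0, 0, 0, 1, 2]
y_pred = [0, 0, 0, 0, 2, 2]

print(macro_f1(y_true, y_pred))     # 5/9: the failed minority class counts as much as class 0
print(weighted_f1(y_true, y_pred))  # 7/9: dominated by the well-predicted majority class
```

The macro average punishes the missed minority class much harder, which is why it is the stricter choice when the classes are imbalanced.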

4.

import lightgbm as lgb
from sklearn import datasets
from sklearn.model_selection import train_test_split
import numpy as np
from sklearn.metrics import roc_auc_score, accuracy_score, f1_score
import matplotlib.pyplot as plt

# Load the data
iris = datasets.load_iris()

# Split into training and test sets
X_train, X_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size=0.3)

# Convert to LightGBM's Dataset format
train_data = lgb.Dataset(X_train, label=y_train)
validation_data = lgb.Dataset(X_test, label=y_test)

# Parameters
results = {}
params = {
    'learning_rate': 0.1,
    'lambda_l1': 0.1,
    'lambda_l2': 0.9,
    'max_depth': 1,
    'objective': 'multiclass',  # objective function
    'num_class': 3,
    'verbose': -1
}

# Train the model. Per-iteration metrics are stored in `results` through the
# record_evaluation callback (the evals_result= argument was removed from lgb.train in LightGBM 4.0).
gbm = lgb.train(params, train_data,
                valid_sets=[validation_data, train_data],
                valid_names=['validate', 'train'],
                callbacks=[lgb.record_evaluation(results)])

# Predict: each row holds one probability per class, so take the most likely class
y_pred_test = np.argmax(gbm.predict(X_test), axis=1)
y_pred_data = np.argmax(gbm.predict(X_train), axis=1)

# Evaluate
print(accuracy_score(y_test, y_pred_test))
print('train', f1_score(y_train, y_pred_data, average='macro'))
print('validation', f1_score(y_test, y_pred_test, average='macro'))

5. Overfitting

6. Threshold adjustment

7. Grid search

My computer crashed; it was too slow.
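One likely reason an exhaustive grid search is this slow: the number of model fits is the product of every parameter's candidate count, multiplied by the number of cross-validation folds. The sketch below only does that arithmetic. The grid is hypothetical (LightGBM-style parameter names with made-up candidate values), not the grid actually used here.

```python
from itertools import product

# Hypothetical grid, for illustration only
param_grid = {
    "learning_rate": [0.01, 0.05, 0.1],
    "num_leaves": [15, 31, 63, 127],
    "max_depth": [3, 5, 7],
    "min_data_in_leaf": [10, 30, 50],
}
n_folds = 5

# Every combination of candidate values is trained once per fold
combinations = list(product(*param_grid.values()))
total_fits = len(combinations) * n_folds

print(len(combinations), total_fits)
```

Even this modest grid needs several hundred fits, so shrinking the grid or sampling it (as the hyperopt search later in these notes does) is usually far cheaper.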

8. Smart Ocean data

import pandas as pd

import numpy as np

from tqdm import tqdm

from sklearn.metrics import classification_report, f1_score

from sklearn.model_selection import StratifiedKFold, KFold,train_test_split

import lightgbm as lgb

import os

import warnings

from hyperopt import fmin, tpe, hp, STATUS_OK, Trials

use_train = all_df[all_df['label'] != -1]
use_test = all_df[all_df['label'] == -1]  # rows with label == -1 form the test set
use_feats = [c for c in use_train.columns if c not in ['ID', 'label']]
X_train, X_verify, y_train, y_verify = train_test_split(use_train[use_feats], use_train['label'], test_size=0.3, random_state=0)

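############## Feature-selection parameters ###################
selectFeatures = 200  # number of features to keep
earlyStopping = 100  # early-stopping rounds
select_num_boost_round = 1000  # boosting rounds for the feature-selection model

# Base parameters
selfParam = {
    'learning_rate': 0.01,  # learning rate
    'boosting': 'dart',  # boosting type: gbdt or dart
    'objective': 'multiclass',  # multiclass classification
    'metric': 'None',
    'num_leaves': 32,  # maximum number of leaves per tree
    'feature_fraction': 0.7,  # fraction of features sampled for each tree
    'bagging_fraction': 0.8,  # fraction of training rows sampled
    'min_data_in_leaf': 30,  # minimum number of samples in a leaf
    'num_class': 3,
    'max_depth': 6,  # maximum tree depth
    'num_threads': 8,  # number of LightGBM threads
    'min_data_in_bin': 30,  # minimum number of samples per bin
    'max_bin': 256,  # maximum number of bins
    'is_unbalance': True,  # unbalanced classes
    'train_metric': True,
    'verbose': -1,
}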

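# Feature selection ---------------------------------------------------------------------------------
def f1_score_eval(preds, valid_df):
    labels = valid_df.get_label()
    preds = np.asarray(preds)
    # Flat class-major array (older LightGBM) -> (n_rows, 3); LightGBM 4.x already passes (n_rows, 3)
    probs = preds.reshape(3, -1).T if preds.ndim == 1 else preds
    preds = np.argmax(probs, axis=1)
    scores = f1_score(y_true=labels, y_pred=preds, average='macro')
    return 'f1_score', scores, True  # (metric name, value, higher is better)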

train_data = lgb.Dataset(data=X_train, label=y_train, feature_name=use_feats)
valid_data = lgb.Dataset(data=X_verify, label=y_verify, reference=train_data, feature_name=use_feats)

# Early stopping is passed as a callback (the early_stopping_rounds= and verbose_eval=
# arguments were removed from lgb.train in LightGBM 4.0). Feature names are already set on the Datasets.
sm = lgb.train(params=selfParam, train_set=train_data, num_boost_round=select_num_boost_round,
               valid_sets=[valid_data], valid_names=['valid'],
               feval=f1_score_eval, keep_training_booster=True,
               callbacks=[lgb.early_stopping(earlyStopping, verbose=False)])

features_importance = {k: v for k, v in zip(sm.feature_name(), sm.feature_importance(iteration=sm.best_iteration))}
sort_feature_importance = sorted(features_importance.items(), key=lambda x: x[1], reverse=True)
print('total feature best score:', sm.best_score)
print('total feature importance:', sort_feature_importance)
print('select forward {} features:{}'.format(selectFeatures, sort_feature_importance[:selectFeatures]))

# model_feature holds the names of the selected features
model_feature = [k[0] for k in sort_feature_importance[:selectFeatures]]
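The `reshape(3, -1)` inside `f1_score_eval` only makes sense if the predictions arrive as one flat array grouped by class first and by row second. The pure-Python sketch below illustrates that assumed layout (score of row i for class j stored at index `j * n_rows + i`); the function name and the numbers are invented for illustration.

```python
def argmax_per_row(flat_preds, n_class):
    """Turn a flat class-major score list into one predicted class per row.

    Assumed layout: flat_preds[j * n_rows + i] is the score of row i for class j.
    """
    n_rows = len(flat_preds) // n_class
    result = []
    for i in range(n_rows):
        row_scores = [flat_preds[j * n_rows + i] for j in range(n_class)]
        result.append(row_scores.index(max(row_scores)))
    return result


# Two rows, three classes: all class-0 scores first, then class 1, then class 2
flat = [0.7, 0.1,   # class 0 for rows 0 and 1
        0.2, 0.3,   # class 1
        0.1, 0.6]   # class 2

print(argmax_per_row(flat, 3))  # [0, 2]
```

Reshaping such an array to `(n_rows, 3)` directly would mix scores from different rows, which is why the metric reshapes to `(3, -1)` first.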

############## Hyperparameter search space ###################
spaceParam = {
    'boosting': hp.choice('boosting', ['gbdt', 'dart']),
    'learning_rate': hp.loguniform('learning_rate', np.log(0.01), np.log(0.05)),
    'num_leaves': hp.quniform('num_leaves', 3, 66, 3),
    'feature_fraction': hp.uniform('feature_fraction', 0.7, 1),
    'min_data_in_leaf': hp.quniform('min_data_in_leaf', 10, 50, 5),
    'num_boost_round': hp.quniform('num_boost_round', 500, 2000, 100),
    'bagging_fraction': hp.uniform('bagging_fraction', 0.6, 1)
}

# Hyperparameter optimization ---------------------------------------------------------------------------------
def getParam(param):
    # hp.quniform yields floats, so cast the integer-valued parameters
    for k in ['num_leaves', 'min_data_in_leaf', 'num_boost_round']:
        param[k] = int(float(param[k]))
    for k in ['learning_rate', 'feature_fraction', 'bagging_fraction']:
        param[k] = float(param[k])
    # fmin returns the index of the chosen hp.choice option, so map it back to its name
    if param['boosting'] == 0:
        param['boosting'] = 'gbdt'
    elif param['boosting'] == 1:
        param['boosting'] = 'dart'
    # Add the fixed parameters
    param['objective'] = 'multiclass'
    param['max_depth'] = 7
    param['num_threads'] = 8
    param['is_unbalance'] = True
    param['metric'] = 'None'
    param['train_metric'] = True
    param['verbose'] = -1
    param['bagging_freq'] = 5
    param['num_class'] = 3
    param['feature_pre_filter'] = False
    return param


def lossFun(param):
    param = getParam(param)
    # Early stopping is passed as a callback (early_stopping_rounds= and verbose_eval=
    # were removed from lgb.train in LightGBM 4.0)
    m = lgb.train(params=param, train_set=train_data, num_boost_round=param['num_boost_round'],
                  valid_sets=[train_data, valid_data], valid_names=['train', 'valid'],
                  feval=f1_score_eval, keep_training_booster=True,
                  callbacks=[lgb.early_stopping(earlyStopping, verbose=False)])
    train_f1_score = m.best_score['train']['f1_score']
    valid_f1_score = m.best_score['valid']['f1_score']
    loss_f1_score = 1 - valid_f1_score  # hyperopt minimizes, so use 1 - F1 as the loss
    print('train f1_score:{}, validation f1_score:{}, loss_f1_score:{}'.format(train_f1_score, valid_f1_score, loss_f1_score))
    return {'loss': loss_f1_score, 'params': param, 'status': STATUS_OK}

features = model_feature
train_data = lgb.Dataset(data=X_train[model_feature], label=y_train, feature_name=features)
valid_data = lgb.Dataset(data=X_verify[features], label=y_verify, reference=train_data, feature_name=features)

best_param = fmin(fn=lossFun, space=spaceParam, algo=tpe.suggest, max_evals=100, trials=Trials())
best_param = getParam(best_param)
print('Search best param:', best_param)


def sub_on_line_lgb(train_, test_, pred, label, cate_cols, split,
                    is_shuffle=True,
                    use_cart=False,
                    get_prob=False):
    n_class = 3
    train_pred = np.zeros((train_.shape[0], n_class))
    test_pred = np.zeros((test_.shape[0], n_class))
    n_splits = 5

    assert split in ['kf', 'skf'], '{} Not Support this type of split way'.format(split)

    if split == 'kf':
        folds = KFold(n_splits=n_splits, shuffle=is_shuffle, random_state=1024)
        kf_way = folds.split(train_[pred])
    else:
        # Unlike KFold, StratifiedKFold samples by class, so every training and
        # validation fold keeps the class proportions of the original dataset.
        folds = StratifiedKFold(n_splits=n_splits,
                                shuffle=is_shuffle,
                                random_state=1024)
        kf_way = folds.split(train_[pred], train_[label])

    print('Use {} features ...'.format(len(pred)))

    # Replace the parameters below with the ones found by the Bayesian optimization
    params = {
        'learning_rate': 0.05,
        'boosting_type': 'gbdt',
        'objective': 'multiclass',
        'metric': 'None',
        'num_leaves': 60,
        'feature_fraction': 0.86,
        'bagging_fraction': 0.73,
        'bagging_freq': 5,
        'seed': 1,
        'bagging_seed': 1,
        'feature_fraction_seed': 7,
        'min_data_in_leaf': 15,
        'num_class': n_class,
        'nthread': 8,
        'verbose': -1,
        'num_boost_round': 1100,
        'max_depth': 7,
    }

    for n_fold, (train_idx, valid_idx) in enumerate(kf_way, start=1):
        print('the {} training start ...'.format(n_fold))
        train_x, train_y = train_[pred].iloc[train_idx], train_[label].iloc[train_idx]
        valid_x, valid_y = train_[pred].iloc[valid_idx], train_[label].iloc[valid_idx]

        if use_cart:
            dtrain = lgb.Dataset(train_x, label=train_y, categorical_feature=cate_cols)
            dvalid = lgb.Dataset(valid_x, label=valid_y, categorical_feature=cate_cols)
        else:
            dtrain = lgb.Dataset(train_x, label=train_y)
            dvalid = lgb.Dataset(valid_x, label=valid_y)

        # Early stopping and periodic logging are passed as callbacks (early_stopping_rounds=
        # and verbose_eval= were removed from lgb.train in LightGBM 4.0)
        clf = lgb.train(params=params,
                        train_set=dtrain,
                        # num_boost_round=3000,
                        valid_sets=[dvalid],
                        feval=f1_score_eval,
                        callbacks=[lgb.early_stopping(100), lgb.log_evaluation(100)])

        # Out-of-fold predictions for the training rows held out in this fold
        train_pred[valid_idx] = clf.predict(valid_x, num_iteration=clf.best_iteration)
        # Test-set probabilities are averaged over the folds
        test_pred += clf.predict(test_[pred], num_iteration=clf.best_iteration) / folds.n_splits

    print(classification_report(train_[label], np.argmax(train_pred, axis=1), digits=4))

    if get_prob:
        # Class names: 围网 (purse seine), 刺网 (gillnet), 拖网 (trawl)
        sub_probs = ['qyxs_prob_{}'.format(q) for q in ['围网', '刺网', '拖网']]
        prob_df = pd.DataFrame(test_pred, columns=sub_probs)
        prob_df['ID'] = test_['ID'].values
        return prob_df
    else:
        test_['label'] = np.argmax(test_pred, axis=1)
        return test_[['ID', 'label']]

use_train = all_df[all_df['label'] != -1]

use_test = all_df[all_df['label'] == -1]

# use_feats = [c for c in use_train.columns if c not in ['ID', 'label']]

use_feats=model_feature

sub = sub_on_line_lgb(use_train, use_test, use_feats, 'label', [], 'kf',is_shuffle=True,use_cart=False,get_prob=False)

My computer went to sleep partway through this step, so I'll run it again.

Task5 Model ensembling

1.

import warnings
import itertools

import numpy as np
import pandas as pd
import matplotlib
import matplotlib.pyplot as plt
import matplotlib.gridspec as gridspec
import seaborn as sns
import lightgbm as lgb

from sklearn import datasets
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.ensemble import RandomForestClassifier, RandomForestRegressor
from sklearn.ensemble import AdaBoostClassifier, VotingClassifier
from sklearn.neural_network import MLPClassifier, MLPRegressor
from sklearn.model_selection import cross_val_score, train_test_split, StratifiedKFold, KFold
from sklearn.metrics import mean_squared_error, mean_absolute_error
from sklearn.metrics import classification_report, f1_score
# from mlxtend.classifier import StackingClassifier
# from mlxtend.plotting import plot_learning_curves
# from mlxtend.plotting import plot_decision_regions

warnings.filterwarnings('ignore')
%matplotlib inline

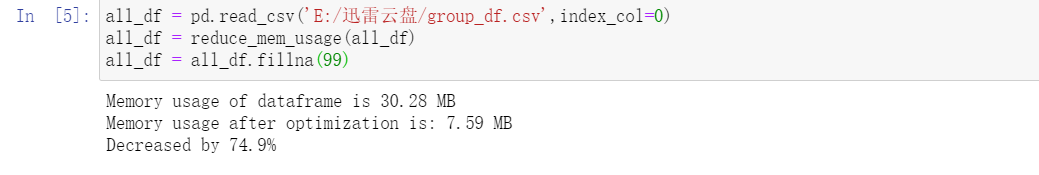

def reduce_mem_usage(df):
    start_mem = df.memory_usage().sum() / 1024**2
    print('Memory usage of dataframe is {:.2f} MB'.format(start_mem))
    for col in df.columns:
        col_type = df[col].dtype
        if col_type != object:
            c_min = df[col].min()
            c_max = df[col].max()
            if str(col_type)[:3] == 'int':
                if c_min > np.iinfo(np.int8).min and c_max < np.iinfo(np.int8).max:
                    df[col] = df[col].astype(np.int8)
                elif c_min > np.iinfo(np.int16).min and c_max < np.iinfo(np.int16).max:
                    df[col] = df[col].astype(np.int16)
                elif c_min > np.iinfo(np.int32).min and c_max < np.iinfo(np.int32).max:
                    df[col] = df[col].astype(np.int32)
                elif c_min > np.iinfo(np.int64).min and c_max < np.iinfo(np.int64).max:
                    df[col] = df[col].astype(np.int64)
            else:
                if c_min > np.finfo(np.float16).min and c_max < np.finfo(np.float16).max:
                    df[col] = df[col].astype(np.float16)
                elif c_min > np.finfo(np.float32).min and c_max < np.finfo(np.float32).max:
                    df[col] = df[col].astype(np.float32)
                else:
                    df[col] = df[col].astype(np.float64)
        else:
            df[col] = df[col].astype('category')
    end_mem = df.memory_usage().sum() / 1024**2
    print('Memory usage after optimization is: {:.2f} MB'.format(end_mem))
    print('Decreased by {:.1f}%'.format(100 * (start_mem - end_mem) / start_mem))
    return df
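The integer branch of `reduce_mem_usage` picks the narrowest signed type whose range strictly contains the column's minimum and maximum. The same selection rule can be sketched without numpy or pandas. The helper name `smallest_int_bits` is hypothetical, and the strict comparisons are kept as in the function, so a value sitting exactly on a type's limit is pushed to the next wider type.

```python
def smallest_int_bits(c_min, c_max):
    """Return the narrowest signed width (8, 16, 32 or 64 bits) whose range
    strictly contains [c_min, c_max], or None if no width qualifies."""
    for bits in (8, 16, 32, 64):
        lo = -(2 ** (bits - 1))      # e.g. -128 for 8 bits
        hi = 2 ** (bits - 1) - 1     # e.g. 127 for 8 bits
        if c_min > lo and c_max < hi:
            return bits
    return None


print(smallest_int_bits(0, 100))          # fits in int8
print(smallest_int_bits(0, 127))          # 127 is the int8 limit, so int16 is chosen
print(smallest_int_bits(-40000, 40000))   # outside int16, so int32
```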

all_df = pd.read_csv('E:/迅雷云盘/group_df.csv',index_col=0)

all_df = reduce_mem_usage(all_df)

all_df = all_df.fillna(99)

train = all_df[all_df['label'] != -1]

test = all_df[all_df['label'] == -1]

feats = [c for c in train.columns if c not in ['ID', 'label']]

# Split into training and validation sets at a 7:3 ratio

X_train,X_val,y_train,y_val= train_test_split(train[feats],train['label'],test_size=0.3,random_state=0)

# Single-model builders
def build_model_rf(X_train, y_train):
    model = RandomForestClassifier(n_estimators=100)
    model.fit(X_train, y_train)
    return model

def build_model_lgb(X_train, y_train):
    model = lgb.LGBMClassifier(num_leaves=127, learning_rate=0.1, n_estimators=200)
    model.fit(X_train, y_train)
    return model

def build_model_lgb2(X_train, y_train):
    model = lgb.LGBMClassifier(num_leaves=63, learning_rate=0.05, n_estimators=400)
    model.fit(X_train, y_train)
    return model

# Train the three single models; subA_rf, subA_lgb and subA_lgb2 are each predictions that could be submitted.
# The single models are not tuned, so they are weak classifiers and may not perform very well.
print('predict rf ...')
model_rf = build_model_rf(X_train, y_train)
val_rf = model_rf.predict(X_val)
subA_rf = model_rf.predict(test[feats])
rf_f1_score = f1_score(y_val, val_rf, average='macro')
print(rf_f1_score)

print('predict lgb...')
model_lgb = build_model_lgb(X_train, y_train)
val_lgb = model_lgb.predict(X_val)
subA_lgb = model_lgb.predict(test[feats])
lgb_f1_score = f1_score(y_val, val_lgb, average='macro')
print(lgb_f1_score)

print('predict lgb 2...')
model_lgb2 = build_model_lgb2(X_train, y_train)
val_lgb2 = model_lgb2.predict(X_val)
subA_lgb2 = model_lgb2.predict(test[feats])
lgb2_f1_score = f1_score(y_val, val_lgb2, average='macro')
print(lgb2_f1_score)

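# Hard voting: each model casts one vote per sample and the majority class wins.
# VotingClassifier.fit() trains its own clones of the three estimators.
voting_clf = VotingClassifier(estimators=[('rf', model_rf),
                                          ('lgb', model_lgb),
                                          ('lgb2', model_lgb2)], voting='hard')
voting_clf.fit(X_train, y_train)
val_voting = voting_clf.predict(X_val)
subA_voting = voting_clf.predict(test[feats])
voting_f1_score = f1_score(y_val, val_voting, average='macro')
print(voting_f1_score)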
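The code above uses `voting='hard'`. As a rough illustration of how that differs from soft voting, the sketch below applies both rules to one sample in plain Python. The probabilities are invented and the helper names are hypothetical; it is not how scikit-learn implements the classifier internally.

```python
from collections import Counter


def hard_vote(label_votes):
    """Majority class among the models' predicted labels (ties go to the smallest label)."""
    counts = Counter(label_votes)
    best = max(counts.values())
    return min(label for label, n in counts.items() if n == best)


def soft_vote(prob_rows):
    """Class with the highest average predicted probability across the models."""
    n_class = len(prob_rows[0])
    mean_probs = [sum(row[c] for row in prob_rows) / len(prob_rows) for c in range(n_class)]
    return mean_probs.index(max(mean_probs))


# Hypothetical class probabilities from three models for a single sample
probs = [
    [0.45, 0.55, 0.00],  # model 1 narrowly prefers class 1
    [0.40, 0.60, 0.00],  # model 2 narrowly prefers class 1
    [0.95, 0.05, 0.00],  # model 3 is very confident in class 0
]
labels = [row.index(max(row)) for row in probs]  # each model's hard prediction

print(hard_vote(labels))  # 1: two of the three models vote for class 1
print(soft_vote(probs))   # 0: the averaged probability favours class 0
```

Hard voting counts heads, while soft voting lets one confident model outweigh two hesitant ones, so the two rules can disagree on the same sample.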

_N_FOLDS = 5  # use 5-fold cross-validation
# scikit-learn splitter used to partition the data; shuffle=True is needed because
# recent scikit-learn versions raise a ValueError when random_state is set without shuffling
kf = KFold(n_splits=_N_FOLDS, shuffle=True, random_state=42)

def get_oof(clf, X_train, y_train, X_test):
    oof_train = np.zeros((X_train.shape[0], 1))
    oof_test_skf = np.empty((_N_FOLDS, X_test.shape[0], 1))
    for i, (train_index, test_index) in enumerate(kf.split(X_train)):  # this fold's training and validation parts
        kf_X_train = X_train.iloc[train_index,]
        kf_y_train = y_train.iloc[train_index,]
        kf_X_val = X_train.iloc[test_index,]
        clf.fit(kf_X_train, kf_y_train)
        oof_train[test_index] = clf.predict(kf_X_val).reshape(-1, 1)
        oof_test_skf[i, :] = clf.predict(X_test).reshape(-1, 1)
    oof_test = oof_test_skf.mean(axis=0)  # average the test-set predictions over the folds
    return oof_train, oof_test  # this classifier's predictions for the training set and the test set
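The idea behind `get_oof` is that every training row is predicted exactly once, by a model that never saw it, while the test set is predicted by every fold's model and averaged. In stacking, such out-of-fold predictions are typically fed to a second-level model as features. The pure-Python sketch below traces that bookkeeping on toy data. The `MeanModel` class, the contiguous fold split and all numbers are assumptions made for illustration, not the pipeline used above.

```python
def kfold_indices(n_rows, n_folds):
    """Yield (train_idx, valid_idx) pairs for contiguous, unshuffled folds."""
    fold_sizes = [n_rows // n_folds + (1 if i < n_rows % n_folds else 0) for i in range(n_folds)]
    start = 0
    for size in fold_sizes:
        valid_idx = list(range(start, start + size))
        train_idx = [i for i in range(n_rows) if i < start or i >= start + size]
        yield train_idx, valid_idx
        start += size


class MeanModel:
    """Toy regressor: always predicts the mean of the targets it was fitted on."""

    def fit(self, X, y):
        self.mean_ = sum(y) / len(y)
        return self

    def predict(self, X):
        return [self.mean_ for _ in X]


def get_oof_sketch(model, X_train, y_train, X_test, n_folds):
    oof_train = [None] * len(X_train)
    oof_test = [0.0] * len(X_test)
    for train_idx, valid_idx in kfold_indices(len(X_train), n_folds):
        model.fit([X_train[i] for i in train_idx], [y_train[i] for i in train_idx])
        fold_pred = model.predict([X_train[i] for i in valid_idx])
        for i, p in zip(valid_idx, fold_pred):
            oof_train[i] = p  # each training row is predicted exactly once
        for j, p in enumerate(model.predict(X_test)):
            oof_test[j] += p / n_folds  # test predictions are averaged over the folds
    return oof_train, oof_test


X = [[0], [1], [2], [3]]
y = [1.0, 2.0, 3.0, 4.0]
oof_train, oof_test = get_oof_sketch(MeanModel(), X, y, [[9]], n_folds=2)
print(oof_train)  # [3.5, 3.5, 1.5, 1.5]
print(oof_test)   # [2.5]
```

Averaging works naturally for regression outputs or class probabilities. Averaging hard class labels, as happens when `get_oof` is given a classifier's `predict`, is only meaningful when the labels are ordered.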


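This was my first time taking part in a team-learning competition. I learned a lot and the pace was quite fast. Much of the time I was just copying and pasting code; I'm still unfamiliar with many of the models and haven't done enough thinking of my own.

Current goal: keep up with the team's pace and stop having to make up missed check-ins.

Goal for next month: understand the models and think for myself!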
