Neuron model

The neuron model is as follows:

$\sigma(x)$ is the activation function.

BP neural network structure

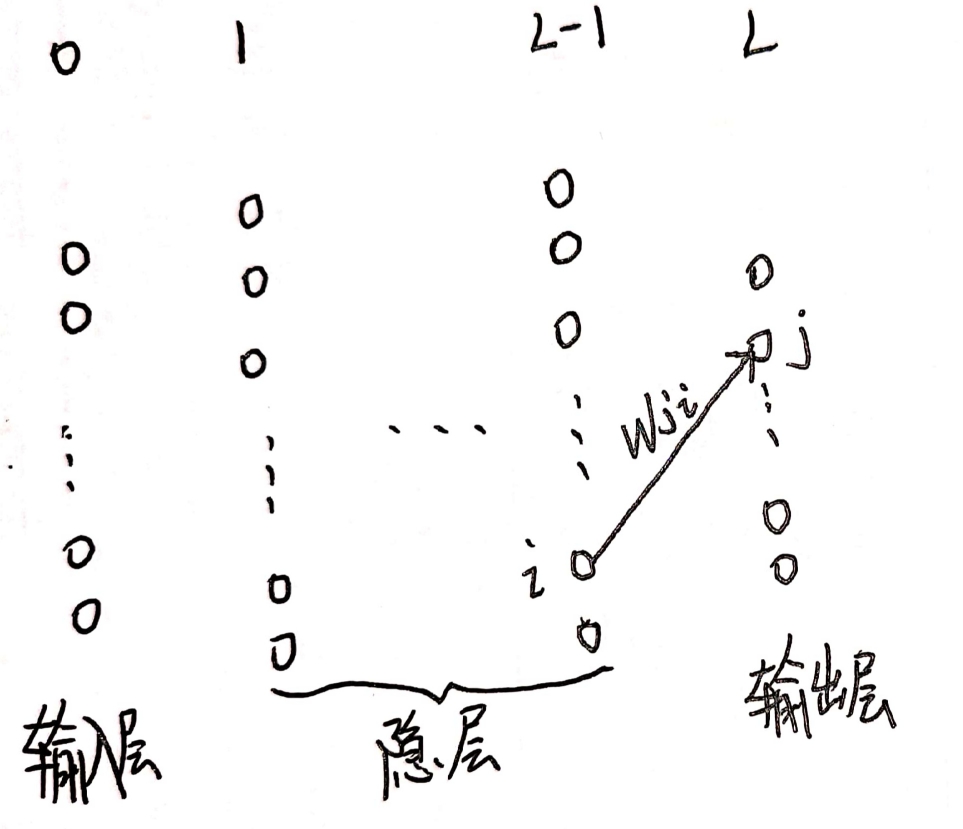

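As the figure shows, in a BP neural network every neuron in one layer is connected to every neuron in the adjacent layer, and neurons within the same layer are not connected.

The first layer is called the input layer, the last layer is called the output layer, and the layers in between are called hidden layers.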

Training set $D$

A training sample has input $x=[x_1,x_2,\ldots,x_{m^0}]$ and target output $y=[y_1,y_2,\ldots,y_{m^L}]$. The input layer is indexed as layer 0 here, because the layer outputs are defined with $y^0=x$.

$m^k$ is the number of neurons in layer $k$.

$w_{ji}^k$ is the weight of the connection from neuron $i$ in layer $k-1$ to neuron $j$ in layer $k$.

$y_j^k$ is the output of neuron $j$ in layer $k$, and also an input to layer $k+1$. For $k=0$, $y^0$ is the training sample input $x$.

$b_j^k$ is the threshold (bias) of neuron $j$ in layer $k$.

$z_j^k$ is the total input that neuron $j$ in layer $k$ receives from the outputs of the previous layer.

$\sigma(x)$ is the activation function.

The variables are related as follows:

$$
\begin{aligned}
z_j^k &= \sum_{i=1}^{m^{k-1}} w_{ji}^k y_i^{k-1} + b_j^k \\
y_j^k &= \sigma(z_j^k), \quad k=1,\ldots,L \\
y^0 &= x, \quad x\in D
\end{aligned}
$$

The threshold $b_j^k$ is added once per neuron, outside the sum. This is what makes $\partial z_j^k/\partial b_j^k=1$ in the derivation.
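The forward relations can be sketched in plain Python. This is a minimal illustration, not the author's code: the function name `forward`, the nested-list layout (`weights[k-1][j][i]` standing for $w_{ji}^k$) and the 2-2-1 example values are all assumptions made for the example, and the sigmoid is used as $\sigma$.

```python
import math


def sigmoid(z):
    """Logistic activation, used here as the sigma of the text."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, weights, biases, act=sigmoid):
    """Compute z^k and y^k for k = 1..L.

    weights[k-1][j][i] plays the role of w_{ji}^k and biases[k-1][j] of b_j^k
    (hypothetical layout). Returns (zs, ys) with ys[0] = x, so ys[k] is y^k
    and zs[k-1] is z^k.
    """
    ys = [list(x)]  # y^0 = x
    zs = []
    for W, b in zip(weights, biases):
        prev = ys[-1]
        # z_j^k = sum_i w_{ji}^k * y_i^{k-1} + b_j^k (bias added once per neuron)
        z = [sum(w_ji * y_i for w_ji, y_i in zip(row, prev)) + b_j
             for row, b_j in zip(W, b)]
        zs.append(z)
        ys.append([act(z_j) for z_j in z])  # y_j^k = sigma(z_j^k)
    return zs, ys


# Hypothetical 2-2-1 network with hand-picked parameters.
weights = [[[0.5, -0.5], [1.0, 1.0]], [[1.0, -1.0]]]
biases = [[0.0, -1.0], [0.5]]
zs, ys = forward([1.0, 1.0], weights, biases)
print(zs[0])   # weighted inputs of the hidden layer
print(ys[-1])  # network output y^L
```

Keeping both `zs` and `ys` is a deliberate choice: the backward pass needs $z_j^k$ for $\sigma'(z_j^k)$ and $y_i^{k-1}$ for the weight gradients.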

Commonly used activation functions include Sigmoid and ReLU.

Sigmoid function

ReLU function
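For reference, the two activation functions and their derivatives can be written as below. The formulas are the standard definitions, $\sigma(x)=1/(1+e^{-x})$ and $\mathrm{ReLU}(x)=\max(0,x)$, which the text names but does not spell out. The function names and the choice of derivative 0 for ReLU at $x=0$ are assumptions for this sketch.

```python
import math


def sigmoid(x):
    """Sigmoid, written in a form that avoids overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def sigmoid_prime(x):
    """Derivative of the sigmoid: sigma(x) * (1 - sigma(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def relu(x):
    """ReLU: max(0, x)."""
    return max(0.0, x)


def relu_prime(x):
    """Derivative of ReLU; the value at x == 0 is set to 0 by convention (assumption)."""
    return 1.0 if x > 0 else 0.0


print(sigmoid(0.0), sigmoid_prime(0.0))  # 0.5 0.25
print(relu(-2.0), relu(3.0))             # 0.0 3.0
```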

BP neural network algorithm

Training set $D$

Randomly initialize all weights and thresholds in the network

repeat

for all $(x,y)\in D$

Compute the output of each layer, $y^k$, $k=1,2,\ldots,L$

Compute the gradients of the weights and thresholds of each layer

Update the weights and thresholds according to the gradients

end

until the termination condition is reached

The goal of the BP algorithm is to minimize the cumulative error over the training set:

$$
\begin{aligned}
E &= \frac{1}{m}\sum_{(x,y)_l\in D} J_l \\
J_l &= \frac{1}{2}\sum_{j=1}^{m^L}(y_j^L-y_j)^2
\end{aligned}
$$

Here $m$ is the number of samples in $D$, $y_j^L$ is the network output and $y_j$ is the target output.
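A small numeric example may make the two error definitions concrete. The helper names `sample_error` and `cumulative_error` and the sample values are hypothetical; the sketch only evaluates the formulas for $J_l$ and $E$ on given network outputs.

```python
def sample_error(output, target):
    """J_l = 1/2 * sum_j (y_j^L - y_j)^2 for one sample."""
    return 0.5 * sum((o - t) ** 2 for o, t in zip(output, target))


def cumulative_error(outputs, targets):
    """E = (1/m) * sum_l J_l, with m the number of samples."""
    m = len(outputs)
    return sum(sample_error(o, t) for o, t in zip(outputs, targets)) / m


# Two made-up samples with two output neurons each.
outputs = [[0.8, 0.2], [0.4, 0.6]]
targets = [[1.0, 0.0], [0.0, 1.0]]

print(sample_error(outputs[0], targets[0]))  # about 0.04
print(sample_error(outputs[1], targets[1]))  # about 0.16
print(cumulative_error(outputs, targets))    # about 0.10
```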

Derivation of error backpropagation

The BP algorithm learns by propagating the error backward and updating the weights. For a training sample $(x,y)_l$, the network output is $y^L$ and the error function is:

$$
J_l = \frac{1}{2}\sum_{j=1}^{m^L}(y_j^L-y_j)^2
$$

The weights and thresholds of each layer are updated by gradient descent, that is, in the direction of the negative gradient of the objective function. The corrections are therefore:

$$
\begin{aligned}
\Delta w_{ji}^k &= -\eta\frac{\partial J_l}{\partial w_{ji}^k} \\
\Delta b_j^k &= -\eta\frac{\partial J_l}{\partial b_j^k}
\end{aligned}
$$

where $\eta\in(0,1)$ is called the learning rate.

The derivation follows.

For the weights of the output-layer neurons:

$$
\frac{\partial J_l}{\partial w_{ji}^L} = \frac{\partial J_l}{\partial z_j^L}\frac{\partial z_j^L}{\partial w_{ji}^L}
$$

Let

$$
d_j^L = \frac{\partial J_l}{\partial z_j^L}
$$

Then

$$
\begin{aligned}
d_j^L &= \frac{\partial J_l}{\partial y_j^L}\frac{\partial y_j^L}{\partial z_j^L} \\
&= (y_j^L-y_j)\,\sigma'(z_j^L)
\end{aligned}
$$

Also,

$$
\frac{\partial z_j^L}{\partial w_{ji}^L} = y_i^{L-1}
$$

Therefore

$$
\begin{aligned}
\frac{\partial J_l}{\partial w_{ji}^L} &= \frac{\partial J_l}{\partial z_j^L}\frac{\partial z_j^L}{\partial w_{ji}^L} \\
&= d_j^L\,y_i^{L-1} \\
&= \frac{\partial J_l}{\partial y_j^L}\frac{\partial y_j^L}{\partial z_j^L}\frac{\partial z_j^L}{\partial w_{ji}^L} \\
&= (y_j^L-y_j)\,\sigma'(z_j^L)\,y_i^{L-1}
\end{aligned}
$$

The thresholds of the output-layer neurons are handled in the same way as the weights:

$$
\frac{\partial J_l}{\partial b_j^L} = \frac{\partial J_l}{\partial z_j^L}\frac{\partial z_j^L}{\partial b_j^L}
$$

Since

$$
\frac{\partial z_j^L}{\partial b_j^L} = 1
$$

it follows that

$$
\begin{aligned}
\frac{\partial J_l}{\partial b_j^L} &= \frac{\partial J_l}{\partial z_j^L}\frac{\partial z_j^L}{\partial b_j^L} \\
&= d_j^L \\
&= (y_j^L-y_j)\,\sigma'(z_j^L)
\end{aligned}
$$
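One way to sanity-check the output-layer formulas is to compare them with a finite-difference estimate of the gradient. The sketch below does this for a single sigmoid output layer. All names (`output_layer`, `output_gradients`, `loss`) and all numeric values are hypothetical, and `y_prev` stands in for $y^{L-1}$.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def output_layer(W, b, y_prev):
    """Return (z^L, y^L) for weights W[j][i] and thresholds b[j]."""
    z = [sum(w * y for w, y in zip(row, y_prev)) + b_j for row, b_j in zip(W, b)]
    return z, [sigmoid(z_j) for z_j in z]


def loss(W, b, y_prev, target):
    """J_l = 1/2 * sum_j (y_j^L - y_j)^2."""
    _, out = output_layer(W, b, y_prev)
    return 0.5 * sum((o - t) ** 2 for o, t in zip(out, target))


def output_gradients(W, b, y_prev, target):
    """Analytic gradients from the derivation above."""
    _, out = output_layer(W, b, y_prev)
    # d_j^L = (y_j^L - y_j) * sigma'(z_j^L); for the sigmoid, sigma' = y * (1 - y)
    d = [(o - t) * o * (1.0 - o) for o, t in zip(out, target)]
    grad_W = [[d_j * y_i for y_i in y_prev] for d_j in d]  # dJ/dw_{ji}^L = d_j^L * y_i^{L-1}
    grad_b = list(d)                                        # dJ/db_j^L = d_j^L
    return grad_W, grad_b


W = [[0.3, -0.2], [0.1, 0.4]]
b = [0.05, -0.1]
y_prev = [0.6, 0.9]
target = [1.0, 0.0]
grad_W, grad_b = output_gradients(W, b, y_prev, target)

# Central finite difference with respect to W[0][1].
eps = 1e-6
W_plus = [row[:] for row in W]
W_plus[0][1] += eps
W_minus = [row[:] for row in W]
W_minus[0][1] -= eps
numeric_w = (loss(W_plus, b, y_prev, target) - loss(W_minus, b, y_prev, target)) / (2 * eps)

# Central finite difference with respect to b[1].
b_plus = b[:]
b_plus[1] += eps
b_minus = b[:]
b_minus[1] -= eps
numeric_b = (loss(W, b_plus, y_prev, target) - loss(W, b_minus, y_prev, target)) / (2 * eps)

print(grad_W[0][1], numeric_w)
print(grad_b[1], numeric_b)
```

The analytic and numeric values should agree to several decimal places. A large gap usually points to an index or sign error in the analytic formula.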

For the weights of the hidden-layer neurons, take layer $L-1$ as an example:

$$
\frac{\partial J_l}{\partial w_{ji}^{L-1}} = \frac{\partial J_l}{\partial z_j^{L-1}}\frac{\partial z_j^{L-1}}{\partial w_{ji}^{L-1}}
$$

Similarly,

$$
\begin{aligned}
d_j^{L-1} &= \frac{\partial J_l}{\partial z_j^{L-1}} \\
&= \frac{\partial J_l}{\partial y_j^{L-1}}\frac{\partial y_j^{L-1}}{\partial z_j^{L-1}} \\
&= \frac{\partial J_l}{\partial y_j^{L-1}}\,\sigma'(z_j^{L-1}) \\
\frac{\partial z_j^{L-1}}{\partial w_{ji}^{L-1}} &= y_i^{L-2}
\end{aligned}
$$

Because

$$
\begin{aligned}
J_l &= \frac{1}{2}\sum_{j=1}^{m^L}(y_j^L-y_j)^2 \\
y_j^L &= \sigma\Big(\sum_{i=1}^{m^{L-1}} w_{ji}^L y_i^{L-1} + b_j^L\Big)
\end{aligned}
$$

and the network is fully connected, $y_j^{L-1}$ feeds every neuron of the output layer, so the chain rule sums over all of them:

$$
\begin{aligned}
\frac{\partial J_l}{\partial y_j^{L-1}} &= \sum_{p=1}^{m^L}\frac{\partial J_l}{\partial y_p^L}\frac{\partial y_p^L}{\partial y_j^{L-1}} \\
&= \sum_{p=1}^{m^L}\frac{\partial J_l}{\partial y_p^L}\frac{\partial y_p^L}{\partial z_p^L}\frac{\partial z_p^L}{\partial y_j^{L-1}} \\
&= \sum_{p=1}^{m^L} d_p^L\,w_{pj}^L
\end{aligned}
$$

Therefore, writing the summation index as $p$ to keep it distinct from the index $i$ of $w_{ji}^{L-1}$:

$$
\begin{aligned}
\frac{\partial J_l}{\partial w_{ji}^{L-1}} &= \frac{\partial J_l}{\partial z_j^{L-1}}\frac{\partial z_j^{L-1}}{\partial w_{ji}^{L-1}} \\
&= \frac{\partial J_l}{\partial y_j^{L-1}}\frac{\partial y_j^{L-1}}{\partial z_j^{L-1}}\frac{\partial z_j^{L-1}}{\partial w_{ji}^{L-1}} \\
&= d_j^{L-1}\,y_i^{L-2} \\
&= \frac{\partial J_l}{\partial y_j^{L-1}}\,\sigma'(z_j^{L-1})\,y_i^{L-2} \\
&= \sum_{p=1}^{m^L}\frac{\partial J_l}{\partial y_p^L}\frac{\partial y_p^L}{\partial z_p^L}\frac{\partial z_p^L}{\partial y_j^{L-1}}\,\sigma'(z_j^{L-1})\,y_i^{L-2} \\
&= \sum_{p=1}^{m^L} d_p^L\,w_{pj}^L\,\sigma'(z_j^{L-1})\,y_i^{L-2}
\end{aligned}
$$

The thresholds of the hidden-layer neurons are handled in the same way as the weights.

Taking layer $L-1$ as an example:

$$
\frac{\partial J_l}{\partial b_j^{L-1}} = \frac{\partial J_l}{\partial z_j^{L-1}}\frac{\partial z_j^{L-1}}{\partial b_j^{L-1}}
$$

$$
\frac{\partial z_j^{L-1}}{\partial b_j^{L-1}} = 1
$$

Therefore

$$
\begin{aligned}
\frac{\partial J_l}{\partial b_j^{L-1}} &= \frac{\partial J_l}{\partial z_j^{L-1}}\frac{\partial z_j^{L-1}}{\partial b_j^{L-1}} \\
&= d_j^{L-1} \\
&= \sum_{p=1}^{m^L} d_p^L\,w_{pj}^L\,\sigma'(z_j^{L-1})
\end{aligned}
$$

Generalizing to the weights of hidden layer $k$, with $p$ as the summation index over the neurons of layer $k+1$:

$$
\begin{aligned}
\frac{\partial J_l}{\partial w_{ji}^k} &= \frac{\partial J_l}{\partial z_j^k}\frac{\partial z_j^k}{\partial w_{ji}^k} \\
&= \frac{\partial J_l}{\partial y_j^k}\frac{\partial y_j^k}{\partial z_j^k}\frac{\partial z_j^k}{\partial w_{ji}^k} \\
&= d_j^k\,y_i^{k-1} \\
&= \frac{\partial J_l}{\partial y_j^k}\,\sigma'(z_j^k)\,y_i^{k-1} \\
&= \sum_{p=1}^{m^{k+1}}\frac{\partial J_l}{\partial y_p^{k+1}}\frac{\partial y_p^{k+1}}{\partial z_p^{k+1}}\frac{\partial z_p^{k+1}}{\partial y_j^k}\,\sigma'(z_j^k)\,y_i^{k-1} \\
&= \sum_{p=1}^{m^{k+1}} d_p^{k+1}\,w_{pj}^{k+1}\,\sigma'(z_j^k)\,y_i^{k-1}
\end{aligned}
$$

For the thresholds of hidden layer $k$:

$$
\begin{aligned}
\frac{\partial J_l}{\partial b_j^k} &= \frac{\partial J_l}{\partial z_j^k}\frac{\partial z_j^k}{\partial b_j^k} \\
&= d_j^k \\
&= \sum_{p=1}^{m^{k+1}} d_p^{k+1}\,w_{pj}^{k+1}\,\sigma'(z_j^k)
\end{aligned}
$$
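The recursion for $d_j^k$ translates into a backward loop over the layers. The following sketch assumes sigmoid activations and a nested-list layout in which `weights[k][j][i]` (0-based `k`) holds the weight into neuron `j` of the layer after `ys[k]`. The names `forward`, `deltas` and `loss` and all numeric values are hypothetical. One hidden-layer gradient is checked against a finite difference.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)


def forward(x, weights, biases):
    """Return (zs, ys) with ys[0] = x; zs[k] and ys[k + 1] belong to weights[k]."""
    ys, zs = [list(x)], []
    for W, b in zip(weights, biases):
        z = [sum(w * y for w, y in zip(row, ys[-1])) + b_j for row, b_j in zip(W, b)]
        zs.append(z)
        ys.append([sigmoid(v) for v in z])
    return zs, ys


def deltas(weights, zs, ys, target):
    """d[k][j] = dJ/dz_j for the layer produced by weights[k], computed back to front."""
    n_layers = len(weights)
    d = [None] * n_layers
    # Output layer: d_j^L = (y_j^L - y_j) * sigma'(z_j^L)
    d[-1] = [(o - t) * sigmoid_prime(z) for o, t, z in zip(ys[-1], target, zs[-1])]
    # Hidden layers: d_j^k = sum_p d_p^{k+1} * w_{pj}^{k+1} * sigma'(z_j^k)
    for k in range(n_layers - 2, -1, -1):
        W_next = weights[k + 1]
        d[k] = [sum(d[k + 1][p] * W_next[p][j] for p in range(len(W_next)))
                * sigmoid_prime(zs[k][j]) for j in range(len(zs[k]))]
    return d


def loss(x, weights, biases, target):
    _, ys = forward(x, weights, biases)
    return 0.5 * sum((o - t) ** 2 for o, t in zip(ys[-1], target))


# Hypothetical 2-2-1 network and one sample.
weights = [[[0.2, -0.4], [0.7, 0.1]], [[0.5, -0.3]]]
biases = [[0.1, -0.2], [0.05]]
x, target = [1.0, 0.5], [1.0]

zs, ys = forward(x, weights, biases)
d = deltas(weights, zs, ys, target)
analytic = d[0][1] * ys[0][0]  # gradient for hidden weight weights[0][1][0]

# Central finite difference on the same weight.
eps = 1e-6
weights[0][1][0] += eps
plus = loss(x, weights, biases, target)
weights[0][1][0] -= 2 * eps
minus = loss(x, weights, biases, target)
weights[0][1][0] += eps  # restore the original value
numeric = (plus - minus) / (2 * eps)

print(analytic, numeric)
```

Because each $d^k$ depends only on $d^{k+1}$, one backward sweep yields every gradient. That reuse is the reason the quantities $d_j^k$ are introduced.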

Using the gradients derived above, the weights and thresholds are updated as:

$$
\begin{aligned}
w_{ji}^k &\leftarrow w_{ji}^k + \Delta w_{ji}^k \\
b_j^k &\leftarrow b_j^k + \Delta b_j^k
\end{aligned}
$$

Summary of the BP algorithm

Training set $D$

A training sample has input $x=[x_1,x_2,\ldots,x_{m^0}]$ and target output $y=[y_1,y_2,\ldots,y_{m^L}]$.

Output of each layer:

$$
\begin{aligned}
z_j^k &= \sum_{i=1}^{m^{k-1}} w_{ji}^k y_i^{k-1} + b_j^k \\
y_j^k &= \sigma(z_j^k), \quad k=1,\ldots,L \\
y^0 &= x, \quad x\in D
\end{aligned}
$$

Minimize the cumulative error:

$$
\begin{aligned}
E &= \frac{1}{m}\sum_{(x,y)_l\in D} J_l \\
J_l &= \frac{1}{2}\sum_{j=1}^{m^L}(y_j^L-y_j)^2
\end{aligned}
$$

Weight and threshold updates:

$$
\begin{aligned}
\Delta w_{ji}^k &= -\eta\,d_j^k\,y_i^{k-1} \\
\Delta b_j^k &= -\eta\,d_j^k \\
d_j^L &= (y_j^L-y_j)\,\sigma'(z_j^L) \\
d_j^k &= \sum_{p=1}^{m^{k+1}} d_p^{k+1}\,w_{pj}^{k+1}\,\sigma'(z_j^k), \quad k\in\{1,\ldots,L-1\}
\end{aligned}
$$
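Putting the summary together, a complete training loop might look like the sketch below. It is an illustration under several assumptions that are not in the text: sigmoid activations, a 2-2-1 network, the logical OR function as toy training set, per-sample updates, a fixed number of epochs as the termination condition, and the helper names `init_network`, `forward`, `train_step` and `cumulative_error`.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_network(sizes, rng):
    """Randomly initialize weights[k][j][i] and biases[k][j] in [-1, 1]."""
    weights = [[[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
               for n_in, n_out in zip(sizes[:-1], sizes[1:])]
    biases = [[rng.uniform(-1.0, 1.0) for _ in range(n_out)] for n_out in sizes[1:]]
    return weights, biases


def forward(x, weights, biases):
    """Return the list of layer outputs ys, with ys[0] = x."""
    ys = [list(x)]
    for W, b in zip(weights, biases):
        ys.append([sigmoid(sum(w * y for w, y in zip(row, ys[-1])) + b_j)
                   for row, b_j in zip(W, b)])
    return ys


def train_step(x, target, weights, biases, eta):
    """One backpropagation update for a single sample (modifies the lists in place)."""
    ys = forward(x, weights, biases)
    # d_j^L = (y_j^L - y_j) * sigma'(z_j^L), with sigma' = y * (1 - y) for the sigmoid
    d = [(o - t) * o * (1.0 - o) for o, t in zip(ys[-1], target)]
    for k in range(len(weights) - 1, -1, -1):
        y_prev = ys[k]
        if k > 0:
            # Delta of the layer below, computed with the weights before they change
            d_prev = [sum(d[p] * weights[k][p][j] for p in range(len(d)))
                      * y_prev[j] * (1.0 - y_prev[j]) for j in range(len(y_prev))]
        for j, d_j in enumerate(d):
            for i, y_i in enumerate(y_prev):
                weights[k][j][i] -= eta * d_j * y_i  # delta w = -eta * d_j * y_i
            biases[k][j] -= eta * d_j                # delta b = -eta * d_j
        if k > 0:
            d = d_prev


def cumulative_error(data, weights, biases):
    """E = (1/m) * sum_l J_l over the training set."""
    total = 0.0
    for x, y in data:
        out = forward(x, weights, biases)[-1]
        total += 0.5 * sum((o - t) ** 2 for o, t in zip(out, y))
    return total / len(data)


rng = random.Random(0)  # fixed seed so the run is repeatable
data = [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]),
        ([1.0, 0.0], [1.0]), ([1.0, 1.0], [1.0])]  # logical OR
weights, biases = init_network([2, 2, 1], rng)

initial_error = cumulative_error(data, weights, biases)
for epoch in range(2000):  # termination condition: fixed epoch count (assumption)
    for x, y in data:
        train_step(x, y, weights, biases, eta=0.5)
final_error = cumulative_error(data, weights, biases)

print(initial_error, final_error)
```

The sketch computes the delta of the lower layer before overwriting `weights[k]`, so that every gradient in a step is taken at the same parameter values, as the derivation assumes.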
