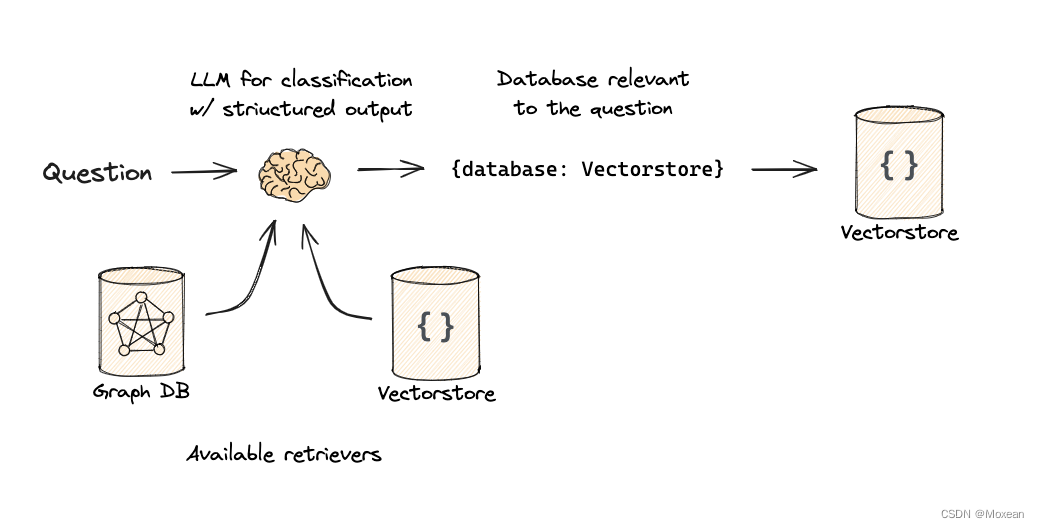

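Routing in RAG and LLM applications acts as the bridge between the query and generation: it sends each query to the most suitable data source or prompt, which helps keep retrieval and generation accurate and relevant.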

Preparation

The step we can never skip 😕: setting up the environment.

```python
import os

# LangSmith tracing
os.environ['LANGCHAIN_TRACING_V2'] = 'true'
os.environ['LANGCHAIN_ENDPOINT'] = 'https://api.smith.langchain.com'
os.environ['LANGCHAIN_API_KEY'] = '<your LangChain API key>'

# OpenAI access
os.environ['OPENAI_API_BASE'] = '<your OpenAI API base URL>'
os.environ['OPENAI_API_KEY'] = '<your OpenAI API key>'
```
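Since the placeholders above have to be replaced by hand, a small fail-fast check can catch a forgotten key before the first API call. This is a minimal sketch and not part of the original setup: `missing_env_vars` is a hypothetical helper, and treating any value that still starts with `<` as unset is an assumption based on the placeholder style used here.

```python
import os

REQUIRED_VARS = ["LANGCHAIN_API_KEY", "OPENAI_API_KEY"]


def missing_env_vars(required, env=None):
    """Return the names in `required` that are unset, empty, or still a '<...>' placeholder."""
    env = os.environ if env is None else env
    missing = []
    for name in required:
        value = env.get(name, "")
        # Assumption: a value that still looks like '<...>' was never filled in.
        if not value or value.startswith("<"):
            missing.append(name)
    return missing
```

Calling `missing_env_vars(REQUIRED_VARS)` right after the setup cell returns an empty list once every key has been filled in.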

Logical and Semantic routing

Logical routing

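Workflow:

- Use an LLM to route the user's query to the most relevant data source. In this example, the question is routed according to the programming language it refers to.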

```python
from typing import Literal

from langchain_core.prompts import ChatPromptTemplate
from langchain_core.pydantic_v1 import BaseModel, Field
from langchain_openai import ChatOpenAI


# Data model: the schema the LLM must fill in
class RouteQuery(BaseModel):
    """Route a user query to the most relevant datasource."""

    datasource: Literal["python_docs", "js_docs", "golang_docs"] = Field(
        ...,
        description="Given a user question choose which datasource would be most relevant for answering their question",
    )


# LLM constrained to return a RouteQuery object
llm = ChatOpenAI(model="gpt-3.5-turbo-0125", temperature=0)
structured_llm = llm.with_structured_output(RouteQuery)

# Prompt
system = """You are an expert at routing a user question to the appropriate data source.

Based on the programming language the question is referring to, route it to the relevant data source."""

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        ("human", "{question}"),
    ]
)

# Router: prompt followed by the structured-output LLM
router = prompt | structured_llm
```

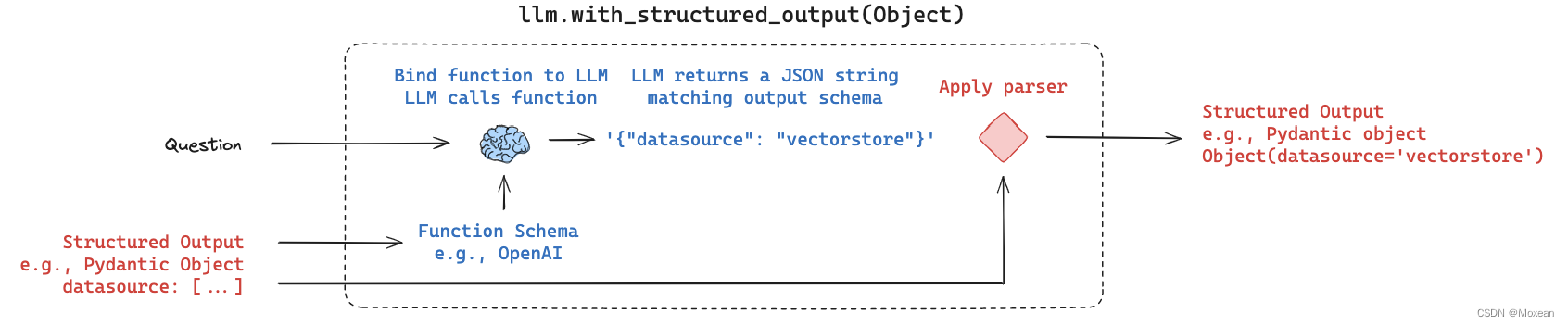

Note: we use function calling to produce structured output.
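To make the note concrete: with function calling, the model returns the arguments of a "function" as JSON, and those arguments are then checked against the schema, here the `Literal` on `datasource`. The sketch below imitates only that validation step with the standard library. `Route`, `parse_route`, and the sample payload are hypothetical; in the real pipeline the parsing happens inside `with_structured_output`, whose internals are not shown here.

```python
import json
from dataclasses import dataclass
from typing import Literal, get_args

Datasource = Literal["python_docs", "js_docs", "golang_docs"]


@dataclass
class Route:
    """Stand-in for RouteQuery: a single, constrained field."""
    datasource: str


def parse_route(raw_arguments: str) -> Route:
    """Parse the JSON arguments of a (hypothetical) function call and validate them."""
    data = json.loads(raw_arguments)
    value = data.get("datasource")
    allowed = get_args(Datasource)
    if value not in allowed:
        raise ValueError(f"datasource must be one of {allowed}, got {value!r}")
    return Route(datasource=value)


# Hypothetical payload, shaped like the arguments a model might return.
print(parse_route('{"datasource": "js_docs"}'))
# Route(datasource='js_docs')
```

Because the field only accepts the three listed values, downstream code can branch on it without handling free-form text.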

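- e.g. here we ask "Why doesn't the following code work: (a JavaScript snippet)" and let the LLM, via function calling, decide which datasource the question belongs to.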

```python
question = """Why doesn't the following code work:

import { ChatPromptTemplate } from "@langchain/core/prompts";

const chatPrompt = ChatPromptTemplate.fromMessages([
  ["human", "speak in {language}"],
]);

const formattedChatPrompt = await chatPrompt.invoke({
  input_language: "french"
});
"""
```

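```python
result = router.invoke({"question": question})
result
```

Output reported for this step: RouteQuery(datasource="python_docs"). Note that the snippet in the question is JavaScript, so `js_docs` is the route one would expect here; the reported value may come from a run with a Python version of the question.

```python
result.datasource
```

This returns the datasource name as a plain string ('python_docs' in the reported run). Once we have this result, defining a branch on result.datasource is straightforward.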

- full_chain

```python
from langchain_core.runnables import RunnableLambda


def choose_route(result):
    """Map the router's structured output to the chain for that datasource."""
    if "python_docs" in result.datasource.lower():
        ### Logic here
        return "chain for python_docs"
    elif "js_docs" in result.datasource.lower():
        ### Logic here
        return "chain for js_docs"
    else:
        ### Logic here
        return "chain for golang_docs"


full_chain = router | RunnableLambda(choose_route)

full_chain.invoke({"question": question})
```
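As the number of data sources grows, the if/elif ladder in `choose_route` can be replaced by a lookup table. The following is a sketch under assumptions, not the author's code: `FakeResult` stands in for the `RouteQuery` object, and the three handler functions are hypothetical placeholders for real retrieval chains.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class FakeResult:
    """Stand-in for the RouteQuery object returned by the router."""
    datasource: str


# Hypothetical handlers; in a real application each would be a retrieval chain.
def python_chain(question: str) -> str:
    return f"[python_docs] {question}"


def js_chain(question: str) -> str:
    return f"[js_docs] {question}"


def golang_chain(question: str) -> str:
    return f"[golang_docs] {question}"


ROUTES: Dict[str, Callable[[str], str]] = {
    "python_docs": python_chain,
    "js_docs": js_chain,
    "golang_docs": golang_chain,
}


def dispatch(result: FakeResult, question: str) -> str:
    """Look up the handler for the routed datasource and call it."""
    key = result.datasource.lower()
    if key not in ROUTES:
        # Fail loudly instead of silently falling back to a default branch.
        raise KeyError(f"no route registered for {key!r}")
    return ROUTES[key](question)


print(dispatch(FakeResult("js_docs"), "Why does invoke() fail?"))
# [js_docs] Why does invoke() fail?
```

Compared with a trailing `else`, the explicit `KeyError` makes an unexpected datasource visible instead of quietly sending it to the Go branch.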

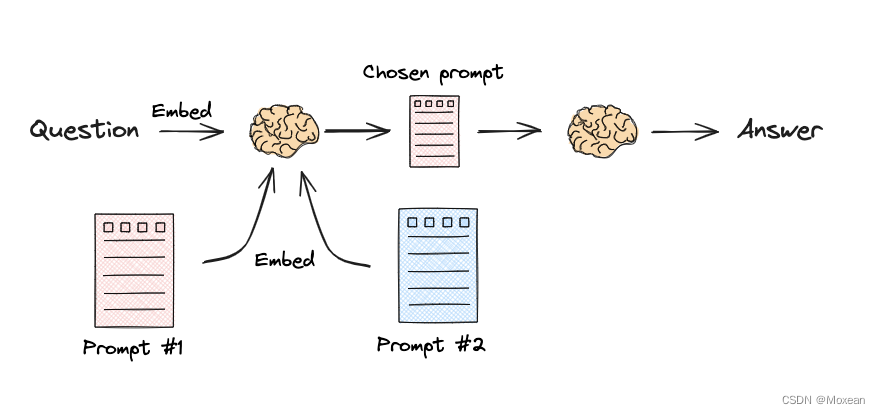

Semantic routing

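Flow:

Embed the question and the candidate prompts, use routing to pick the prompt most similar to the question, then answer the question with it.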

```python
from langchain.utils.math import cosine_similarity
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import PromptTemplate
from langchain_core.runnables import RunnableLambda, RunnablePassthrough
from langchain_openai import ChatOpenAI, OpenAIEmbeddings

# Two prompts
physics_template = """You are a very smart physics professor. \
You are great at answering questions about physics in a concise and easy to understand manner. \
When you don't know the answer to a question you admit that you don't know.

Here is a question:
{query}"""

math_template = """You are a very good mathematician. You are great at answering math questions. \
You are so good because you are able to break down hard problems into their component parts, \
answer the component parts, and then put them together to answer the broader question.

Here is a question:
{query}"""

# Embed prompts
embeddings = OpenAIEmbeddings()
prompt_templates = [physics_template, math_template]
prompt_embeddings = embeddings.embed_documents(prompt_templates)


# Route question to prompt
def prompt_router(input):
    # Embed question
    query_embedding = embeddings.embed_query(input["query"])
    # Compute similarity between the question and each prompt
    similarity = cosine_similarity([query_embedding], prompt_embeddings)[0]
    most_similar = prompt_templates[similarity.argmax()]
    # Chosen prompt
    print("Using MATH" if most_similar == math_template else "Using PHYSICS")
    return PromptTemplate.from_template(most_similar)


chain = (
    {"query": RunnablePassthrough()}
    | RunnableLambda(prompt_router)
    | ChatOpenAI()
    | StrOutputParser()
)

print(chain.invoke("What's a black hole"))
```

Output:

Using PHYSICS

A black hole is a region in space where the gravitational force is so strong that nothing, not even light, can escape its pull. It is formed when a massive star collapses in on itself, creating a singularity in space-time. The size of a black hole is determined by its mass, and the event horizon is the point of no return where anything that crosses it is inevitably pulled into the black hole. Black holes are fascinating objects in the universe and are still being studied by scientists today.
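The routing step itself is just an argmax over cosine similarities, so it can be tried without any API call. The sketch below is an illustration only: it swaps the OpenAI embeddings for a toy bag-of-words vector (`toy_embed`, a hypothetical helper), so its choices reflect word overlap rather than meaning, and the short prompt descriptions are made up for the example and are much shorter than the templates above.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A real system would use an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Made-up prompt descriptions used only for this illustration.
PROMPTS = {
    "physics": "You answer questions about physics such as gravity, light, energy and black holes.",
    "math": "You answer questions about math such as algebra, calculus, equations and numbers.",
}
PROMPT_VECTORS = {name: toy_embed(text) for name, text in PROMPTS.items()}


def route(query: str) -> str:
    """Return the name of the prompt most similar to the query."""
    q = toy_embed(query)
    return max(PROMPT_VECTORS, key=lambda name: cosine(q, PROMPT_VECTORS[name]))


print(route("What is a black hole?"))                # physics
print(route("How do I solve quadratic equations?"))  # math
```

With real embeddings the structure stays the same: only `toy_embed` is replaced by a call to an embedding model, which is what lets the router match on meaning instead of shared words.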
