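This builds on my earlier post, "Faster-whisper + silero-vad real-time speech transcription". In practice, real-time transcription with faster-whisper was slower than I expected, and during silence it kept producing spurious advertisement text. I therefore switched to Paraformer for recognition: it handles Chinese better and supports hotwords for more accurate keyword recognition. Model usage is documented on the ModelScope (魔搭) community site, and the model is downloaded automatically.

silero-vad also needs to be downloaded.

The approach: after receiving the audio data, use Paraformer to get the transcript and character-level timestamps, detect the keywords in the transcript, and finally mute them with AudioSegment.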
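To make the final muting step concrete, here is a minimal sketch that zeroes a time span in raw PCM bytes. It deliberately uses plain byte slicing instead of AudioSegment so that it runs without extra dependencies; `mute_pcm` and the dummy audio are hypothetical, and the defaults assume 16 kHz, 16-bit mono audio with timestamps in milliseconds.

```python
def mute_pcm(pcm, intervals_ms, sample_rate=16000, sample_width=2):
    """Return a copy of mono PCM audio with each (start_ms, end_ms) span zeroed."""
    out = bytearray(pcm)
    for start_ms, end_ms in intervals_ms:
        # Convert milliseconds to a frame index first so offsets stay sample-aligned.
        start = max(0, start_ms * sample_rate // 1000 * sample_width)
        end = min(len(out), end_ms * sample_rate // 1000 * sample_width)
        if end > start:
            out[start:end] = bytes(end - start)  # zero bytes are silence in signed PCM
    return bytes(out)


# One second of non-silent dummy audio (every byte is 0x01).
pcm = b"\x01" * 32000
muted = mute_pcm(pcm, [(250, 500)])
print(len(muted) == len(pcm))
```

The length of the audio is unchanged, which matters when the processed chunk is sent back in place of the original.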

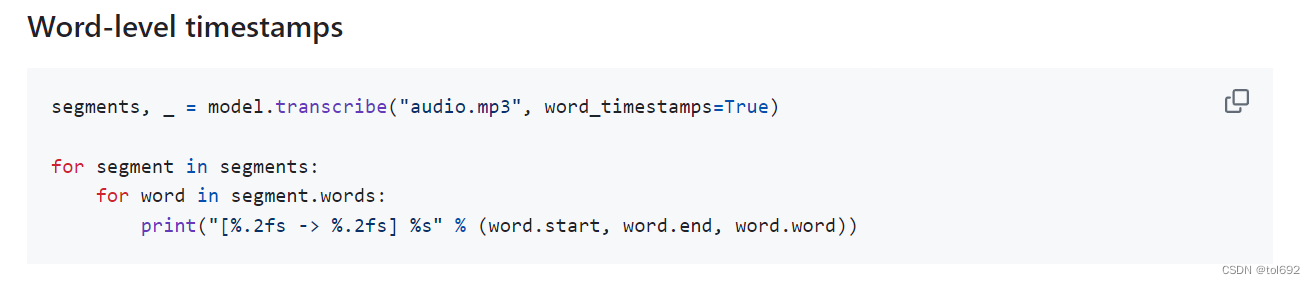

faster-whisper can also provide timestamps, in seconds. The approach stays the same; obtaining the timestamps is just slower.
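If faster-whisper is used, its word-level results carry `start` and `end` in seconds rather than per-character millisecond pairs. The sketch below shows one possible way to adapt such output. It assumes that a word's duration can be spread evenly over its characters, which is only an approximation and not something the library provides. `Word` is a stand-in for the library's word objects, and `to_char_timestamps` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class Word:
    """Stand-in for a word-level result: times in seconds plus the text."""
    start: float
    end: float
    word: str


def to_char_timestamps(words):
    """Split each word into characters with evenly divided [start_ms, end_ms] pairs."""
    chars, stamps = [], []
    for w in words:
        text = w.word.strip()
        if not text:
            continue
        start_ms = round(w.start * 1000)
        end_ms = round(w.end * 1000)
        step = (end_ms - start_ms) / len(text)
        for i, ch in enumerate(text):
            chars.append(ch)
            stamps.append([round(start_ms + i * step), round(start_ms + (i + 1) * step)])
    return chars, stamps


chars, stamps = to_char_timestamps([Word(0.0, 0.4, "今天"), Word(0.4, 0.9, " 高考")])
print(chars, stamps)
```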

Keyword detection uses a sliding window.
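Before the full function, a minimal sketch of the idea on made-up data: a window as long as the keyword slides over the character list, and on a match the span runs from the first character's start time to the last character's end time. `find_spans` and the sample timestamps are hypothetical; the timestamps are assumed to be `[start_ms, end_ms]` pairs, one per character.

```python
def find_spans(chars, timestamps, keyword):
    """Return (start_ms, end_ms) for every occurrence of keyword in chars."""
    target = list(keyword)
    size = len(target)
    if size == 0:
        return []
    spans = []
    for start in range(len(chars) - size + 1):
        if chars[start:start + size] == target:
            spans.append((timestamps[start][0], timestamps[start + size - 1][1]))
    return spans


chars = list("今天高考结束")
timestamps = [[0, 200], [200, 400], [400, 650], [650, 900], [900, 1100], [1100, 1300]]
print(find_spans(chars, timestamps, "高考"))  # [(400, 900)]
```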

    import re

    # match_keyword is a module-level dict: userId -> list of matched keywords.

    def findKeywords(userId, keywords, res):
        split_time = []
        if len(res) > 0:
            words = {"word": [], "timestamp": []}
            # Strip punctuation; this assumes one timestamp entry per remaining character.
            chars = re.sub(r'[^\w\s]', '', res[0]["text"])
            print(chars)
            for char in chars:
                words["word"].append(char)
            words["timestamp"] = res[0]["timestamp"]
            print(words)
            for keyword in keywords:
                keyword_words = list(keyword)
                window_size = len(keyword_words)
                window_start = 0
                # Slide a window of the keyword's length over the transcript.
                while window_start + window_size <= len(words["word"]):
                    window_end = window_start + window_size
                    window_words = words["word"][window_start:window_end]
                    if window_words == keyword_words:
                        # print("Keyword detected: " + keyword)
                        match_keyword[userId].append(keyword)
                        # Start of the first character to end of the last character.
                        start_time = words["timestamp"][window_start][0]
                        end_time = words["timestamp"][window_end - 1][1]
                        split_time.append((start_time, end_time))
                    window_start += 1
        print(split_time)
        return split_time

Audio data is received over a WebSocket, and keyword updates are pushed through a Redis subscription:

    import ast
    import asyncio
    import base64
    import json
    import time

    import numpy as np
    import redis
    import torch
    import websockets

    # Assumed to be defined elsewhere in the script:
    #   model      - the loaded silero-vad model
    #   modelScope - the loaded Paraformer model
    #   int2float  - helper converting int16 samples to float32
    # Per-user state, keyed by userId.
    subscribe_user = {}
    keywords = {}
    match_keyword = {}

    async def start(websocket, path):
        # The (websocket, path) handler signature matches older websockets releases.
        userId = path.lstrip('/')
        try:
            count = 0
            audio = None
            chunk_size = 16000
            subscribe_user[userId] = True
            keywords[userId] = ["高考", "新闻", "关心", "产品", "肠道"]
            match_keyword[userId] = []
            # Placeholder connection settings, as in the original post.
            rc = redis.StrictRedis(host='xxx', port='xxx', db=xxx, password='xxx')
            ps = rc.pubsub()
            ps.subscribe("LIVE_PUSH:" + userId)  # subscribe to keyword pushes
            print("Subscription started")
            async for message in websocket:
                start_time = time.time()
                if message == '"close"':
                    await websocket.close()
                    break
                else:
                    audio_chunk = base64.b64decode(message)
                    audio_float32 = int2float(np.frombuffer(audio_chunk, np.int16))
                    # silero-vad speech probability for this chunk
                    new_confidence = model(torch.from_numpy(audio_float32), 16000).item()
                    if new_confidence > 0.5:
                        # print("recording")
                        if audio is None:
                            audio = audio_chunk
                        else:
                            audio = audio + audio_chunk
                        count = count + 1
                    if count > 0:
                        # Non-blocking check for a pushed keyword list; a separate
                        # name avoids shadowing the WebSocket message.
                        pushed = ps.get_message()
                        if pushed and pushed['type'] == 'message':
                            data = pushed['data'].decode('utf-8')
                            # print("Current keywords: " + data)
                            keywords[userId] = ast.literal_eval(data)
                        hot_words = " ".join(keywords[userId])
                        res = modelScope.generate(input=audio,
                                                  batch_size_s=300,
                                                  hotword=hot_words)
                        # print(res)
                        data = split_keyword(findKeywords(userId, keywords[userId], res), audio)
                        # chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
                        # for chunk in chunks:
                        base64String = base64.b64encode(data).decode()
                        send_msg = {'content': base64String, 'type': 'base64'}
                        await websocket.send(json.dumps(send_msg))
                        end_time = time.time()
                        print(end_time - start_time)
                        count = 0
                        audio = None
                    else:
                        # No speech detected: return the chunk unchanged.
                        send_msg = {'content': message, 'type': 'base64'}
                        await websocket.send(json.dumps(send_msg))
                    if len(match_keyword[userId]) > 0:
                        # Report one matched keyword per incoming message.
                        send_msg = {'content': match_keyword[userId].pop(0), 'type': 'keyword'}
                        await websocket.send(json.dumps(send_msg))
        except websockets.exceptions.ConnectionClosed:
            # Also covers ConnectionClosedError, which is a subclass.
            print("Client disconnected")
        finally:
            subscribe_user[userId] = False
            print(userId + " cache cleared")

    async def main():
        async with websockets.serve(start, '0.0.0.0', 8765):
            await asyncio.Future()  # run forever

    if __name__ == "__main__":
        asyncio.run(main())

The detected keywords are then muted:

    from io import BytesIO

    from pydub import AudioSegment

    def split_keyword(split_time, audio):
        # Raw 16 kHz, 16-bit, mono PCM bytes.
        original_audio = AudioSegment.from_file(BytesIO(audio), format='pcm', frame_rate=16000,
                                                sample_width=2, channels=1)
        # original_audio = AudioSegment.from_file("output.wav", format="wav")
        for start_time, end_time in split_time:
            print(start_time, end_time)
            # Timestamps and AudioSegment slices are both in milliseconds.
            segment_to_replace = original_audio[start_time:end_time]
            replacement_audio = AudioSegment.silent(duration=len(segment_to_replace))
            original_audio = original_audio[:start_time] + replacement_audio + original_audio[end_time:]
        return original_audio.raw_data

Some notes

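I originally wanted to run the subscription in its own thread, but as soon as the subscription was active, processing started to stutter.

The processed audio has the same length as the audio that was sent. If real-time behavior is not required, recording can continue until the speaker finishes.

Keywords are matched by splitting both the keyword and the transcribed text into single characters.

On muting: if the incoming audio segments are too short, the trailing sound of a keyword may not be removed.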
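One possible mitigation for the trailing-sound issue, which is not part of the code above, is to widen each muted span by a small margin before muting. The sketch below assumes millisecond spans; `pad_intervals` and the 50 ms margin are hypothetical. It only helps when the tail falls inside the current chunk, not when it spills into the next one.

```python
def pad_intervals(intervals, pad_ms, total_ms):
    """Widen each (start, end) span by pad_ms, clamp to the clip, and merge overlaps."""
    widened = sorted(
        (max(0, start - pad_ms), min(total_ms, end + pad_ms)) for start, end in intervals
    )
    merged = []
    for start, end in widened:
        if merged and start <= merged[-1][1]:
            # Overlaps or touches the previous span: extend it.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


print(pad_intervals([(400, 900), (950, 1200)], pad_ms=50, total_ms=1250))  # [(350, 1250)]
```

The padded spans could then be passed to `split_keyword` in place of the raw ones.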
