Table of Contents

- Packages

- 1 - Data synthesis: Creating a Speech Dataset

- 2 - The Model

- 3 - Making Predictions

- 4 - Try Your Own Example! (OPTIONAL/UNGRADED)

Packages

In [1]:

import numpy as np from pydub import AudioSegment import random import sys import io import os import glob import IPython from td_utils import * %matplotlib inline

1 - Data synthesis: Creating a Speech Dataset

Let's start by building a dataset for your trigger word detection algorithm.

- A speech dataset should ideally be as close as possible to the application you will want to run it on.

- In this case, you'd like to detect the word "activate" in working environments (library, home, offices, open-spaces ...).

- Therefore, you need to create recordings with a mix of positive words ("activate") and negative words (random words other than activate) on different background sounds. Let's see how you can create such a dataset.

1.1 - Listening to the Data

- One of your friends is helping you out on this project, and they've gone to libraries, cafes, restaurants, homes and offices all around the region to record background noises, as well as snippets of audio of people saying positive/negative words. This dataset includes people speaking in a variety of accents.

- In the raw_data directory, you can find a subset of the raw audio files of the positive words, negative words, and background noise. You will use these audio files to synthesize a dataset to train the model.

- The "activate" directory contains positive examples of people saying the word "activate".

- The "negatives" directory contains negative examples of people saying random words other than "activate".

- There is one word per audio recording.

- The "backgrounds" directory contains 10 second clips of background noise in different environments.

Run the cells below to listen to some examples.

In [2]:

IPython.display.Audio("./raw_data/activates/1.wav")

Out[2]:

In [3]:

IPython.display.Audio("./raw_data/negatives/4.wav")

Out[3]:

In [4]:

IPython.display.Audio("./raw_data/backgrounds/1.wav")

Out[4]:

You will use these three types of recordings (positives/negatives/backgrounds) to create a labeled dataset.

1.2 - From Audio Recordings to Spectrograms

What really is an audio recording?

- A microphone records little variations in air pressure over time, and it is these little variations in air pressure that your ear also perceives as sound.

- You can think of an audio recording as a long list of numbers measuring the little air pressure changes detected by the microphone.

- We will use audio sampled at 44100 Hz (or 44100 Hertz).

- This means the microphone gives us 44,100 numbers per second.

- Thus, a 10 second audio clip is represented by 441,000 numbers (= 10×44,10010×44,100).

Spectrogram

- It is quite difficult to figure out from this "raw" representation of audio whether the word "activate" was said.

- In order to help your sequence model more easily learn to detect trigger words, we will compute a spectrogram of the audio.

- The spectrogram tells us how much different frequencies are present in an audio clip at any moment in time.

- If you've ever taken an advanced class on signal processing or on Fourier transforms:

- A spectrogram is computed by sliding a window over the raw audio signal, and calculating the most active frequencies in each window using a Fourier transform.

- If you don't understand the previous sentence, don't worry about it.

Let's look at an example.

In [5]:

IPython.display.Audio("audio_examples/example_train.wav")

Out[5]:

In [6]:

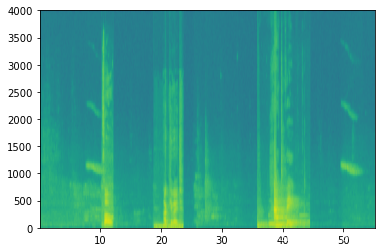

x = graph_spectrogram("audio_examples/example_train.wav")

The graph above represents how active each frequency is (y axis) over a number of time-steps (x axis).

**Figure 1**: Spectrogram of an audio recording

- The color in the spectrogram shows the degree to which different frequencies are present (loud) in the audio at different points in time.

- Green means a certain frequency is more active or more present in the audio clip (louder).

- Blue squares denote less active frequencies.

- The dimension of the output spectrogram depends upon the hyperparameters of the spectrogram software and the length of the input.

- In this notebook, we will be working with 10 second audio clips as the "standard length" for our training examples.

- The number of timesteps of the spectrogram will be 5511.

- You'll see later that the spectrogram will be the input xx into the network, and so Tx=5511Tx=5511.

In [7]:

_, data = wavfile.read("audio_examples/example_train.wav")

print("Time steps in audio recording before spectrogram", data[:,0].shape)

print("Time steps in input after spectrogram", x.shape)

Time steps in audio recording before spectrogram (441000,) Time steps in input after spectrogram (101, 5511)

Now, you can define:

In [8]:

Tx = 5511 # The number of time steps input to the model from the spectrogram n_freq = 101 # Number of frequencies input to the model at each time step of the spectrogram

Dividing into time-intervals

Note that we may divide a 10 second interval of time with different units (steps).

- Raw audio divides 10 seconds into 441,000 units.

- A spectrogram divides 10 seconds into 5,511 units.

- Tx=5511Tx=5511

- You will use a Python module

pydubto synthesize audio, and it divides 10 seconds into 10,000 units. - The output of our model will divide 10 seconds into 1,375 units.

- Ty=1375Ty=1375

- For each of the 1375 time steps, the model predicts whether someone recently finished saying the trigger word "activate".

- All of these are hyperparameters and can be changed (except the 441000, which is a function of the microphone).

- We have chosen values that are within the standard range used for speech systems.

In [9]:

Ty = 1375 # The number of time steps in the output of our model

1.3 - Generating a Single Training Example

Benefits of synthesizing data

Because speech data is hard to acquire and label, you will synthesize your training data using the audio clips of activates, negatives, and backgrounds.

- It is quite slow to record lots of 10 second audio clips with random "activates" in it.

- Instead, it is easier to record lots of positives and negative words, and record background noise separately (or download background noise from free online sources).

Process for Synthesizing an audio clip

- To synthesize a single training example, you will:

- Pick a random 10 second background audio clip

- Randomly insert 0-4 audio clips of "activate" into this 10 sec. clip

- Randomly insert 0-2 audio clips of negative words into this 10 sec. clip

- Because you had synthesized the word "activate" into the background clip, you know exactly when in the 10 second clip the "activate" makes its appearance.

- You'll see later that this makes it easier to generate the labels y〈t〉y〈t〉 as well.

Pydub

- You will use the pydub package to manipulate audio.

- Pydub converts raw audio files into lists of Pydub data structures.

- Don't worry about the details of the data structures.

- Pydub uses 1ms as the discretization interval (1 ms is 1 millisecond = 1/1000 seconds).

- This is why a 10 second clip is always represented using 10,000 steps.

In [10]:

# Load audio segments using pydub

activates, negatives, backgrounds = load_raw_audio('./raw_data/')

print("background len should be 10,000, since it is a 10 sec clip\n" + str(len(backgrounds[0])),"\n")

print("activate[0] len may be around 1000, since an `activate` audio clip is usually around 1 second (but varies a lot) \n" + str(len(activates[0])),"\n")

print("activate[1] len: different `activate` clips can have different lengths\n" + str(len(activates[1])),"\n")

background len should be 10,000, since it is a 10 sec clip 10000 activate[0] len may be around 1000, since an `activate` audio clip is usually around 1 second (but varies a lot) 916 activate[1] len: different `activate` clips can have different lengths 1579

Overlaying positive/negative 'word' audio clips on top of the background audio

- Given a 10 second background clip and a short audio clip containing a positive or negative word, you need to be able to "add" the word audio clip on top of the background audio.

- You will be inserting multiple clips of positive/negative words into the background, and you don't want to insert an "activate" or a random word somewhere that overlaps with another clip you had previously added.

- To ensure that the 'word' audio segments do not overlap when inserted, you will keep track of the times of previously inserted audio clips.

- To be clear, when you insert a 1 second "activate" onto a 10 second clip of cafe noise, you do not end up with an 11 sec clip.

- The resulting audio clip is still 10 seconds long.

- You'll see later how pydub allows you to do this.

Label the positive/negative words

- Recall that

最低0.47元/天 解锁文章

最低0.47元/天 解锁文章

7368

7368

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?