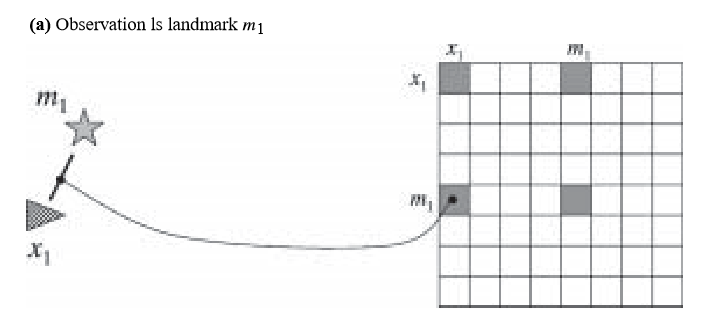

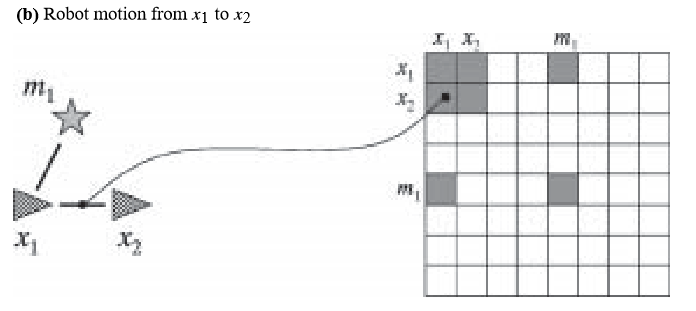

In GraphSLAM, the map and the path are obtained from the linearized information matrix $\Omega$ and the information vector $\epsilon$ via the equations $\Sigma = \Omega^{-1}$ and $\mu = \Sigma\epsilon$. This operation requires us to solve a system of linear equations, which raises the question of how efficiently we can recover the map estimate $\mu$.
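A minimal NumPy sketch of the recovery step, assuming a toy 1-D problem with two poses (the numbers are illustrative and not from the source): $x_0$ is anchored at 0 and one control says $x_1 = x_0 + 1$, each with unit information. It contrasts the literal route $\Sigma = \Omega^{-1}$, $\mu = \Sigma\epsilon$ with solving $\Omega\mu = \epsilon$ directly, which yields the same $\mu$ without forming the inverse.

```python
import numpy as np

def recover_mean(omega: np.ndarray, eps: np.ndarray) -> np.ndarray:
    """Return mu by solving omega @ mu = eps (no explicit inverse)."""
    return np.linalg.solve(omega, eps)

def recover_mean_and_cov(omega: np.ndarray, eps: np.ndarray):
    """Literal route: Sigma = inv(Omega), mu = Sigma @ eps."""
    sigma = np.linalg.inv(omega)
    return sigma @ eps, sigma

# Hypothetical toy system: anchor on x0 plus one unit-information control x1 - x0 = 1.
omega = np.array([[2.0, -1.0],
                  [-1.0, 1.0]])
eps = np.array([-1.0, 1.0])

mu_direct = recover_mean(omega, eps)
mu_cov, sigma = recover_mean_and_cov(omega, eps)
print(mu_direct)  # [0. 1.]
```

Both routes agree here; the direct solve is the one that scales, because the covariance $\Sigma$ is dense even when $\Omega$ is sparse.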

The answer to the complexity question depends on the topology of the world. If each feature is seen only locally in time, the graph represented by the constraints is linear. Thus, $\Omega$ can be reordered so that it becomes a band-diagonal matrix, that is, all non-zero values occur near its diagonal. The equation $\mu = \Omega^{-1}\epsilon$ can then be computed in linear time. This intuition carries over to a cycle-free world that is traversed once, so that each feature is seen for a short, consecutive period of time.
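To make the linear-time claim concrete, here is a sketch of the simplest band-diagonal case, assuming a chain of 1-D poses in which each row of $\Omega$ couples only to its time neighbors (bandwidth 1, i.e. tridiagonal). The Thomas algorithm below solves such a system in $O(n)$; the function and variable names are hypothetical. For wider bands, a banded Cholesky solver such as `scipy.linalg.solveh_banded` plays the same role.

```python
import numpy as np

def solve_tridiagonal(lower, diag, upper, rhs):
    """Solve a tridiagonal system in O(n) time with the Thomas algorithm.

    lower[i] = Omega[i + 1, i], diag[i] = Omega[i, i], upper[i] = Omega[i, i + 1].
    No pivoting is done, which is acceptable for symmetric positive-definite Omega.
    """
    n = len(diag)
    c = np.zeros(max(n - 1, 0))   # modified super-diagonal
    d = np.zeros(n)               # modified right-hand side
    if n > 1:
        c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    # Forward sweep: eliminate the sub-diagonal one row at a time.
    for i in range(1, n):
        denom = diag[i] - lower[i - 1] * c[i - 1]
        if i < n - 1:
            c[i] = upper[i] / denom
        d[i] = (rhs[i] - lower[i - 1] * d[i - 1]) / denom
    # Back substitution from the last variable to the first.
    x = np.zeros(n)
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x

# Hypothetical 1-D pose chain x0..x4: x0 anchored at 0, each control moves +1,
# all with unit information. In time order, Omega is tridiagonal.
n = 5
diag = np.array([2.0, 2.0, 2.0, 2.0, 1.0])
off = -np.ones(n - 1)
eps = np.zeros(n)
eps[0], eps[-1] = -1.0, 1.0
mu = solve_tridiagonal(off, diag, off, eps)
print(mu)  # [0. 1. 2. 3. 4.]
```

Each row is touched a constant number of times in each sweep, which is where the linear cost comes from.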

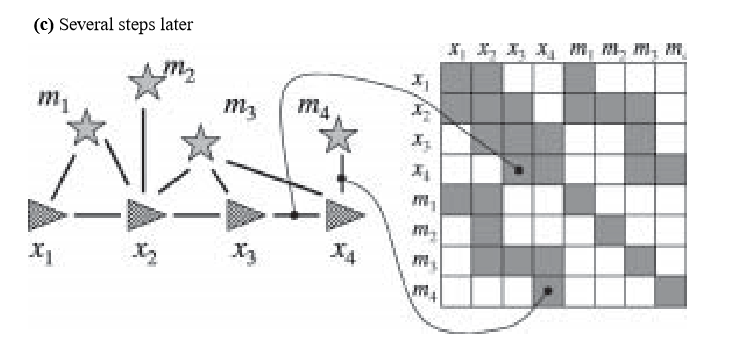

The more common case, however, involves features that are observed multiple times, with large time delays in between. This might be the case because the robot goes back and forth through a corridor, or because the world possesses cycles. In either situation, there will exist features $m_j$ that are seen at drastically different time steps, from poses $x_{t_1}$ and $x_{t_2}$ with $t_2 \gg t_1$. In our constraint graph, this introduces a cyclic dependence: $x_{t_1}$ and $x_{t_2}$ are linked through the sequence of controls $u_{t_1+1}, u_{t_1+2}, \ldots, u_{t_2}$ and through the joint observation links between $x_{t_1}$ and $m_j$, and between $x_{t_2}$ and $m_j$, respectively. Such links make our variable reordering trick inapplicable, and recovering the map becomes more complex. However, since the inverse of $\Omega$ is multiplied with a vector, the result can be computed with optimization techniques such as conjugate gradient, without explicitly computing the full inverse matrix. Since most worlds possess cycles, this is the case of interest.
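A minimal conjugate-gradient sketch, assuming a hypothetical 1-D loop: poses $x_0 \ldots x_3$ joined by unit controls, plus one feature $m$ seen from both $x_0$ and $x_3$, which closes a cycle. The solver only ever multiplies $\Omega$ by a vector, so $\Omega^{-1}$ is never formed. The helper `add_constraint` and the measurement values are illustrative assumptions.

```python
import numpy as np

def conjugate_gradient(omega, eps, tol=1e-10, max_iter=None):
    """Solve omega @ mu = eps for a symmetric positive-definite omega.

    Only products omega @ p are used, so omega is never inverted.
    `tol` is an absolute threshold on the residual norm.
    """
    n = eps.shape[0]
    if max_iter is None:
        max_iter = 10 * n              # exact arithmetic needs at most n steps
    mu = np.zeros(n)
    r = eps - omega @ mu               # residual
    p = r.copy()                       # search direction
    rs_old = r @ r
    for _ in range(max_iter):
        if np.sqrt(rs_old) < tol:
            break
        w = omega @ p
        alpha = rs_old / (p @ w)       # step length along p
        mu += alpha * p
        r -= alpha * w
        rs_new = r @ r
        p = r + (rs_new / rs_old) * p  # next conjugate direction
        rs_old = rs_new
    return mu

def add_constraint(omega, eps, i, j, z, info=1.0):
    """Hypothetical 1-D relative constraint x_j - x_i = z with information `info`."""
    omega[i, i] += info
    omega[j, j] += info
    omega[i, j] -= info
    omega[j, i] -= info
    eps[i] -= info * z
    eps[j] += info * z

# Poses x0..x3 (indices 0-3) and one feature m (index 4).
omega = np.zeros((5, 5))
eps = np.zeros(5)
omega[0, 0] += 1.0                              # anchor x0 at 0
for t in range(3):
    add_constraint(omega, eps, t, t + 1, 1.0)   # controls: move +1
add_constraint(omega, eps, 0, 4, 5.0)           # m observed from x0
add_constraint(omega, eps, 3, 4, 2.0)           # m observed again from x3
mu = conjugate_gradient(omega, eps)
```

In practice a library routine such as `scipy.sparse.linalg.cg` applied to a sparse $\Omega$ would typically replace this hand-written loop.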

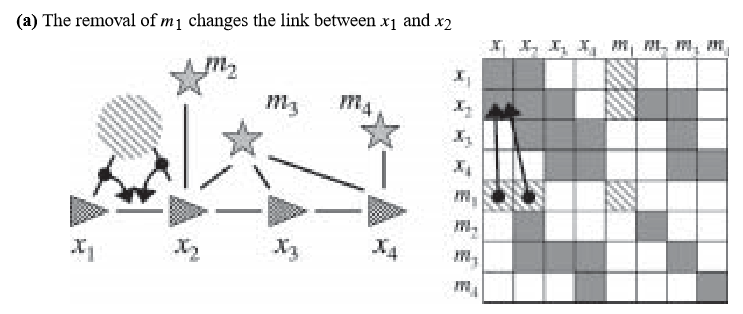

The GraphSLAM algorithm now employs an important factorization trick, which we can think of as propagating information through the information matrix (in fact, it is a generalization of the well-known variable elimination algorithm for matrix inversion). Suppose we would like to remove a feature $m_j$ from the information matrix $\Omega$ and the information vector $\epsilon$. In our spring-mass model, this is equivalent to removing the node and all springs attached to it. As we shall see below, this is possible by a remarkably simple operation: we can remove all springs between $m_j$ and the poses at which $m_j$ was observed by introducing new springs between every pair of such poses.
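One way to write this elimination, which we read as a Schur complement, is $\tilde\Omega = \Omega_{xx} - \Omega_{xm}\Omega_{mm}^{-1}\Omega_{mx}$ and $\tilde\epsilon = \epsilon_x - \Omega_{xm}\Omega_{mm}^{-1}\epsilon_m$, where $x$ indexes the kept variables and $m$ the removed feature. The sketch below assumes the same hypothetical 1-D loop (poses $x_0 \ldots x_3$, one feature observed from $x_0$ and $x_3$) and checks that the reduced system yields the same poses as the full one; `eliminate` and `add_constraint` are illustrative names.

```python
import numpy as np

def add_constraint(omega, eps, i, j, z, info=1.0):
    """Hypothetical 1-D relative constraint x_j - x_i = z with information `info`."""
    omega[i, i] += info
    omega[j, j] += info
    omega[i, j] -= info
    omega[j, i] -= info
    eps[i] -= info * z
    eps[j] += info * z

def eliminate(omega, eps, keep, drop):
    """Marginalize the variables in `drop` out of (omega, eps) via the Schur complement."""
    o_kk = omega[np.ix_(keep, keep)]
    o_kd = omega[np.ix_(keep, drop)]
    o_dd = omega[np.ix_(drop, drop)]
    # gain = o_kd @ inv(o_dd), computed with a solve since o_dd is symmetric.
    gain = np.linalg.solve(o_dd, o_kd.T).T
    omega_red = o_kk - gain @ o_kd.T
    eps_red = eps[keep] - gain @ eps[drop]
    return omega_red, eps_red

# Poses x0..x3 (indices 0-3) and one feature m (index 4).
omega = np.zeros((5, 5))
eps = np.zeros(5)
omega[0, 0] += 1.0                              # anchor x0 at 0
for t in range(3):
    add_constraint(omega, eps, t, t + 1, 1.0)   # controls: move +1
add_constraint(omega, eps, 0, 4, 5.0)           # m observed from x0
add_constraint(omega, eps, 3, 4, 2.0)           # m observed from x3

omega_red, eps_red = eliminate(omega, eps, keep=[0, 1, 2, 3], drop=[4])
poses = np.linalg.solve(omega_red, eps_red)
print(poses)  # [0. 1. 2. 3.]
```

The reduced system is smaller but loses nothing about the poses; the feature can be recovered afterwards from the pose estimates.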

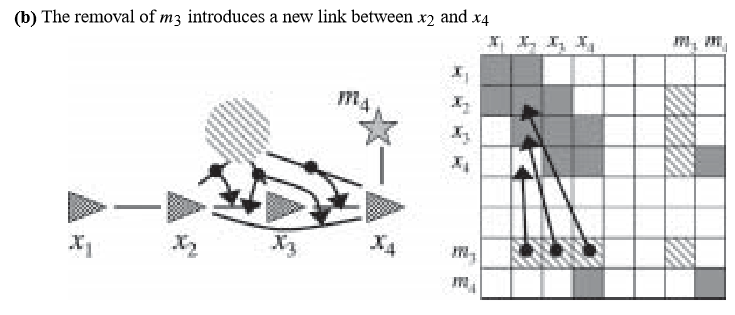

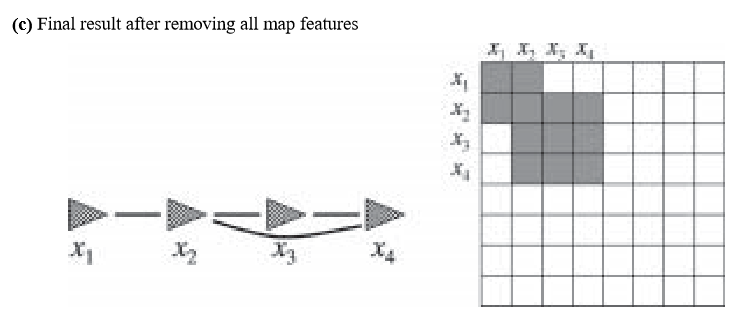

This process is illustrated in Figure 3, which shows the removal of two map features, $m_1$ and $m_3$ (the removal of $m_2$ and $m_4$ is trivial in this example). In both cases, the feature removal modifies the link between every pair of poses from which the feature was originally observed. As illustrated in Figure 3(b), this operation may lead to the introduction of new links in the graph. In the example shown there, the removal of $m_3$ leads to a new link between $x_2$ and $x_4$.
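The fill-in effect can be checked numerically. Since Figure 3 is only an image, this sketch models just what the text states: poses $x_1 \ldots x_4$ linked by controls, and $m_3$ observed from $x_2$ and $x_4$. The other features, the 1-D state, and unit information are assumptions, and the helper names are hypothetical.

```python
import numpy as np

def add_link(omega, i, j, info=1.0):
    """Add the information-matrix entries of one 1-D relative constraint between i and j."""
    omega[i, i] += info
    omega[j, j] += info
    omega[i, j] -= info
    omega[j, i] -= info

def eliminate_one(omega, k):
    """Remove variable k via the Schur complement and return the smaller matrix."""
    keep = [i for i in range(omega.shape[0]) if i != k]
    o_kk = omega[np.ix_(keep, keep)]
    o_kd = omega[keep, k]              # couplings of the kept variables to k
    return o_kk - np.outer(o_kd, o_kd) / omega[k, k]

def links(omega, names, tol=1e-12):
    """Return the set of variable pairs joined by a non-zero off-diagonal entry."""
    n = len(names)
    return {(names[i], names[j]) for i in range(n) for j in range(i + 1, n)
            if abs(omega[i, j]) > tol}

names = ["x1", "x2", "x3", "x4", "m3"]
omega = np.zeros((5, 5))
omega[0, 0] += 1.0                     # anchor x1
for i in range(3):                     # controls: x1-x2, x2-x3, x3-x4
    add_link(omega, i, i + 1)
add_link(omega, 1, 4)                  # m3 observed from x2
add_link(omega, 3, 4)                  # m3 observed from x4

before = links(omega, names)
after = links(eliminate_one(omega, 4), names[:4])
print(sorted(after))  # [('x1', 'x2'), ('x2', 'x3'), ('x2', 'x4'), ('x3', 'x4')]
```

The pair `('x2', 'x4')` is absent before the elimination and present after it, which is the new link described for Figure 3(b).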

Graph-slam (Part 3)
