1. 为了更加稳定的设计准则[更新中]

Reference: cs231n

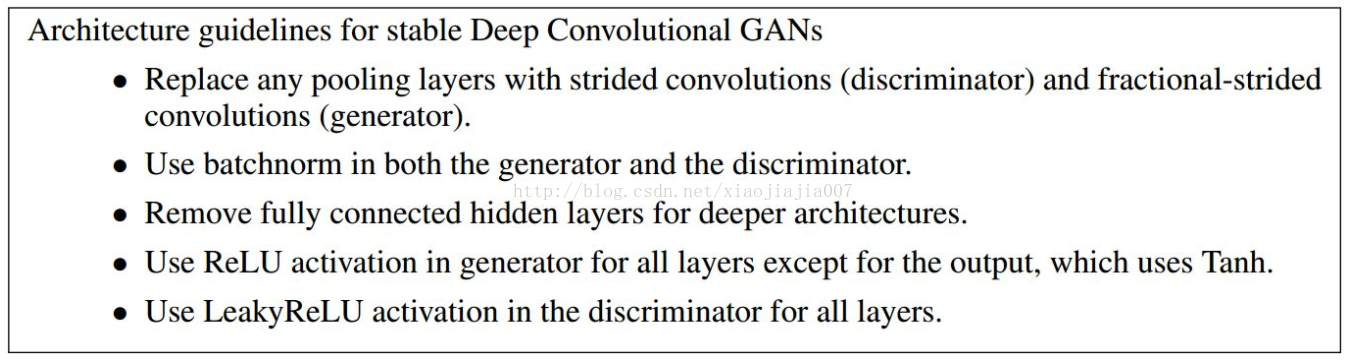

Radford et al, “Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks”, ICLR 2016

Generator is an upsampling network with fractionally-strided convolutions

Discriminator is a convolutional network

Keras作者指出的trick

## A bag of tricks

Training GANs and tuning GAN implementations is notoriously difficult. There are a number of known "tricks" that one should keep in mind.

Like most things in deep learning, it is more alchemy than science: these tricks are really just heuristics, not theory-backed guidelines.

They are backed by some level of intuitive understanding of the phenomenon at hand, and they are known to work well empirically, albeit not

necessarily in every context.

Here are a few of the tricks that we leverage in our own implementation of a GAN generator and discriminator below. It is not an exhaustive

list of GAN-related tricks; you will find many more across the GAN literature.

* We use `tanh` as the last activation in the generator, instead of `sigmoid`, which would be more commonly found in other types of models.

* We sample points from the latent space using a _normal distribution_ (Gaussian distribution), not a uniform distribution.

* Stochasticity is good to induce robustness. Since GAN training results in a dynamic equilibrium, GANs are likely to get "stuck" in all sorts of ways. Introducing randomness during training helps prevent this. We introduce randomness in two ways: 1) we use dropout in the discriminator, 2) we add some random noise to the labels for the discriminator.

* Sparse gradients can hinder GAN training. In deep learning, sparsity is often a desirable property, but not in GANs. There are two things that can induce gradient sparsity: 1) max pooling operations, 2) ReLU activations. Instead of max pooling, we recommend using strided convolutions for downsampling, and we recommend using a `LeakyReLU` layer instead of a ReLU activation. It is similar to ReLU but it relaxes sparsity constraints by allowing small negative activation values.

* In generated images, it is common to see "checkerboard artifacts" (棋盘效应)caused by unequal coverage of the pixel space in the generator. To fix this, we use a kernel size that is divisible by the stride size (比如kernel选择4, stride选择2), whenever we use a strided `Conv2DTranpose` or `Conv2D` in both the generator and discriminator.

generator loss 越来越大,discriminator越来越小的问题一般是判别器过拟合,它似乎只注意到没有噪声的图像都是真的,在判别器前面加一个高斯噪声层就好了。另外软标签和分批向判别器送入真假图片貌似也能缓解这个问题?

Gan zoo: 点击打开链接

How to train a Gan 点击打开链接

2. GAN的应用

GAN的训练及其改进

上面使用GAN产生的图像虽然效果不错,但其实GAN网络的训练过程是非常不稳定的。

通常在实际训练GAN中所碰到的一个问题就是判别模型的收敛速度要比生成模型的收敛速度要快很多,通常的做法就是让生成模型多训练几次来赶上生成模型,但是存在的一个问题就是通常生成模型和判别模型的训练是相辅相成的,理想的状态是让生成模型和判别模型在每次的训练过程中同时变得更好。判别模型理想的minimum loss应该为0.5,这样才说明判别模型分不出是真实数据还是生成模型产生的数据。

Improved GANs

Improved techniques for training GANs这篇文章提出了很多改进GANs训练的方法,其中提出一个想法叫Feature matching,之前判别模型只判别输入数据是来自真实数据还是生成模型。现在为判别模型提出了一个新的目标函数来判别生成模型产生图像的统计信息是否和真实数据的相似。让f(x)

, 其实就是要求真实图像和合成图像在判别模型中间层的距离要最小。这样可以防止生成模型在当前判别模型上过拟合。

InfoGAN

到这可能有些同学会想到,我要是想通过GAN产生我想要的特定属性的图片改怎么办?普通的GAN输入的是随机的噪声,输出也是与之对应的随机图片,我们并不能控制输出噪声和输出图片的对应关系。这样在训练的过程中也会倒置生成模型倾向于产生更容易欺骗判别模型的某一类特定图片,而不是更好的去学习训练数据的分布,这样对模型的训练肯定是不好的。InfoGAN的提出就是为了解决这一问题,通过对输入噪声添加一些类别信息以及控制图像特征(如mnist数字的角度和厚度)的隐含变量来使得生成模型的输入不在是随机噪声。虽然现在输入不再是随机噪声,但是生成模型可能会忽略这些输入的额外信息还是把输入当成和输出无关的噪声,所以需要定义一个生成模型输入输出的互信息,互信息越高,说明输入输出的关联越大。

下面三张图片展示了通过分别控制输入噪声的类别信息,数字角度信息,数字笔画厚度信息产生指定输出的图片,可以看出InfoGAN产生图片的效果还是很好的。

3. GAN的理论学习

机器之心公众号的发布文章 点击打开链接

604

604

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?