1 Reading the raw text

    def FileRead(self, filePath):
        # Read the whole file and return its raw contents.
        with open(filePath) as f:
            return f.read()

2 Chinese word segmentation

See my earlier post, "Chinese word segmentation in Python" (Python下的中文分词实现).

    def NlpirTokener(self, raw):
        result = ''
        tokens = nlpir.Seg(raw)  # segment the text
        for w in tokens:
            # result += w[0] + "/" + w[1]  # variant that keeps the POS tag
            result += w[0] + '/'  # word only, '/'-delimited
        return result

    def JiebaTokener(self, raw):
        result = ""  # accumulates the final output
        words = pseg.cut(raw)  # segment the text
        for w in words:
            # result += str(w.word) + "/" + str(w.flag)  # variant that keeps the POS tag
            result += str(w.word) + "/"  # word only, '/'-delimited
        return result
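Both tokenizers above build the delimited string with repeated `+=`. A minimal sketch of the same joining step with `str.join`, written for Python 3 and assuming the segmenter yields (word, POS tag) pairs. The function name `join_words` and the sample pairs are made up for illustration; they are not part of NLPIR or jieba.

```python
def join_words(pairs, sep="/"):
    """Join the words of (word, tag) pairs, writing sep after every word."""
    # The trailing separator mirrors the `result += word + "/"` loop in the post.
    return "".join(word + sep for word, _tag in pairs)


sample = [("今天", "t"), ("日期", "n")]  # hypothetical segmenter output
print(join_words(sample))
```

Building the string in one `join` call avoids creating a new string on every loop iteration, which matters mostly for long documents.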

3 Removing stop words

    def StopwordsRm(self, words):
        result = ''
        print words
        # Split the '#'-delimited token string into a list.
        wordList = [word for word in words.split('#')]
        print wordList[:20]
        # Load the stop-word list, one word per line.
        stopwords = {}.fromkeys([line.rstrip() for line in open(conf.PreConfig.CHSTOPWORDS)])
        cleanTokens = [w for w in wordList if w not in stopwords]
        print cleanTokens[:20]
        for c in cleanTokens:
            result += c + "#"
        print result
        return result
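The filtering step above can also be sketched with a `set` for membership tests. This is a Python 3 illustration under stated assumptions, not the code used in this post: `remove_stopwords` is a hypothetical name, the stop words are passed in as a list instead of being read from `conf.PreConfig.CHSTOPWORDS`, and, unlike the function above, it drops empty tokens and writes no trailing delimiter.

```python
def remove_stopwords(text, stopwords, sep="#"):
    """Drop stop words and empty tokens from a sep-delimited token string."""
    stop = set(stopwords)  # set lookup is O(1) per token
    kept = [tok for tok in text.split(sep) if tok and tok not in stop]
    return sep.join(kept)


stop_list = ["的", "是"]  # hypothetical stop-word list
print(remove_stopwords("今天#的#日期#是#周四#", stop_list))
```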

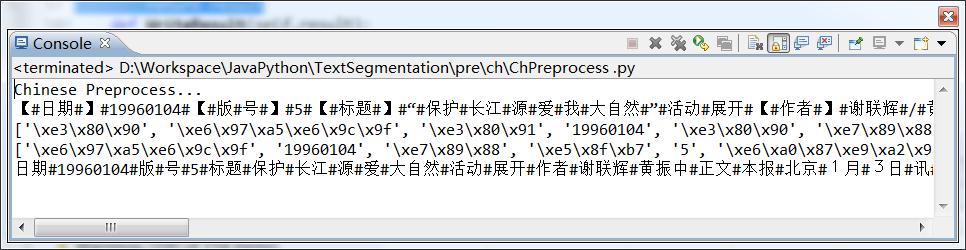

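This is where I ran into a very annoying problem: decoding Chinese text in Python. For the first hour, the output I saw after stop-word removal looked like this:

\xe3\x80\x90/\xe6\x97\xa5\xe6\x9c\x9f/\xe3\

Output like this had to be caused by a decoding problem, so I started looking for a fix.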
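A likely explanation for escapes like these: in Python 2, printing a list shows the `repr()` of each byte string, so non-ASCII bytes appear as `\x..` even when the data itself is intact. The sketch below reproduces the effect in Python 3, where `bytes` stands in for a Python 2 `str`; the two sample words are chosen only because their UTF-8 bytes match the output shown above.

```python
words = ["【", "日期"]
encoded = [w.encode("utf-8") for w in words]  # roughly what a Python 2 str holds

# Printing the list shows the repr of each element, with \x escapes.
print(encoded)

# Decoding each element before printing shows the characters themselves.
for raw in encoded:
    print(raw.decode("utf-8"))
```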

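I then found this post on CSDN: http://blog.csdn.net/samxx8/article/details/6286407

It seemed very thorough, so I started trying the methods it describes.

After some experimenting I found that the escapes do not affect the final segmentation and stop-word removal results, but none of those methods got rid of the garbled output from print wordList[:20] and print cleanTokens[:20]. That bothered me, so I kept trying. Searching online showed that many people have hit the same problem and posted various fixes. Their posts boil down to a few points: Python 2.x handles Chinese poorly; under the default Windows character set, Python 2.x often produces garbled output; and Python 2.x programs written in Eclipse on Windows handle Chinese especially badly. Eventually I found a solution: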

    def mdcode(self, text):
        # Try each candidate encoding in turn and normalise the text to UTF-8.
        for c in ('utf-8', 'gbk', 'gb2312'):
            try:
                return text.decode(c).encode('utf-8')
            except UnicodeError:
                pass
        return 'unknown'

This detects the encoding automatically and converts the text, which basically solved the problem.
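For comparison, here is a rough Python 3 sketch of the same try-each-encoding idea. It is an illustration under stated assumptions, not the function used in this post: `decode_with_fallback` is a hypothetical name, it returns decoded text (or `None` on failure) instead of UTF-8 bytes or 'unknown', and it leaves out 'gb2312' because GBK is a superset of GB2312.

```python
def decode_with_fallback(data, encodings=("utf-8", "gbk")):
    """Return data decoded with the first encoding that accepts it, else None."""
    for name in encodings:
        try:
            return data.decode(name)
        except UnicodeDecodeError:
            continue  # try the next candidate
    return None


print(decode_with_fallback("日期".encode("gbk")))
print(decode_with_fallback("日期".encode("utf-8")))
```

UTF-8 is tried first because it is strict: bytes written in GBK rarely form valid UTF-8, while GBK accepts many byte sequences that were never meant as GBK, so the reverse order would tend to mis-decode UTF-8 input silently.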

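4 Converting full-width characters to half-width

I took this method from a post I found online (http://www.cnblogs.com/kaituorensheng/p/3554571.html).

The idea is to check whether each character is full-width and, if it is, subtract a fixed offset to get the half-width character. To be honest, I don't really understand why something as troublesome as full-width characters exists; they make processing a lot harder.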

    def strQ2B(self, ustring):
        """Convert full-width characters in a string to half-width."""
        ustring = ustring.decode('utf-8')
        rstring = ""
        for uchar in ustring:
            inside_code = ord(uchar)
            if inside_code == 0x3000:
                # The full-width space maps directly to the ASCII space.
                inside_code = 0x0020
            else:
                inside_code -= 0xfee0
            if inside_code < 0x0020 or inside_code > 0x7e:
                # Not a half-width character after conversion: keep the original.
                rstring += uchar.encode('utf-8')
            else:
                rstring += (unichr(inside_code)).encode('utf-8')
        return rstring
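The same mapping can be written with an explicit range check. Below is a minimal Python 3 sketch, assuming the input is already a decoded string; `to_halfwidth` is a hypothetical name. Full-width ASCII variants occupy U+FF01 to U+FF5E, exactly 0xFEE0 above their half-width counterparts, and the full-width space U+3000 is handled separately.

```python
def to_halfwidth(text):
    """Map full-width ASCII variants (U+FF01..U+FF5E) and U+3000 to half-width."""
    out = []
    for ch in text:
        code = ord(ch)
        if code == 0x3000:                # full-width (ideographic) space
            out.append(" ")
        elif 0xFF01 <= code <= 0xFF5E:    # full-width '!' through '~'
            out.append(chr(code - 0xFEE0))
        else:                             # everything else is left untouched
            out.append(ch)
    return "".join(out)


print(to_halfwidth("\uff21\uff11\u3000\uff01"))  # full-width A, 1, space, !
```

Characters outside that range, such as the Chinese full stop 。 (U+3002), pass through unchanged.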

5 Complete code

    #coding=utf-8
    '''
    Created on 2014-3-20
    Chinese text preprocessing
    @author: liTC
    '''
    import time
    import string
    import re
    import os
    import nlpir
    import jieba
    import jieba.posseg as pseg
    from config import Config as conf
    import sys
    reload(sys)
    sys.setdefaultencoding('utf-8')

    class ChPreprocess:
        def __init__(self):
            print 'Chinese Preprocess...'

        def mdcode(self, text):
            # Try each candidate encoding in turn and normalise the text to UTF-8.
            for c in ('utf-8', 'gbk', 'gb2312'):
                try:
                    return text.decode(c).encode('utf-8')
                except UnicodeError:
                    pass
            return 'unknown'

        def FileRead(self, filePath):
            with open(filePath, 'r') as f:
                return self.mdcode(f.read())

        def NlpirTokener(self, raw):
            nlpir_result = ''
            tokens = nlpir.Seg(raw)
            for w in tokens:
                # nlpir_result += w[0] + "/" + w[1]  # variant that keeps the POS tag
                nlpir_result += w[0] + ' '  # word only, space-delimited
            return nlpir_result

        def JiebaTokener(self, raw):
            jieba_result = ""  # accumulates the final output
            words = pseg.cut(raw)  # segment the text
            for w in words:
                # jieba_result += str(w.word) + "/" + str(w.flag)  # variant that keeps the POS tag
                jieba_result += str(w.word) + " "  # word only, space-delimited
            return jieba_result

        def StopwordsRm(self, words):
            spr_result = ''
            wordList = [word for word in words.split(' ')]
            stopwords = {}.fromkeys([line.rstrip() for line in open(conf.PreConfig.CHSTOPWORDS)])
            cleanStops = [w for w in wordList if w not in stopwords]
            cleanTokens = [self.CleanEnNum(cs) for cs in cleanStops]
            for c in cleanTokens:
                # Start a new line at sentence-ending punctuation.
                if c == '。' or c == '?' or c == '!':
                    spr_result += '\n'
                    continue
                spr_result += c + " "
            return spr_result

        def strQ2B(self, ustring):
            """Convert full-width characters in a string to half-width."""
            ustring = ustring.decode('utf-8')
            rstring = ""
            for uchar in ustring:
                inside_code = ord(uchar)
                if inside_code == 0x3000:
                    inside_code = 0x0020
                else:
                    inside_code -= 0xfee0
                if inside_code < 0x0020 or inside_code > 0x7e:
                    # Not a half-width character after conversion: keep the original.
                    rstring += uchar.encode('utf-8')
                else:
                    rstring += (unichr(inside_code)).encode('utf-8')
            return rstring

        def CleanEnNum(self, raw):
            # Strip runs of word characters (English letters, digits, underscore).
            p = re.compile(r'\w*', re.L)
            clean = p.sub("", raw)
            # r =re.sub("[A-Za-z0-9\`\~\@#$\^&\*\(\)\=∥{}\'\'\[\.\<\>\/\?\~\!\@\#\\\&\*\%]", "", s)
            return clean

        def mkdir(self, path):
            # Strip leading and trailing whitespace.
            path = path.strip()
            # Strip a trailing backslash.
            path = path.rstrip("\\")
            if not os.path.exists(path):
                # The directory does not exist yet: create it.
                os.makedirs(path)
                print path + ' created'
                return True
            else:
                # The directory already exists: do nothing and say so.
                print path + ' already exists'
                return False

        def WriteResult(self, result, resultPath):
            # Make sure the output directory exists, then save the result.
            self.mkdir(os.path.dirname(str(resultPath)))
            with open(resultPath, "w") as f:
                f.write(result)

        def JiebaMain(self, dir):
            for root, dirs, files in os.walk(dir):
                for eachfiles in files:
                    croupPath = os.path.join(root, eachfiles)
                    print croupPath
                    resultPath = conf.PreConfig.JIEBARESULTPATH + croupPath.split('/')[-2] + '/' + croupPath.split('/')[-1]
                    raw = self.FileRead(croupPath).strip()
                    raw = self.strQ2B(raw)
                    raw = raw.replace(" ", '')
                    words = self.JiebaTokener(raw)
                    result = self.StopwordsRm(words)
                    self.WriteResult(result, resultPath)

        def NlpirMain(self, dir):
            for root, dirs, files in os.walk(dir):
                for eachfiles in files:
                    croupPath = os.path.join(root, eachfiles)
                    print croupPath
                    resultPath = conf.PreConfig.NLPIRRESULTPATH + croupPath.split('/')[-2] + '/' + croupPath.split('/')[-1]
                    raw = self.FileRead(croupPath).strip()
                    raw = self.strQ2B(raw)
                    raw = raw.replace(" ", '')
                    words = self.NlpirTokener(raw)
                    result = self.StopwordsRm(words)
                    self.WriteResult(result, resultPath)

        def LoadCroupResult(self, filepath):
            raw = self.FileRead(filepath)
            result = [t for t in raw.split(' ')]
            for r in result:
                print self.mdcode(r)  # print each token for inspection
            return result

        def Test(self):
            chPre = ChPreprocess()
            croupPath = '/corpus/mx/6/69.TXT'
            resultPath = conf.PreConfig.NLPIRRESULTPATH + croupPath.split('/')[-2] + '/' + croupPath.split('/')[-1]
            # resultPath = conf.PreConfig.JIEBARESULTPATH + croupPath.split('/')[-2] + '/' + croupPath.split('/')[-1]
            print resultPath
            raw = chPre.FileRead(croupPath)
            raw = chPre.strQ2B(raw)
            raw = raw.replace(" ", '')
            words = chPre.NlpirTokener(raw)
            # words = chPre.JiebaTokener(raw)
            result = chPre.StopwordsRm(words)
            print result
            chPre.WriteResult(result, resultPath)

    chPre = ChPreprocess()
    # chPre.JiebaMain(conf.PreConfig.CHCROPPATH)
    chPre.NlpirMain(conf.PreConfig.CHCORUPPATH)
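One caveat if this code is ever ported to Python 3: there `\w` also matches Chinese characters, so a direct port of CleanEnNum would delete the very text it is meant to keep. The sketch below uses an explicit ASCII character class instead and combines it with the sentence-break rule from StopwordsRm. The names `clean_en_num` and `to_lines` are hypothetical, and unlike the original loop this version skips tokens that become empty after cleaning.

```python
import re

ASCII_WORD = re.compile(r"[A-Za-z0-9_]+")  # ASCII letters, digits, underscore only
SENTENCE_END = {"。", "?", "!"}


def clean_en_num(token):
    """Remove ASCII letters, digits and underscores from a token."""
    return ASCII_WORD.sub("", token)


def to_lines(tokens):
    """Join tokens with spaces, starting a new line at sentence-ending punctuation."""
    out = []
    for tok in tokens:
        tok = clean_en_num(tok)
        if tok in SENTENCE_END:
            out.append("\n")
        elif tok:  # skip tokens that became empty after cleaning
            out.append(tok + " ")
    return "".join(out)


print(to_lines(["今天", "abc123", "天气", "好", "。", "明天", "见", "!"]))
```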
