目录

为什么我用的是sparse_categorical_crossentropy?

TensorFlow 2.9的零零碎碎(二)-TensorFlow 2.9的零零碎碎(六)都是围绕使用TensorFlow 2.9在MNIST数据集上训练和评价模型来展开。

Python环境3.8。

代码调试都用的PyCharm。

在构建好一个模型的结构之后,需要进行模型编译,也就是compile函数

import tensorflow as tf

mnist = tf.keras.datasets.mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train, x_test = x_train / 255.0, x_test / 255.0

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Input(shape=(28, 28)))

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(128))

model.add(tf.keras.layers.Dropout(0.2))

model.add(tf.keras.layers.Dense(10, activation='softmax'))

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['acc'])compile函数的定义在哪里?

通过model = tf.keras.models.Sequential(),我们知道model是Sequential类的一个对象,所以我们去Sequential类里看看

层层跳转,在keras.engine.sequential找到Sequential类的定义

@keras_export('keras.Sequential', 'keras.models.Sequential')

class Sequential(functional.Functional):

"""`Sequential` groups a linear stack of layers into a `tf.keras.Model`.

`Sequential` provides training and inference features on this model.可以看出,Sequential类里并没有compile函数

但是我们发现Sequential类继承了functional模块的Functional类,继续跳转

# pylint: disable=g-classes-have-attributes

class Functional(training_lib.Model):

"""A `Functional` model is a `Model` defined as a directed graph of layers.

Three types of `Model` exist: subclassed `Model`, `Functional` model,

and `Sequential` (a special case of `Functional`).

In general, more Keras features are supported with `Functional`

than with subclassed `Model`s, specifically:

- Model cloning (`keras.models.clone`)

- Serialization (`model.get_config()/from_config`, `model.to_json()`

- Whole-model saving (`model.save()`)

A `Functional` model can be instantiated by passing two arguments to

`__init__`. The first argument is the `keras.Input` Tensors that represent

the inputs to the model. The second argument specifies the output

tensors that represent the outputs of this model. Both arguments can be a

nested structure of tensors.同样,Functional类里并没有compile函数

但是我们发现Functional类继承了training模块的Model类

注意不是training_lib模块,因为training_lib只是一个别名(from keras.engine import training as training_lib)

继续跳转

@keras_export('keras.Model', 'keras.models.Model')

class Model(base_layer.Layer, version_utils.ModelVersionSelector):

"""`Model` groups layers into an object with training and inference features.在training模块的Model类中定义了compile函数。源码太长了,这里给一部分,全部的版本贴在最后。

@traceback_utils.filter_traceback

def compile(self,

optimizer='rmsprop',

loss=None,

metrics=None,

loss_weights=None,

weighted_metrics=None,

run_eagerly=None,

steps_per_execution=None,

jit_compile=None,

**kwargs):

"""Configures the model for training.

Example:

```python

model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),

loss=tf.keras.losses.BinaryCrossentropy(),

metrics=[tf.keras.metrics.BinaryAccuracy(),

tf.keras.metrics.FalseNegatives()])

```compile函数的参数

compile函数的参数比较多,但在上面我们编译模型的代码里其实就用了3个参数,分别是optimizer(优化器)、loss(损失函数)、metrics(评价指标)。

optimizer

optimizer默认值是rmsprop,RMSProp是一个优化器(算法),全称叫 Root Mean Square Prop,Geoffrey E. Hinton在Coursera课程中提出的一种优化算法,网上有很多资料。

Tieleman, T., & Hinton, G. (2012). Lecture 6.5-rmsprop: Divide the gradient by a running average of its recent magnitude. COURSERA: Neural networks for machine learning, 4(2), 26-31.

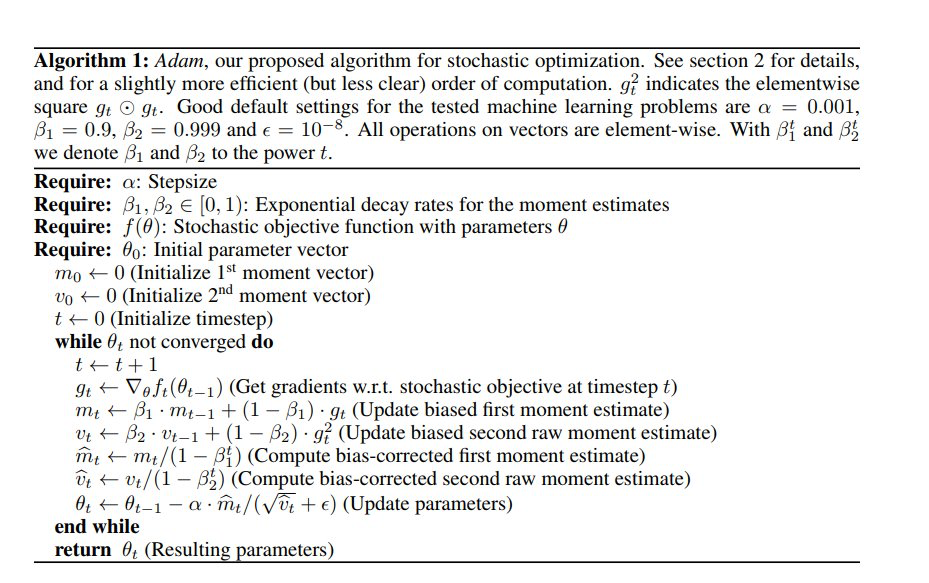

当然还有很多其他的优化器,比如面我们编译模型的代码里用到的优化器是Adam,也是一种主流的优化器。

Kingma, D. P., & Ba, J. (2014). Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

loss

loss参数的默认值是None。

上面我们编译模型的代码里传入的是sparse_categorical_crossentropy。

loss的取值根据任务而定,比如MNIST数据集对应的是一个多分类任务,常用的损失函数是categorical_crossentropy和sparse_categorical_crossentropy。

在keras.losses可以找到各种各样的损失函数。

loss: Loss function. May be a string (name of loss function), or

a `tf.keras.losses.Loss` instance. See `tf.keras.losses`. A loss

function is any callable with the signature `loss = fn(y_true,

y_pred)`, where `y_true` are the ground truth values, and

`y_pred` are the model's predictions.

`y_true` should have shape

`(batch_size, d0, .. dN)` (except in the case of

sparse loss functions such as

sparse categorical crossentropy which expects integer arrays of shape

`(batch_size, d0, .. dN-1)`).

`y_pred` should have shape `(batch_size, d0, .. dN)`.

The loss function should return a float tensor.

If a custom `Loss` instance is

used and reduction is set to `None`, return value has shape

`(batch_size, d0, .. dN-1)` i.e. per-sample or per-timestep loss

values; otherwise, it is a scalar. If the model has multiple outputs,

you can use a different loss on each output by passing a dictionary

or a list of losses. The loss value that will be minimized by the

model will then be the sum of all individual losses, unless

`loss_weights` is specified.为什么我用的是sparse_categorical_crossentropy?

import tensorflow as tf

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Input(shape=(28, 28)))

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(128, activation='relu'))

model.add(tf.keras.layers.Dropout(0.2))

model.add(tf.keras.layers.Dense(10, activation='softmax'))

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])这段代码是使用MNIST数据集进行训练代码的一部分

MNIST数据集的读取使用的是tf.keras.datasets.mnist中的load_data()函数

完整的代码

import tensorflow as tf

mnist = tf.keras.datasets.mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train, x_test = x_train / 255.0, x_test / 255.0

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Input(shape=(28, 28)))

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(128, activation='relu'))

model.add(tf.keras.layers.Dropout(0.2))

model.add(tf.keras.layers.Dense(10, activation='softmax'))

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

model.fit(x_train, y_train, epochs=5)

model.evaluate(x_test, y_test, verbose=2)采用sparse_categorical_crossentropy的原因是上述代码读取的数据集中标签是离散的(一个个数字)

categorical_crossentropy要求标签采用独热编码(onehot),而sparse_categorical_crossentropy接受离散值

例如数字1的标签的值是[0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0],则应使用categorical_crossentropy

例如数字1的标签的值是1,则应使用sparse_categorical_crossentropy

metrics

metrics参数的默认值是None

常用的就是准确率

在keras.metrics里可以找到各种各样的评价指标

metrics: List of metrics to be evaluated by the model during training

and testing. Each of this can be a string (name of a built-in

function), function or a `tf.keras.metrics.Metric` instance. See

`tf.keras.metrics`. Typically you will use `metrics=['accuracy']`. A

function is any callable with the signature `result = fn(y_true,

y_pred)`. To specify different metrics for different outputs of a

multi-output model, you could also pass a dictionary, such as

`metrics={'output_a': 'accuracy', 'output_b': ['accuracy', 'mse']}`.

You can also pass a list to specify a metric or a list of metrics

for each output, such as `metrics=[['accuracy'], ['accuracy', 'mse']]`

or `metrics=['accuracy', ['accuracy', 'mse']]`. When you pass the

strings 'accuracy' or 'acc', we convert this to one of

`tf.keras.metrics.BinaryAccuracy`,

`tf.keras.metrics.CategoricalAccuracy`,

`tf.keras.metrics.SparseCategoricalAccuracy` based on the loss

function used and the model output shape. We do a similar

conversion for the strings 'crossentropy' and 'ce' as well.有的地方为什么写的是acc?

不难发现,对于准确率,有的地方写法如下

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['acc'])这种写法和accuracy等效,因为TensorFlow会自动将其转换,根据损失函数和模型输出的形状来决定采用`tf.keras.metrics.BinaryAccuracy`、`tf.keras.metrics.CategoricalAccuracy`、`tf.keras.metrics.SparseCategoricalAccuracy`中的一种。

TensorFlow提供的别名

# Aliases

acc = ACC = accuracy

bce = BCE = binary_crossentropy

mse = MSE = mean_squared_error

mae = MAE = mean_absolute_error

mape = MAPE = mean_absolute_percentage_error

msle = MSLE = mean_squared_logarithmic_error

log_cosh = logcosh

cosine_proximity = cosine_similaritycompile函数源码

@traceback_utils.filter_traceback

def compile(self,

optimizer='rmsprop',

loss=None,

metrics=None,

loss_weights=None,

weighted_metrics=None,

run_eagerly=None,

steps_per_execution=None,

jit_compile=None,

**kwargs):

"""Configures the model for training.

Example:

```python

model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),

loss=tf.keras.losses.BinaryCrossentropy(),

metrics=[tf.keras.metrics.BinaryAccuracy(),

tf.keras.metrics.FalseNegatives()])

```

Args:

optimizer: String (name of optimizer) or optimizer instance. See

`tf.keras.optimizers`.

loss: Loss function. May be a string (name of loss function), or

a `tf.keras.losses.Loss` instance. See `tf.keras.losses`. A loss

function is any callable with the signature `loss = fn(y_true,

y_pred)`, where `y_true` are the ground truth values, and

`y_pred` are the model's predictions.

`y_true` should have shape

`(batch_size, d0, .. dN)` (except in the case of

sparse loss functions such as

sparse categorical crossentropy which expects integer arrays of shape

`(batch_size, d0, .. dN-1)`).

`y_pred` should have shape `(batch_size, d0, .. dN)`.

The loss function should return a float tensor.

If a custom `Loss` instance is

used and reduction is set to `None`, return value has shape

`(batch_size, d0, .. dN-1)` i.e. per-sample or per-timestep loss

values; otherwise, it is a scalar. If the model has multiple outputs,

you can use a different loss on each output by passing a dictionary

or a list of losses. The loss value that will be minimized by the

model will then be the sum of all individual losses, unless

`loss_weights` is specified.

metrics: List of metrics to be evaluated by the model during training

and testing. Each of this can be a string (name of a built-in

function), function or a `tf.keras.metrics.Metric` instance. See

`tf.keras.metrics`. Typically you will use `metrics=['accuracy']`. A

function is any callable with the signature `result = fn(y_true,

y_pred)`. To specify different metrics for different outputs of a

multi-output model, you could also pass a dictionary, such as

`metrics={'output_a': 'accuracy', 'output_b': ['accuracy', 'mse']}`.

You can also pass a list to specify a metric or a list of metrics

for each output, such as `metrics=[['accuracy'], ['accuracy', 'mse']]`

or `metrics=['accuracy', ['accuracy', 'mse']]`. When you pass the

strings 'accuracy' or 'acc', we convert this to one of

`tf.keras.metrics.BinaryAccuracy`,

`tf.keras.metrics.CategoricalAccuracy`,

`tf.keras.metrics.SparseCategoricalAccuracy` based on the loss

function used and the model output shape. We do a similar

conversion for the strings 'crossentropy' and 'ce' as well.

loss_weights: Optional list or dictionary specifying scalar coefficients

(Python floats) to weight the loss contributions of different model

outputs. The loss value that will be minimized by the model will then

be the *weighted sum* of all individual losses, weighted by the

`loss_weights` coefficients.

If a list, it is expected to have a 1:1 mapping to the model's

outputs. If a dict, it is expected to map output names (strings)

to scalar coefficients.

weighted_metrics: List of metrics to be evaluated and weighted by

`sample_weight` or `class_weight` during training and testing.

run_eagerly: Bool. Defaults to `False`. If `True`, this `Model`'s

logic will not be wrapped in a `tf.function`. Recommended to leave

this as `None` unless your `Model` cannot be run inside a

`tf.function`. `run_eagerly=True` is not supported when using

`tf.distribute.experimental.ParameterServerStrategy`.

steps_per_execution: Int. Defaults to 1. The number of batches to run

during each `tf.function` call. Running multiple batches inside a

single `tf.function` call can greatly improve performance on TPUs or

small models with a large Python overhead. At most, one full epoch

will be run each execution. If a number larger than the size of the

epoch is passed, the execution will be truncated to the size of the

epoch. Note that if `steps_per_execution` is set to `N`,

`Callback.on_batch_begin` and `Callback.on_batch_end` methods will

only be called every `N` batches (i.e. before/after each `tf.function`

execution).

jit_compile: If `True`, compile the model training step with XLA.

[XLA](https://www.tensorflow.org/xla) is an optimizing compiler for

machine learning.

`jit_compile` is not enabled for by default.

This option cannot be enabled with `run_eagerly=True`.

Note that `jit_compile=True` is

may not necessarily work for all models.

For more information on supported operations please refer to the

[XLA documentation](https://www.tensorflow.org/xla).

Also refer to

[known XLA issues](https://www.tensorflow.org/xla/known_issues) for

more details.

**kwargs: Arguments supported for backwards compatibility only.

"""

base_layer.keras_api_gauge.get_cell('compile').set(True)

with self.distribute_strategy.scope():

if 'experimental_steps_per_execution' in kwargs:

logging.warning('The argument `steps_per_execution` is no longer '

'experimental. Pass `steps_per_execution` instead of '

'`experimental_steps_per_execution`.')

if not steps_per_execution:

steps_per_execution = kwargs.pop('experimental_steps_per_execution')

# When compiling from an already-serialized model, we do not want to

# reapply some processing steps (e.g. metric renaming for multi-output

# models, which have prefixes added for each corresponding output name).

from_serialized = kwargs.pop('from_serialized', False)

self._validate_compile(optimizer, metrics, **kwargs)

self._run_eagerly = run_eagerly

self.optimizer = self._get_optimizer(optimizer)

self.compiled_loss = compile_utils.LossesContainer(

loss, loss_weights, output_names=self.output_names)

self.compiled_metrics = compile_utils.MetricsContainer(

metrics, weighted_metrics, output_names=self.output_names,

from_serialized=from_serialized)

self._configure_steps_per_execution(steps_per_execution or 1)

# Initializes attrs that are reset each time `compile` is called.

self._reset_compile_cache()

self._is_compiled = True

self.loss = loss or {}

if (self._run_eagerly or self.dynamic) and jit_compile:

raise ValueError(

'You cannot enable `run_eagerly` and `jit_compile` '

'at the same time.')

else:

self._jit_compile = jit_compile

1万+

1万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?