MapReduce: Smart Email Marketing with a Markov Model (Part 2)

In this post, a MapReduce job turns the customers' historical transaction data into the following output, one record per customer-id:

customerID ($Date_1$, $Amount_1$); ($Date_2$, $Amount_2$); … ($Date_N$, $Amount_N$)

such that:

$Date_1 \leq Date_2 \leq \dots \leq Date_N$

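The MapReduce output is sorted by transaction date in ascending order. The sorting is done with MapReduce's secondary sort technique, which orders the data by date without needing much memory. The implementation is as follows.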
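To make the idea concrete, here is a minimal plain-Java sketch of what secondary sort achieves: records are ordered by (customer ID, timestamp) and then grouped by customer ID alone, so each group is already in date order. It uses no Hadoop classes and sorts in memory purely for illustration; in the real job the framework's shuffle phase does the sorting. The names `SecondarySortSketch` and `Txn` are hypothetical and not part of the original code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java illustration of secondary sort; no Hadoop classes are involved.
final class SecondarySortSketch {

    // Hypothetical stand-in for one transaction record.
    static final class Txn {
        final String customerId;
        final long timestamp;
        final int amount;

        Txn(String customerId, long timestamp, int amount) {
            this.customerId = customerId;
            this.timestamp = timestamp;
            this.amount = amount;
        }
    }

    // Sort by (customerId, timestamp), then group the sorted records by customerId.
    static Map<String, List<String>> sortAndGroup(List<Txn> input) {
        List<Txn> sorted = new ArrayList<>(input);
        sorted.sort(Comparator.comparing((Txn t) -> t.customerId)
                .thenComparingLong(t -> t.timestamp));

        // LinkedHashMap keeps the groups in the order the sorted keys arrive.
        Map<String, List<String>> grouped = new LinkedHashMap<>();
        for (Txn t : sorted) {
            grouped.computeIfAbsent(t.customerId, k -> new ArrayList<>())
                    .add("(" + t.timestamp + ", " + t.amount + ")");
        }
        return grouped;
    }

    public static void main(String[] args) {
        List<Txn> input = Arrays.asList(
                new Txn("c2", 300L, 20),
                new Txn("c1", 200L, 15),
                new Txn("c1", 100L, 10));
        // prints {c1=[(100, 10), (200, 15)], c2=[(300, 20)]}
        System.out.println(sortAndGroup(input));
    }
}
```

Within each customer the values come out in ascending timestamp order, which is the property the reducer relies on.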

You can generate the input data with a program of your own, or use the data set provided with the book Data Algorithms.

Custom type CompositeKey

This custom type holds a (customer-id, purchase-date) pair: the natural key combined with the natural value to sort on.

CompositeKey is coded as follows

    package com.deng.MarkovState;

    import org.apache.hadoop.io.WritableComparable;

    import java.io.DataInput;
    import java.io.DataOutput;
    import java.io.IOException;

    // Composite key: the natural key (customerID) plus the value to sort on (timestamp).
    public class CompositeKey implements WritableComparable<CompositeKey> {

        private String customerID;
        private long timestamp;

        // Hadoop needs a no-argument constructor to deserialize keys.
        public CompositeKey() {
        }

        public CompositeKey(String customerID, long timestamp) {
            set(customerID, timestamp);
        }

        public void set(String customerID, long timestamp) {
            this.customerID = customerID;
            this.timestamp = timestamp;
        }

        public String getCustomerID() {
            return customerID;
        }

        public long getTimestamp() {
            return timestamp;
        }

        // Custom comparison: by customerID first, then by timestamp.
        @Override
        public int compareTo(CompositeKey o) {
            int comparison = this.customerID.compareTo(o.customerID);
            if (comparison != 0) {
                return comparison;
            }
            return Long.compare(this.timestamp, o.timestamp);
        }

        @Override
        public void write(DataOutput dataOutput) throws IOException {
            dataOutput.writeUTF(this.customerID);
            dataOutput.writeLong(this.timestamp);
        }

        // Fields must be read in the same order they were written.
        @Override
        public void readFields(DataInput dataInput) throws IOException {
            this.customerID = dataInput.readUTF();
            this.timestamp = dataInput.readLong();
        }

        @Override
        public String toString() {
            return customerID + "," + timestamp;
        }
    }
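The `write`/`readFields` pair only works if both sides agree on the field order. The following sketch replays that contract with the standard `java.io` data streams, so it runs without Hadoop. `KeyRoundTripSketch` and its methods are hypothetical helpers for illustration, not part of the original project.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Round trip of the two CompositeKey fields through java.io data streams.
final class KeyRoundTripSketch {

    // Encode the fields in the same order CompositeKey.write uses.
    static byte[] encode(String customerID, long timestamp) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeUTF(customerID);
            out.writeLong(timestamp);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    // Decode in the same order; returns "customerID,timestamp".
    static String decode(byte[] data) {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(data))) {
            String customerID = in.readUTF();
            long timestamp = in.readLong();
            return customerID + "," + timestamp;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        byte[] data = encode("C1001", 1356998400000L);
        // prints 15 bytes -> C1001,1356998400000
        System.out.println(data.length + " bytes -> " + decode(data));
    }
}
```

The 15 bytes are a 2-byte length prefix plus 5 bytes for the ASCII string, followed by the 8-byte long.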

Sort comparator CompositeKeyComparator

Sorts instances of the custom class CompositeKey, by customer-id first and then by timestamp

CompositeKeyComparator code

    package com.deng.MarkovState;

    import org.apache.hadoop.io.WritableComparable;
    import org.apache.hadoop.io.WritableComparator;

    // Sort comparator: orders composite keys by customerID, then by timestamp.
    public class CompositeKeyComparator extends WritableComparator {

        protected CompositeKeyComparator() {
            // true: let WritableComparator create key instances for deserialization
            super(CompositeKey.class, true);
        }

        @Override
        @SuppressWarnings("rawtypes")
        public int compare(WritableComparable w1, WritableComparable w2) {
            CompositeKey key1 = (CompositeKey) w1;
            CompositeKey key2 = (CompositeKey) w2;

            int comparison = key1.getCustomerID().compareTo(key2.getCustomerID());
            if (comparison != 0) {
                return comparison;
            }
            return Long.compare(key1.getTimestamp(), key2.getTimestamp());
        }
    }

Grouping comparator NaturalKeyGroupingComparator

Groups records by customer-id

NaturalKeyGroupingComparator code

    package com.deng.MarkovState;

    import org.apache.hadoop.io.WritableComparable;
    import org.apache.hadoop.io.WritableComparator;

    // Grouping comparator: keys with the same customerID go to the same reduce() call.
    public class NaturalKeyGroupingComparator extends WritableComparator {

        protected NaturalKeyGroupingComparator() {
            super(CompositeKey.class, true);
        }

        @Override
        @SuppressWarnings("rawtypes")
        public int compare(WritableComparable w1, WritableComparable w2) {
            CompositeKey key1 = (CompositeKey) w1;
            CompositeKey key2 = (CompositeKey) w2;
            // The timestamp is deliberately ignored here.
            return key1.getCustomerID().compareTo(key2.getCustomerID());
        }
    }
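The two comparators play different roles, and the difference is easy to miss. As a rough plain-Java sketch (the class `ComparatorRolesSketch` and its `Key` type are hypothetical stand-ins, with no Hadoop dependency), the sort order looks at both fields while the grouping order looks at the customer ID only:

```java
import java.util.Comparator;

// Contrasts the sort order with the grouping order on a stand-in key type.
final class ComparatorRolesSketch {

    // Hypothetical stand-in for CompositeKey.
    static final class Key {
        final String customerID;
        final long timestamp;

        Key(String customerID, long timestamp) {
            this.customerID = customerID;
            this.timestamp = timestamp;
        }
    }

    // Sort order: customer ID first, then timestamp (the role of CompositeKeyComparator).
    static final Comparator<Key> SORT =
            Comparator.comparing((Key k) -> k.customerID).thenComparingLong(k -> k.timestamp);

    // Grouping order: customer ID only (the role of NaturalKeyGroupingComparator).
    static final Comparator<Key> GROUP = Comparator.comparing((Key k) -> k.customerID);

    public static void main(String[] args) {
        Key earlier = new Key("c1", 100L);
        Key later = new Key("c1", 200L);
        System.out.println(SORT.compare(earlier, later) < 0);   // prints true
        System.out.println(GROUP.compare(earlier, later) == 0); // prints true
    }
}
```

Two keys of the same customer are distinct for sorting but equal for grouping, which is why one reduce call sees all of a customer's values in date order.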

Mapper stage task

Produce <customer-id, <timestamp, amount>> key-value pairs; the emitted key is the composite (customer-id, timestamp)

Mapper stage code

    package com.deng.MarkovState;

    import com.deng.util.DateUtil;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    public class SecondarySortProjectionMapper
            extends Mapper<LongWritable, Text, CompositeKey, PairOfLongInt> {

        // Reused across map() calls to avoid allocating one object per record.
        private final CompositeKey reduceKey = new CompositeKey();
        private final PairOfLongInt reduceValue = new PairOfLongInt();

        @Override
        public void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Each line must have exactly 4 comma-separated fields:
            // field 0 is the customer ID, field 2 the date, field 3 the amount.
            String[] tokens = value.toString().split(",");
            if (tokens.length != 4) {
                return; // skip malformed lines
            }

            long date;
            int amount;
            try {
                date = DateUtil.getDateAsMilliSeconds(tokens[2]);
                amount = Integer.parseInt(tokens[3]);
            } catch (Exception e) {
                // Skip the record instead of emitting it with a timestamp of 0.
                return;
            }

            reduceKey.set(tokens[0], date);
            reduceValue.set(date, amount);
            context.write(reduceKey, reduceValue);
        }
    }

The custom class PairOfLongInt is designed as follows

    package com.deng.MarkovState;

    import org.apache.hadoop.io.WritableComparable;

    import java.io.DataInput;
    import java.io.DataOutput;
    import java.io.IOException;

    // Value type: a (timestamp, amount) pair.
    public class PairOfLongInt implements WritableComparable<PairOfLongInt> {

        private long timestamp;
        private int amount;

        public PairOfLongInt() {
        }

        public PairOfLongInt(long timestamp, int amount) {
            set(timestamp, amount);
        }

        public void set(long timestamp, int amount) {
            this.timestamp = timestamp;
            this.amount = amount;
        }

        public long getTimestamp() {
            return timestamp;
        }

        public int getAmount() {
            return amount;
        }

        // Order by timestamp, then by amount.
        @Override
        public int compareTo(PairOfLongInt o) {
            int comparison = Long.compare(this.timestamp, o.timestamp);
            return comparison != 0 ? comparison : Integer.compare(this.amount, o.amount);
        }

        @Override
        public void write(DataOutput dataOutput) throws IOException {
            dataOutput.writeLong(timestamp);
            dataOutput.writeInt(amount);
        }

        @Override
        public void readFields(DataInput dataInput) throws IOException {
            this.timestamp = dataInput.readLong();
            this.amount = dataInput.readInt();
        }

        @Override
        public String toString() {
            return timestamp + "," + amount;
        }
    }

DateUtil is designed as follows

    package com.deng.util;

    import java.text.SimpleDateFormat;
    import java.util.Date;

    public class DateUtil {

        static final String DATE_FORMAT = "yyyy-MM-dd";

        // SimpleDateFormat is not thread-safe; this shared instance assumes single-threaded use.
        static final SimpleDateFormat SIMPLE_DATE_FORMAT = new SimpleDateFormat(DATE_FORMAT);

        // Returns null when the string cannot be parsed.
        public static Date getDate(String dateAsString) {
            try {
                return SIMPLE_DATE_FORMAT.parse(dateAsString);
            } catch (Exception e) {
                return null;
            }
        }

        public static long getDateAsMilliSeconds(String dateAsString) throws Exception {
            Date date = getDate(dateAsString);
            if (date == null) {
                throw new Exception("Unparsable date: " + dateAsString);
            }
            return date.getTime();
        }

        public static String getDateAsString(long timestamp) {
            return SIMPLE_DATE_FORMAT.format(new Date(timestamp));
        }
    }
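As a possible alternative, the same two conversions can be sketched with `java.time`, whose formatters are immutable and thread-safe. This is not the author's implementation: the class name `DateSketch` is hypothetical, and the sketch assumes dates are interpreted in UTC, whereas `SimpleDateFormat` above uses the JVM's default time zone.

```java
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

// java.time variant of the date conversions; assumes UTC (an assumption, see lead-in).
final class DateSketch {

    // ISO_LOCAL_DATE parses and prints the yyyy-MM-dd form, e.g. 2013-01-01.
    private static final DateTimeFormatter FORMAT = DateTimeFormatter.ISO_LOCAL_DATE;

    // Midnight UTC of the given date as epoch milliseconds.
    // Throws DateTimeParseException (unchecked) on malformed input.
    static long toEpochMillis(String date) {
        return LocalDate.parse(date, FORMAT)
                .atStartOfDay(ZoneOffset.UTC)
                .toInstant()
                .toEpochMilli();
    }

    // Inverse conversion: epoch milliseconds back to a yyyy-MM-dd string in UTC.
    static String toDateString(long epochMillis) {
        return Instant.ofEpochMilli(epochMillis)
                .atZone(ZoneOffset.UTC)
                .toLocalDate()
                .format(FORMAT);
    }

    public static void main(String[] args) {
        long millis = toEpochMillis("2013-01-01");
        System.out.println(millis);               // prints 1356998400000
        System.out.println(toDateString(millis)); // prints 2013-01-01
    }
}
```

Fixing the zone makes the timestamps independent of the machine the job runs on, at the cost of differing from local-time results.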

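Reducer stage task

Collect each customer's date-sorted values into one output record of the following form:

customerID ($Date_1$, $Amount_1$); ($Date_2$, $Amount_2$); … ($Date_N$, $Amount_N$)

The reducer code writes this record as a flat comma-separated line: the customer ID followed by alternating date and amount fields.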

Reducer stage code

    package com.deng.MarkovState;

    import com.deng.util.DateUtil;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    public class SecondarySortProjectionReducer
            extends Reducer<CompositeKey, PairOfLongInt, NullWritable, Text> {

        @Override
        public void reduce(CompositeKey key, Iterable<PairOfLongInt> values, Context context)
                throws IOException, InterruptedException {
            // Values arrive in ascending timestamp order thanks to the secondary sort.
            StringBuilder sb = new StringBuilder();
            sb.append(key.getCustomerID());

            for (PairOfLongInt pair : values) {
                sb.append(",");
                sb.append(DateUtil.getDateAsString(pair.getTimestamp()));
                sb.append(",");
                sb.append(pair.getAmount());
            }

            // One output line per customer: customerID,date,amount,date,amount,...
            context.write(NullWritable.get(), new Text(sb.toString()));
        }
    }

The driver for this MapReduce stage is as follows

    package com.deng.MarkovState;

    import com.deng.util.FileUtil;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class MarkovStateDriver {

        public static void main(String[] args) throws Exception {
            // Remove output directories left over from earlier runs.
            FileUtil.deleteDirs("output");
            FileUtil.deleteDirs("output2");
            FileUtil.deleteDirs("MarkovState");

            Configuration conf = new Configuration();
            String[] otherArgs = new String[]{"input/smart_email_training.txt", "output"};

            Job secondSortJob = Job.getInstance(conf, "Markov");
            FileInputFormat.setInputPaths(secondSortJob, new Path(otherArgs[0]));
            FileOutputFormat.setOutputPath(secondSortJob, new Path(otherArgs[1]));

            secondSortJob.setJarByClass(MarkovStateDriver.class);
            secondSortJob.setMapperClass(SecondarySortProjectionMapper.class);
            secondSortJob.setReducerClass(SecondarySortProjectionReducer.class);

            secondSortJob.setMapOutputKeyClass(CompositeKey.class);
            secondSortJob.setMapOutputValueClass(PairOfLongInt.class);
            secondSortJob.setOutputKeyClass(NullWritable.class);
            secondSortJob.setOutputValueClass(Text.class);

            // Sort composite keys by (customerID, timestamp) ...
            secondSortJob.setSortComparatorClass(CompositeKeyComparator.class);
            // ... but group the reducer input by customerID only.
            secondSortJob.setGroupingComparatorClass(NaturalKeyGroupingComparator.class);

            System.exit(secondSortJob.waitForCompletion(true) ? 0 : 1);
        }
    }
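One caveat is worth noting: the driver registers no partitioner. Hadoop's default `HashPartitioner` hashes the whole key through `hashCode()`, and `CompositeKey` as listed does not override `hashCode()`, so with more than one reducer the records of one customer are not guaranteed to reach the same reducer. A common remedy is to partition on the natural key only. The sketch below shows just that partitioning rule in plain Java; `NaturalKeyPartitionSketch` is a hypothetical name. In a real job the rule would live in a subclass of `org.apache.hadoop.mapreduce.Partitioner<CompositeKey, PairOfLongInt>` registered with `job.setPartitionerClass(...)`.

```java
// Partition choice based on the customer ID alone (plain Java, no Hadoop).
final class NaturalKeyPartitionSketch {

    // Same formula HashPartitioner applies, but to the customer ID only:
    // clear the sign bit, then take the remainder by the partition count.
    static int partitionFor(String customerID, int numPartitions) {
        return (customerID.hashCode() & Integer.MAX_VALUE) % numPartitions;
    }

    public static void main(String[] args) {
        // Every record of customer "c1" maps to the same partition,
        // whatever its timestamp is.
        System.out.println(partitionFor("c1", 4) == partitionFor("c1", 4)); // prints true
    }
}
```

With a single reducer the issue does not show up, since every record ends up in the same partition anyway.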

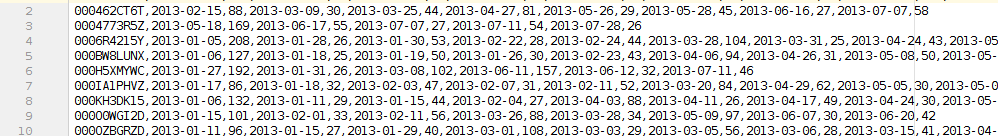

运行结果如下
