Markov model

- sequence of random variables that are not independent

- weather report

- text

Properties

- limited horizon

- $P(X_{t+1}=s_k \mid X_1,\dots,X_t) = P(X_{t+1}=s_k \mid X_t)$ (first order)

- time invariant (stationary)

- $P(X_{t+1}=s_k \mid X_t) = P(X_2=s_k \mid X_1)$, i.e. the transition probabilities do not depend on t
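The two properties can be illustrated with a small sketch. The weather states and all probabilities below are made up for illustration; they are assumptions, not values from these notes. The function scores a state sequence using only the previous state at each step (limited horizon) and reuses one transition table at every step (time invariance).

```python
# Hypothetical two-state weather chain; every number here is illustrative only.
initial = {"sunny": 0.6, "rainy": 0.4}
transition = {  # transition[prev][curr] = P(X_{t+1} = curr | X_t = prev)
    "sunny": {"sunny": 0.8, "rainy": 0.2},
    "rainy": {"sunny": 0.5, "rainy": 0.5},
}

def sequence_probability(states):
    """P(X_1, ..., X_T) under a first-order, stationary Markov chain."""
    prob = initial[states[0]]
    # Limited horizon: each factor conditions only on the previous state.
    # Stationarity: the same transition table is used at every time step.
    for prev, curr in zip(states, states[1:]):
        prob *= transition[prev][curr]
    return prob

print(sequence_probability(["sunny", "sunny", "rainy"]))  # 0.6 * 0.8 * 0.2
```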

Visible MM

Hidden MM

- Motivation

- observing a sequence of symbols

- the sequence of states that led to the generation of the symbols is hidden

- Definition

- Q = sequence of states

- O = sequence of observations, drawn from a vocabulary

- q0,qf = special (start, final) states

- A = state transition probabilities

- B = symbol emission probabilities

- Π = initial state probabilities

- μ = (A, B, Π) = complete probabilistic model

An HMM is used to model both state sequences and observation sequences.

Generative algorithm

- pick the start state from Π

- for t = 1…T

- move to another state based on A

- emit an observation based on B
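The generative steps above can be sketched as a small sampler. The initial and transition probabilities are the ones used in the example in these notes; the emission table is an assumed placeholder, and `sample_hmm` is a hypothetical helper name.

```python
import random

states = ["A", "B"]
initial = {"A": 1.0, "B": 0.0}
transition = {  # transition[prev][next] = P(next | prev)
    "A": {"A": 0.8, "B": 0.2},
    "B": {"A": 0.6, "B": 0.4},
}
# ASSUMPTION: placeholder emission probabilities, not taken from the notes.
emission = {
    "A": {"x": 0.7, "y": 0.2, "z": 0.1},
    "B": {"x": 0.4, "y": 0.3, "z": 0.3},
}

def sample_hmm(length, rng):
    """Sample `length` (state, observation) pairs following the steps above."""
    # Pick the start state from the initial distribution.
    current = rng.choices(states, weights=[initial[s] for s in states])[0]
    path, observations = [], []
    for _ in range(length):
        # Move to the next state according to the transition probabilities.
        current = rng.choices(
            states, weights=[transition[current][s] for s in states]
        )[0]
        # Emit an observation according to the emission probabilities.
        symbols = list(emission[current])
        symbol = rng.choices(
            symbols, weights=[emission[current][o] for o in symbols]
        )[0]
        path.append(current)
        observations.append(symbol)
    return path, observations

path, observations = sample_hmm(5, random.Random(0))
print(path, observations)
```

Passing an explicit `random.Random` instance keeps the sketch reproducible; in this reading of the steps the start state itself emits nothing.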

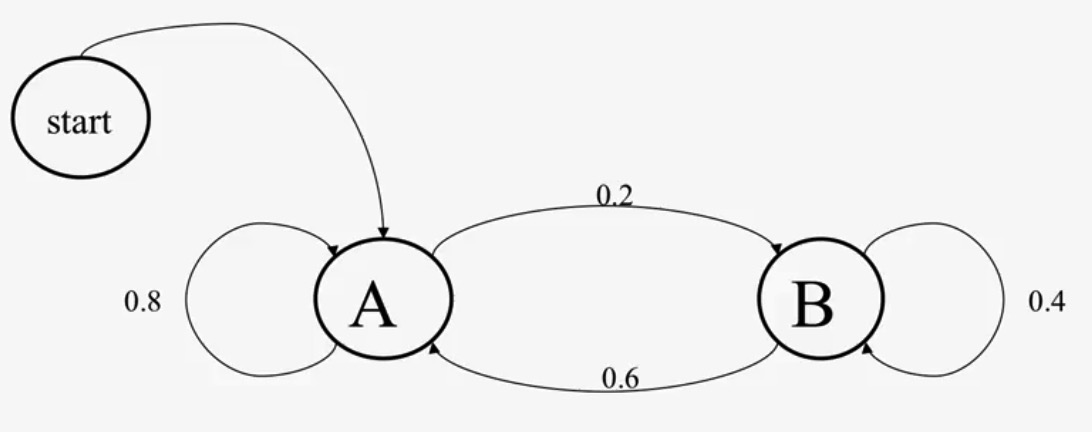

Example

State probability

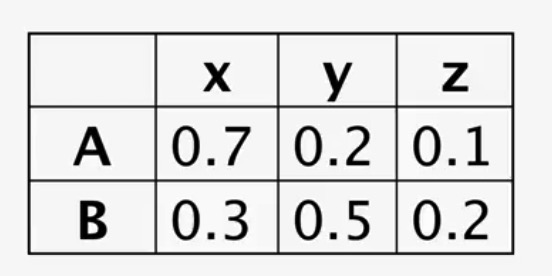

Emission probability

Initial

- P(A∣start) = 1.0

- P(B∣start) = 0.0

Transition

- P(A∣A) = 0.8

- P(A∣B) = 0.6

- P(B∣A) = 0.2

- P(B∣B) = 0.4

Emission: see previous table

Suppose we observe the sequence “yz”.

- Possible sequences of states:

- AA

- AB

- BA

- BB

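Summing the joint probability of the observations over all of these state sequences gives the probability of the observation sequence. The same procedure works for any sequence.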
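A minimal brute-force sketch of this enumeration for “yz” follows. It assumes the start state is drawn from the initial distribution and emits nothing, matching the generative steps earlier in these notes. The emission table is an assumed placeholder, so the printed numbers only illustrate the mechanics.

```python
from itertools import product

states = ["A", "B"]
initial = {"A": 1.0, "B": 0.0}
transition = {  # transition[prev][next] = P(next | prev)
    "A": {"A": 0.8, "B": 0.2},
    "B": {"A": 0.6, "B": 0.4},
}
# ASSUMPTION: placeholder emission probabilities, not taken from the notes.
emission = {
    "A": {"x": 0.7, "y": 0.2, "z": 0.1},
    "B": {"x": 0.4, "y": 0.3, "z": 0.3},
}

def joint_probability(path, observations):
    """P(observations, path), summed over the non-emitting start state."""
    total = 0.0
    for start in states:
        prob = initial[start]
        prev = start
        for state, symbol in zip(path, observations):
            prob *= transition[prev][state] * emission[state][symbol]
            prev = state
        total += prob
    return total

def brute_force_likelihood(observations):
    """Joint probability of the observations with every possible state path."""
    return {
        "".join(path): joint_probability(path, observations)
        for path in product(states, repeat=len(observations))
    }

per_path = brute_force_likelihood("yz")
likelihood = sum(per_path.values())
for name, prob in per_path.items():
    print(name, prob)
print("P(yz) =", likelihood)
```

The number of paths grows as (number of states)^(sequence length), so this is only practical for very short sequences.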

States and transitions

states:

- the states encode the most recent history

- the transitions encode likely sequences of states

- use MLE to estimate the transition probabilities

emissions:

- the emission probabilities also have to be estimated

- it is possible to use standard smoothing and heuristic methods
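As a rough sketch of the estimation step, the code below counts transitions and emissions in a tiny tagged corpus. The corpus, the tag names, the `<s>` start marker and the choice of add-one smoothing are all assumptions made for illustration; the notes do not specify a particular smoothing method.

```python
from collections import Counter

# Hypothetical toy corpus of (word, tag) pairs; purely illustrative.
tagged_sentences = [
    [("the", "DET"), ("dog", "NOUN"), ("barks", "VERB")],
    [("the", "DET"), ("cat", "NOUN"), ("sleeps", "VERB")],
    [("a", "DET"), ("dog", "NOUN"), ("sleeps", "VERB")],
]

transition_counts = Counter()  # (previous tag, tag) -> count
previous_counts = Counter()    # previous tag -> count
emission_counts = Counter()    # (tag, word) -> count
tag_counts = Counter()         # tag -> count
vocabulary = set()

for sentence in tagged_sentences:
    previous = "<s>"  # assumed start-of-sentence marker
    for word, tag in sentence:
        transition_counts[(previous, tag)] += 1
        previous_counts[previous] += 1
        emission_counts[(tag, word)] += 1
        tag_counts[tag] += 1
        vocabulary.add(word)
        previous = tag

def transition_mle(previous, tag):
    """Maximum-likelihood estimate of P(tag | previous tag)."""
    if previous_counts[previous] == 0:
        return 0.0
    return transition_counts[(previous, tag)] / previous_counts[previous]

def emission_add_one(tag, word):
    """Add-one smoothed P(word | tag), with one extra slot for unseen words."""
    return (emission_counts[(tag, word)] + 1) / (
        tag_counts[tag] + len(vocabulary) + 1
    )

print(transition_mle("DET", "NOUN"))
print(emission_add_one("NOUN", "dog"))
print(emission_add_one("NOUN", "unicorn"))  # unseen word still gets mass
```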

Sequence of observations

- observers can only see the emitted symbols

- observation likelihood

- given the observation sequence S and the model μ, what is the probability P(S∣μ) that the sequence was generated by that model?

- this turns the HMM into a language model
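One standard way to compute the observation likelihood without enumerating every state sequence is the forward algorithm, which these notes do not name explicitly. The sketch below uses the initial and transition probabilities from the example; the emission table is an assumed placeholder, and the start state is treated as non-emitting.

```python
states = ["A", "B"]
initial = {"A": 1.0, "B": 0.0}
transition = {  # transition[prev][next] = P(next | prev)
    "A": {"A": 0.8, "B": 0.2},
    "B": {"A": 0.6, "B": 0.4},
}
# ASSUMPTION: placeholder emission probabilities, not taken from the notes.
emission = {
    "A": {"x": 0.7, "y": 0.2, "z": 0.1},
    "B": {"x": 0.4, "y": 0.3, "z": 0.3},
}

def forward_likelihood(observations):
    """P(observations | model), summing over all hidden state sequences."""
    # alpha[s] = P(observations so far, current state = s).
    # Before anything is observed it is just the start distribution.
    alpha = dict(initial)
    for symbol in observations:
        alpha = {
            s: sum(alpha[p] * transition[p][s] for p in states)
            * emission[s][symbol]
            for s in states
        }
    return sum(alpha.values())

print(forward_likelihood("yz"))
```

Each step costs on the order of (number of states)^2 operations, so the total work is linear in the length of the observation sequence.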

Tasks with HMM

- tasks

- Given μ = (A, B, Π), find the distribution P(O∣μ)

- Given O and μ, what is the most likely state sequence $(X_1, X_2, \dots, X_T)$?

- Given O and a space of all possible μ, find the best μ

- decoding

- tag each token with a label

Inference

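- find the most likely tag, given the word

- $t^* = \arg\max_t P(t \mid w)$

- given the model μ, we can find the best tag sequence $\{t_i^*\}_{i=1}^{n}$ for the word sequence $\{w_i\}_{i=1}^{n}$

- enumerating every combination is infeasible: with N possible tags and n words there are N^n candidate tag sequences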

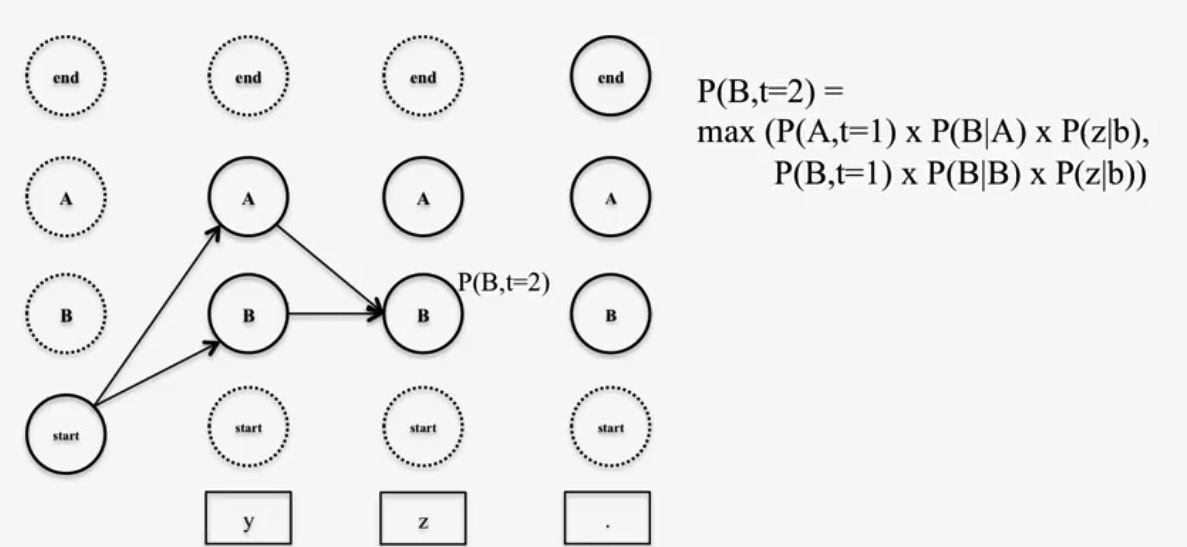

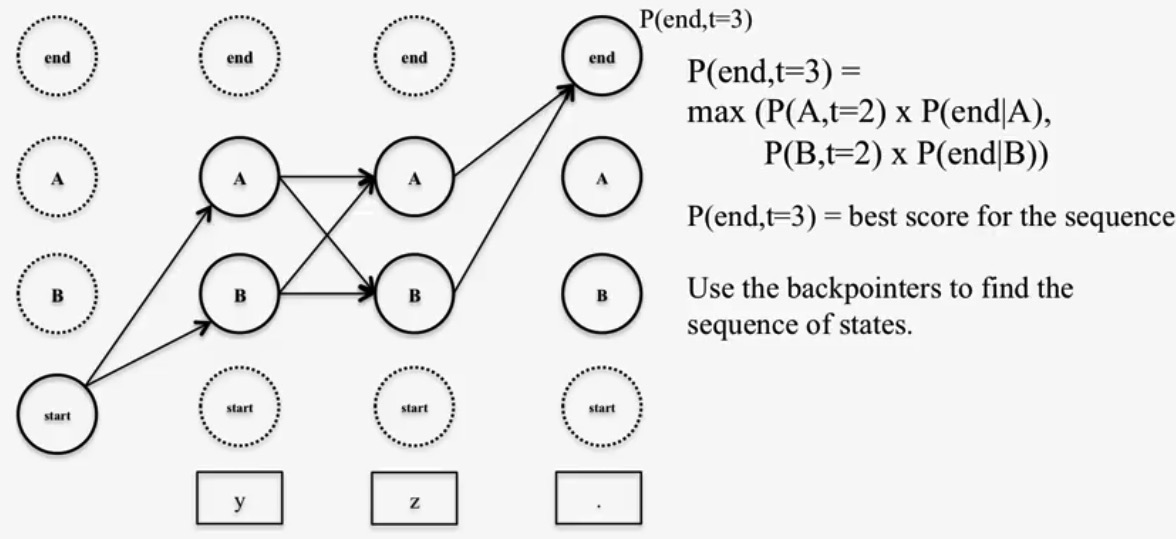

Viterbi algorithm

Find the best path up to observation i that ends in state s (the partial best path). Once i reaches the end of the string, the best of these partial paths is the best path for the whole sentence.

- dynamic programming

- memoization

- backpointers
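The three ingredients above can be put together in a short sketch of Viterbi decoding. It reuses the initial and transition probabilities from the example; the emission table is an assumed placeholder, the start state is treated as non-emitting, and `viterbi` is simply the name chosen here.

```python
states = ["A", "B"]
initial = {"A": 1.0, "B": 0.0}
transition = {  # transition[prev][next] = P(next | prev)
    "A": {"A": 0.8, "B": 0.2},
    "B": {"A": 0.6, "B": 0.4},
}
# ASSUMPTION: placeholder emission probabilities, not taken from the notes.
emission = {
    "A": {"x": 0.7, "y": 0.2, "z": 0.1},
    "B": {"x": 0.4, "y": 0.3, "z": 0.3},
}

def viterbi(observations):
    """Return (best state path, its joint probability with the observations)."""
    if not observations:
        return [], 1.0
    # best[s] = probability of the best partial path that ends in state s.
    best = dict(initial)
    backpointers = []
    for symbol in observations:
        new_best, pointers = {}, {}
        for s in states:
            # Memoized scores of the previous step are reused, not recomputed.
            prev = max(states, key=lambda p: best[p] * transition[p][s])
            new_best[s] = best[prev] * transition[prev][s] * emission[s][symbol]
            pointers[s] = prev
        best = new_best
        backpointers.append(pointers)
    # Follow the backpointers from the best final state.
    last = max(states, key=lambda s: best[s])
    path = [last]
    # The first set of pointers leads to the non-emitting start state: skip it.
    for pointers in reversed(backpointers[1:]):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best[last]

print(viterbi("yz"))
```

Replacing the sum over previous states with a max is the only change relative to computing the total observation likelihood; the backpointers are what allow the best path to be recovered at the end.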

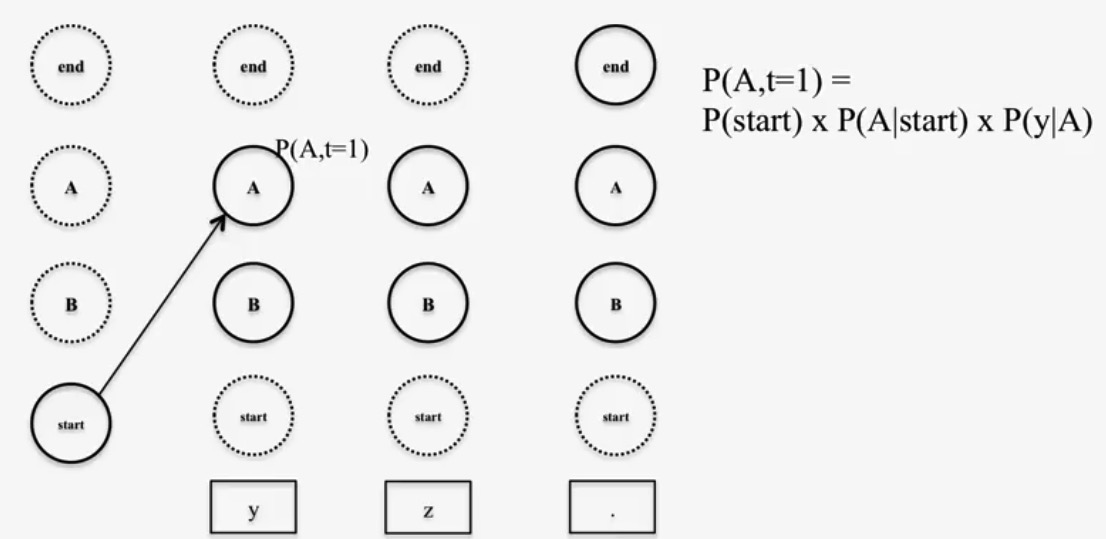

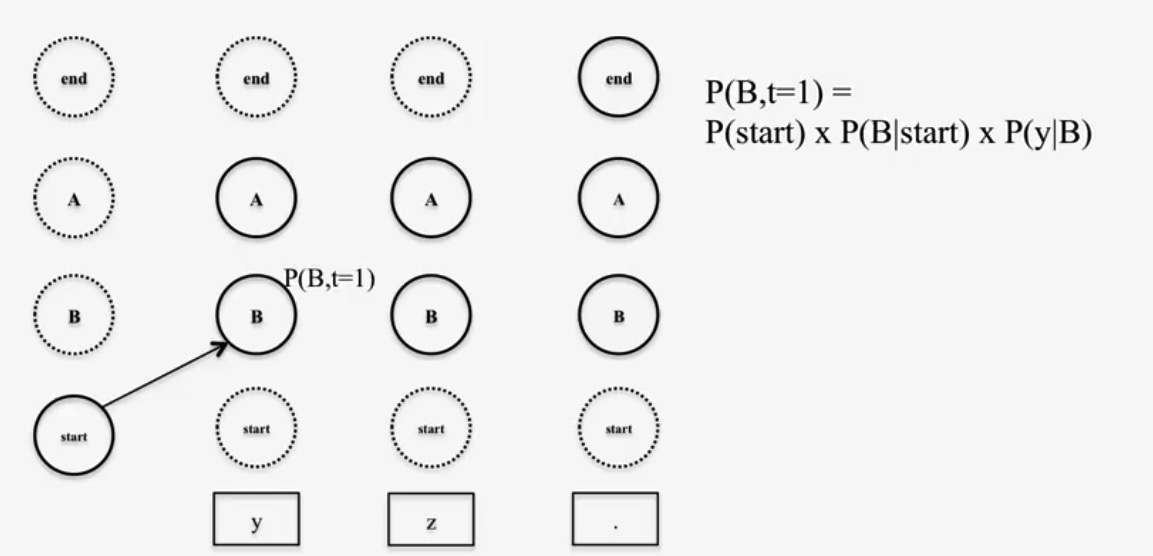

Initial state

First, we calculate the probability of each state at t=1.

Next, say we want to calculate P(B, t=2); P(A, t=2) is calculated in the same way.

Keeping the maximum at each step gives the best partial path and the best sequence of states so far.

Finally, we obtain the best state sequence for all the observations.
