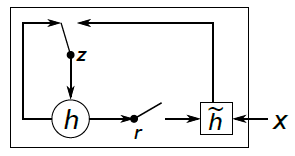

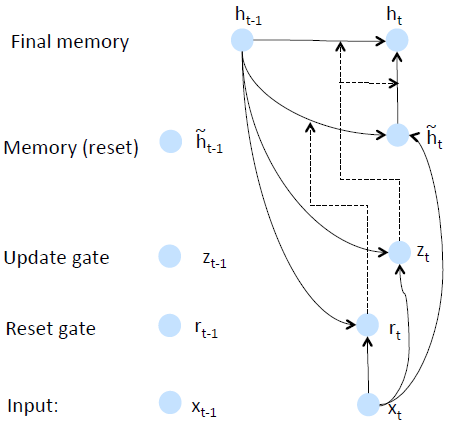

Illustration:

- update gate

$$u_t = \sigma(W_{ux} x_t + W_{uh} h_{t-1})$$

The update gate $u_t$ controls how much of the past state should matter now. If $u_t$ is close to 1, the unit copies its information through many time steps, which reduces the vanishing-gradient problem.

- reset gate

$$r_t = \sigma(W_{rx} x_t + W_{rh} h_{t-1})$$

If the reset gate $r_t$ is close to 0, the previous hidden state is ignored. This allows the model to drop information that is irrelevant for the future.

- new memory content

$$\tilde{h}_t = \tanh\left(W_{\tilde{h}x} x_t + r_t \circ (W_{\tilde{h}h} h_{t-1})\right)$$

- final memory at time step $t$ combines the current and previous time steps:

$$h_t = u_t \circ h_{t-1} + (1 - u_t) \circ \tilde{h}_t$$
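To make the four equations concrete, here is a minimal pure-Python sketch of one GRU step. It assumes no bias terms (the equations above have none) and weights stored as plain lists of rows. The names `gru_step`, `make_params`, and the parameter keys `Wux`, `Wuh`, `Wrx`, `Wrh`, `Whx`, `Whh` are hypothetical, chosen for illustration only, and are not any library's API. The second demo forces the update gate toward 1 and checks that the hidden state is carried almost unchanged across 50 steps, which is the copying behavior described under the update gate.

```python
import math
import random

# Hypothetical helpers for illustration; matrices are lists of rows (one row per hidden unit).

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def matvec(W, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def make_params(input_dim, hidden_dim, seed=0, scale=0.5):
    """Random weights in [-scale, scale]; no biases, matching the equations."""
    rng = random.Random(seed)
    def mat(rows, cols):
        return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]
    return {
        "Wux": mat(hidden_dim, input_dim), "Wuh": mat(hidden_dim, hidden_dim),
        "Wrx": mat(hidden_dim, input_dim), "Wrh": mat(hidden_dim, hidden_dim),
        "Whx": mat(hidden_dim, input_dim), "Whh": mat(hidden_dim, hidden_dim),
    }

def gru_step(x_t, h_prev, params):
    """One forward GRU step (no gradients) following the four equations."""
    Wux, Wuh, Wrx, Wrh, Whx, Whh = (
        params[k] for k in ("Wux", "Wuh", "Wrx", "Wrh", "Whx", "Whh")
    )
    # update gate: u_t = sigma(W_ux x_t + W_uh h_{t-1})
    u = [sigmoid(a + b) for a, b in zip(matvec(Wux, x_t), matvec(Wuh, h_prev))]
    # reset gate: r_t = sigma(W_rx x_t + W_rh h_{t-1})
    r = [sigmoid(a + b) for a, b in zip(matvec(Wrx, x_t), matvec(Wrh, h_prev))]
    # new memory content: h~_t = tanh(W_hx x_t + r_t * (W_hh h_{t-1})), elementwise r_t
    h_tilde = [
        math.tanh(a + ri * b)
        for a, ri, b in zip(matvec(Whx, x_t), r, matvec(Whh, h_prev))
    ]
    # final memory: h_t = u_t * h_{t-1} + (1 - u_t) * h~_t, all elementwise
    return [ui * hp + (1.0 - ui) * ht for ui, hp, ht in zip(u, h_prev, h_tilde)]

if __name__ == "__main__":
    # Run a short sequence through a randomly initialized cell.
    params = make_params(input_dim=2, hidden_dim=3)
    h = [0.0, 0.0, 0.0]
    for x in ([1.0, 0.0], [0.0, 1.0], [0.5, -0.5]):
        h = gru_step(x, h, params)
    print(h)  # every entry stays in (-1, 1): a convex mix of h_{t-1} and tanh output

    # Force u_t close to 1: large input weights into the update gate, W_uh = 0.
    sat = make_params(2, 3, seed=1)
    sat["Wux"] = [[10.0, 10.0] for _ in range(3)]
    sat["Wuh"] = [[0.0] * 3 for _ in range(3)]
    h0 = [0.3, -0.2, 0.7]
    h = h0
    for _ in range(50):
        h = gru_step([1.0, 1.0], h, sat)
    print(max(abs(a - b) for a, b in zip(h, h0)))  # tiny: the state is copied forward
```

Because $h_t$ is an elementwise convex combination of $h_{t-1}$ and $\tilde{h}_t$, a state that starts in $(-1, 1)$ stays there. A practical implementation would batch these operations with a matrix library and usually adds bias vectors; frameworks also differ in whether the reset gate is applied before or after the multiplication by $W_{\tilde{h}h}$.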
