~~有兴趣的小伙伴,加kinect算法交流群:462964980。

1, 打开骨骼帧的方式

对于V1,

- 方法NuiSkeletonTrackingEnable实现

- m_hNextSkeletonEvent = CreateEvent(NULL, TRUE, FALSE, NULL );

- hr =m_PNuiSensor->NuiSkeletonTrackingEnable(

- m_hNextSkeletonEvent,

- NUI_SKELETON_TRACKING_FLAG_ENABLE_IN_NEAR_RANGE//|

- );

- if( FAILED( hr ) )

- {

- cout<<"Couldnot open skeleton stream video"<<endl;

- return hr;

- }

对于V2

- // Initialize the Kinect andget coordinate mapper and the body reader

- IBodyFrameSource* pBodyFrameSource = NULL;

- hr = m_pKinectSensor->Open();

- if (SUCCEEDED(hr))

- {

- hr = m_pKinectSensor->get_CoordinateMapper(&m_pCoordinateMapper);

- }

- 方法get_CoordinateMapper得到坐标映射

- if (SUCCEEDED(hr))

- {

- hr =m_pKinectSensor->get_BodyFrameSource(&pBodyFrameSource);

- }

- 方法get_BodyFrameSource得到骨骼帧源

- if (SUCCEEDED(hr))

- {

- hr =pBodyFrameSource->OpenReader(&m_pBodyFrameReader);

- }

- 方法get_BodyFrameSource打开骨骼帧读取器

- SafeRelease(pBodyFrameSource);

- }

2,更新骨骼帧方式

对于V1,方法NuiSkeletonGetNextFrame实现

- NUI_SKELETON_FRAMESkeletonFrame;//骨骼帧的定义

- bool bFoundSkeleton = false;

- if(SUCCEEDED(NuiSkeletonGetNextFrame( 0, &SkeletonFrame )) )//Get the next frameof skeleton data.直接从kinect中提取骨骼帧

对于V2,

- if (!m_pBodyFrameReader)

- {

- return;

- }

- <pre name="code" class="cpp"> //更新骨骼帧

- //更新骨骼数据

- hResult = pBodyFrame->GetAndRefreshBodyData( BODY_COUNT, pBody );

3,画骨架方式:

对于V1,主要用opencv辅助来画,用到cvLine方法

例如左上肢的实现为:

- //左上肢

- if((pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].x!=0 ||pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].y!=0) &&

- (pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].x!=0 ||pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].y!=0))

- cvLine(SkeletonImage, pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER],pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT], color, 2);

- if((pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].x!=0 ||pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].y!=0) &&

- (pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].x!=0|| pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].y!=0))

- cvLine(SkeletonImage,pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT],pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT], color, 2);

- if((pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].x!=0 ||pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].y!=0) &&

- (pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].x!=0 ||pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].y!=0))

- cvLine(SkeletonImage,pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT],pointSet[NUI_SKELETON_POSITION_WRIST_LEFT], color, 2);

- if((pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].x!=0 ||pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].y!=0) &&

- (pointSet[NUI_SKELETON_POSITION_HAND_LEFT].x!=0 ||pointSet[NUI_SKELETON_POSITION_HAND_LEFT].y!=0))

- cvLine(SkeletonImage,pointSet[NUI_SKELETON_POSITION_WRIST_LEFT],pointSet[NUI_SKELETON_POSITION_HAND_LEFT], color, 2);

对于V2,主要借助Direct2D微软的图形图像API,具体详细可以查阅资料。。当然也可以转换为用opencv来画。下面用OpenCV2.4.10中drawing functions 里边的line()函数:

- line(SkeletonImage,pointSet[joint0], pointSet[joint1], color, 2);

4,V2+VS2012+OpenCV代码

- #include <Windows.h>

- #include <Kinect.h>

- #include <opencv2/opencv.hpp>

- using namespace std;

- using namespace cv;

- //释放接口需要自己定义

- template<class Interface>

- inline void SafeRelease( Interface *& pInterfaceToRelease )

- {

- if( pInterfaceToRelease != NULL ){

- pInterfaceToRelease->Release();

- pInterfaceToRelease = NULL;

- }

- }

- void DrawBone( Mat& SkeletonImage, CvPoint pointSet[], const Joint* pJoints, int whichone, JointType joint0, JointType joint1);

- void drawSkeleton( Mat& SkeletonImage, CvPoint pointSet[],const Joint* pJoints, int whichone);

- int main( int argc, char **argv[] )

- {

- //OpenCV中开启CPU的硬件指令优化功能函数

- setUseOptimized( true );

- // Sensor

- IKinectSensor* pSensor;

- HRESULT hResult = S_OK;

- hResult = GetDefaultKinectSensor( &pSensor );

- if( FAILED( hResult ) ){

- std::cerr << "Error : GetDefaultKinectSensor" << std::endl;

- return -1;

- }

- hResult = pSensor->Open( );

- if( FAILED( hResult ) ){

- std::cerr << "Error : IKinectSensor::Open()" << std::endl;

- return -1;

- }

- //Source

- IColorFrameSource* pColorSource;

- hResult = pSensor->get_ColorFrameSource( &pColorSource );

- if( FAILED( hResult ) ){

- std::cerr << "Error : IKinectSensor::get_ColorFrameSource()" << std::endl;

- return -1;

- }

- IBodyFrameSource* pBodySource;

- hResult = pSensor->get_BodyFrameSource( &pBodySource );

- if( FAILED( hResult ) ){

- std::cerr << "Error : IKinectSensor::get_BodyFrameSource()" << std::endl;

- return -1;

- }

- // Reader

- IColorFrameReader* pColorReader;

- hResult = pColorSource->OpenReader( &pColorReader );

- if( FAILED( hResult ) ){

- std::cerr << "Error : IColorFrameSource::OpenReader()" << std::endl;

- return -1;

- }

- IBodyFrameReader* pBodyReader;

- hResult = pBodySource->OpenReader( &pBodyReader );

- if( FAILED( hResult ) ){

- std::cerr << "Error : IBodyFrameSource::OpenReader()" << std::endl;

- return -1;

- }

- // Description

- IFrameDescription* pDescription;

- hResult = pColorSource->get_FrameDescription( &pDescription );

- if( FAILED( hResult ) ){

- std::cerr << "Error : IColorFrameSource::get_FrameDescription()" << std::endl;

- return -1;

- }

- int width = 0;

- int height = 0;

- pDescription->get_Width( &width ); // 1920

- pDescription->get_Height( &height ); // 1080

- unsigned int bufferSize = width * height * 4 * sizeof( unsigned char );

- cv::Mat bufferMat( height, width, CV_8UC4 );

- cv::Mat bodyMat( height / 2, width / 2, CV_8UC4 );

- cv::namedWindow( "Body" );

- // Color Table

- cv::Vec3b color[BODY_COUNT];

- color[0] = cv::Vec3b( 255, 0, 0 );

- color[1] = cv::Vec3b( 0, 255, 0 );

- color[2] = cv::Vec3b( 0, 0, 255 );

- color[3] = cv::Vec3b( 255, 255, 0 );

- color[4] = cv::Vec3b( 255, 0, 255 );

- color[5] = cv::Vec3b( 0, 255, 255 );

- // Coordinate Mapper

- ICoordinateMapper* pCoordinateMapper;

- hResult = pSensor->get_CoordinateMapper( &pCoordinateMapper );

- if( FAILED( hResult ) ){

- std::cerr << "Error : IKinectSensor::get_CoordinateMapper()" << std::endl;

- return -1;

- }

- while(1){

- // Frame

- IColorFrame* pColorFrame = nullptr;

- hResult = pColorReader->AcquireLatestFrame( &pColorFrame );

- if( SUCCEEDED( hResult ) ){

- hResult = pColorFrame->CopyConvertedFrameDataToArray( bufferSize, reinterpret_cast<BYTE*>( bufferMat.data ), ColorImageFormat::ColorImageFormat_Bgra );

- if( SUCCEEDED( hResult ) ){

- cv::resize( bufferMat, bodyMat, cv::Size(), 0.5, 0.5 );

- }

- }

- //更新骨骼帧

- IBodyFrame* pBodyFrame = nullptr;

- hResult = pBodyReader->AcquireLatestFrame( &pBodyFrame );

- if( SUCCEEDED( hResult ) ){

- IBody* pBody[BODY_COUNT] = { 0 };

- //更新骨骼数据

- hResult = pBodyFrame->GetAndRefreshBodyData( BODY_COUNT, pBody );

- if( SUCCEEDED( hResult ) ){

- for( int count = 0; count < BODY_COUNT; count++ ){

- BOOLEAN bTracked = false;

- hResult = pBody[count]->get_IsTracked( &bTracked );

- if( SUCCEEDED( hResult ) && bTracked ){

- Joint joint[JointType::JointType_Count];

- hResult = pBody[ count ]->GetJoints( JointType::JointType_Count, joint );

- if( SUCCEEDED( hResult ) ){

- // Left Hand State

- HandState leftHandState = HandState::HandState_Unknown;

- hResult = pBody[count]->get_HandLeftState( &leftHandState );

- if( SUCCEEDED( hResult ) ){

- ColorSpacePoint colorSpacePoint = { 0 };

- hResult = pCoordinateMapper->MapCameraPointToColorSpace( joint[JointType::JointType_HandLeft].Position, &colorSpacePoint );

- if( SUCCEEDED( hResult ) ){

- int x = static_cast<int>( colorSpacePoint.X );

- int y = static_cast<int>( colorSpacePoint.Y );

- if( ( x >= 0 ) && ( x < width ) && ( y >= 0 ) && ( y < height ) ){

- if( leftHandState == HandState::HandState_Open ){

- cv::circle( bufferMat, cv::Point( x, y ), 75, cv::Scalar( 0, 128, 0 ), 5, CV_AA );

- }

- else if( leftHandState == HandState::HandState_Closed ){

- cv::circle( bufferMat, cv::Point( x, y ), 75, cv::Scalar( 0, 0, 128 ), 5, CV_AA );

- }

- else if( leftHandState == HandState::HandState_Lasso ){

- cv::circle( bufferMat, cv::Point( x, y ), 75, cv::Scalar( 128, 128, 0 ), 5, CV_AA );

- }

- }

- }

- }

- // Right Hand State

- HandState rightHandState = HandState::HandState_Unknown;

- hResult = pBody[count]->get_HandRightState( &rightHandState );

- if( SUCCEEDED( hResult ) ){

- ColorSpacePoint colorSpacePoint = { 0 };

- hResult = pCoordinateMapper->MapCameraPointToColorSpace( joint[JointType::JointType_HandRight].Position, &colorSpacePoint );

- if( SUCCEEDED( hResult ) ){

- int x = static_cast<int>( colorSpacePoint.X );

- int y = static_cast<int>( colorSpacePoint.Y );

- if( ( x >= 0 ) && ( x < width ) && ( y >= 0 ) && ( y < height ) ){

- if( rightHandState == HandState::HandState_Open ){

- cv::circle( bufferMat, cv::Point( x, y ), 75, cv::Scalar( 0, 128, 0 ), 5, CV_AA );

- }

- else if( rightHandState == HandState::HandState_Closed ){

- cv::circle( bufferMat, cv::Point( x, y ), 75, cv::Scalar( 0, 0, 128 ), 5, CV_AA );

- }

- else if( rightHandState == HandState::HandState_Lasso ){

- cv::circle( bufferMat, cv::Point( x, y ), 75, cv::Scalar( 128, 128, 0 ), 5, CV_AA );

- }

- }

- }

- }

- CvPoint skeletonPoint[BODY_COUNT][JointType_Count]={cvPoint(0,0)};

- // Joint

- for( int type = 0; type < JointType::JointType_Count; type++ ){

- ColorSpacePoint colorSpacePoint = { 0 };

- pCoordinateMapper->MapCameraPointToColorSpace( joint[type].Position, &colorSpacePoint );

- int x = static_cast<int>( colorSpacePoint.X );

- int y = static_cast<int>( colorSpacePoint.Y );

- skeletonPoint[count][type].x = x;

- skeletonPoint[count][type].y = y;

- if( ( x >= 0 ) && ( x < width ) && ( y >= 0 ) && ( y < height ) ){

- cv::circle( bufferMat, cv::Point( x, y ), 5, static_cast< cv::Scalar >( color[count] ), -1, CV_AA );

- }

- }

- //画骨骼

- drawSkeleton( bufferMat, skeletonPoint[count], joint,count);

- }

- //// Lean

- //PointF amount;

- //hResult = pBody[count]->get_Lean( &amount );

- //if( SUCCEEDED( hResult ) ){

- // std::cout << "amount : " << amount.X << ", " << amount.Y << std::endl;

- //}

- }

- }

- cv::resize( bufferMat, bodyMat, cv::Size(), 0.5, 0.5 );

- }

- for( int count = 0; count < BODY_COUNT; count++ ){

- SafeRelease( pBody[count] );

- }

- }

- SafeRelease( pColorFrame );

- SafeRelease( pBodyFrame );

- waitKey(1);

- cv::imshow( "Body", bodyMat );

- }

- SafeRelease( pColorSource );

- SafeRelease( pColorReader );

- SafeRelease( pDescription );

- SafeRelease(pBodySource);

- // done with body frame reader

- SafeRelease(pBodyReader);

- SafeRelease( pDescription );

- // done with coordinate mapper

- SafeRelease(pCoordinateMapper);

- if( pSensor ){

- pSensor->Close();

- }

- SafeRelease( pSensor );

- return 0;

- }

- void DrawBone( Mat& SkeletonImage, CvPoint pointSet[], const Joint* pJoints, int whichone, JointType joint0, JointType joint1)

- {

- TrackingState joint0State = pJoints[joint0].TrackingState;

- TrackingState joint1State = pJoints[joint1].TrackingState;

- // If we can't find either of these joints, exit

- if ((joint0State == TrackingState_NotTracked) || (joint1State == TrackingState_NotTracked))

- {

- return;

- }

- // Don't draw if both points are inferred

- if ((joint0State == TrackingState_Inferred) && (joint1State == TrackingState_Inferred))

- {

- return;

- }

- CvScalar color;

- switch(whichone) //跟踪不同的人显示不同的颜色

- {

- case 0:

- color = cvScalar(255);

- break;

- case 1:

- color = cvScalar(0,255);

- break;

- case 2:

- color = cvScalar(0, 0, 255);

- break;

- case 3:

- color = cvScalar(255, 255, 0);

- break;

- case 4:

- color = cvScalar(255, 0, 255);

- break;

- case 5:

- color = cvScalar(0, 255, 255);

- break;

- }

- // We assume all drawn bones are inferred unless BOTH joints are tracked

- if ((joint0State == TrackingState_Tracked) && (joint1State == TrackingState_Tracked))

- {

- line(SkeletonImage,pointSet[joint0], pointSet[joint1], color, 2);

- }

- else

- {

- line(SkeletonImage,pointSet[joint0], pointSet[joint1], color, 2);

- }

- }

- void drawSkeleton( Mat& SkeletonImage, CvPoint pointSet[],const Joint* pJoints, int whichone)

- {

- // Draw the bones

- // Torso

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_Head, JointType_Neck);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_Neck, JointType_SpineShoulder);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_SpineShoulder, JointType_SpineMid);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_SpineMid, JointType_SpineBase);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_SpineShoulder, JointType_ShoulderRight);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_SpineShoulder, JointType_ShoulderLeft);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_SpineBase, JointType_HipRight);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_SpineBase, JointType_HipLeft);

- // Right Arm

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_ShoulderRight, JointType_ElbowRight);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_ElbowRight, JointType_WristRight);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_WristRight, JointType_HandRight);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_HandRight, JointType_HandTipRight);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_WristRight, JointType_ThumbRight);

- // Left Arm

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_ShoulderLeft, JointType_ElbowLeft);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_ElbowLeft, JointType_WristLeft);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_WristLeft, JointType_HandLeft);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_HandLeft, JointType_HandTipLeft);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_WristLeft, JointType_ThumbLeft);

- // Right Leg

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_HipRight, JointType_KneeRight);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_KneeRight, JointType_AnkleRight);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_AnkleRight, JointType_FootRight);

- // Left Leg

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_HipLeft, JointType_KneeLeft);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_KneeLeft, JointType_AnkleLeft);

- DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_AnkleLeft, JointType_FootLeft);

- }

代码注解:主要定义一个DrawBone()函数来画骨架,而骨骼点的画出用的OpenCV的circle()函数实现。

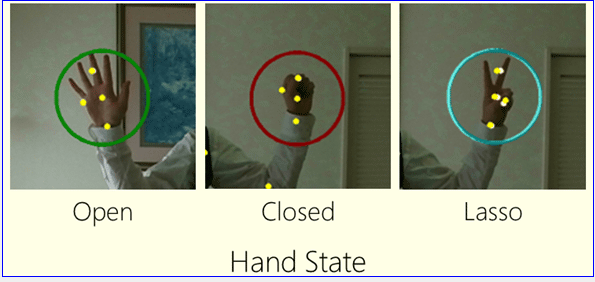

SDK自带的三种手势识别

欢迎拍砖指正!

本文介绍了如何在Kinect V1和V2中打开和更新骨骼帧,以及使用OpenCV绘制骨骼数据。通过对比V1的NuiSkeletonGetNextFrame方法和V2的BodyFrameSource接口,展示了V2如何利用Direct2D或OpenCV进行骨骼绘制。此外,还提供了V2与VS2012及OpenCV结合的代码示例,演示了如何获取和处理骨骼数据并实时显示。

本文介绍了如何在Kinect V1和V2中打开和更新骨骼帧,以及使用OpenCV绘制骨骼数据。通过对比V1的NuiSkeletonGetNextFrame方法和V2的BodyFrameSource接口,展示了V2如何利用Direct2D或OpenCV进行骨骼绘制。此外,还提供了V2与VS2012及OpenCV结合的代码示例,演示了如何获取和处理骨骼数据并实时显示。

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?