反向传播整体代码

void backPropagation(int a, struct result * data, struct parameter * parameter1) //反向传播batch梯度下降更新参数函数

/*

本函数中bias结尾的变量代表每一层的梯度(用于计算传播到下一层的梯度),

wbias结尾的变量代表每一层网络参数的梯度(用于更新参数),relubias结尾的变量代表反向传播到激活层的梯度,

其中层数已经用first等序数词注明。本网络结构是两个通道的卷积加三层全连接,每个通道有三层卷积层,无池化层,

采用的是3*3卷积核,一共六个。全连接尺寸是1152,180,45,10,最后接上softmax输出十个概率判别值。

*/

{

double * outbias;

outbias = (double * ) malloc(10 * sizeof(double));

for (int i = 0; i < 10; i++) //计算softmax交叉熵损失,然后计算传回来的梯度

{

if (i == a) outbias[i] = (data -> result[i] - 1);

else outbias[i] = data -> result[i];

}

double * outwbias;

outwbias = (double * ) malloc(450 * sizeof(double));

Matrix_line_mul(45, 10, & data -> secondrelu[0][0], outbias, outwbias);

// ↑求softmax层的梯度

double * secondrelubias;

secondrelubias = (double * ) malloc(45 * sizeof(double));

Matrix_bp_mul(45, 10, & parameter1 -> outhiddenlayer[0][0], outbias, secondrelubias);

free(outbias);

outbias = NULL;

//↑softmax之前的全连接层的梯度

double * secondbias;

secondbias = (double * ) malloc(180 * sizeof(double));

bprelu(45, & data -> secondmlp[0][0], secondrelubias, secondbias);

free(secondrelubias);

secondrelubias = NULL;

//↑leakyrelu的梯度

double * secondwbias;

secondwbias = (double * ) malloc(8100 * sizeof(double));

Matrix_line_mul(180, 45, & data -> firstrelu[0][0], secondbias, secondwbias);

//又是一个softmax梯度

double * firstrelubias;

firstrelubias = (double * ) malloc(180 * sizeof(double));

Matrix_bp_mul(180, 45, & parameter1 -> secondhiddenlayer[0][0], secondbias, firstrelubias);

free(secondbias);

secondbias = NULL;

//全连接

double * firstbias;

firstbias = (double * ) malloc(180 * sizeof(double));

bprelu(180, & data -> firstmlp[0][0], firstrelubias, firstbias);

free(firstrelubias);

firstrelubias = NULL;

//relu

double * firstwbias;

firstwbias = (double * ) malloc(207360 * sizeof(double));

Matrix_line_mul(1152, 180, & data -> beforepool[0][0], firstbias, firstwbias);

//softmax

double * allconbias;

allconbias = (double * ) malloc(1152 * sizeof(double));

Matrix_bp_mul(1152, 180, & parameter1 -> firsthiddenlayer[0][0], firstbias, allconbias);

free(firstbias);

firstbias = NULL;

//全连接

//↓开启卷积梯度

double * thirdconbias;

thirdconbias = (double * ) malloc(576 * sizeof(double));

double * thirdconbias1;

thirdconbias1 = (double * ) malloc(576 * sizeof(double));

split(thirdconbias, thirdconbias1, allconbias);

free(allconbias);

allconbias = NULL;

//拆分为二

double * thirdkernelbias;

thirdkernelbias = (double * ) malloc(9 * sizeof(double));

Convolution(26, 26, 24, & data -> secondcon[0][0], thirdconbias, thirdkernelbias);

//data->secondcon-26*26, thirdconbias-24*24, thirdkernelbias-3*3

//注意,此处没问题,作者使用26*26的原图,以24*24为卷积核,

//进行卷积操作,得到3*3的卷积核梯度(这是对于最后一层卷积层的卷积核的梯度)

double * secondconbias;

secondconbias = (double * ) malloc(676 * sizeof(double));

double * turnthirdkerne3;

turnthirdkerne3 = (double * ) malloc(9 * sizeof(double));

Overturn_convolution_kernel(3, & parameter1 -> kernel3[0][0], turnthirdkerne3);

//转置

double * padthirdkernelbias;

padthirdkernelbias = (double * ) malloc(784 * sizeof(double));

//接着将全连接网络传递回来的梯度,传递回上一个卷积层

padding(26, thirdconbias, padthirdkernelbias);

free(thirdconbias);

thirdconbias = NULL;

//pad

Convolution(28, 28, 3, padthirdkernelbias, turnthirdkerne3, secondconbias);

free(turnthirdkerne3);

turnthirdkerne3 = NULL;

free(padthirdkernelbias);

padthirdkernelbias = NULL;

//再将梯度传递回上一层卷积网络

double * secondkernelbias;

secondkernelbias = (double * ) malloc(9 * sizeof(double));

Convolution(28, 28, 26, & data -> firstcon[0][0], secondconbias, secondkernelbias);

//再计算当前卷积层卷积核的梯度

double * firstconbias;

firstconbias = (double * ) malloc(784 * sizeof(double));

double * turnsecondkernel;

turnsecondkernel = (double * ) malloc(9 * sizeof(double));

Overturn_convolution_kernel(3, & parameter1 -> kernel2[0][0], turnsecondkernel);

double * padsecondkernelbias;

padsecondkernelbias = (double * ) malloc(900 * sizeof(double));

padding(28, secondconbias, padsecondkernelbias);

free(secondconbias);

secondconbias = NULL;

Convolution(30, 30, 3, padsecondkernelbias, turnsecondkernel, firstconbias);

free(turnsecondkernel);

turnsecondkernel = NULL;

free(padsecondkernelbias);

padsecondkernelbias = NULL;

double * firstkernelbias;

firstkernelbias = (double * ) malloc(9 * sizeof(double));

Convolution(30, 30, 28, & data -> picturedata[0][0], firstconbias, firstkernelbias);

free(firstconbias);

firstconbias = NULL;

double * thirdkernelbias1;

thirdkernelbias1 = (double * ) malloc(9 * sizeof(double));

Convolution(26, 26, 24, & data -> secondcon1[0][0], thirdconbias1, thirdkernelbias1);

double * secondconbias1;

secondconbias1 = (double * ) malloc(676 * sizeof(double));

double * turnthirdkernel1;

turnthirdkernel1 = (double * ) malloc(9 * sizeof(double));

Overturn_convolution_kernel(3, & parameter1 -> kernel33[0][0], turnthirdkernel1);

double * padthirdkernelbias1;

padthirdkernelbias1 = (double * ) malloc(784 * sizeof(double));

padding(26, thirdconbias1, padthirdkernelbias1);

free(thirdconbias1);

thirdconbias1 = NULL;

Convolution(28, 28, 3, padthirdkernelbias1, turnthirdkernel1, secondconbias1);

free(turnthirdkernel1);

turnthirdkernel1 = NULL;

free(padthirdkernelbias1);

padthirdkernelbias1 = NULL;

double * secondkernelbias1;

secondkernelbias1 = (double * ) malloc(9 * sizeof(double));

Convolution(28, 28, 26, & data -> firstcon1[0][0], secondconbias1, secondkernelbias1);

double * firstconbias1;

firstconbias1 = (double * ) malloc(784 * sizeof(double));

double * turnsecondkernel1;

turnsecondkernel1 = (double * ) malloc(9 * sizeof(double));

Overturn_convolution_kernel(3, & parameter1 -> kernel22[0][0], turnsecondkernel1);

double * padsecondkernelbias1;

padsecondkernelbias1 = (double * ) malloc(900 * sizeof(double));

padding(28, secondconbias1, padsecondkernelbias1);

free(secondconbias1);

secondconbias = NULL;

Convolution(30, 30, 3, padsecondkernelbias1, turnsecondkernel1, firstconbias1);

free(turnsecondkernel1);

turnsecondkernel1 = NULL;

free(padsecondkernelbias1);

padsecondkernelbias1 = NULL;

double * firstkernelbias1;

firstkernelbias1 = (double * ) malloc(9 * sizeof(double));

Convolution(30, 30, 28, & data -> picturedata[0][0], firstconbias1, firstkernelbias1);

free(firstconbias1);

firstconbias1 = NULL;

Matrix_bp(3, 3, & parameter1 -> kernel1[0][0], firstkernelbias); //iterations更新参数

Matrix_bp(3, 3, & parameter1 -> kernel2[0][0], secondkernelbias);

Matrix_bp(3, 3, & parameter1 -> kernel3[0][0], thirdkernelbias);

Matrix_bp(3, 3, & parameter1 -> kernel11[0][0], firstkernelbias1);

Matrix_bp(3, 3, & parameter1 -> kernel22[0][0], secondkernelbias1);

Matrix_bp(3, 3, & parameter1 -> kernel33[0][0], thirdkernelbias1);

Matrix_bp(1152, 180, & parameter1 -> firsthiddenlayer[0][0], firstwbias);

Matrix_bp(180, 45, & parameter1 -> secondhiddenlayer[0][0], secondwbias);

Matrix_bp(45, 10, & parameter1 -> outhiddenlayer[0][0], outwbias);

free(firstkernelbias); //释放中途动态分配的变量,防止内存溢出

firstkernelbias = NULL;

free(secondkernelbias);

secondkernelbias = NULL;

free(thirdkernelbias);

thirdkernelbias = NULL;

free(firstkernelbias1);

firstkernelbias1 = NULL;

free(secondkernelbias1);

secondkernelbias1 = NULL;

free(thirdkernelbias1);

thirdkernelbias1 = NULL;

free(firstwbias);

firstwbias = NULL;

free(secondwbias);

secondwbias = NULL;

free(outwbias);

outwbias = NULL;

return;

}

详细解析

倒数第一层softmax梯度

double * outbias;

outbias = (double * ) malloc(10 * sizeof(double));

for (int i = 0; i < 10; i++) //计算softmax交叉熵损失,然后计算传回来的梯度

{

if (i == a) outbias[i] = (data -> result[i] - 1);

else outbias[i] = data -> result[i];

}

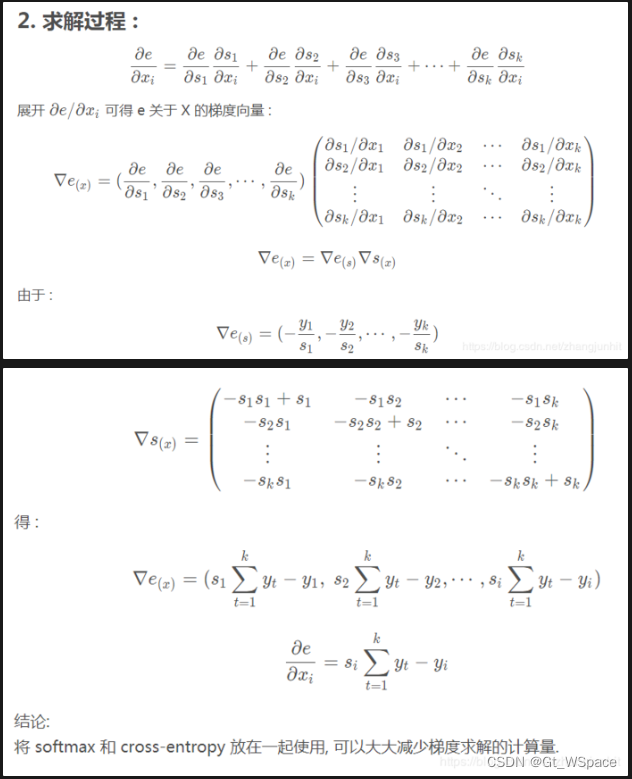

以上是softmax层的梯度(计算依据有下面的图片给出)(注:类似的梯度在网络中仅出现一次)

double * outwbias;

outwbias = (double * ) malloc(450 * sizeof(double));

Matrix_line_mul(45, 10, & data -> secondrelu[0][0], outbias, outwbias);

double * secondrelubias;

上述代码中的outbias则是单独创建了一个一维数组,在当前样本下,储存应该以(1-yi)还是yi 来进行计算。

void Matrix_line_mul(int m, int n, double * a, double * b, double * c) //反向传播计算每一层网络参数矩阵梯度的函数(前一层神经元梯度行矩阵乘本层神经元梯度列矩阵,得到本层参数梯度)

{

for (int i = 0; i < m; i++) {

for (int j = 0; j < n; j++) {

c[i * n + j] = a[i] * b[j];

}

}

}

下面的Matrix_line_mul 则是针对当前进入网络的图片,对所有神经元计算最后一层softmax的梯度。输出的outwbias维度为[450]

并将结果储存到了outwbias 中。(这个outwbias在后续几个梯度计算中都没有出现)

注意:这段代码中的矩阵计算,是逐个赋值的计算形式

-

补:带上交叉熵的损失函数以及其梯度的求解

考虑一个输入向量 x, 经 softmax 函数归一化处理后得到向量 s 作为预测的概率分布, 已知向量 y 为真实的概率分布, 由 cross-entropy 函数计算得出误差值 error (标量 e ), 求 e 关于 x 的梯度.

-

最后一个leakyrelu层之前(即全连接层)的梯度double * secondrelubias; secondrelubias = (double * ) malloc(45 * sizeof(double)); Matrix_bp_mul(45, 10, & parameter1 -> outhiddenlayer[0][0], outbias, secondrelubias); free(outbias); outbias = NULL;& parameter1 -> outhiddenlayer[0][0]即是获取网络参数结构体中全连接层的权重参数。传入的另一个参数则是之前由softmax计算出来的outbias维度为[10]。

Matrix_bp_mul实现了[45, 10]*[10, 1]的矩阵乘法,得出结果维度为[45, 1]void Matrix_bp_mul(int m, int n, double * a, double * b, double * c) //反向传播时从后一层的梯度推导到前一层梯度的函数 { for (int i = 0; i < m; i++) { c[i] = 0; //空间清空,方便做累加 for (int j = 0; j < n; j++) { c[i] += a[i * n + j] * b[j]; } } }注意:这段代码中的计算是将某一行全相加

-

leakyrelu层的梯度double * secondbias; secondbias = (double * ) malloc(180 * sizeof(double)); bprelu(45, & data -> secondmlp[0][0], secondrelubias, secondbias); free(secondrelubias); secondrelubias = NULL;输入向量和输出向量的维度都是一样的,在此处均为[45, 1]

void bprelu(int m, double * a, double * b, double * c) //激活函数的反向传播 { for (int i = 0; i < m; i++) { if (a[i] > 0) c[i] = b[i] * 1; else if (a[i] <= 0) c[i] = b[i] * 0.05; } }leakyrelu的梯度解释没什么好说的。

-

以上三个流程循环几次即可,最后停留在1152之前的全连接处即可

double * outbias; outbias = (double * ) malloc(10 * sizeof(double)); for (int i = 0; i < 10; i++) { if (i == a) outbias[i] = (data -> result[i] - 1); else outbias[i] = data -> result[i]; } double * outwbias; outwbias = (double * ) malloc(450 * sizeof(double)); Matrix_line_mul(45, 10, & data -> secondrelu[0][0], outbias, outwbias); // ↑求softmax层的梯度 double * secondrelubias; secondrelubias = (double * ) malloc(45 * sizeof(double)); Matrix_bp_mul(45, 10, & parameter1 -> outhiddenlayer[0][0], outbias, secondrelubias); free(outbias); outbias = NULL; //↑softmax之前的全连接层的梯度 double * secondbias; secondbias = (double * ) malloc(180 * sizeof(double)); bprelu(45, & data -> secondmlp[0][0], secondrelubias, secondbias); free(secondrelubias); secondrelubias = NULL; //↑leakyrelu的梯度 double * secondwbias; secondwbias = (double * ) malloc(8100 * sizeof(double)); Matrix_line_mul(180, 45, & data -> firstrelu[0][0], secondbias, secondwbias); //又是一个softmax梯度 double * firstrelubias; firstrelubias = (double * ) malloc(180 * sizeof(double)); Matrix_bp_mul(180, 45, & parameter1 -> secondhiddenlayer[0][0], secondbias, firstrelubias); free(secondbias); secondbias = NULL; //全连接 double * firstbias; firstbias = (double * ) malloc(180 * sizeof(double)); bprelu(180, & data -> firstmlp[0][0], firstrelubias, firstbias); free(firstrelubias); firstrelubias = NULL; //relu double * firstwbias; firstwbias = (double * ) malloc(207360 * sizeof(double)); Matrix_line_mul(1152, 180, & data -> beforepool[0][0], firstbias, firstwbias); //softmax double * allconbias; allconbias = (double * ) malloc(1152 * sizeof(double)); Matrix_bp_mul(1152, 180, & parameter1 -> firsthiddenlayer[0][0], firstbias, allconbias); free(firstbias); firstbias = NULL; //全连接注意到:在代码中几处

Matrix_line_mul输出的结果均在后文中较远部分使用

以下是卷积部分梯度计算

-

先将全连接传入梯度拆分回原来的两个通道double * thirdconbias; thirdconbias = (double * ) malloc(576 * sizeof(double)); double * thirdconbias1; thirdconbias1 = (double * ) malloc(576 * sizeof(double)); split(thirdconbias, thirdconbias1, allconbias); free(allconbias); allconbias = NULL;split函数实现:

void split(double * a, double * b, double * c) //把全连接反向传播来的梯度拆成两部分分别输入两个通道的函数 { int e = 0; for (int i = 0; i < 576; i++) { a[i] = c[e]; e++; } for (int i = 0; i < 576; i++) { b[i] = c[e]; e++; } } -

前置数学知识

-

对于卷积操作的梯度的计算:具体参见:

-

简单介绍

-

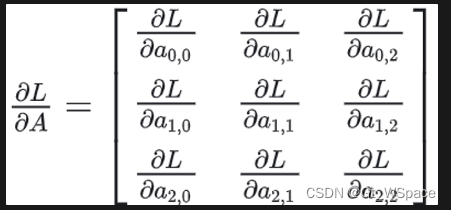

在反向传播过程中,求损失对于参数的梯度,根据链式法则:

其中 ∂𝐿/∂𝐴 是已知的,由前一层的负责计算,在本例中,是一个矩阵,大小和卷积结果一样:

现在需要做的只是求∂𝐴/∂𝑊就可以了,根据卷积的公式(conv实现的链接中有推导):

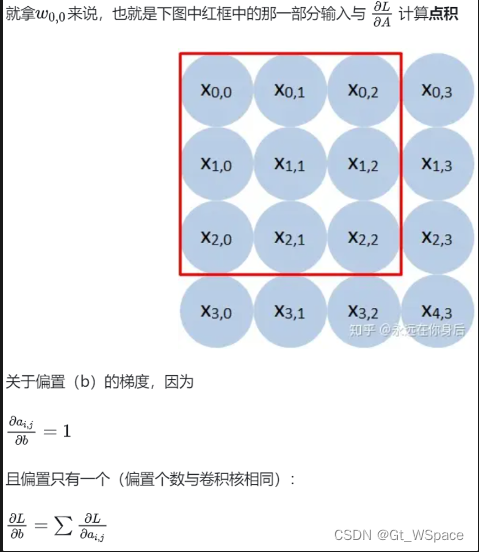

先求单个输出对任意一个参数(这里表示为 w h ′ , w ′ w_{ℎ^{'},w^{′}} wh′,w′的偏导:

-

反卷积具体数学算法

- 介绍反卷积为什么要反转卷积核:

-

计算当前卷积层卷积核的梯度使用全连接[即卷积后输出的结果]在于卷积核进行卷积运算

源代码:

double * thirdkernelbias; thirdkernelbias = (double * ) malloc(9 * sizeof(double)); Convolution(26, 26, 24, & data -> secondcon[0][0], thirdconbias, thirdkernelbias);注意,此处没问题,作者使用2626的原图,以2424为卷积核,进行卷积操作,得到3*3的卷积核梯度(这是对于最后一层卷积层的卷积核的梯度)

接下来将全连接网络传递回来的梯度,传递回上一个卷积层。即得出对于卷积后的26*26个像素位分别的梯度。

第一步就是将2424的梯度反向传递回2626

double * secondconbias;

secondconbias = (double * ) malloc(676 * sizeof(double));

double * turnthirdkerne3;

turnthirdkerne3 = (double * ) malloc(9 * sizeof(double));

Overturn_convolution_kernel(3, & parameter1 -> kernel3[0][0], turnthirdkerne3);

double * padthirdkernelbias;

padthirdkernelbias = (double * ) malloc(784 * sizeof(double));

参考我们的数学原理,我们应该先将目前的kernel转置,这样我们反卷积的结果才是正确的。

-

pad,反向填充至28*28padding(26, thirdconbias, padthirdkernelbias); free(thirdconbias); thirdconbias = NULL;其中,

padding为:void padding(int o, double * a, double * b) //反向传播时填充本层梯度的函数,相当于在梯度矩阵周围填充一圈0,这样和翻转后的卷积核做卷积后梯度矩阵大小才能恢复到前一层大小 { for (int i = 0; i < (o + 2); i++) { for (int j = 0; j < (o + 2); j++) b[i * (o + 2) + j] = 0; } int m = 0; for (int k = 2; k < o; k++) { b[k * (o + 2)] = 0; b[k * (o + 2) + 1] = 0; int n = 0; for (int j = 2; j < o; j++) { b[k * (o + 2) + j] = a[m * (o - 2) + n]; n++; } m++; b[k * (o + 2) + o] = 0; b[k * (o + 2) + o + 1] = 0; } }将2424pad成2828(周围两圈0)

将转置后的3*3作为卷积核进行卷积,得出对于倒数第二层卷积层的梯度

-

反向传播卷积梯度Convolution(28, 28, 3, padthirdkernelbias, turnthirdkerne3, secondconbias); free(turnthirdkerne3); turnthirdkerne3 = NULL; free(padthirdkernelbias); padthirdkernelbias = NULL;与上文中代码流程相同

-

再计算当前卷积层卷积核的梯度使用全连接[即卷积后输出的结果]在于卷积核进行卷积运算

源代码:

double * secondkernelbias; secondkernelbias = (double * ) malloc(9 * sizeof(double)); Convolution(28, 28, 26, & data -> firstcon[0][0], secondconbias, secondkernelbias);

以此类推,直到对所有卷积层均完成反向传播

-

后续代码double * secondkernelbias; secondkernelbias = (double * ) malloc(9 * sizeof(double)); Convolution(28, 28, 26, & data -> firstcon[0][0], secondconbias, secondkernelbias); //再计算当前卷积层卷积核的梯度 double * firstconbias; firstconbias = (double * ) malloc(784 * sizeof(double)); double * turnsecondkernel; turnsecondkernel = (double * ) malloc(9 * sizeof(double)); Overturn_convolution_kernel(3, & parameter1 -> kernel2[0][0], turnsecondkernel); double * padsecondkernelbias; padsecondkernelbias = (double * ) malloc(900 * sizeof(double)); padding(28, secondconbias, padsecondkernelbias); free(secondconbias); secondconbias = NULL; Convolution(30, 30, 3, padsecondkernelbias, turnsecondkernel, firstconbias); free(turnsecondkernel); turnsecondkernel = NULL; free(padsecondkernelbias); padsecondkernelbias = NULL; double * firstkernelbias; firstkernelbias = (double * ) malloc(9 * sizeof(double)); Convolution(30, 30, 28, & data -> picturedata[0][0], firstconbias, firstkernelbias); free(firstconbias); firstconbias = NULL;

注意,输入图像有两个通道,因此将卷积处的反向传播重复一遍

-

重复传播double * thirdkernelbias1; thirdkernelbias1 = (double * ) malloc(9 * sizeof(double)); Convolution(26, 26, 24, & data -> secondcon1[0][0], thirdconbias1, thirdkernelbias1); double * secondconbias1; secondconbias1 = (double * ) malloc(676 * sizeof(double)); double * turnthirdkernel1; turnthirdkernel1 = (double * ) malloc(9 * sizeof(double)); Overturn_convolution_kernel(3, & parameter1 -> kernel33[0][0], turnthirdkernel1); double * padthirdkernelbias1; padthirdkernelbias1 = (double * ) malloc(784 * sizeof(double)); padding(26, thirdconbias1, padthirdkernelbias1); free(thirdconbias1); thirdconbias1 = NULL; Convolution(28, 28, 3, padthirdkernelbias1, turnthirdkernel1, secondconbias1); free(turnthirdkernel1); turnthirdkernel1 = NULL; free(padthirdkernelbias1); padthirdkernelbias1 = NULL; double * secondkernelbias1; secondkernelbias1 = (double * ) malloc(9 * sizeof(double)); Convolution(28, 28, 26, & data -> firstcon1[0][0], secondconbias1, secondkernelbias1); double * firstconbias1; firstconbias1 = (double * ) malloc(784 * sizeof(double)); double * turnsecondkernel1; turnsecondkernel1 = (double * ) malloc(9 * sizeof(double)); Overturn_convolution_kernel(3, & parameter1 -> kernel22[0][0], turnsecondkernel1); double * padsecondkernelbias1; padsecondkernelbias1 = (double * ) malloc(900 * sizeof(double)); padding(28, secondconbias1, padsecondkernelbias1); free(secondconbias1); secondconbias = NULL; Convolution(30, 30, 3, padsecondkernelbias1, turnsecondkernel1, firstconbias1); free(turnsecondkernel1); turnsecondkernel1 = NULL; free(padsecondkernelbias1); padsecondkernelbias1 = NULL; double * firstkernelbias1; firstkernelbias1 = (double * ) malloc(9 * sizeof(double)); Convolution(30, 30, 28, & data -> picturedata[0][0], firstconbias1, firstkernelbias1); free(firstconbias1); firstconbias1 = NULL; -

更新参数Matrix_bp(3, 3, & parameter1 -> kernel1[0][0], firstkernelbias); //iterations更新参数 Matrix_bp(3, 3, & parameter1 -> kernel2[0][0], secondkernelbias); Matrix_bp(3, 3, & parameter1 -> kernel3[0][0], thirdkernelbias); Matrix_bp(3, 3, & parameter1 -> kernel11[0][0], firstkernelbias1); Matrix_bp(3, 3, & parameter1 -> kernel22[0][0], secondkernelbias1); Matrix_bp(3, 3, & parameter1 -> kernel33[0][0], thirdkernelbias1); Matrix_bp(1152, 180, & parameter1 -> firsthiddenlayer[0][0], firstwbias); Matrix_bp(180, 45, & parameter1 -> secondhiddenlayer[0][0], secondwbias); Matrix_bp(45, 10, & parameter1 -> outhiddenlayer[0][0], outwbias);以下是梯度参数更新代码

void Matrix_bp(int m, int n, double * a, double * b) //更新网络参数的函数 { for (int i = 0; i < m; i++) for (int j = 0; j < n; j++) a[i * n + j] -= learningrate * b[i * n + j]; } -

清除动态分配的变量,防止内存溢出free(firstkernelbias); //释放中途动态分配的变量,防止内存溢出 firstkernelbias = NULL; free(secondkernelbias); secondkernelbias = NULL; free(thirdkernelbias); thirdkernelbias = NULL; free(firstkernelbias1); firstkernelbias1 = NULL; free(secondkernelbias1); secondkernelbias1 = NULL; free(thirdkernelbias1); thirdkernelbias1 = NULL; free(firstwbias); firstwbias = NULL; free(secondwbias); secondwbias = NULL; free(outwbias); outwbias = NULL; return; -

计算交叉熵损失Cross_entropydouble Cross_entropy(double * a, int m) //计算每一次训练结果的交叉熵损失函数 { double u = 0; u = (-log10(a[m])); return u; }正如前文所说,确实暂时未发现交叉熵函数在代码中的使用

test_network

-

sprintf(s, "%s%d%s", "Training_set//Test_set//", i + 1, ".bmp");将路径写入s中,用以读取测试集图片

这行代码是使用

sprintf函数来格式化字符串,并将其存储在字符数组s中。sprintf是标准C库中的一个函数,用于将格式化的数据写入字符串。这里是它的详细解释:sprintf(s, ...):sprintf函数的第一个参数是指向字符数组s的指针,这个数组将存储生成的字符串。"%s%d%s":这是格式字符串,它定义了如何将后续参数组合成一个字符串。%s是字符串的占位符,%d是整数的占位符。"Training_set//Test_set//":这是第一个参数,它是一个字符串,将被插入到格式字符串的第一个%s占位符处。i + 1:这是第二个参数,是一个整数。由于i是从0开始的,所以i + 1将产生从1到10的整数,这将插入到格式字符串的%d占位符处。".bmp":这是第三个参数,它是一个字符串,将被插入到格式字符串的最后一个%s占位符处。

综合起来,这行代码的作用是构建一个文件路径字符串,格式为

"Training_set//Test_set//1.bmp","Training_set//Test_set//2.bmp",一直到"Training_set//Test_set//10.bmp",取决于循环变量i的值。这个字符串表示测试集中的图像文件名,其中i + 1是文件编号。请注意,路径中的

//可能是为了演示目的而使用的,实际上在大多数操作系统中,文件路径使用单个/或操作系统特定的路径分隔符(例如Windows上的\\)。如果这段代码是在Windows系统上运行,应该使用\\而不是//。 -

接下来即是读取所需数据集的前置操作,具体解析见:

即:

char e[120]; int l[960]; double data[30][30]; for (int i = 0; i < 10; i++) { FILE * fp; char s[30]; sprintf(s, "%s%d%s", "Training_set//Test_set//", i + 1, ".bmp"); printf("\n打开的文件名:%s\n", s); fp = fopen(s, "rb"); if (fp == NULL) { printf("Cann't open the file!\n"); system("pause"); return; } fseek(fp, 62, SEEK_SET); fread(e, sizeof(char), 120, fp); fclose(fp); int y = 0; for (int r = 0; r < 120; r++) { for (int u = 1; u < 9; u++) { l[y] = (int)((e[r]) >> (8 - u) & 0x01); y++; if (y > 960) break; }; }; y = 0; int g = 0; for (int u = 0; u < 30; u++) { y = 0; for (int j = 0; j < 32; j++) { if ((j != 30) && (j != 31)) { data[u][y] = l[g]; y++; }; g++; } } int q = data[0][0]; if (q == 1) { int n = 0; int z = 0; for (int b = 0; b < 30; b++) { n = 0; for (;;) { if (n >= 30) break; if (data[z][n] == 0) data[z][n] = 1; else if (data[z][n] == 1) data[z][n] = 0; n++; } z++; } } -

将前向传播,并且求出

softmax后输出结果列表中概率最大的值,即为预测值forward_propagating(data2, & data[0][0], parameter2); //把获取的样本数据正向传播一次 double sum = 0; int k = 0; for (int j = 0; j < 10; j++) { if (result[j] > sum) { sum = result[j]; k = j; //获取分类结果 } else continue; } printf("\n"); for (int i = 0; i < 10; i++) //打印分类结果 { printf("预测值是%d的概率:%lf\n", i, result[i]); } printf("最终预测值:%d\n", k);

输出并写入网络参数

void printf_file(struct parameter * parameter4) //用于训练结束后保存网络参数的函数

{

FILE * fp;

fp = fopen("Training_set//Network_parameter.bin", "wb"); //采用二进制格式保存参数,便于读取

struct parameter * parameter1;

parameter1 = (struct parameter * ) malloc(sizeof(struct parameter));

( * parameter1) = ( * parameter4);

fwrite(parameter1, sizeof(struct parameter), 1, fp); //打印网络结构体

fclose(fp);

free(parameter1);

parameter1 = NULL;

return;

}

保存训练结束后的网络参数

完结撒花

这篇文章写了我好几天😭😭😭

希望能给你带来帮助

399

399

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?