I. Installation environment

1. Prepare four virtual machines and configure the IP address, gateway, and DNS (all four machines)

Method 1: vim /etc/sysconfig/network-scripts/ifcfg-ens33

Method 2: nmtui

| Hostname | IP address | Gateway | DNS |
| --- | --- | --- | --- |
| master01 | 192.168.8.201 | 192.168.8.2 | 8.8.8.8 |
| master02 | 192.168.8.202 | 192.168.8.2 | 8.8.8.8 |
| node01 | 192.168.8.203 | 192.168.8.2 | 8.8.8.8 |
| node02 | 192.168.8.204 | 192.168.8.2 | 8.8.8.8 |
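For method 1, the static settings in the table map onto a handful of ifcfg keys. The following is a minimal sketch, assuming a /24 prefix (the table does not state a netmask). `render_ifcfg` is a hypothetical helper that only prints the lines; merging them into ifcfg-ens33 is left to you.

```shell
#!/bin/bash
# Hypothetical helper: print static-IP settings for an ifcfg file.
# PREFIX=24 is an assumption; adjust it to your network.
render_ifcfg() {
    local ip=$1 gateway=$2 dns=$3
    cat <<EOF
BOOTPROTO=static
ONBOOT=yes
IPADDR=${ip}
PREFIX=24
GATEWAY=${gateway}
DNS1=${dns}
EOF
}

# Example: the settings for master01 from the table.
render_ifcfg 192.168.8.201 192.168.8.2 8.8.8.8
```

Run it once per machine with that machine's own IP address, then restart the network service as in the next step.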

2. Restart the network service (all four machines)

systemctl restart network

3. Stop the firewall (all four machines)

systemctl stop firewalld

Check the firewall status: systemctl status firewalld

4. Switch SELinux to permissive mode (all four machines)

setenforce 0
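Both `systemctl stop firewalld` and `setenforce 0` last only until the next reboot. The following is a minimal sketch of the persistent equivalents, assuming you want the same state after a reboot. `selinux_set_mode` is a hypothetical helper, demonstrated on a scratch file rather than on /etc/selinux/config.

```shell
#!/bin/bash
# Hypothetical helper: print the given SELinux config with its mode replaced.
# The pattern is anchored on "SELINUX=", so the SELINUXTYPE= line is left alone.
selinux_set_mode() {
    local file=$1 mode=$2
    sed "s/^SELINUX=.*/SELINUX=${mode}/" "$file"
}

# Demonstrate on a scratch file so the real system is untouched.
demo=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo"
selinux_set_mode "$demo" permissive
rm -f "$demo"

# On a real node (as root), the persistent steps would be:
#   systemctl stop firewalld
#   systemctl disable firewalld
#   sed -i 's/^SELINUX=.*/SELINUX=permissive/' /etc/selinux/config
```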

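II. Basic environment configuration

1. Set the hostname (all four machines, each with its own name)

Method 1: hostname master01

bash

Method 2: nmcli g hostname master01

bash

2. Add the hostnames and IP addresses to /etc/hosts (all four machines)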

vim /etc/hosts

192.168.8.201 master01

192.168.8.202 master02

192.168.8.203 node01

192.168.8.204 node02
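If you prefer to script this step instead of editing the file by hand, the entries can be appended idempotently. This is a minimal sketch. `add_host_entry` is a hypothetical helper, and it runs against a temporary file standing in for /etc/hosts.

```shell
#!/bin/bash
# Hypothetical helper: append "ip name" to a hosts file unless the name is already there.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # -w matches the hostname as a whole word, so node01 does not match node011.
    if ! grep -qw -- "$name" "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

hosts_file=$(mktemp)   # stand-in for /etc/hosts
for entry in "192.168.8.201 master01" "192.168.8.202 master02" \
             "192.168.8.203 node01" "192.168.8.204 node02"; do
    # Intentional word splitting: each entry is "ip name".
    add_host_entry "$hosts_file" $entry
done
cat "$hosts_file"
rm -f "$hosts_file"
```

Running it a second time against the same file adds nothing, which makes it safe to repeat on all four machines.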

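3. Download the yum repository files (download both) (all four machines)

Repository 1: wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.huaweicloud.com/repository/conf/CentOS-7-anon.repo

Repository 2: wget -O /etc/yum.repos.d/local.repo http://192.168.3.200/mirrors/local.repo

[If the second file cannot be downloaded, cd /etc/yum.repos.d and create local.repo by hand with the following content]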

[local-base]

name=CentOS-$releasever - Base

baseurl=http://192.168.3.200/mirrors/centos/$releasever/os/$basearch/

enabled=1

gpgcheck=1

gpgkey=http://192.168.3.200/mirrors/centos/$releasever/os/$basearch/RPM-GPG-KEY-CentOS-7

[local-updates]

name=CentOS-$releasever - Updates

baseurl=http://192.168.3.200/mirrors/centos/$releasever/updates/$basearch/

enabled=1

gpgcheck=1

gpgkey=http://192.168.3.200/mirrors/centos/$releasever/os/$basearch/RPM-GPG-KEY-CentOS-7

[local-extras]

name=CentOS-$releasever - Extras

baseurl=http://192.168.3.200/mirrors/centos/$releasever/extras/$basearch/

enabled=0

gpgcheck=1

gpgkey=http://192.168.3.200/mirrors/centos/$releasever/os/$basearch/RPM-GPG-KEY-CentOS-7

[local-centosplus]

name= CentOS-$releasever - Plus

baseurl=http://192.168.3.200/mirrors/centos/$releasever/mirrors/centosplus/$basearch/

enabled=0

gpgcheck=1

gpgkey=http://192.168.3.200/mirrors/centos/$releasever/os/$basearch/RPM-GPG-KEY-CentOS-7

[local-epel]

name=Extra Packages for Enterprise Linux 7 - $basearch

baseurl=http://192.168.3.200/mirrors/epel/$releasever/$basearch/

enabled=1

gpgcheck=1

gpgkey=http://192.168.3.200/mirrors/epel/RPM-GPG-KEY-EPEL-$releasever

4. Create the pip configuration file (all four machines)

cat > /etc/pip.conf << EOF

[global]

index-url = https://mirrors.huaweicloud.com/repository/pypi/simple

trusted-host = mirrors.huaweicloud.com

timeout = 120

EOF

5. Install Python 3 and pip, then point pip at pip3 (all four machines)

yum -y install python3 python3-pip

pip3 install pip --upgrade -i https://pypi.tuna.tsinghua.edu.cn/simple/

rm -f /usr/bin/pip

ln -s /usr/bin/pip3 /usr/bin/pip

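6. Download the archive (master01 only)

wget http://192.168.3.200/Software/kubeasz-install.tar.xz

7. Extract the archive (master01 only)

tar -xvf kubeasz-install.tar.xz

8. Change into the directory (master01 only)

cd kubeasz-install

9. Create the script (master01 only)

# Create the k8s installation script, for CentOS 7 / Ubuntu 22.04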

vim k8s_install.sh

#!/bin/bash

# description: this shell script uses ansible to deploy K8S from binaries in a simple way

# docker-tag

# curl -s -S "https://registry.hub.docker.com/v2/repositories/easzlab/kubeasz-k8s-bin/tags/" | jq '."results"[]["name"]' |sort -rn

# github: https://github.com/easzlab/kubeasz

#########################################################################

# Operating systems this script has been used on: CentOS/RedHat 7, Ubuntu 16.04/18.04/20.04/22.04

#########################################################################

# Check the arguments

[ $# -ne 7 ] && echo -e "Usage: $0 rootpasswd netnum nethosts cri cni domain-name k8s-cluster-name\nExample: bash $0 rootPassword 10.0.1 201\ 202\ 203\ 204 [containerd|docker] [calico|flannel|cilium] boge.com test-cn\n" && exit 11

# Variable definitions

export release=3.6.2 # Multiple k8s versions are supported; the k8s_ver variable below may be one of: v1.28.1 v1.27.5 v1.26.8 v1.25.13 v1.24.17

export k8s_ver=v1.27.5 # | docker-tag tags easzlab/kubeasz-k8s-bin Note: for k8s versions >= 1.24, only containerd is supported

rootpasswd=$1

netnum=$2

nethosts=$3

cri=$4

cni=$5

domainName=$6

clustername=$7

if ls -1v ./kubeasz*.tar.gz &>/dev/null;then software_packet="$(ls -1v ./kubeasz*.tar.gz )";else software_packet="";fi

pwd="/etc/kubeasz"

# Update the packages on the deploy machine

if cat /etc/redhat-release &>/dev/null;then

yum update -y

else

apt-get update && apt-get upgrade -y && apt-get dist-upgrade -y

[ $? -ne 0 ] && apt-get -yf install

fi

# Check the Python environment on the deploy machine

python2 -V &>/dev/null

if [ $? -ne 0 ];then

if cat /etc/redhat-release &>/dev/null;then

yum install -y gcc openssl-devel bzip2-devel

wget https://www.python.org/ftp/python/2.7.16/Python-2.7.16.tgz

tar xzf Python-2.7.16.tgz

cd Python-2.7.16

./configure --enable-optimizations

make altinstall

ln -s /usr/local/bin/python2.7 /usr/bin/python

cd -

else

apt-get install -y python2.7 && ln -s /usr/bin/python2.7 /usr/bin/python

fi

fi

python3 -V &>/dev/null

if [ $? -ne 0 ];then

if cat /etc/redhat-release &>/dev/null;then

yum install python3 -y

else

apt-get install -y python3

fi

fi

# Configure a faster pip mirror on the deploy machine

if echo "$clustername" | grep -iwE cn &>/dev/null; then

mkdir -p ~/.pip

cat > ~/.pip/pip.conf <<CB

[global]

index-url = https://mirrors.aliyun.com/pypi/simple

[install]

trusted-host=mirrors.aliyun.com

CB

fi

# Install the required packages on the deploy machine

which python || ln -svf `which python2.7` /usr/bin/python

if cat /etc/redhat-release &>/dev/null;then

yum install git epel-release python-pip sshpass -y

[ -f ./get-pip.py ] && python ./get-pip.py || {

wget https://bootstrap.pypa.io/pip/2.7/get-pip.py && python get-pip.py

}

else

if grep -Ew '20.04|22.04' /etc/issue &>/dev/null;then apt-get install sshpass -y;else apt-get install python-pip sshpass -y;fi

[ -f ./get-pip.py ] && python ./get-pip.py || {

wget https://bootstrap.pypa.io/pip/2.7/get-pip.py && python get-pip.py

}

fi

python -m pip install --upgrade "pip < 21.0"

which pip || ln -svf `which pip` /usr/bin/pip

pip -V

pip install setuptools -U

pip install --no-cache-dir ansible netaddr

# Set up passwordless SSH from the deploy machine to the other nodes

for host in `echo "${nethosts}"`

do

echo "============ ${netnum}.${host} ===========";

if [[ ${USER} == 'root' ]];then

[ ! -f /${USER}/.ssh/id_rsa ] &&\

ssh-keygen -t rsa -P '' -f /${USER}/.ssh/id_rsa

else

[ ! -f /home/${USER}/.ssh/id_rsa ] &&\

ssh-keygen -t rsa -P '' -f /home/${USER}/.ssh/id_rsa

fi

sshpass -p "${rootpasswd}" ssh-copy-id -o StrictHostKeyChecking=no ${USER}@${netnum}.${host}

if cat /etc/redhat-release &>/dev/null;then

ssh -o StrictHostKeyChecking=no ${USER}@${netnum}.${host} "yum update -y"

else

ssh -o StrictHostKeyChecking=no ${USER}@${netnum}.${host} "apt-get update && apt-get upgrade -y && apt-get dist-upgrade -y"

[ $? -ne 0 ] && ssh -o StrictHostKeyChecking=no ${USER}@${netnum}.${host} "apt-get -yf install"

fi

done

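# Download the k8s binary installation script on the deploy machine (note: the download may fail because of network problems; if so, rerun this script a few times)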

if [[ ${software_packet} == '' ]];then

if [[ ! -f ./ezdown ]];then

curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

fi

# Download with the helper script

sed -ri "s+^(K8S_BIN_VER=).*$+\1${k8s_ver}+g" ezdown

chmod +x ./ezdown

# ubuntu_22 to download package of Ubuntu 22.04

./ezdown -D && ./ezdown -P ubuntu_22 && ./ezdown -X

else

tar xvf ${software_packet} -C /etc/

sed -ri "s+^(K8S_BIN_VER=).*$+\1${k8s_ver}+g" ${pwd}/ezdown

chmod +x ${pwd}/{ezctl,ezdown}

chmod +x ./ezdown

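./ezdown -D # Offline docker install: checks the local files, normally reports that everything is already downloaded, and uploads the images to the local private registry

./ezdown -S # Start the kubeasz container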

fi

# Initialize a k8s cluster configuration named $clustername

CLUSTER_NAME="$clustername"

${pwd}/ezctl new ${CLUSTER_NAME}

if [[ $? -ne 0 ]];then

echo "cluster name [${CLUSTER_NAME}] already exists in ${pwd}/clusters/${CLUSTER_NAME}."

exit 1

fi

if [[ ${software_packet} != '' ]];then

# Set the parameter that enables offline installation

# Offline installation documentation: https://github.com/easzlab/kubeasz/blob/3.6.2/docs/setup/offline_install.md

sed -i 's/^INSTALL_SOURCE.*$/INSTALL_SOURCE: "offline"/g' ${pwd}/clusters/${CLUSTER_NAME}/config.yml

fi

# to check ansible service

ansible all -m ping

#---------------------------------------------------------------------------------------------------

# Modify the binary installation configuration: config.yml

sed -ri "s+^(CLUSTER_NAME:).*$+\1 \"${CLUSTER_NAME}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

## Steps for storing k8s logs and container data on a separate disk (modeled on Alibaba Cloud)

mkdir -p /var/lib/container/{kubelet,docker,nfs_dir} /var/lib/{kubelet,docker} /nfs_dir

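## No fdisk partitioning is needed: format the data disk directly with mkfs.ext4 /dev/vdb, add the entries below to fstab, then run mount -a to mount them (use blkid /dev/sdx to look up the UUID)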

## cat /etc/fstab

# UUID=105fa8ff-bacd-491f-a6d0-f99865afc3d6 / ext4 defaults 1 1

# /dev/vdb /var/lib/container/ ext4 defaults 0 0

# /var/lib/container/kubelet /var/lib/kubelet none defaults,bind 0 0

# /var/lib/container/docker /var/lib/docker none defaults,bind 0 0

# /var/lib/container/nfs_dir /nfs_dir none defaults,bind 0 0

## tree -L 1 /var/lib/container

# /var/lib/container

# ├── docker

# ├── kubelet

# └── lost+found

# docker data dir

DOCKER_STORAGE_DIR="/var/lib/container/docker"

sed -ri "s+^(STORAGE_DIR:).*$+STORAGE_DIR: \"${DOCKER_STORAGE_DIR}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# containerd data dir

CONTAINERD_STORAGE_DIR="/var/lib/container/containerd"

sed -ri "s+^(STORAGE_DIR:).*$+STORAGE_DIR: \"${CONTAINERD_STORAGE_DIR}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# kubelet logs dir

KUBELET_ROOT_DIR="/var/lib/container/kubelet"

sed -ri "s+^(KUBELET_ROOT_DIR:).*$+KUBELET_ROOT_DIR: \"${KUBELET_ROOT_DIR}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

if [[ $clustername != 'aws' ]]; then

# docker aliyun repo

REG_MIRRORS="https://pqbap4ya.mirror.aliyuncs.com"

sed -ri "s+^REG_MIRRORS:.*$+REG_MIRRORS: \'[\"${REG_MIRRORS}\"]\'+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

fi

# [docker] trusted HTTP registries

sed -ri "s+127.0.0.1/8+${netnum}.0/24+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# disable dashboard auto install

sed -ri "s+^(dashboard_install:).*$+\1 \"no\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# Prepare the combined configuration (with the example deploy command this produces a domain such as testk8s.boge.com; the deploy script signs the certificates for this domain, so kube-apiserver can later be reached through it whichever IP it resolves to, which is more flexible)

CLUSTER_WEBSITE="${CLUSTER_NAME}k8s.${domainName}"

lb_num=$(grep -wn '^MASTER_CERT_HOSTS:' ${pwd}/clusters/${CLUSTER_NAME}/config.yml |awk -F: '{print $1}')

lb_num1=$(expr ${lb_num} + 1)

lb_num2=$(expr ${lb_num} + 2)

sed -ri "${lb_num1}s+.*$+ - "${CLUSTER_WEBSITE}"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

sed -ri "${lb_num2}s+(.*)$+#\1+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# Maximum number of pods per node

MAX_PODS="120"

sed -ri "s+^(MAX_PODS:).*$+\1 ${MAX_PODS}+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

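# calico: in a self-hosted data center where all nodes share one layer-2 network, CALICO_IPV4POOL_IPIP can be set to "off" for better network performance; on a public cloud, where the VPC is a layer-3 network, set CALICO_IPV4POOL_IPIP: "Always" to enable the IPIP tunnel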

#sed -ri "s+^(CALICO_IPV4POOL_IPIP:).*$+\1 \"off\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# Modify the binary installation configuration: hosts

# clean old ip

sed -ri '/192.168.1.1/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

sed -ri '/192.168.1.2/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

sed -ri '/192.168.1.3/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

sed -ri '/192.168.1.4/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

sed -ri '/192.168.1.5/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

# Enter the host numbers (last octet) of the machines that will form the etcd cluster

echo "enter etcd hosts here (example: 203 202 201) ↓"

read -p "" ipnums

for ipnum in `echo ${ipnums}`

do

echo $netnum.$ipnum

sed -i "/\[etcd/a $netnum.$ipnum" ${pwd}/clusters/${CLUSTER_NAME}/hosts

done

# Enter the host numbers (last octet) of the machines that will be kube-master nodes

echo "enter kube-master hosts here (example: 202 201) ↓"

read -p "" ipnums

for ipnum in `echo ${ipnums}`

do

echo $netnum.$ipnum

sed -i "/\[kube_master/a $netnum.$ipnum" ${pwd}/clusters/${CLUSTER_NAME}/hosts

done

# Enter the host numbers (last octet) of the machines that will be kube-node nodes

echo "enter kube-node hosts here (example: 204 203) ↓"

read -p "" ipnums

for ipnum in `echo ${ipnums}`

do

echo $netnum.$ipnum

sed -i "/\[kube_node/a $netnum.$ipnum" ${pwd}/clusters/${CLUSTER_NAME}/hosts

done

# Configure the CNI network plugin

case ${cni} in

flannel)

sed -ri "s+^CLUSTER_NETWORK=.*$+CLUSTER_NETWORK=\"${cni}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

;;

calico)

sed -ri "s+^CLUSTER_NETWORK=.*$+CLUSTER_NETWORK=\"${cni}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

;;

cilium)

sed -ri "s+^CLUSTER_NETWORK=.*$+CLUSTER_NETWORK=\"${cni}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

;;

*)

echo "cni need be flannel or calico or cilium."

exit 11

esac

# Configure a cron job that backs up the K8S etcd data

# https://github.com/easzlab/kubeasz/blob/master/docs/op/cluster_restore.md

if cat /etc/redhat-release &>/dev/null;then

if ! grep -w '94.backup.yml' /var/spool/cron/root &>/dev/null;then echo "00 00 * * * /usr/local/bin/ansible-playbook -i /etc/kubeasz/clusters/${CLUSTER_NAME}/hosts -e @/etc/kubeasz/clusters/${CLUSTER_NAME}/config.yml /etc/kubeasz/playbooks/94.backup.yml &> /dev/null; find /etc/kubeasz/clusters/${CLUSTER_NAME}/backup/ -type f -name '*.db' -mtime +3|xargs rm -f" >> /var/spool/cron/root;else echo exists ;fi

chown root.crontab /var/spool/cron/root

chmod 600 /var/spool/cron/root

rm -f /var/run/cron.reboot

service crond restart

else

if ! grep -w '94.backup.yml' /var/spool/cron/crontabs/root &>/dev/null;then echo "00 00 * * * /usr/local/bin/ansible-playbook -i /etc/kubeasz/clusters/${CLUSTER_NAME}/hosts -e @/etc/kubeasz/clusters/${CLUSTER_NAME}/config.yml /etc/kubeasz/playbooks/94.backup.yml &> /dev/null; find /etc/kubeasz/clusters/${CLUSTER_NAME}/backup/ -type f -name '*.db' -mtime +3|xargs rm -f" >> /var/spool/cron/crontabs/root;else echo exists ;fi

chown root.crontab /var/spool/cron/crontabs/root

chmod 600 /var/spool/cron/crontabs/root

rm -f /var/run/crond.reboot

service cron restart

fi

#---------------------------------------------------------------------------------------------------

# Ready to start the installation

rm -rf ${pwd}/{dockerfiles,docs,.gitignore,pics,dockerfiles} &&\

find ${pwd}/ -name '*.md'|xargs rm -f

read -p "Enter to continue deploy k8s to all nodes >>>" YesNobbb

# now start deploy k8s cluster

cd ${pwd}/

# to prepare CA/certs & kubeconfig & other system settings

${pwd}/ezctl setup ${CLUSTER_NAME} 01

sleep 1

# to setup the etcd cluster

${pwd}/ezctl setup ${CLUSTER_NAME} 02

sleep 1

# to setup the container runtime(docker or containerd)

case ${cri} in

containerd)

sed -ri "s+^CONTAINER_RUNTIME=.*$+CONTAINER_RUNTIME=\"${cri}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

${pwd}/ezctl setup ${CLUSTER_NAME} 03

;;

docker)

sed -ri "s+^CONTAINER_RUNTIME=.*$+CONTAINER_RUNTIME=\"${cri}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

${pwd}/ezctl setup ${CLUSTER_NAME} 03

;;

*)

echo "cri need be containerd or docker."

exit 11

esac

sleep 1

# to setup the master nodes

${pwd}/ezctl setup ${CLUSTER_NAME} 04

sleep 1

# to setup the worker nodes

${pwd}/ezctl setup ${CLUSTER_NAME} 05

sleep 1

# to setup the network plugin (flannel, calico...)

${pwd}/ezctl setup ${CLUSTER_NAME} 06

sleep 1

# to setup other useful plugins (metrics-server, coredns...)

${pwd}/ezctl setup ${CLUSTER_NAME} 07

sleep 1

# [Optional] Harden the operating system on all cluster nodes https://github.com/dev-sec/ansible-os-hardening

#ansible-playbook roles/os-harden/os-harden.yml

#sleep 1

#cd `dirname ${software_packet:-/tmp}`

k8s_bin_path='/opt/kube/bin'

echo "------------------------- k8s version list ---------------------------"

${k8s_bin_path}/kubectl version

echo

echo "------------------------- All Healthy status check -------------------"

${k8s_bin_path}/kubectl get componentstatus

echo

echo "------------------------- k8s cluster info list ----------------------"

${k8s_bin_path}/kubectl cluster-info

echo

echo "------------------------- k8s all nodes list -------------------------"

${k8s_bin_path}/kubectl get node -o wide

echo

echo "------------------------- k8s all-namespaces's pods list ------------"

${k8s_bin_path}/kubectl get pod --all-namespaces

echo

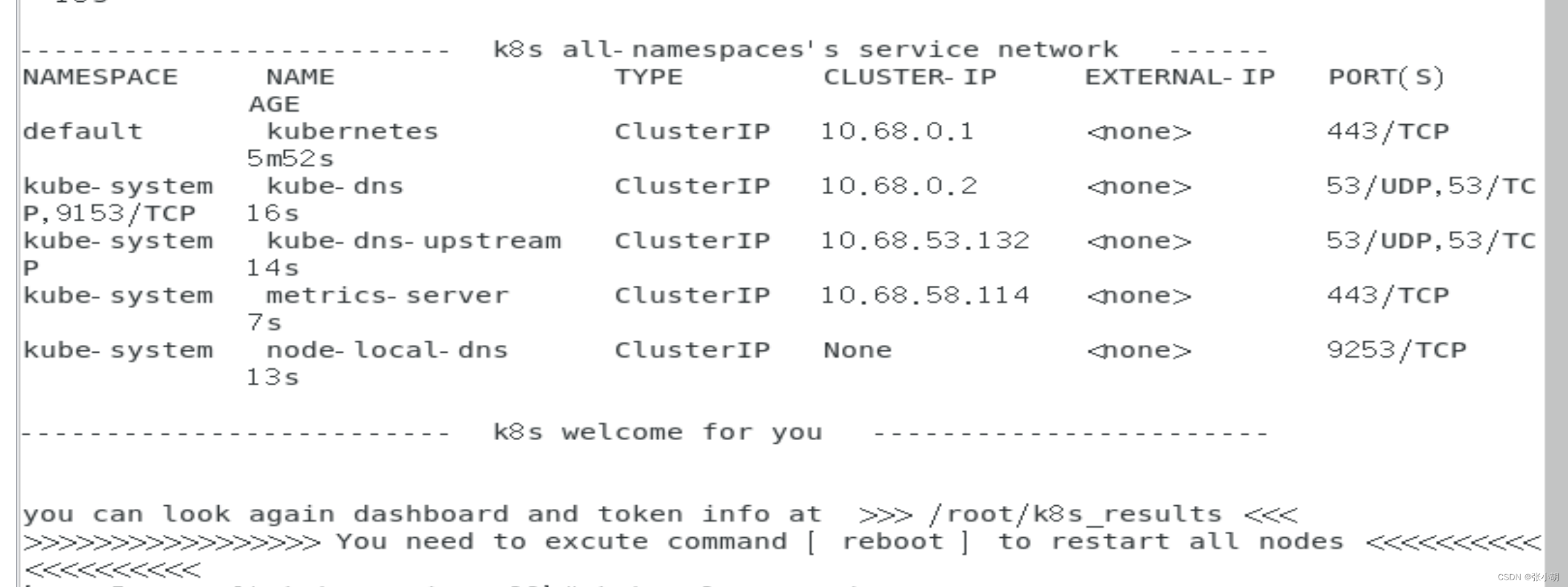

echo "------------------------- k8s all-namespaces's service network ------"

${k8s_bin_path}/kubectl get svc --all-namespaces

echo

echo "------------------------- k8s welcome for you -----------------------"

echo

# you can use k as an alias for kubectl for simplicity

echo "alias k=kubectl && complete -F __start_kubectl k" >> ~/.bashrc

# get dashboard url

${k8s_bin_path}/kubectl cluster-info|grep dashboard|awk '{print $NF}'|tee -a /root/k8s_results

# get login token

${k8s_bin_path}/kubectl -n kube-system describe secret $(${k8s_bin_path}/kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')|grep 'token:'|awk '{print $NF}'|tee -a /root/k8s_results

echo

echo "you can look again dashboard and token info at >>> /root/k8s_results <<<"

echo ">>>>>>>>>>>>>>>>> You need to execute command [ reboot ] to restart all nodes <<<<<<<<<<<<<<<<<<<<"

#find / -type f -name "kubeasz*.tar.gz" -o -name "k8s_install_new.sh"|xargs rm -f
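Most of the config.yml changes in the script follow one pattern: an anchored sed substitution that rewrites a single top-level key. The following is a minimal sketch of that pattern. `set_config_key` is a hypothetical helper, not part of kubeasz, and it runs against a scratch file standing in for clusters/<name>/config.yml. It writes through a temporary file instead of using `sed -ri`, which is an assumption made for portability.

```shell
#!/bin/bash
# Hypothetical helper: set a top-level "KEY: value" line in a YAML-style file.
# '+' is the sed delimiter, as in the script, so values may contain '/'.
# Values containing '+' or '&' would need escaping and are not handled here.
set_config_key() {
    local file=$1 key=$2 value=$3
    sed -E "s+^(${key}:).*\$+\1 ${value}+" "$file" > "${file}.tmp" && mv "${file}.tmp" "$file"
}

cfg=$(mktemp)   # stand-in for clusters/<name>/config.yml
printf 'MAX_PODS: 110\nKUBELET_ROOT_DIR: "/var/lib/kubelet"\n' > "$cfg"
set_config_key "$cfg" MAX_PODS 120
set_config_key "$cfg" KUBELET_ROOT_DIR '"/var/lib/container/kubelet"'
cat "$cfg"
rm -f "$cfg"
```

Because the pattern is anchored with `^`, only a key that starts its line is rewritten; a key that merely ends with the same name is left alone.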

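10. Run the script (on master01 only)

# Example install command (assuming the root password is 123.com; 192.168.8 is the internal network prefix; then, in order: the host numbers, the CRI container runtime, the CNI network plugin, the domain name yun4, and the k8s cluster name test):

# Single-node deployment

bash k8s_install.sh 123.com 192.168.8 201 containerd calico yun4 test

# Multi-node deployment, for example the four machines 192.168.8.[201, 202, 203, 204]

bash k8s_install.sh 123.com 192.168.8 201\ 202\ 203\ 204 containerd calico yun4 test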
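The escaped spaces matter: the script requires exactly seven arguments, so all host numbers must arrive as one argument, which the script then splits and joins with the network prefix. The following minimal sketch shows that expansion. `expand_hosts` is a hypothetical helper that mirrors the script's for-loop over `${nethosts}`.

```shell
#!/bin/bash
# Hypothetical helper: expand a network prefix and a list of host numbers into
# full IP addresses, the way the script combines ${netnum} and ${host}.
expand_hosts() {
    local netnum=$1 nethosts=$2 host
    for host in $nethosts; do   # unquoted on purpose: split on spaces
        echo "${netnum}.${host}"
    done
}

# "201\ 202\ 203\ 204" on the command line reaches the script as the
# single argument "201 202 203 204".
expand_hosts 192.168.8 "201 202 203 204"
```

Quoting the list, as in `"201 202 203 204"`, has the same effect as escaping each space.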

When the installation finishes, output like the following means it succeeded:

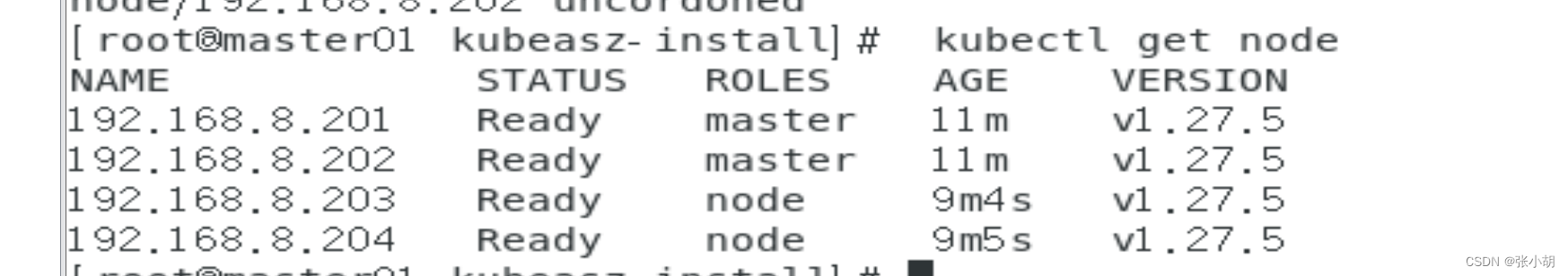

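11. After the installation finishes, do the following

# Reload the shell environment after installation to enable kubectl command completion

. ~/.bashrc

# List the nodes; the masters show SchedulingDisabled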

[root@k8s-master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.8.201 Ready,SchedulingDisabled master 9m42s v1.27.5

192.168.8.202 Ready,SchedulingDisabled master 9m42s v1.27.5

192.168.8.203 Ready node 7m31s v1.27.5

192.168.8.204 Ready node 7m31s v1.27.5

# SchedulingDisabled means the node is cordoned; uncordon it to allow scheduling

[root@k8s-master01 ~]# systemctl stop kube-apiserver

[root@k8s-master01 ~]# systemctl start kube-apiserver

[root@k8s-master01 ~]# systemctl restart kubelet

[root@k8s-master01 ~]# kubectl uncordon 192.168.8.201

node/192.168.8.201 uncordoned

[root@k8s-master01 ~]# kubectl uncordon 192.168.8.202

node/192.168.8.202 uncordoned

[root@k8s-master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.8.201 Ready master 11m v1.27.5

192.168.8.202 Ready master 11m v1.27.5

192.168.8.203 Ready node 9m44s v1.27.5

192.168.8.204 Ready node 9m44s v1.27.5
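With more than a couple of masters, the cordoned nodes can be picked out of the `kubectl get node` output instead of being typed one by one. This is a minimal sketch. `cordoned_nodes` is a hypothetical helper, and the sample input is the first listing from this step.

```shell
#!/bin/bash
# Hypothetical helper: read `kubectl get node` output on stdin and print the
# names of nodes whose STATUS column contains SchedulingDisabled.
cordoned_nodes() {
    awk 'NR > 1 && $2 ~ /SchedulingDisabled/ { print $1 }'
}

# Sample taken from the first node listing; on a live cluster you could run:
#   kubectl get node | cordoned_nodes | xargs -r -n 1 kubectl uncordon
cordoned_nodes <<'EOF'
NAME            STATUS                     ROLES    AGE     VERSION
192.168.8.201   Ready,SchedulingDisabled   master   9m42s   v1.27.5
192.168.8.202   Ready,SchedulingDisabled   master   9m42s   v1.27.5
192.168.8.203   Ready                      node     7m31s   v1.27.5
192.168.8.204   Ready                      node     7m31s   v1.27.5
EOF
```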

A successful result is shown in the figure:

III. Pulling images on k8s 1.27.5

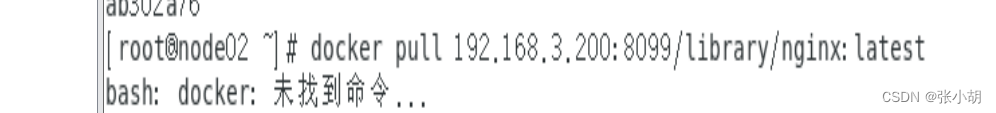

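Errors when pulling images on k8s 1.27.5:

Case 1:

Case 2:

crictl pull ,,,,,,

bash: crictl: command not found...

Explanation of the error:

In a Kubernetes cluster, crictl is a command-line tool for interacting with the Container Runtime Interface (CRI). If a worker node reports that the crictl command cannot be found, crictl is either not installed at all or installed in a directory that is not on the PATH.

Solution: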

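- Check whether crictl is already installed, with which crictl or crictl --version.

- If it is not installed, install it for your operating system and container runtime. crictl is published by the Kubernetes cri-tools project; some runtime bundles, such as the cri-containerd-cni archive used below, also include it.

- Installing Docker does not install crictl. With a Docker-based setup, install crictl separately from the cri-tools releases.

- With containerd as the CRI runtime, you can get crictl from a package manager, from a release archive, or by building it from source.

- If crictl is installed but the shell still cannot find it, its directory is probably missing from PATH. Add that directory to PATH in your shell configuration file (such as .bashrc or .zshrc).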
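The first and last checks can be combined into one lookup. The sketch below is minimal and rests on two assumptions: /opt/kube/bin is taken from the `k8s_bin_path` variable in the install script, and /usr/local/bin is where the manual download places the binary. `locate_tool` is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: print the path of a tool, looking on PATH first and then
# in the extra directories given. Returns 1 if the tool is not found anywhere.
locate_tool() {
    local name=$1 dir
    shift
    if command -v "$name" >/dev/null 2>&1; then
        command -v "$name"
        return 0
    fi
    for dir in "$@"; do
        if [ -x "${dir}/${name}" ]; then
            echo "${dir}/${name}"
            return 0
        fi
    done
    return 1
}

if path=$(locate_tool crictl /opt/kube/bin /usr/local/bin); then
    echo "crictl found at ${path}"
else
    echo "crictl not found; it needs to be installed"
fi
```

If the binary turns up in a directory that is not on PATH, adding a line such as `export PATH=$PATH:/opt/kube/bin` to ~/.bashrc makes it available in new shells.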

If you use containerd as the CRI runtime, you can download crictl from the cri-tools GitHub releases:

From the official site:

# Download crictl

wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.24.0/crictl-v1.24.0-linux-amd64.tar.gz

# Extract the archive

tar zxvf crictl-v1.24.0-linux-amd64.tar.gz

# Move crictl to a suitable location

sudo mv crictl /usr/local/bin/

# Make crictl executable

sudo chmod +x /usr/local/bin/crictl

From the LAN mirror:

Step 1: wget http://192.168.3.200/Software/cri-containerd-cni-1.6.4-linux-amd64.tar.gz

Step 2:

k8s 1.24 and later use containerd as the default container runtime; containerd is compatible with Docker images.

# Edit the containerd configuration to add 192.168.3.200 as a private registry mirror

cat > /etc/containerd/config.toml << EOF
disabled_plugins = []
imports = []
oom_score = 0
plugin_dir = ""
required_plugins = []
root = "/var/lib/containerd"
state = "/run/containerd"
temp = ""
version = 2

[cgroup]
path = ""

[debug]
address = ""
format = ""
gid = 0
level = ""
uid = 0

[grpc]
address = "/run/containerd/containerd.sock"
gid = 0
max_recv_message_size = 16777216
max_send_message_size = 16777216
tcp_address = ""
tcp_tls_ca = ""
tcp_tls_cert = ""
tcp_tls_key = ""
uid = 0

[metrics]
address = ""
grpc_histogram = false

[plugins]

[plugins."io.containerd.gc.v1.scheduler"]
deletion_threshold = 0
mutation_threshold = 100
pause_threshold = 0.02
schedule_delay = "0s"
startup_delay = "100ms"

[plugins."io.containerd.grpc.v1.cri"]
device_ownership_from_security_context = false
disable_apparmor = false
disable_cgroup = false
disable_hugetlb_controller = true
disable_proc_mount = false
disable_tcp_service = true
enable_selinux = false
enable_tls_streaming = false
enable_unprivileged_icmp = false
enable_unprivileged_ports = false
ignore_image_defined_volumes = false
max_concurrent_downloads = 3
max_container_log_line_size = 16384
netns_mounts_under_state_dir = false
restrict_oom_score_adj = false
sandbox_image = "easzlab.io.local:5000/easzlab/pause:3.9"
selinux_category_range = 1024
stats_collect_period = 10
stream_idle_timeout = "4h0m0s"
stream_server_address = "127.0.0.1"
stream_server_port = "0"
systemd_cgroup = false
tolerate_missing_hugetlb_controller = true
unset_seccomp_profile = ""

[plugins."io.containerd.grpc.v1.cri".cni]
bin_dir = "/opt/cni/bin"
conf_dir = "/etc/cni/net.d"
conf_template = "/etc/cni/net.d/10-default.conf"
max_conf_num = 1

[plugins."io.containerd.grpc.v1.cri".containerd]
default_runtime_name = "runc"
disable_snapshot_annotations = true
discard_unpacked_layers = false
ignore_rdt_not_enabled_errors = false
no_pivot = false
snapshotter = "overlayfs"

[plugins."io.containerd.grpc.v1.cri".containerd.default_runtime]
base_runtime_spec = ""
container_annotations = []
pod_annotations = []
privileged_without_host_devices = false
runtime_engine = ""
runtime_root = ""
runtime_type = ""

[plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
base_runtime_spec = ""
container_annotations = []
pod_annotations = []
privileged_without_host_devices = false
runtime_engine = ""
runtime_root = ""
runtime_type = "io.containerd.runc.v2"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
BinaryName = ""
CriuImagePath = ""
CriuPath = ""
CriuWorkPath = ""
IoGid = 0
IoUid = 0
NoNewKeyring = false
NoPivotRoot = false
Root = ""
ShimCgroup = ""
SystemdCgroup = true

[plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime]
base_runtime_spec = ""
container_annotations = []
pod_annotations = []
privileged_without_host_devices = false
runtime_engine = ""
runtime_root = ""
runtime_type = ""

[plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime.options]

[plugins."io.containerd.grpc.v1.cri".image_decryption]
key_model = "node"

[plugins."io.containerd.grpc.v1.cri".registry]

[plugins."io.containerd.grpc.v1.cri".registry.auths]

[plugins."io.containerd.grpc.v1.cri".registry.configs]

[plugins."io.containerd.grpc.v1.cri".registry.configs."easzlab.io.local:5000".tls]
insecure_skip_verify = true

[plugins."io.containerd.grpc.v1.cri".registry.configs."harbor.easzlab.io.local:8443".tls]
insecure_skip_verify = true

[plugins."io.containerd.grpc.v1.cri".registry.configs."192.168.3.200:8099".tls]
insecure_skip_verify = true

[plugins."io.containerd.grpc.v1.cri".registry.headers]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."easzlab.io.local:5000"]
endpoint = ["http://easzlab.io.local:5000"]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.easzlab.io.local:8443"]
endpoint = ["https://harbor.easzlab.io.local:8443"]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
endpoint = ["https://docker.nju.edu.cn/", "https://kuamavit.mirror.aliyuncs.com"]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."gcr.io"]
endpoint = ["https://gcr.nju.edu.cn"]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."k8s.gcr.io"]
endpoint = ["https://gcr.nju.edu.cn/google-containers/"]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."quay.io"]
endpoint = ["https://quay.nju.edu.cn"]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."ghcr.io"]
endpoint = ["https://ghcr.nju.edu.cn"]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."nvcr.io"]
endpoint = ["https://ngc.nju.edu.cn"]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."192.168.3.200:8099"]
endpoint = ["http://192.168.3.200:8099"]

[plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]
tls_cert_file = ""
tls_key_file = ""

[plugins."io.containerd.internal.v1.opt"]
path = "/opt/containerd"

[plugins."io.containerd.internal.v1.restart"]
interval = "10s"

[plugins."io.containerd.metadata.v1.bolt"]
content_sharing_policy = "shared"

[plugins."io.containerd.monitor.v1.cgroups"]
no_prometheus = false

[plugins."io.containerd.runtime.v1.linux"]
no_shim = false
runtime = "runc"
runtime_root = ""
shim = "containerd-shim"
shim_debug = false

[plugins."io.containerd.service.v1.diff-service"]
default = ["walking"]

[plugins."io.containerd.snapshotter.v1.aufs"]
root_path = ""

[plugins."io.containerd.snapshotter.v1.btrfs"]
root_path = ""

[plugins."io.containerd.snapshotter.v1.devmapper"]
async_remove = false
base_image_size = ""
pool_name = ""
root_path = ""

[plugins."io.containerd.snapshotter.v1.native"]
root_path = ""

[plugins."io.containerd.snapshotter.v1.overlayfs"]
root_path = ""

[plugins."io.containerd.snapshotter.v1.zfs"]
root_path = ""

[proxy_plugins]

[stream_processors]

[stream_processors."io.containerd.ocicrypt.decoder.v1.tar"]
accepts = ["application/vnd.oci.image.layer.v1.tar+encrypted"]
args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]
env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]
path = "ctd-decoder"
returns = "application/vnd.oci.image.layer.v1.tar"

[stream_processors."io.containerd.ocicrypt.decoder.v1.tar.gzip"]
accepts = ["application/vnd.oci.image.layer.v1.tar+gzip+encrypted"]
args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]
env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]
path = "ctd-decoder"
returns = "application/vnd.oci.image.layer.v1.tar+gzip"

[timeouts]
"io.containerd.timeout.shim.cleanup" = "5s"
"io.containerd.timeout.shim.load" = "5s"
"io.containerd.timeout.shim.shutdown" = "3s"
"io.containerd.timeout.task.state" = "2s"

[ttrpc]
address = ""
gid = 0
uid = 0
EOF
