// create a checkpoint every 5 seconds

env.enableCheckpointing(5000)

// make parameters available in the web interface

env.getConfig.setGlobalJobParameters(params)

// create a Kafka streaming source consumer for Kafka 0.10.x

val kafkaConsumer = new FlinkKafkaConsumer010(

params.getRequired(“input-topic”),

new SimpleStringSchema,

params.getProperties)

val messageStream = env

.addSource(kafkaConsumer)

.map(in => prefix + in)

// create a Kafka producer for Kafka 0.10.x

val kafkaProducer = new FlinkKafkaProducer010(

params.getRequired(“output-topic”),

new SimpleStringSchema,

params.getProperties)

// write data into Kafka

messageStream.addSink(kafkaProducer)

env.execute(“Kafka 0.10 Example”)

从上面的demo可以看出,数据源的入口就是FlinkKafkaConsumer010,当然这里面只是简单的构建了一个对象,并进行了一些配置的初始化,真正source的启动是在其run方法中run方法的调用过程在这里不讲解,后面会出教程讲解。

首先看一下类的继承关系

public class FlinkKafkaConsumer010 extends FlinkKafkaConsumer09

public class FlinkKafkaConsumer09 extends FlinkKafkaConsumerBase

其中,run方法就在FlinkKafkaConsumerBase里,当然其中open方法里面对kafka相关内容进行里初始化。

从输入到计算到输出完整的计算链条的调用过程,后面浪尖会出文章介绍。在这里只关心flink如何从主动消费数据,然后变成事件处理机制的过程。

由于其FlinkKafkaConsumerBase的run比较长,我这里只看重要的部分,首先是会创建Kafka09Fetcher。

this.kafkaFetcher = createFetcher(

sourceContext,

subscribedPartitionsToStartOffsets,

periodicWatermarkAssigner,

punctuatedWatermarkAssigner,

(StreamingRuntimeContext) getRuntimeContext(),

offsetCommitMode,

getRuntimeContext().getMetricGroup().addGroup(KAFKA_CONSUMER_METRICS_GROUP),

useMetrics);

接着下面有段神器,flink严重优越于Spark Streaming的,代码如下:

final AtomicReference discoveryLoopErrorRef = new AtomicReference<>();

this.discoveryLoopThread = new Thread(new Runnable() {

@Override

public void run() {

try {

// --------------------- partition discovery loop ---------------------

List discoveredPartitions;

// throughout the loop, we always eagerly check if we are still running before

// performing the next operation, so that we can escape the loop as soon as possible

while (running) {

if (LOG.isDebugEnabled()) {

LOG.debug(“Consumer subtask {} is trying to discover new partitions …”, getRuntimeContext().getIndexOfThisSubtask());

}

try {

discoveredPartitions = partitionDiscoverer.discoverPartitions();

} catch (AbstractPartitionDiscoverer.WakeupException | AbstractPartitionDiscoverer.ClosedException e) {

// the partition discoverer may have been closed or woken up before or during the discovery;

// this would only happen if the consumer was canceled; simply escape the loop

break;

}

// no need to add the discovered partitions if we were closed during the meantime

if (running && !discoveredPartitions.isEmpty()) {

kafkaFetcher.addDiscoveredPartitions(discoveredPartitions);

}

// do not waste any time sleeping if we’re not running anymore

if (running && discoveryIntervalMillis != 0) {

try {

Thread.sleep(discoveryIntervalMillis);

} catch (InterruptedException iex) {

// may be interrupted if the consumer was canceled midway; simply escape the loop

break;

}

}

}

} catch (Exception e) {

discoveryLoopErrorRef.set(e);

} finally {

// calling cancel will also let the fetcher loop escape

// (if not running, cancel() was already called)

if (running) {

cancel();

}

}

}

}, "Kafka Partition Discovery for " + getRuntimeContext().getTaskNameWithSubtasks());

它定义了一个线程池对象,去动态发现kafka新增的topic(支持正则形式指定消费的topic),或者动态发现kafka新增的分区。

接着肯定是启动动态发现分区或者topic线程,并且启动kafkaFetcher。

discoveryLoopThread.start();

kafkaFetcher.runFetchLoop();

// --------------------------------------------------------------------

// make sure that the partition discoverer is properly closed

partitionDiscoverer.close();

discoveryLoopThread.join();

接着,我们进入kafkaFetcher的runFetchLoop方法,映入眼帘的是

// kick off the actual Kafka consumer

consumerThread.start();

这个线程是在构建kafka09Fetcher的时候创建的

this.consumerThread = new KafkaConsumerThread(

LOG,

handover,

kafkaProperties,

unassignedPartitionsQueue,

createCallBridge(),

getFetcherName() + " for " + taskNameWithSubtasks,

pollTimeout,

useMetrics,

consumerMetricGroup,

subtaskMetricGroup);

KafkaConsumerThread 继承自Thread,然后在其run方法里,首先看到的是

// this is the means to talk to FlinkKafkaConsumer’s main thread

final Handover handover = this.handover;

这个handover的作用呢暂且不提,接着分析run方法里面内容

1,获取消费者

try {

this.consumer = getConsumer(kafkaProperties);

}

2,检测分区并且会重分配新增的分区

try {

if (hasAssignedPartitions) {

newPartitions = unassignedPartitionsQueue.pollBatch();

}

else {

// if no assigned partitions block until we get at least one

// instead of hot spinning this loop. We rely on a fact that

// unassignedPartitionsQueue will be closed on a shutdown, so

// we don’t block indefinitely

newPartitions = unassignedPartitionsQueue.getBatchBlocking();

}

if (newPartitions != null) {

reassignPartitions(newPartitions);

}

3,消费数据

// get the next batch of records, unless we did not manage to hand the old batch over

if (records == null) {

try {

records = consumer.poll(pollTimeout);

}

catch (WakeupException we) {

continue;

}

}

4,通过handover将数据发出去

try {

handover.produce(records);

records = null;

}

由于被kafkaConsumerThread打断了kafkaFetcher的runFetchLoop方法的分析,我们在这里继续

1,拉取handover.producer生产的数据

while (running) {

最后

自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。

深知大多数Java工程师,想要提升技能,往往是自己摸索成长,自己不成体系的自学效果低效漫长且无助。

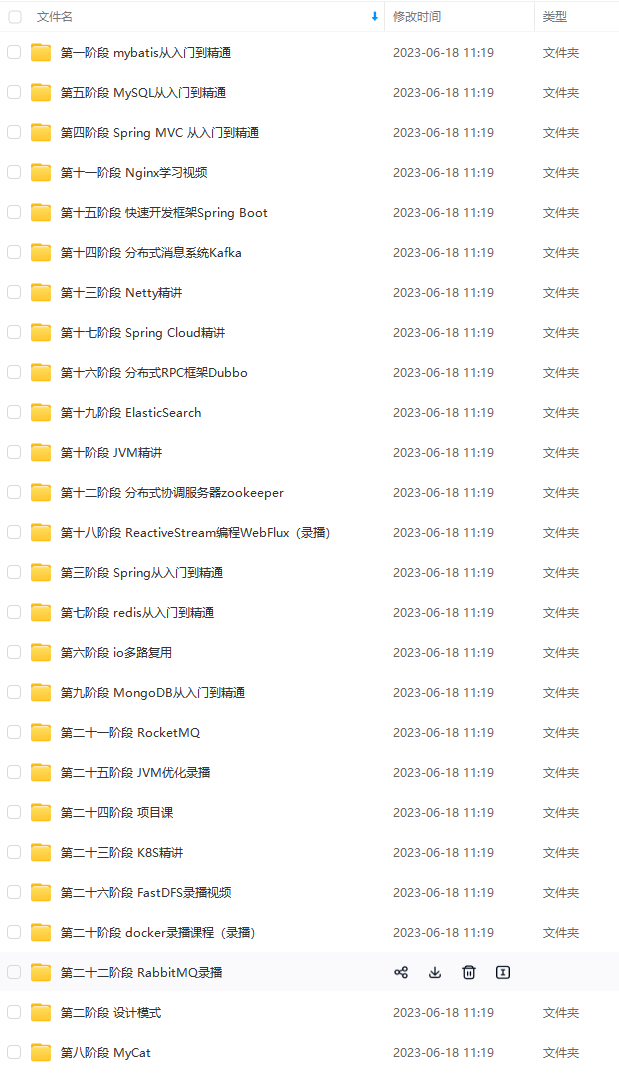

因此收集整理了一份《2024年Java开发全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友,同时减轻大家的负担。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上Java开发知识点,不论你是刚入门Java开发的新手,还是希望在技术上不断提升的资深开发者,这些资料都将为你打开新的学习之门!

如果你觉得这些内容对你有帮助,需要这份全套学习资料的朋友可以戳我获取!!

由于文件比较大,这里只是将部分目录截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且会持续更新!

助到想自学提升又不知道该从何学起的朋友,同时减轻大家的负担。**

[外链图片转存中…(img-hnfruQTu-1715675891447)]

[外链图片转存中…(img-6WsEdEmS-1715675891448)]

[外链图片转存中…(img-2nGXJ5IP-1715675891448)]

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上Java开发知识点,不论你是刚入门Java开发的新手,还是希望在技术上不断提升的资深开发者,这些资料都将为你打开新的学习之门!

如果你觉得这些内容对你有帮助,需要这份全套学习资料的朋友可以戳我获取!!

由于文件比较大,这里只是将部分目录截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且会持续更新!

249

249

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?