先自我介绍一下,小编浙江大学毕业,去过华为、字节跳动等大厂,目前阿里P7

深知大多数程序员,想要提升技能,往往是自己摸索成长,但自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

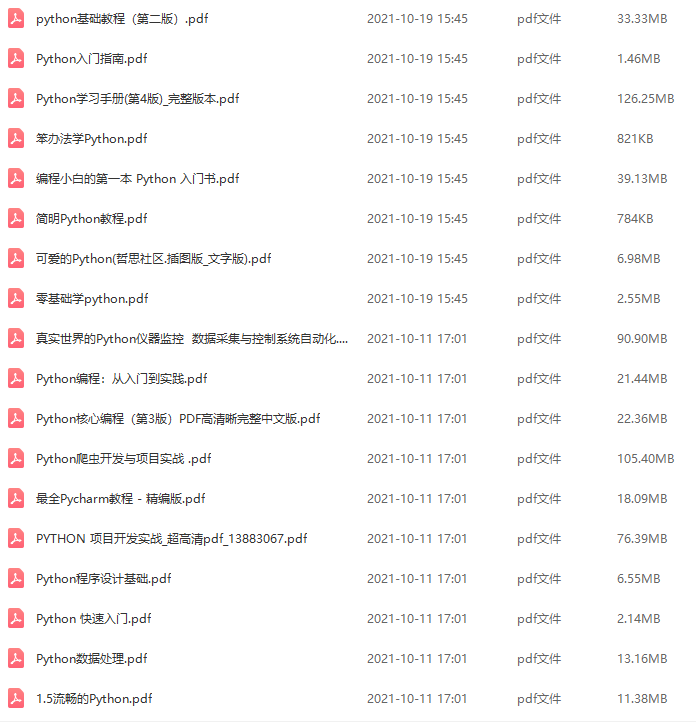

因此收集整理了一份《2024年最新Python全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上Python知识点,真正体系化!

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

如果你需要这些资料,可以添加V获取:vip1024c (备注Python)

正文

Object Tracking
===================================================================================

LightTrack: Finding Lightweight Neural Networks for Object Tracking via One-Shot Architecture Search

- Paper: https://arxiv.org/abs/2104.14545
- Code: https://github.com/researchmm/LightTrack

Towards More Flexible and Accurate Object Tracking with Natural Language: Algorithms and Benchmark

- Homepage: https://sites.google.com/view/langtrackbenchmark/
- Paper: https://arxiv.org/abs/2103.16746
- Evaluation Toolkit: https://github.com/wangxiao5791509/TNL2K_evaluation_toolkit
- Demo Video: https://www.youtube.com/watch?v=7lvVDlkkff0&ab_channel=XiaoWang

IoU Attack: Towards Temporally Coherent Black-Box Adversarial Attack for Visual Object Tracking

- Paper: https://arxiv.org/abs/2103.14938
- Code: https://github.com/VISION-SJTU/IoUattack

Graph Attention Tracking

- Paper: https://arxiv.org/abs/2011.11204
- Code: https://github.com/ohhhyeahhh/SiamGAT

Rotation Equivariant Siamese Networks for Tracking

- Paper: https://arxiv.org/abs/2012.13078
- Code: None

Transformer Meets Tracker: Exploiting Temporal Context for Robust Visual Tracking

- Paper (Oral): https://arxiv.org/abs/2103.11681
- Code: https://github.com/594422814/TransformerTrack

Transformer Tracking

- Paper: https://arxiv.org/abs/2103.15436
- Code: https://github.com/chenxin-dlut/TransT

Tracking Pedestrian Heads in Dense Crowd

- Homepage: https://project.inria.fr/crowdscience/project/dense-crowd-head-tracking/
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Sundararaman_Tracking_Pedestrian_Heads_in_Dense_Crowd_CVPR_2021_paper.html
- Code 1: https://github.com/Sentient07/HeadHunter
- Code 2: https://github.com/Sentient07/HeadHunter%E2%80%93T
- Dataset: https://project.inria.fr/crowdscience/project/dense-crowd-head-tracking/

Multiple Object Tracking with Correlation Learning

- Paper: https://arxiv.org/abs/2104.03541
- Code: None

Probabilistic Tracklet Scoring and Inpainting for Multiple Object Tracking

- Paper: https://arxiv.org/abs/2012.02337
- Code: None

Learning a Proposal Classifier for Multiple Object Tracking

- Paper: https://arxiv.org/abs/2103.07889
- Code: https://github.com/daip13/LPC_MOT.git

Track to Detect and Segment: An Online Multi-Object Tracker

- Homepage: https://jialianwu.com/projects/TraDeS.html
- Paper: https://arxiv.org/abs/2103.08808
- Code: https://github.com/JialianW/TraDeS

Semantic Segmentation
======================================================================================

1. HyperSeg: Patch-wise Hypernetwork for Real-time Semantic Segmentation

- Affiliations: Facebook AI, Bar-Ilan University, Tel Aviv University
- Homepage: https://nirkin.com/hyperseg/
- Paper: https://openaccess.thecvf.com/content/CVPR2021/papers/Nirkin_HyperSeg_Patch-Wise_Hypernetwork_for_Real-Time_Semantic_Segmentation_CVPR_2021_paper.pdf
- Code: https://github.com/YuvalNirkin/hyperseg

2. Rethinking BiSeNet For Real-time Semantic Segmentation

- Affiliations: Meituan
- Paper: https://arxiv.org/abs/2104.13188
- Code: https://github.com/MichaelFan01/STDC-Seg

3. Progressive Semantic Segmentation

- Affiliations: VinAI Research, VinUniversity, University of Arkansas, Stony Brook University
- Paper: https://arxiv.org/abs/2104.03778
- Code: https://github.com/VinAIResearch/MagNet

4. Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers

- Affiliations: Fudan University, University of Oxford, University of Surrey, Tencent Youtu Lab, Facebook AI
- Homepage: https://fudan-zvg.github.io/SETR
- Paper: https://arxiv.org/abs/2012.15840
- Code: https://github.com/fudan-zvg/SETR

5. Capturing Omni-Range Context for Omnidirectional Segmentation

- Affiliations: Karlsruhe Institute of Technology, Carl Zeiss, Huawei
- Paper: https://arxiv.org/abs/2103.05687
- Code: None

6. Learning Statistical Texture for Semantic Segmentation

- Affiliations: Beihang University, SenseTime
- Paper: https://arxiv.org/abs/2103.04133
- Code: None

7. InverseForm: A Loss Function for Structured Boundary-Aware Segmentation

- Affiliations: Qualcomm AI Research
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Borse_InverseForm_A_Loss_Function_for_Structured_Boundary-Aware_Segmentation_CVPR_2021_paper.html
- Code: None

8. DCNAS: Densely Connected Neural Architecture Search for Semantic Image Segmentation

- Affiliations: Joyy Inc., Kuaishou, Beihang University, et al.
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Zhang_DCNAS_Densely_Connected_Neural_Architecture_Search_for_Semantic_Image_Segmentation_CVPR_2021_paper.html
- Code: None

9. Railroad Is Not a Train: Saliency As Pseudo-Pixel Supervision for Weakly Supervised Semantic Segmentation

- Affiliations: Yonsei University, Sungkyunkwan University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Lee_Railroad_Is_Not_a_Train_Saliency_As_Pseudo-Pixel_Supervision_for_CVPR_2021_paper.html
- Code: https://github.com/halbielee/EPS

10. Background-Aware Pooling and Noise-Aware Loss for Weakly-Supervised Semantic Segmentation

- Affiliations: Yonsei University
- Homepage: https://cvlab.yonsei.ac.kr/projects/BANA/
- Paper: https://arxiv.org/abs/2104.00905
- Code: None

11. Non-Salient Region Object Mining for Weakly Supervised Semantic Segmentation

- Affiliations: Nanjing University of Science and Technology, MBZUAI, University of Electronic Science and Technology of China, University of Adelaide, University of Technology Sydney
- Paper: https://arxiv.org/abs/2103.14581
- Code: https://github.com/NUST-Machine-Intelligence-Laboratory/nsrom

12. Embedded Discriminative Attention Mechanism for Weakly Supervised Semantic Segmentation

- Affiliations: Beijing Institute of Technology, Meituan
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Wu_Embedded_Discriminative_Attention_Mechanism_for_Weakly_Supervised_Semantic_Segmentation_CVPR_2021_paper.html
- Code: https://github.com/allenwu97/EDAM

13. BBAM: Bounding Box Attribution Map for Weakly Supervised Semantic and Instance Segmentation

- Affiliations: Seoul National University
- Paper: https://arxiv.org/abs/2103.08907
- Code: https://github.com/jbeomlee93/BBAM

14. Semi-Supervised Semantic Segmentation with Cross Pseudo Supervision

- Affiliations: Peking University, Microsoft Research Asia
- Paper: https://arxiv.org/abs/2106.01226
- Code: https://github.com/charlesCXK/TorchSemiSeg

15. Semi-supervised Domain Adaptation based on Dual-level Domain Mixing for Semantic Segmentation

- Affiliations: Huawei, Dalian University of Technology, Peking University
- Paper: https://arxiv.org/abs/2103.04705
- Code: None

16. Semi-Supervised Semantic Segmentation With Directional Context-Aware Consistency

- Affiliations: The Chinese University of Hong Kong, SmartMore, University of Oxford
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Lai_Semi-Supervised_Semantic_Segmentation_With_Directional_Context-Aware_Consistency_CVPR_2021_paper.html
- Code: None

17. Semantic Segmentation With Generative Models: Semi-Supervised Learning and Strong Out-of-Domain Generalization

- Affiliations: NVIDIA, University of Toronto, Yale University, MIT, Vector Institute
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Li_Semantic_Segmentation_With_Generative_Models_Semi-Supervised_Learning_and_Strong_Out-of-Domain_CVPR_2021_paper.html
- Code: https://nv-tlabs.github.io/semanticGAN/

18. Three Ways To Improve Semantic Segmentation With Self-Supervised Depth Estimation

- Affiliations: ETH Zurich, University of Bonn, KU Leuven
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Hoyer_Three_Ways_To_Improve_Semantic_Segmentation_With_Self-Supervised_Depth_Estimation_CVPR_2021_paper.html
- Code: https://github.com/lhoyer/improving_segmentation_with_selfsupervised_depth

19. Cluster, Split, Fuse, and Update: Meta-Learning for Open Compound Domain Adaptive Semantic Segmentation

- Affiliations: ETH Zurich, KU Leuven, University of Electronic Science and Technology of China
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Gong_Cluster_Split_Fuse_and_Update_Meta-Learning_for_Open_Compound_Domain_CVPR_2021_paper.html
- Code: None

20. Source-Free Domain Adaptation for Semantic Segmentation

- Affiliations: East China Normal University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Liu_Source-Free_Domain_Adaptation_for_Semantic_Segmentation_CVPR_2021_paper.html
- Code: None

21. Uncertainty Reduction for Model Adaptation in Semantic Segmentation

- Affiliations: Idiap Research Institute, EPFL, University of Geneva
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/S_Uncertainty_Reduction_for_Model_Adaptation_in_Semantic_Segmentation_CVPR_2021_paper.html
- Code: https://git.io/JthPp

22. Self-Supervised Augmentation Consistency for Adapting Semantic Segmentation

- Affiliations: TU Darmstadt, hessian.AI
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Araslanov_Self-Supervised_Augmentation_Consistency_for_Adapting_Semantic_Segmentation_CVPR_2021_paper.html
- Code: https://github.com/visinf/da-sac

23. RobustNet: Improving Domain Generalization in Urban-Scene Segmentation via Instance Selective Whitening

- Affiliations: LG AI Research, KAIST, et al.
- Paper: https://arxiv.org/abs/2103.15597
- Code: https://github.com/shachoi/RobustNet

24. Coarse-to-Fine Domain Adaptive Semantic Segmentation with Photometric Alignment and Category-Center Regularization

- Affiliations: The University of Hong Kong, Deepwise Healthcare
- Paper: https://arxiv.org/abs/2103.13041
- Code: None

25. MetaCorrection: Domain-aware Meta Loss Correction for Unsupervised Domain Adaptation in Semantic Segmentation

- Affiliations: City University of Hong Kong, Baidu
- Paper: https://arxiv.org/abs/2103.05254
- Code: https://github.com/cyang-cityu/MetaCorrection

26. Multi-Source Domain Adaptation with Collaborative Learning for Semantic Segmentation

- Affiliations: Huawei Cloud, Huawei Noah's Ark Lab, Dalian University of Technology
- Paper: https://arxiv.org/abs/2103.04717
- Code: None

27. Prototypical Pseudo Label Denoising and Target Structure Learning for Domain Adaptive Semantic Segmentation

- Affiliations: University of Science and Technology of China, Microsoft Research Asia
- Paper: https://arxiv.org/abs/2101.10979
- Code: https://github.com/microsoft/ProDA

28. DANNet: A One-Stage Domain Adaptation Network for Unsupervised Nighttime Semantic Segmentation

- Affiliations: University of South Carolina, Farsee2 Technology
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Wu_DANNet_A_One-Stage_Domain_Adaptation_Network_for_Unsupervised_Nighttime_Semantic_CVPR_2021_paper.html
- Code: https://github.com/W-zx-Y/DANNet

29. Scale-Aware Graph Neural Network for Few-Shot Semantic Segmentation

- Affiliations: MBZUAI, IIAI, Harbin Institute of Technology
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Xie_Scale-Aware_Graph_Neural_Network_for_Few-Shot_Semantic_Segmentation_CVPR_2021_paper.html
- Code: None

30. Anti-Aliasing Semantic Reconstruction for Few-Shot Semantic Segmentation

- Affiliations: University of Chinese Academy of Sciences, Tsinghua University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Liu_Anti-Aliasing_Semantic_Reconstruction_for_Few-Shot_Semantic_Segmentation_CVPR_2021_paper.html
- Code: https://github.com/Bibkiller/ASR

31. PiCIE: Unsupervised Semantic Segmentation Using Invariance and Equivariance in Clustering

- Affiliations: UT-Austin, Cornell University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Cho_PiCIE_Unsupervised_Semantic_Segmentation_Using_Invariance_and_Equivariance_in_Clustering_CVPR_2021_paper.html
- Code: https://github.com/janghyuncho/PiCIE

32. VSPW: A Large-scale Dataset for Video Scene Parsing in the Wild

- Affiliations: Zhejiang University, Baidu, University of Technology Sydney
- Homepage: https://www.vspwdataset.com/
- Paper: https://www.vspwdataset.com/CVPR2021__miao.pdf
- GitHub: https://github.com/sssdddwww2/vspw_dataset_download

33. Continual Semantic Segmentation via Repulsion-Attraction of Sparse and Disentangled Latent Representations

- Affiliations: University of Padova
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Michieli_Continual_Semantic_Segmentation_via_Repulsion-Attraction_of_Sparse_and_Disentangled_Latent_CVPR_2021_paper.html
- Code: https://lttm.dei.unipd.it/paper_data/SDR/

34. Exploit Visual Dependency Relations for Semantic Segmentation

- Affiliations: University of Illinois at Chicago
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Liu_Exploit_Visual_Dependency_Relations_for_Semantic_Segmentation_CVPR_2021_paper.html
- Code: None

35. Revisiting Superpixels for Active Learning in Semantic Segmentation With Realistic Annotation Costs

- Affiliations: Institute for Infocomm Research, National University of Singapore
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Cai_Revisiting_Superpixels_for_Active_Learning_in_Semantic_Segmentation_With_Realistic_CVPR_2021_paper.html
- Code: None

36. PLOP: Learning without Forgetting for Continual Semantic Segmentation

- Affiliations: Sorbonne University, Heuritech, Datakalab, Valeo.ai
- Paper: https://arxiv.org/abs/2011.11390
- Code: https://github.com/arthurdouillard/CVPR2021_PLOP

37. 3D-to-2D Distillation for Indoor Scene Parsing

- Affiliations: The Chinese University of Hong Kong, The University of Hong Kong
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Liu_3D-to-2D_Distillation_for_Indoor_Scene_Parsing_CVPR_2021_paper.html
- Code: None

38. Bidirectional Projection Network for Cross Dimension Scene Understanding

- Affiliations: The Chinese University of Hong Kong, University of Oxford, et al.
- Paper (Oral): https://arxiv.org/abs/2103.14326
- Code: https://github.com/wbhu/BPNet

39. PointFlow: Flowing Semantics Through Points for Aerial Image Segmentation

- Affiliations: Peking University, Chinese Academy of Sciences, University of Chinese Academy of Sciences, ETH Zurich, SenseTime, et al.
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Li_PointFlow_Flowing_Semantics_Through_Points_for_Aerial_Image_Segmentation_CVPR_2021_paper.html
- Code: https://github.com/lxtGH/PFSegNets

Instance Segmentation
======================================================================================

DCT-Mask: Discrete Cosine Transform Mask Representation for Instance Segmentation

- Paper: https://arxiv.org/abs/2011.09876
- Code: https://github.com/aliyun/DCT-Mask

Incremental Few-Shot Instance Segmentation

- Paper: https://arxiv.org/abs/2105.05312
- Code: https://github.com/danganea/iMTFA

A^2-FPN: Attention Aggregation based Feature Pyramid Network for Instance Segmentation

- Paper: https://arxiv.org/abs/2105.03186
- Code: None

RefineMask: Towards High-Quality Instance Segmentation with Fine-Grained Features

- Paper: https://arxiv.org/abs/2104.08569
- Code: https://github.com/zhanggang001/RefineMask/

Look Closer to Segment Better: Boundary Patch Refinement for Instance Segmentation

- Paper: https://arxiv.org/abs/2104.05239
- Code: https://github.com/tinyalpha/BPR

Multi-Scale Aligned Distillation for Low-Resolution Detection

- Paper: https://jiaya.me/papers/ms_align_distill_cvpr21.pdf
- Code: https://github.com/Jia-Research-Lab/MSAD

Boundary IoU: Improving Object-Centric Image Segmentation Evaluation

- Homepage: https://bowenc0221.github.io/boundary-iou/
- Paper: https://arxiv.org/abs/2103.16562
- Code: https://github.com/bowenc0221/boundary-iou-api

Deep Occlusion-Aware Instance Segmentation with Overlapping BiLayers

- Paper: https://arxiv.org/abs/2103.12340
- Code: https://github.com/lkeab/BCNet

Zero-shot Instance Segmentation (not sure)

- Paper: None
- Code: https://github.com/CVPR2021-pape-id-1395/CVPR2021-paper-id-1395

STMask: Spatial Feature Calibration and Temporal Fusion for Effective One-stage Video Instance Segmentation

- Paper: http://www4.comp.polyu.edu.hk/~cslzhang/papers.htm
- Code: https://github.com/MinghanLi/STMask

End-to-End Video Instance Segmentation with Transformers

- Paper (Oral): https://arxiv.org/abs/2011.14503
- Code: https://github.com/Epiphqny/VisTR

Panoptic Segmentation
======================================================================================

ViP-DeepLab: Learning Visual Perception with Depth-aware Video Panoptic Segmentation

- Paper: https://arxiv.org/abs/2012.05258
- Code: https://github.com/joe-siyuan-qiao/ViP-DeepLab
- Dataset: https://github.com/joe-siyuan-qiao/ViP-DeepLab

Part-aware Panoptic Segmentation

- Paper: https://arxiv.org/abs/2106.06351
- Code: https://github.com/tue-mps/panoptic_parts
- Dataset: https://github.com/tue-mps/panoptic_parts

Exemplar-Based Open-Set Panoptic Segmentation Network

- Homepage: https://cv.snu.ac.kr/research/EOPSN/
- Paper: https://arxiv.org/abs/2105.08336
- Code: https://github.com/jd730/EOPSN

MaX-DeepLab: End-to-End Panoptic Segmentation With Mask Transformers

- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Wang_MaX-DeepLab_End-to-End_Panoptic_Segmentation_With_Mask_Transformers_CVPR_2021_paper.html
- Code: None

Panoptic Segmentation Forecasting

- Paper: https://arxiv.org/abs/2104.03962
- Code: https://github.com/nianticlabs/panoptic-forecasting

Fully Convolutional Networks for Panoptic Segmentation

- Paper: https://arxiv.org/abs/2012.00720
- Code: https://github.com/yanwei-li/PanopticFCN

Cross-View Regularization for Domain Adaptive Panoptic Segmentation

- Paper: https://arxiv.org/abs/2103.02584
- Code: None

Medical Image Segmentation
=================================================================

1. Learning Calibrated Medical Image Segmentation via Multi-Rater Agreement Modeling

- Affiliations: Tencent Jarvis Lab, Beijing Tongren Hospital
- Paper (Best Paper Candidate): https://openaccess.thecvf.com/content/CVPR2021/html/Ji_Learning_Calibrated_Medical_Image_Segmentation_via_Multi-Rater_Agreement_Modeling_CVPR_2021_paper.html
- Code: https://github.com/jiwei0921/MRNet/

2. Every Annotation Counts: Multi-Label Deep Supervision for Medical Image Segmentation

- Affiliations: Karlsruhe Institute of Technology, Carl Zeiss, et al.
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Reiss_Every_Annotation_Counts_Multi-Label_Deep_Supervision_for_Medical_Image_Segmentation_CVPR_2021_paper.html
- Code: None

3. FedDG: Federated Domain Generalization on Medical Image Segmentation via Episodic Learning in Continuous Frequency Space

- Affiliations: The Chinese University of Hong Kong, The Hong Kong Polytechnic University
- Paper: https://arxiv.org/abs/2103.06030
- Code: https://github.com/liuquande/FedDG-ELCFS

4. DiNTS: Differentiable Neural Network Topology Search for 3D Medical Image Segmentation

- Affiliations: Johns Hopkins University, NVIDIA
- Paper (Oral): https://arxiv.org/abs/2103.15954
- Code: None

5. DARCNN: Domain Adaptive Region-Based Convolutional Neural Network for Unsupervised Instance Segmentation in Biomedical Images

- Affiliations: Stanford University
- Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Hsu_DARCNN_Domain_Adaptive_Region-Based_Convolutional_Neural_Network_for_Unsupervised_Instance_CVPR_2021_paper.html
- Code: None

Video Object Segmentation

============================================================================================

Learning Position and Target Consistency for Memory-based Video Object Segmentation

- Paper: https://arxiv.org/abs/2104.04329
- Code: None

SSTVOS: Sparse Spatiotemporal Transformers for Video Object Segmentation

- Paper (Oral): https://arxiv.org/abs/2101.08833
- Code: https://github.com/dukebw/SSTVOS

Interactive Video Object Segmentation

===========================================================================================================

Modular Interactive Video Object Segmentation: Interaction-to-Mask, Propagation and Difference-Aware Fusion

- Homepage: https://hkchengrex.github.io/MiVOS/
- Paper: https://arxiv.org/abs/2103.07941
- Code: https://github.com/hkchengrex/MiVOS
- Demo: https://hkchengrex.github.io/MiVOS/video.html#partb

Learning to Recommend Frame for Interactive Video Object Segmentation in the Wild

- Paper: https://arxiv.org/abs/2103.10391
- Code: https://github.com/svip-lab/IVOS-W

Salient Object Detection
====================================================================================

Uncertainty-aware Joint Salient Object and Camouflaged Object Detection

- Paper: https://arxiv.org/abs/2104.02628
- Code: https://github.com/JingZhang617/Joint_COD_SOD

Deep RGB-D Saliency Detection with Depth-Sensitive Attention and Automatic Multi-Modal Fusion

- Paper (Oral): https://arxiv.org/abs/2103.11832
- Code: https://github.com/sunpeng1996/DSA2F

Camouflaged Object Detection

===============================================================================================

Uncertainty-aware Joint Salient Object and Camouflaged Object Detection

- Paper: https://arxiv.org/abs/2104.02628
- Code: https://github.com/JingZhang617/Joint_COD_SOD

Co-Salient Object Detection

===============================================================================================

Group Collaborative Learning for Co-Salient Object Detection

- Paper: https://arxiv.org/abs/2104.01108
- Code: https://github.com/fanq15/GCoNet

Image Matting
=================================================================================

Semantic Image Matting

- Paper: https://arxiv.org/abs/2104.08201
- Code: https://github.com/nowsyn/SIM
- Dataset: https://github.com/nowsyn/SIM

Person Re-identification

==========================================================================================

Generalizable Person Re-identification with Relevance-aware Mixture of Experts

- Paper: https://arxiv.org/abs/2105.09156
- Code: None

Unsupervised Multi-Source Domain Adaptation for Person Re-Identification

- Paper: https://arxiv.org/abs/2104.12961
- Code: None

Combined Depth Space based Architecture Search For Person Re-identification

- Paper: https://arxiv.org/abs/2104.04163
- Code: None

Person Search
==============================================================================

Anchor-Free Person Search

- Paper: https://arxiv.org/abs/2103.11617
- Code: https://github.com/daodaofr/AlignPS
- Interpretation: The First Anchor-Free Person Search Framework | CVPR 2021

Video Understanding / Action Recognition

=========================================================================================

Temporal-Relational CrossTransformers for Few-Shot Action Recognition

- Paper: https://arxiv.org/abs/2101.06184
- Code: https://github.com/tobyperrett/trx

FrameExit: Conditional Early Exiting for Efficient Video Recognition

- Paper (Oral): https://arxiv.org/abs/2104.13400
- Code: None

No frame left behind: Full Video Action Recognition

- Paper: https://arxiv.org/abs/2103.15395
- Code: None

Learning Salient Boundary Feature for Anchor-free Temporal Action Localization

- Paper: https://arxiv.org/abs/2103.13137
- Code: None

Temporal Context Aggregation Network for Temporal Action Proposal Refinement

- Paper: https://arxiv.org/abs/2103.13141
- Code: None
- Interpretation: CVPR 2021 | TCANet: The Strongest Temporal Action Proposal Refinement Network

ACTION-Net: Multipath Excitation for Action Recognition

- Paper: https://arxiv.org/abs/2103.07372
- Code: https://github.com/V-Sense/ACTION-Net

Removing the Background by Adding the Background: Towards Background Robust Self-supervised Video Representation Learning

- Homepage: https://fingerrec.github.io/index_files/jinpeng/papers/CVPR2021/project_website.html
- Paper: https://arxiv.org/abs/2009.05769
- Code: https://github.com/FingerRec/BE

TDN: Temporal Difference Networks for Efficient Action Recognition

- Paper: https://arxiv.org/abs/2012.10071
- Code: https://github.com/MCG-NJU/TDN

Face Recognition
=================================================================================

A 3D GAN for Improved Large-pose Facial Recognition

- Paper: https://arxiv.org/abs/2012.10545
- Code: None

MagFace: A Universal Representation for Face Recognition and Quality Assessment

- Paper (Oral): https://arxiv.org/abs/2103.06627
- Code: https://github.com/IrvingMeng/MagFace

WebFace260M: A Benchmark Unveiling the Power of Million-Scale Deep Face Recognition

- Homepage: https://www.face-benchmark.org/
- Paper: https://arxiv.org/abs/2103.04098
- Dataset: https://www.face-benchmark.org/

When Age-Invariant Face Recognition Meets Face Age Synthesis: A Multi-Task Learning Framework

- Paper (Oral): https://arxiv.org/abs/2103.01520
- Code: https://github.com/Hzzone/MTLFace
- Dataset: https://github.com/Hzzone/MTLFace

Face Detection
===============================================================================

HLA-Face: Joint High-Low Adaptation for Low Light Face Detection

- Homepage: https://daooshee.github.io/HLA-Face-Website/
- Paper: https://arxiv.org/abs/2104.01984
- Code: https://github.com/daooshee/HLA-Face-Code

CRFace: Confidence Ranker for Model-Agnostic Face Detection Refinement

- Paper: https://arxiv.org/abs/2103.07017
- Code: None

Face Anti-Spoofing
=====================================================================================

Cross Modal Focal Loss for RGBD Face Anti-Spoofing

- Paper: https://arxiv.org/abs/2103.00948
- Code: None

Deepfake Detection

=========================================================================================

Spatial-Phase Shallow Learning: Rethinking Face Forgery Detection in Frequency Domain

- Paper: https://arxiv.org/abs/2103.01856
- Code: None

Multi-attentional Deepfake Detection

- Paper: https://arxiv.org/abs/2103.02406
- Code: None

Face Aging and Age Estimation
=================================================================================

Continuous Face Aging via Self-estimated Residual Age Embedding

- Paper: https://arxiv.org/abs/2105.00020
- Code: None

PML: Progressive Margin Loss for Long-tailed Age Classification

- Paper: https://arxiv.org/abs/2103.02140
- Code: None

Facial Expression Recognition

================================================================================================

Affective Processes: stochastic modelling of temporal context for emotion and facial expression recognition

- Paper: https://arxiv.org/abs/2103.13372
- Code: None

Deepfake Defense
====================================================================

MagDR: Mask-guided Detection and Reconstruction for Defending Deepfakes

- Paper: https://arxiv.org/abs/2103.14211
- Code: None

Human Parsing
==============================================================================

Differentiable Multi-Granularity Human Representation Learning for Instance-Aware Human Semantic Parsing

- Paper: https://arxiv.org/abs/2103.04570
- Code: https://github.com/tfzhou/MG-HumanParsing

2D/3D Human Pose Estimation

===================================================================================================

ViPNAS: Efficient Video Pose Estimation via Neural Architecture Search

- Paper: https://arxiv.org/abs/2105.10154
- Code: None

When Human Pose Estimation Meets Robustness: Adversarial Algorithms and Benchmarks

- Paper: https://arxiv.org/abs/2105.06152
- Code: None

Pose Recognition with Cascade Transformers

- Paper: https://arxiv.org/abs/2104.06976
- Code: https://github.com/mlpc-ucsd/PRTR

DCPose: Deep Dual Consecutive Network for Human Pose Estimation

- Paper: https://arxiv.org/abs/2103.07254
- Code: https://github.com/Pose-Group/DCPose

End-to-End Human Pose and Mesh Reconstruction with Transformers

- Paper: https://arxiv.org/abs/2012.09760
- Code: https://github.com/microsoft/MeshTransformer

PoseAug: A Differentiable Pose Augmentation Framework for 3D Human Pose Estimation

- Paper (Oral): https://arxiv.org/abs/2105.02465
- Code: https://github.com/jfzhang95/PoseAug

Camera-Space Hand Mesh Recovery via Semantic Aggregation and Adaptive 2D-1D Registration

- Paper: https://arxiv.org/abs/2103.02845
- Code: https://github.com/SeanChenxy/HandMesh

Monocular 3D Multi-Person Pose Estimation by Integrating Top-Down and Bottom-Up Networks

- Paper: https://arxiv.org/abs/2104.01797
- Code: https://github.com/3dpose/3D-Multi-Person-Pose

HybrIK: A Hybrid Analytical-Neural Inverse Kinematics Solution for 3D Human Pose and Shape Estimation

- Homepage: https://jeffli.site/HybrIK/
- Paper: https://arxiv.org/abs/2011.14672
- Code: https://github.com/Jeff-sjtu/HybrIK

Animal Pose Estimation

=========================================================================================

From Synthetic to Real: Unsupervised Domain Adaptation for Animal Pose Estimation

- Paper: https://arxiv.org/abs/2103.14843
- Code: None

Hand-Object Pose Estimation
=======================================================================================

Semi-Supervised 3D Hand-Object Poses Estimation with Interactions in Time

- Homepage: https://stevenlsw.github.io/Semi-Hand-Object/
- Paper: https://arxiv.org/abs/2106.05266
- Code: https://github.com/stevenlsw/Semi-Hand-Object

Human Volumetric Capture
===================================================================================

POSEFusion: Pose-guided Selective Fusion for Single-view Human Volumetric Capture

- Homepage: http://www.liuyebin.com/posefusion/posefusion.html
- Paper (Oral): https://arxiv.org/abs/2103.15331
- Code: None

Scene Text Detection
=======================================================================================

Fourier Contour Embedding for Arbitrary-Shaped Text Detection

- Paper: https://arxiv.org/abs/2104.10442
- Code: None

Scene Text Recognition

=========================================================================================

Read Like Humans: Autonomous, Bidirectional and Iterative Language Modeling for Scene Text Recognition

- Paper: https://arxiv.org/abs/2103.06495
- Code: https://github.com/FangShancheng/ABINet

Image Compression
===============================================================

Checkerboard Context Model for Efficient Learned Image Compression

- Paper: https://arxiv.org/abs/2103.15306
- Code: None

Slimmable Compressive Autoencoders for Practical Neural Image Compression

- Paper: https://arxiv.org/abs/2103.15726
- Code: None

Attention-guided Image Compression by Deep Reconstruction of Compressive Sensed Saliency Skeleton

- Paper: https://arxiv.org/abs/2103.15368
- Code: None

Model Compression/Pruning/Quantization
=====================================================================

Teachers Do More Than Teach: Compressing Image-to-Image Models

- Paper: https://arxiv.org/abs/2103.03467
- Code: https://github.com/snap-research/CAT

Dynamic Slimmable Network

- Paper: https://arxiv.org/abs/2103.13258
- Code: https://github.com/changlin31/DS-Net

Network Quantization with Element-wise Gradient Scaling

- Paper: https://arxiv.org/abs/2104.00903
- Code: None

Zero-shot Adversarial Quantization

- Paper (Oral): https://arxiv.org/abs/2103.15263
- Code: https://git.io/Jqc0y

Learnable Companding Quantization for Accurate Low-bit Neural Networks

- Paper: https://arxiv.org/abs/2103.07156
- Code: None

Knowledge Distillation
=======================================================================================

Distilling Knowledge via Knowledge Review

- Paper: https://arxiv.org/abs/2104.09044
- Code: https://github.com/Jia-Research-Lab/ReviewKD

Distilling Object Detectors via Decoupled Features

- Paper: https://arxiv.org/abs/2103.14475
- Code: https://github.com/ggjy/DeFeat.pytorch

Super-Resolution
=================================================================================

Image Super-Resolution with Non-Local Sparse Attention

- Paper: https://openaccess.thecvf.com/content/CVPR2021/papers/Mei_Image_Super-Resolution_With_Non-Local_Sparse_Attention_CVPR_2021_paper.pdf
- Code: https://github.com/HarukiYqM/Non-Local-Sparse-Attention

Towards Fast and Accurate Real-World Depth Super-Resolution: Benchmark Dataset and Baseline

- Homepage: http://mepro.bjtu.edu.cn/resource.html
- Paper: https://arxiv.org/abs/2104.06174
- Code: None

ClassSR: A General Framework to Accelerate Super-Resolution Networks by Data Characteristic

- Paper: https://arxiv.org/abs/2103.04039
- Code: https://github.com/Xiangtaokong/ClassSR

AdderSR: Towards Energy Efficient Image Super-Resolution

- Paper: https://arxiv.org/abs/2009.08891
- Code: None

Dehazing and Video Super-Resolution
=======================================================================

Contrastive Learning for Compact Single Image Dehazing

- Paper: https://arxiv.org/abs/2104.09367
- Code: https://github.com/GlassyWu/AECR-Net

Temporal Modulation Network for Controllable Space-Time Video Super-Resolution

- Paper: None
- Code: https://github.com/CS-GangXu/TMNet

Image Restoration
==================================================================================

Multi-Stage Progressive Image Restoration

- Paper: https://arxiv.org/abs/2102.02808
- Code: https://github.com/swz30/MPRNet

Image Inpainting
=================================================================================

PD-GAN: Probabilistic Diverse GAN for Image Inpainting

- Paper: https://arxiv.org/abs/2105.02201
- Code: https://github.com/KumapowerLIU/PD-GAN

TransFill: Reference-guided Image Inpainting by Merging Multiple Color and Spatial Transformations

- Homepage: https://yzhouas.github.io/projects/TransFill/index.html
- Paper: https://arxiv.org/abs/2103.15982
- Code: None

Image Editing

==============================================================================

StyleMapGAN: Exploiting Spatial Dimensions of Latent in GAN for Real-time Image Editing

-

Paper: https://arxiv.org/abs/2104.14754

-

Code: https://github.com/naver-ai/StyleMapGAN

-

Demo Video: https://youtu.be/qCapNyRA_Ng

High-Fidelity and Arbitrary Face Editing

-

Paper: https://arxiv.org/abs/2103.15814

-

Code: None

Anycost GANs for Interactive Image Synthesis and Editing

-

Paper: https://arxiv.org/abs/2103.03243

-

Code: https://github.com/mit-han-lab/anycost-gan

PISE: Person Image Synthesis and Editing with Decoupled GAN

-

Paper: https://arxiv.org/abs/2103.04023

-

Code: https://github.com/Zhangjinso/PISE

DeFLOCNet: Deep Image Editing via Flexible Low-level Controls

-

Paper: http://raywzy.com/

-

Code: http://raywzy.com/

Exploiting Spatial Dimensions of Latent in GAN for Real-time Image Editing

-

Paper: None

-

Code: None

Image Captioning

=================================================================================

Towards Accurate Text-based Image Captioning with Content Diversity Exploration

-

Paper: https://arxiv.org/abs/2105.03236

-

Code: None

Font Generation

================================================================================

DG-Font: Deformable Generative Networks for Unsupervised Font Generation

-

Paper: https://arxiv.org/abs/2104.03064

-

Code: https://github.com/ecnuycxie/DG-Font

Image Matching

==============================================================================

LoFTR: Detector-Free Local Feature Matching with Transformers

-

Homepage: https://zju3dv.github.io/loftr/

-

Paper: https://arxiv.org/abs/2104.00680

-

Code: https://github.com/zju3dv/LoFTR

Convolutional Hough Matching Networks

-

Homepage: http://cvlab.postech.ac.kr/research/CHM/

-

Paper(Oral): https://arxiv.org/abs/2103.16831

-

Code: None

Image Blending

===============================================================================

Bridging the Visual Gap: Wide-Range Image Blending

-

Paper: https://arxiv.org/abs/2103.15149

-

Code: https://github.com/julia0607/Wide-Range-Image-Blending

Reflection Removal

===================================================================================

Robust Reflection Removal with Reflection-free Flash-only Cues

-

Paper: https://arxiv.org/abs/2103.04273

-

Code: https://github.com/ChenyangLEI/flash-reflection-removal

3D Point Cloud Classification

=================================================================================================

Equivariant Point Network for 3D Point Cloud Analysis

-

Paper: https://arxiv.org/abs/2103.14147

-

Code: None

PAConv: Position Adaptive Convolution with Dynamic Kernel Assembling on Point Clouds

-

Paper: https://arxiv.org/abs/2103.14635

-

Code: https://github.com/CVMI-Lab/PAConv

3D Object Detection

======================================================================================

3D-MAN: 3D Multi-frame Attention Network for Object Detection

-

Paper: https://arxiv.org/abs/2103.16054

-

Code: None

Back-tracing Representative Points for Voting-based 3D Object Detection in Point Clouds

-

Paper: https://arxiv.org/abs/2104.06114

-

Code: https://github.com/cheng052/BRNet

HVPR: Hybrid Voxel-Point Representation for Single-stage 3D Object Detection

-

Homepage: https://cvlab.yonsei.ac.kr/projects/HVPR/

-

Paper: https://arxiv.org/abs/2104.00902

-

Code: https://github.com/cvlab-yonsei/HVPR

LiDAR R-CNN: An Efficient and Universal 3D Object Detector

-

Paper: https://arxiv.org/abs/2103.15297

-

Code: https://github.com/tusimple/LiDAR_RCNN

M3DSSD: Monocular 3D Single Stage Object Detector

-

Paper: https://arxiv.org/abs/2103.13164

-

Code: https://github.com/mumianyuxin/M3DSSD

SE-SSD: Self-Ensembling Single-Stage Object Detector From Point Cloud

-

Paper: None

-

Code: https://github.com/Vegeta2020/SE-SSD

Center-based 3D Object Detection and Tracking

-

Paper: https://arxiv.org/abs/2006.11275

-

Code: https://github.com/tianweiy/CenterPoint

Categorical Depth Distribution Network for Monocular 3D Object Detection

-

Paper: https://arxiv.org/abs/2103.01100

-

Code: None

3D Semantic Segmentation

===========================================================================================

Bidirectional Projection Network for Cross Dimension Scene Understanding

-

Paper(Oral): https://arxiv.org/abs/2103.14326

-

Code: https://github.com/wbhu/BPNet

Semantic Segmentation for Real Point Cloud Scenes via Bilateral Augmentation and Adaptive Fusion

-

Paper: https://arxiv.org/abs/2103.07074

-

Code: https://github.com/ShiQiu0419/BAAF-Net

Cylindrical and Asymmetrical 3D Convolution Networks for LiDAR Segmentation

-

Paper: https://arxiv.org/abs/2011.10033

-

Code: https://github.com/xinge008/Cylinder3D

Towards Semantic Segmentation of Urban-Scale 3D Point Clouds: A Dataset, Benchmarks and Challenges

-

Homepage: https://github.com/QingyongHu/SensatUrban

-

Paper: http://arxiv.org/abs/2009.03137

-

Code: https://github.com/QingyongHu/SensatUrban

-

Dataset: https://github.com/QingyongHu/SensatUrban

3D Panoptic Segmentation

===========================================================================================

Panoptic-PolarNet: Proposal-free LiDAR Point Cloud Panoptic Segmentation

-

Paper: https://arxiv.org/abs/2103.14962

-

Code: https://github.com/edwardzhou130/Panoptic-PolarNet

3D Object Tracking

======================================================================================

Center-based 3D Object Detection and Tracking

-

Paper: https://arxiv.org/abs/2006.11275

-

Code: https://github.com/tianweiy/CenterPoint

3D Point Cloud Registration

==============================================================================================

ReAgent: Point Cloud Registration using Imitation and Reinforcement Learning

-

Paper: https://arxiv.org/abs/2103.15231

-

Code: None

PointDSC: Robust Point Cloud Registration using Deep Spatial Consistency

-

Paper: https://arxiv.org/abs/2103.05465

-

Code: https://github.com/XuyangBai/PointDSC

PREDATOR: Registration of 3D Point Clouds with Low Overlap

-

Paper: https://arxiv.org/abs/2011.13005

-

Code: https://github.com/ShengyuH/OverlapPredator

3D Point Cloud Completion

============================================================================================

Unsupervised 3D Shape Completion through GAN Inversion

-

Homepage: https://junzhezhang.github.io/projects/ShapeInversion/

-

Paper: https://arxiv.org/abs/2104.13366

-

Code: https://github.com/junzhezhang/shape-inversion

Variational Relational Point Completion Network

-

Homepage: https://paul007pl.github.io/projects/VRCNet

-

Paper: https://arxiv.org/abs/2104.10154

-

Code: https://github.com/paul007pl/VRCNet

Style-based Point Generator with Adversarial Rendering for Point Cloud Completion

-

Homepage: https://alphapav.github.io/SpareNet/

-

Paper: https://arxiv.org/abs/2103.02535

-

Code: https://github.com/microsoft/SpareNet

3D Reconstruction

==================================================================================

Learning to Aggregate and Personalize 3D Face from In-the-Wild Photo Collection

-

Paper: http://arxiv.org/abs/2106.07852

-

Code: https://github.com/TencentYoutuResearch/3DFaceReconstruction-LAP

Fully Understanding Generic Objects: Modeling, Segmentation, and Reconstruction

-

Paper: https://arxiv.org/abs/2104.00858

-

Code: None

NeuralRecon: Real-Time Coherent 3D Reconstruction from Monocular Video

-

Homepage: https://zju3dv.github.io/neuralrecon/

-

Paper(Oral): https://arxiv.org/abs/2104.00681

-

Code: https://github.com/zju3dv/NeuralRecon

6D Pose Estimation

=====================================================================================

FS-Net: Fast Shape-based Network for Category-Level 6D Object Pose Estimation with Decoupled Rotation Mechanism

-

Paper(Oral): https://arxiv.org/abs/2103.07054

-

Code: https://github.com/DC1991/FS-Net

GDR-Net: Geometry-Guided Direct Regression Network for Monocular 6D Object Pose Estimation

-

Paper: http://arxiv.org/abs/2102.12145

-

Code: https://git.io/GDR-Net

FFB6D: A Full Flow Bidirectional Fusion Network for 6D Pose Estimation

-

Paper: https://arxiv.org/abs/2103.02242

-

Code: https://github.com/ethnhe/FFB6D

Camera Pose Estimation

=================================================================

Back to the Feature: Learning Robust Camera Localization from Pixels to Pose

-

Paper: https://arxiv.org/abs/2103.09213

-

Code: https://github.com/cvg/pixloc

Depth Estimation

=================================================================================

S2R-DepthNet: Learning a Generalizable Depth-specific Structural Representation

-

Paper(Oral): https://arxiv.org/abs/2104.00877

-

Code: None

Beyond Image to Depth: Improving Depth Prediction using Echoes

-

Homepage: https://krantiparida.github.io/projects/bimgdepth.html

-

Paper: https://arxiv.org/abs/2103.08468

-

Code: https://github.com/krantiparida/beyond-image-to-depth

S3: Learnable Sparse Signal Superdensity for Guided Depth Estimation

-

Paper: https://arxiv.org/abs/2103.02396

-

Code: None

Depth from Camera Motion and Object Detection

-

Paper: https://arxiv.org/abs/2103.01468

-

Code: https://github.com/griffbr/ODMD

-

Dataset: https://github.com/griffbr/ODMD

Stereo Matching

================================================================================

A Decomposition Model for Stereo Matching

-

Paper: https://arxiv.org/abs/2104.07516

-

Code: None

Optical Flow and Scene Flow Estimation

================================================================================

Self-Supervised Multi-Frame Monocular Scene Flow

-

Paper: https://arxiv.org/abs/2105.02216

-

Code: https://github.com/visinf/multi-mono-sf

RAFT-3D: Scene Flow using Rigid-Motion Embeddings

-

Paper: https://arxiv.org/abs/2012.00726v1

-

Code: None

Learning Optical Flow From Still Images

-

Homepage: https://mattpoggi.github.io/projects/cvpr2021aleotti/

-

Paper: https://mattpoggi.github.io/assets/papers/aleotti2021cvpr.pdf

-

Code: https://github.com/mattpoggi/depthstillation

FESTA: Flow Estimation via Spatial-Temporal Attention for Scene Point Clouds

-

Paper: https://arxiv.org/abs/2104.00798

-

Code: None

Lane Detection

================================================================================

Focus on Local: Detecting Lane Marker from Bottom Up via Key Point

-

Paper: https://arxiv.org/abs/2105.13680

-

Code: None

Keep your Eyes on the Lane: Real-time Attention-guided Lane Detection

-

Paper: https://arxiv.org/abs/2010.12035

-

Code: https://github.com/lucastabelini/LaneATT

Trajectory Prediction

======================================================================================

Divide-and-Conquer for Lane-Aware Diverse Trajectory Prediction

-

Paper(Oral): https://arxiv.org/abs/2104.08277

-

Code: None

Crowd Counting

===============================================================================

Detection, Tracking, and Counting Meets Drones in Crowds: A Benchmark

-

Paper: https://arxiv.org/abs/2105.02440

-

Code: https://github.com/VisDrone/DroneCrowd

-

Dataset: https://github.com/VisDrone/DroneCrowd

Adversarial Examples

=====================================================================================

Enhancing the Transferability of Adversarial Attacks through Variance Tuning

-

Paper: https://arxiv.org/abs/2103.15571

-

Code: https://github.com/JHL-HUST/VT

LiBRe: A Practical Bayesian Approach to Adversarial Detection

-

Paper: https://arxiv.org/abs/2103.14835

-

Code: None

Natural Adversarial Examples

-

Paper: https://arxiv.org/abs/1907.07174

-

Code: https://github.com/hendrycks/natural-adv-examples

Image Retrieval

================================================================================

StyleMeUp: Towards Style-Agnostic Sketch-Based Image Retrieval

-

Paper: https://arxiv.org/abs/2103.15706

-

Code: None

QAIR: Practical Query-efficient Black-Box Attacks for Image Retrieval

-

Paper: https://arxiv.org/abs/2103.02927

-

Code: None

Video Retrieval

================================================================================

On Semantic Similarity in Video Retrieval

-

Paper: https://arxiv.org/abs/2103.10095

-

Homepage: https://mwray.github.io/SSVR/

-

Code: https://github.com/mwray/Semantic-Video-Retrieval

Cross-Modal Retrieval

=======================================================================================

Cross-Modal Center Loss for 3D Cross-Modal Retrieval

-

Paper: https://arxiv.org/abs/2008.03561

-

Code: https://github.com/LongLong-Jing/Cross-Modal-Center-Loss

Thinking Fast and Slow: Efficient Text-to-Visual Retrieval with Transformers

-

Paper: https://arxiv.org/abs/2103.16553

-

Code: None

Revamping cross-modal recipe retrieval with hierarchical Transformers and self-supervised learning

-

Paper: https://www.amazon.science/publications/revamping-cross-modal-recipe-retrieval-with-hierarchical-transformers-and-self-supervised-learning

-

Code: https://github.com/amzn/image-to-recipe-transformers

Zero-Shot Learning

=============================================================================

Counterfactual Zero-Shot and Open-Set Visual Recognition

-

Paper: https://arxiv.org/abs/2103.00887

-

Code: https://github.com/yue-zhongqi/gcm-cf

Federated Learning

===================================================================================

FedDG: Federated Domain Generalization on Medical Image Segmentation via Episodic Learning in Continuous Frequency Space

-

Paper: https://arxiv.org/abs/2103.06030

-

Code: https://github.com/liuquande/FedDG-ELCFS

Video Frame Interpolation

==========================================================================================

CDFI: Compression-Driven Network Design for Frame Interpolation

-

Paper: None

-

Code: https://github.com/tding1/CDFI

FLAVR: Flow-Agnostic Video Representations for Fast Frame Interpolation

-

Homepage: https://tarun005.github.io/FLAVR/

-

Paper: https://arxiv.org/abs/2012.08512

-

Code: https://github.com/tarun005/FLAVR

Visual Reasoning

=================================================================================

Transformation Driven Visual Reasoning

-

Homepage: https://hongxin2019.github.io/TVR/

-

Paper: https://arxiv.org/abs/2011.13160

-

Code: https://github.com/hughplay/TVR

Image Synthesis

================================================================================

GIRAFFE: Representing Scenes as Compositional Generative Neural Feature Fields

-

Homepage: https://m-niemeyer.github.io/project-pages/giraffe/index.html

-

Paper(Oral): https://arxiv.org/abs/2011.12100

-

Code: https://github.com/autonomousvision/giraffe

-

Demo: http://www.youtube.com/watch?v=fIaDXC-qRSg&vq=hd1080&autoplay=1

Taming Transformers for High-Resolution Image Synthesis

-

Homepage: https://compvis.github.io/taming-transformers/

-

Paper(Oral): https://arxiv.org/abs/2012.09841

-

Code: https://github.com/CompVis/taming-transformers

View Synthesis

===============================================================================

Stereo Radiance Fields (SRF): Learning View Synthesis for Sparse Views of Novel Scenes

-

Homepage: https://virtualhumans.mpi-inf.mpg.de/srf/

-

Paper: https://arxiv.org/abs/2104.06935

Self-Supervised Visibility Learning for Novel View Synthesis

-

Paper: https://arxiv.org/abs/2103.15407

-

Code: None

NeX: Real-time View Synthesis with Neural Basis Expansion

-

Homepage: https://nex-mpi.github.io/

-

Paper(Oral): https://arxiv.org/abs/2103.05606

Style Transfer

===============================================================================

Drafting and Revision: Laplacian Pyramid Network for Fast High-Quality Artistic Style Transfer

-

Paper: https://arxiv.org/abs/2104.05376

-

Code: https://github.com/PaddlePaddle/PaddleGAN/

Layout Generation

==================================================================================

LayoutTransformer: Scene Layout Generation With Conceptual and Spatial Diversity

-

Paper: None

-

Code: None

Variational Transformer Networks for Layout Generation

-

Paper: https://arxiv.org/abs/2104.02416

-

Code: None

Domain Generalization

================================================================================

Generalizable Person Re-identification with Relevance-aware Mixture of Experts

-

Paper: https://arxiv.org/abs/2105.09156

-

Code: None

RobustNet: Improving Domain Generalization in Urban-Scene Segmentation via Instance Selective Whitening

-

Paper: https://arxiv.org/abs/2103.15597

-

Code: https://github.com/shachoi/RobustNet

Adaptive Methods for Real-World Domain Generalization

-

Paper: https://arxiv.org/abs/2103.15796

-

Code: None

FSDR: Frequency Space Domain Randomization for Domain Generalization

-

Paper: https://arxiv.org/abs/2103.02370

-

Code: None

Domain Adaptation

============================================================================

Curriculum Graph Co-Teaching for Multi-Target Domain Adaptation

-

Paper: https://arxiv.org/abs/2104.00808

-

Code: None

Domain Consensus Clustering for Universal Domain Adaptation

-

Paper: http://reler.net/papers/guangrui_cvpr2021.pdf

-

Code: https://github.com/Solacex/Domain-Consensus-Clustering

Open-Set Recognition

===================================================================

Towards Open World Object Detection

-

Paper(Oral): https://arxiv.org/abs/2103.02603

-

Code: https://github.com/JosephKJ/OWOD

Exemplar-Based Open-Set Panoptic Segmentation Network

-

Homepage: https://cv.snu.ac.kr/research/EOPSN/

-

Paper: https://arxiv.org/abs/2105.08336

-

Code: https://github.com/jd730/EOPSN

Learning Placeholders for Open-Set Recognition

-

Paper(Oral): https://arxiv.org/abs/2103.15086

-

Code: None

Adversarial Attack

=============================================================================

IoU Attack: Towards Temporally Coherent Black-Box Adversarial Attack for Visual Object Tracking

-

Paper: https://arxiv.org/abs/2103.14938

-

Code: https://github.com/VISION-SJTU/IoUattack

Human-Object Interaction (HOI) Detection

=========================================================================

HOTR: End-to-End Human-Object Interaction Detection with Transformers

-

Paper: https://arxiv.org/abs/2104.13682

-

Code: None

Query-Based Pairwise Human-Object Interaction Detection with Image-Wide Contextual Information

-

Paper: https://arxiv.org/abs/2103.05399

-

Code: https://github.com/hitachi-rd-cv/qpic

Reformulating HOI Detection as Adaptive Set Prediction

-

Paper: https://arxiv.org/abs/2103.05983

-

Code: https://github.com/yoyomimi/AS-Net

Detecting Human-Object Interaction via Fabricated Compositional Learning

-

Paper: https://arxiv.org/abs/2103.08214

-

Code: https://github.com/zhihou7/FCL

End-to-End Human Object Interaction Detection with HOI Transformer

-

Paper: https://arxiv.org/abs/2103.04503

-

Code: https://github.com/bbepoch/HoiTransformer

Shadow Removal

===============================================================================

Auto-Exposure Fusion for Single-Image Shadow Removal

-

Paper: https://arxiv.org/abs/2103.01255

-

Code: https://github.com/tsingqguo/exposure-fusion-shadow-removal

Virtual Try-On

===============================================================================

Parser-Free Virtual Try-on via Distilling Appearance Flows

-

Paper: https://arxiv.org/abs/2103.04559

-

Code: https://github.com/geyuying/PF-AFN

Learning with Label Noise

============================================================================

A Second-Order Approach to Learning with Instance-Dependent Label Noise

-

Paper(Oral): https://arxiv.org/abs/2012.11854

-

Code: https://github.com/UCSC-REAL/CAL

Video Stabilization

====================================================================================

Real-Time Selfie Video Stabilization

-

Paper: https://openaccess.thecvf.com/content/CVPR2021/papers/Yu_Real-Time_Selfie_Video_Stabilization_CVPR_2021_paper.pdf

-

Code: https://github.com/jiy173/selfievideostabilization

Datasets

========================================================================

Tracking Pedestrian Heads in Dense Crowd

-

Homepage: https://project.inria.fr/crowdscience/project/dense-crowd-head-tracking/

-

Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Sundararaman_Tracking_Pedestrian_Heads_in_Dense_Crowd_CVPR_2021_paper.html

-

Code1: https://github.com/Sentient07/HeadHunter

-

Code2: https://github.com/Sentient07/HeadHunter-T

-

Dataset: https://project.inria.fr/crowdscience/project/dense-crowd-head-tracking/

Part-aware Panoptic Segmentation

-

Paper: https://arxiv.org/abs/2106.06351

-

Code: https://github.com/tue-mps/panoptic_parts

-

Dataset: https://github.com/tue-mps/panoptic_parts

Learning High Fidelity Depths of Dressed Humans by Watching Social Media Dance Videos

-

Homepage: https://www.yasamin.page/hdnet_tiktok

-

Paper(Oral): https://arxiv.org/abs/2103.03319

-

Code: https://github.com/yasaminjafarian/HDNet_TikTok

-

Dataset: https://www.yasamin.page/hdnet_tiktok#h.jr9ifesshn7v

High-Resolution Photorealistic Image Translation in Real-Time: A Laplacian Pyramid Translation Network

-

Paper: https://arxiv.org/abs/2105.09188

-

Code: https://github.com/csjliang/LPTN

-

Dataset: https://github.com/csjliang/LPTN

Detection, Tracking, and Counting Meets Drones in Crowds: A Benchmark

-

Paper: https://arxiv.org/abs/2105.02440

-

Code: https://github.com/VisDrone/DroneCrowd

-

Dataset: https://github.com/VisDrone/DroneCrowd

Towards Good Practices for Efficiently Annotating Large-Scale Image Classification Datasets

-

Homepage: https://fidler-lab.github.io/efficient-annotation-cookbook/

-

Paper(Oral): https://arxiv.org/abs/2104.12690

-

Code: https://github.com/fidler-lab/efficient-annotation-cookbook

ViP-DeepLab: Learning Visual Perception with Depth-aware Video Panoptic Segmentation

-

Paper: https://arxiv.org/abs/2012.05258

-

Code: https://github.com/joe-siyuan-qiao/ViP-DeepLab

-

Dataset: https://github.com/joe-siyuan-qiao/ViP-DeepLab

Learning To Count Everything

-

Paper: https://arxiv.org/abs/2104.08391

-

Code: https://github.com/cvlab-stonybrook/LearningToCountEverything

-

Dataset: https://github.com/cvlab-stonybrook/LearningToCountEverything

Semantic Image Matting

-

Paper: https://arxiv.org/abs/2104.08201

-

Code: https://github.com/nowsyn/SIM

-

Dataset: https://github.com/nowsyn/SIM

Towards Fast and Accurate Real-World Depth Super-Resolution: Benchmark Dataset and Baseline

-

Homepage: http://mepro.bjtu.edu.cn/resource.html

-

Paper: https://arxiv.org/abs/2104.06174

-

Code: None

Visual Semantic Role Labeling for Video Understanding

-

Homepage: https://vidsitu.org/

-

Paper: https://arxiv.org/abs/2104.00990

-

Code: https://github.com/TheShadow29/VidSitu

-

Dataset: https://github.com/TheShadow29/VidSitu

VSPW: A Large-scale Dataset for Video Scene Parsing in the Wild

-

Homepage: https://www.vspwdataset.com/

-

Paper: https://www.vspwdataset.com/CVPR2021__miao.pdf

-

GitHub: https://github.com/sssdddwww2/vspw_dataset_download

Sewer-ML: A Multi-Label Sewer Defect Classification Dataset and Benchmark

-

Homepage: https://vap.aau.dk/sewer-ml/

-

Paper: https://arxiv.org/abs/2103.10619

Sewer-ML: A Multi-Label Sewer Defect Classification Dataset and Benchmark

-

Homepage: https://vap.aau.dk/sewer-ml/

-

Paper: https://arxiv.org/abs/2103.10895

Nutrition5k: Towards Automatic Nutritional Understanding of Generic Food

-

Paper: https://arxiv.org/abs/2103.03375

-

Dataset: None

Towards Semantic Segmentation of Urban-Scale 3D Point Clouds: A Dataset, Benchmarks and Challenges

-

Homepage: https://github.com/QingyongHu/SensatUrban

-

Paper: http://arxiv.org/abs/2009.03137

-

Code: https://github.com/QingyongHu/SensatUrban

-

Dataset: https://github.com/QingyongHu/SensatUrban

When Age-Invariant Face Recognition Meets Face Age Synthesis: A Multi-Task Learning Framework

-

Paper(Oral): https://arxiv.org/abs/2103.01520

-

Code: https://github.com/Hzzone/MTLFace

-

Dataset: https://github.com/Hzzone/MTLFace

Depth from Camera Motion and Object Detection

-

Paper: https://arxiv.org/abs/2103.01468

-

Code: https://github.com/griffbr/ODMD

-

Dataset: https://github.com/griffbr/ODMD

There is More than Meets the Eye: Self-Supervised Multi-Object Detection and Tracking with Sound by Distilling Multimodal Knowledge

-

Homepage: http://rl.uni-freiburg.de/research/multimodal-distill

-

Paper: https://arxiv.org/abs/2103.01353

-

Code: http://rl.uni-freiburg.de/research/multimodal-distill

Scan2Cap: Context-aware Dense Captioning in RGB-D Scans

-

Paper: https://arxiv.org/abs/2012.02206

-

Code: https://github.com/daveredrum/Scan2Cap

-

Dataset: https://github.com/daveredrum/ScanRefer

There is More than Meets the Eye: Self-Supervised Multi-Object Detection and Tracking with Sound by Distilling Multimodal Knowledge

-

Paper: https://arxiv.org/abs/2103.01353

-

Code: http://rl.uni-freiburg.de/research/multimodal-distill

-

Dataset: http://rl.uni-freiburg.de/research/multimodal-distill

Others

=====================================================================

Fast and Accurate Model Scaling

-

Paper: https://openaccess.thecvf.com/content/CVPR2021/html/Dollar_Fast_and_Accurate_Model_Scaling_CVPR_2021_paper.html

-

Code: https://github.com/facebookresearch/pycls

Learning High Fidelity Depths of Dressed Humans by Watching Social Media Dance Videos

-

Homepage: https://www.yasamin.page/hdnet_tiktok

-

Paper(Oral): https://arxiv.org/abs/2103.03319

-

Code: https://github.com/yasaminjafarian/HDNet_TikTok

-

Dataset: https://www.yasamin.page/hdnet_tiktok#h.jr9ifesshn7v

Omnimatte: Associating Objects and Their Effects in Video

-

Homepage: https://omnimatte.github.io/

-

Paper(Oral): https://arxiv.org/abs/2105.06993

-

Code: https://omnimatte.github.io/#code

Towards Good Practices for Efficiently Annotating Large-Scale Image Classification Datasets

-

Homepage: https://fidler-lab.github.io/efficient-annotation-cookbook/

-

Paper(Oral): https://arxiv.org/abs/2104.12690

-

Code: https://github.com/fidler-lab/efficient-annotation-cookbook

Motion Representations for Articulated Animation

-

Paper: https://arxiv.org/abs/2104.11280

-

Code: https://github.com/snap-research/articulated-animation

Deep Lucas-Kanade Homography for Multimodal Image Alignment

-

Paper: https://arxiv.org/abs/2104.11693

-

Code: https://github.com/placeforyiming/CVPR21-Deep-Lucas-Kanade-Homography

Skip-Convolutions for Efficient Video Processing

-

Paper: https://arxiv.org/abs/2104.11487

-

Code: None

KeypointDeformer: Unsupervised 3D Keypoint Discovery for Shape Control

-

Homepage: http://tomasjakab.github.io/KeypointDeformer

-

Paper(Oral): https://arxiv.org/abs/2104.11224

-

Code: https://github.com/tomasjakab/keypoint_deformer/

Learning To Count Everything

-

Paper: https://arxiv.org/abs/2104.08391

-

Code: https://github.com/cvlab-stonybrook/LearningToCountEverything

-

Dataset: https://github.com/cvlab-stonybrook/LearningToCountEverything

SOLD2: Self-supervised Occlusion-aware Line Description and Detection

-

Paper(Oral): https://arxiv.org/abs/2104.03362

-

Code: https://github.com/cvg/SOLD2

Learning Probabilistic Ordinal Embeddings for Uncertainty-Aware Regression

-

Homepage: https://li-wanhua.github.io/POEs/

-

Paper: https://arxiv.org/abs/2103.13629

-

Code: https://github.com/Li-Wanhua/POEs

LEAP: Learning Articulated Occupancy of People

-

Paper: https://arxiv.org/abs/2104.06849

-

Code: None

Visual Semantic Role Labeling for Video Understanding

-

Homepage: https://vidsitu.org/

-

Paper: https://arxiv.org/abs/2104.00990

-

Code: https://github.com/TheShadow29/VidSitu

-

Dataset: https://github.com/TheShadow29/VidSitu

UAV-Human: A Large Benchmark for Human Behavior Understanding with Unmanned Aerial Vehicles

-

Paper: https://arxiv.org/abs/2104.00946

-

Code: https://github.com/SUTDCV/UAV-Human

Video Prediction Recalling Long-term Motion Context via Memory Alignment Learning

-

Paper(Oral): https://arxiv.org/abs/2104.00924

-

Code: None

Fully Understanding Generic Objects: Modeling, Segmentation, and Reconstruction

-

Paper: https://arxiv.org/abs/2104.00858

-

Code: None

Towards High Fidelity Face Relighting with Realistic Shadows

-

Paper: https://arxiv.org/abs/2104.00825

-

Code: None

BRepNet: A topological message passing system for solid models

-

Paper(Oral): https://arxiv.org/abs/2104.00706

-

Code: None

Visually Informed Binaural Audio Generation without Binaural Audios

-

Homepage: https://sheldontsui.github.io/projects/PseudoBinaural

-

Paper: None

-

GitHub: https://github.com/SheldonTsui/PseudoBinaural_CVPR2021

-

Demo: https://www.youtube.com/watch?v=r-uC2MyAWQc

Exploring intermediate representation for monocular vehicle pose estimation

-

Paper: None

-

Code: https://github.com/Nicholasli1995/EgoNet

Tuning IR-cut Filter for Illumination-aware Spectral Reconstruction from RGB

-

Paper(Oral): https://arxiv.org/abs/2103.14708

-

Code: None

Invertible Image Signal Processing

-

Paper: https://arxiv.org/abs/2103.15061

-

Code: https://github.com/yzxing87/Invertible-ISP

Video Rescaling Networks with Joint Optimization Strategies for Downscaling and Upscaling

-

Paper: https://arxiv.org/abs/2103.14858

-

Code: None

SceneGraphFusion: Incremental 3D Scene Graph Prediction from RGB-D Sequences

-

Paper: https://arxiv.org/abs/2103.14898

-

Code: None

Embedding Transfer with Label Relaxation for Improved Metric Learning

-

Paper: https://arxiv.org/abs/2103.14908

-

Code: None

Picasso: A CUDA-based Library for Deep Learning over 3D Meshes

-

Paper: https://arxiv.org/abs/2103.15076

-

Code: https://github.com/hlei-ziyan/Picasso

Meta-Mining Discriminative Samples for Kinship Verification

-

Paper: https://arxiv.org/abs/2103.15108

-

Code: None

Cloud2Curve: Generation and Vectorization of Parametric Sketches

-

Paper: https://arxiv.org/abs/2103.15536

-

Code: None

TrafficQA: A Question Answering Benchmark and an Efficient Network for Video Reasoning over Traffic Events

-

Paper: https://arxiv.org/abs/2103.15538

-

Code: https://github.com/SUTDCV/SUTD-TrafficQA

Abstract Spatial-Temporal Reasoning via Probabilistic Abduction and Execution

-

Homepage: http://wellyzhang.github.io/project/prae.html

-

Paper: https://arxiv.org/abs/2103.14230

-

Code: None

ACRE: Abstract Causal REasoning Beyond Covariation

-

Homepage: http://wellyzhang.github.io/project/acre.html

-

Paper: https://arxiv.org/abs/2103.14232

-

Code: None

Confluent Vessel Trees with Accurate Bifurcations

-

Paper: https://arxiv.org/abs/2103.14268

-

Code: None

Few-Shot Human Motion Transfer by Personalized Geometry and Texture Modeling

-

Paper: https://arxiv.org/abs/2103.14338

-

Code: https://github.com/HuangZhiChao95/FewShotMotionTransfer

Neural Parts: Learning Expressive 3D Shape Abstractions with Invertible Neural Networks

-

Homepage: https://paschalidoud.github.io/neural_parts

-

Paper: None

-

Code: https://github.com/paschalidoud/neural_parts

Knowledge Evolution in Neural Networks

-

Paper(Oral): https://arxiv.org/abs/2103.05152

-

Code: https://github.com/ahmdtaha/knowledge_evolution

Multi-institutional Collaborations for Improving Deep Learning-based Magnetic Resonance Image Reconstruction Using Federated Learning

-

Paper: https://arxiv.org/abs/2103.02148

-

Code: https://github.com/guopengf/FLMRCM

SGP: Self-supervised Geometric Perception

-

Paper(Oral): https://arxiv.org/abs/2103.03114

-

Code: https://github.com/theNded/SGP

Diffusion Probabilistic Models for 3D Point Cloud Generation

-

Paper: https://arxiv.org/abs/2103.01458

-

Code: https://github.com/luost26/diffusion-point-cloud

Scan2Cap: Context-aware Dense Captioning in RGB-D Scans

-

Paper: https://arxiv.org/abs/2012.02206

-

Code: https://github.com/daveredrum/Scan2Cap

-

Dataset: https://github.com/daveredrum/ScanRefer

文末有福利领取哦~

👉一、Python所有方向的学习路线

Python所有方向的技术点做的整理,形成各个领域的知识点汇总,它的用处就在于,你可以按照上面的知识点去找对应的学习资源,保证自己学得较为全面。

👉二、Python必备开发工具

👉三、Python视频合集

观看零基础学习视频,看视频学习是最快捷也是最有效果的方式,跟着视频中老师的思路,从基础到深入,还是很容易入门的。

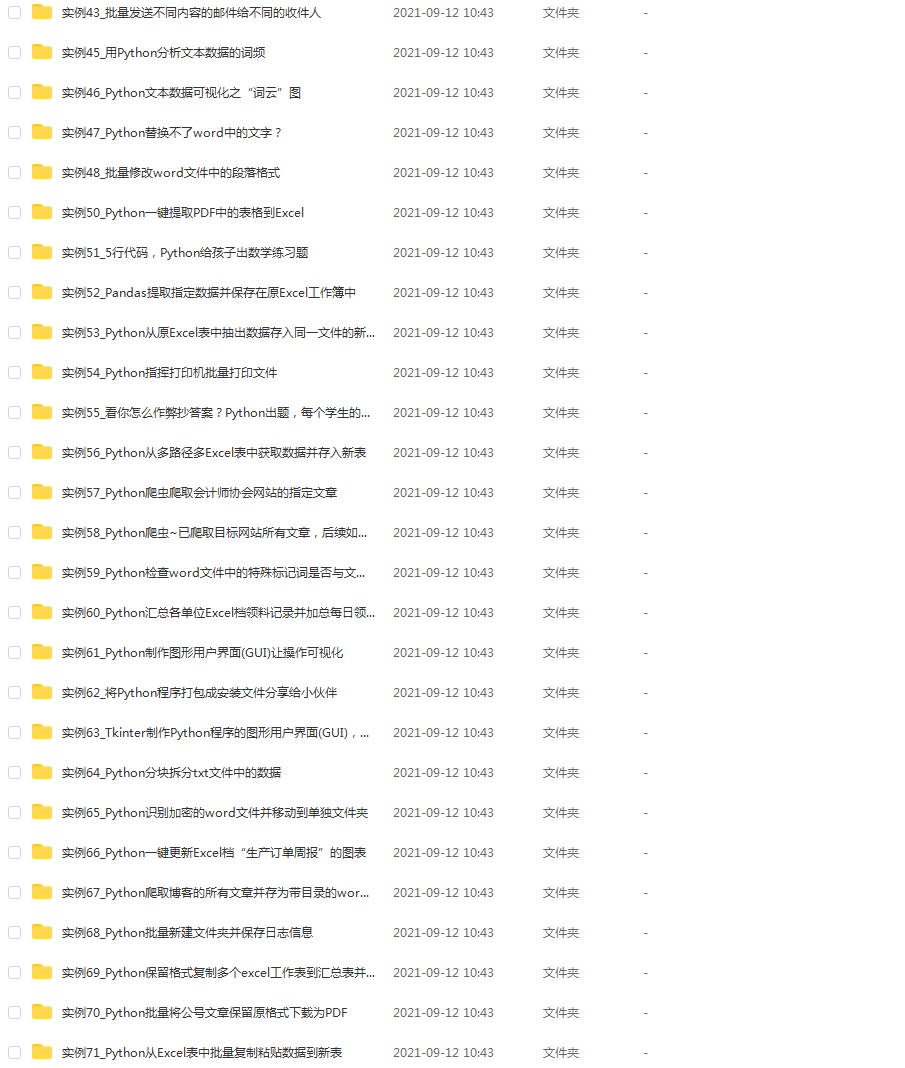

👉 四、实战案例

光学理论是没用的,要学会跟着一起敲,要动手实操,才能将自己的所学运用到实际当中去,这时候可以搞点实战案例来学习。(文末领读者福利)

👉五、Python练习题

检查学习结果。

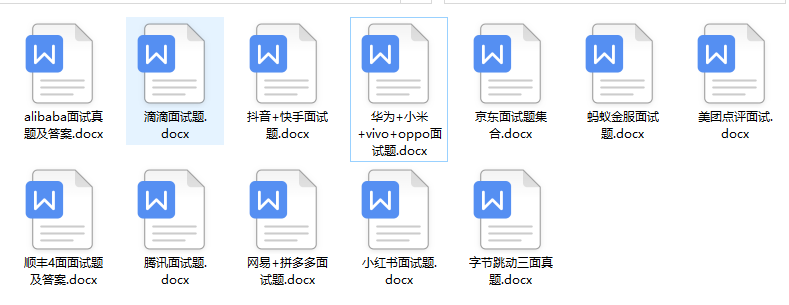

👉六、面试资料

我们学习Python必然是为了找到高薪的工作,下面这些面试题是来自阿里、腾讯、字节等一线互联网大厂最新的面试资料,并且有阿里大佬给出了权威的解答,刷完这一套面试资料相信大家都能找到满意的工作。

👉因篇幅有限,仅展示部分资料,这份完整版的Python全套学习资料已经上传

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

需要这份系统化的资料的朋友,可以添加V获取:vip1024c (备注python)

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!
