等式中的 λ λ λ是梯度惩罚与其他评论家损失的比率,在本这里中设置为10。现在,我们将所有评论家损失和梯度惩罚添加到反向传播并更新权重:

total_loss = loss_real + loss_fake + LAMBDA * grad_loss

gradients = total_tape.gradient(total_loss, self.critic.variables)

self.optimizer_critic.apply_gradients(zip(gradients, self.critic.variables))

这就是需要添加到WGAN中以使其成为WGAN-GP的所有内容。不过,需要删除以下部分:

-

权重裁剪

-

评论家中的批标准化

梯度惩罚是针对每个输入独立地对评论者的梯度范数进行惩罚。但是,批规范化会随着批处理统计信息更改梯度。为避免此问题,批规范化从评论家中删除。

评论家体系结构与WGAN相同,但不包括批规范化:

以下是经过训练的WGAN-GP生成的样本:

它们看起来清晰漂亮,非常类似于Fashion-MNIST数据集中的样本。训练非常稳定,很快就收敛了!

wgan_and_wgan_gp.py

import tensorflow as tf

from tensorflow.keras import layers, Model

from tensorflow.keras.activations import relu

from tensorflow.keras.models import Sequential, load_model

from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping

from tensorflow.keras.losses import BinaryCrossentropy

from tensorflow.keras.optimizers import RMSprop, Adam

from tensorflow.keras.metrics import binary_accuracy

import tensorflow_datasets as tfds

import numpy as np

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings(‘ignore’)

print(“Tensorflow”, tf.version)

ds_train, ds_info = tfds.load(‘fashion_mnist’, split=‘train’,shuffle_files=True,with_info=True)

fig = tfds.show_examples(ds_train, ds_info)

batch_size = 64

image_shape = (32, 32, 1)

def preprocess(features):

image = tf.image.resize(features[‘image’], image_shape[:2])

image = tf.cast(image, tf.float32)

image = (image-127.5)/127.5

return image

ds_train = ds_train.map(preprocess)

ds_train = ds_train.shuffle(ds_info.splits[‘train’].num_examples)

ds_train = ds_train.batch(batch_size, drop_remainder=True).repeat()

train_num = ds_info.splits[‘train’].num_examples

train_steps_per_epoch = round(train_num/batch_size)

print(train_steps_per_epoch)

“”"

WGAN

“”"

class WGAN():

def init(self, input_shape):

self.z_dim = 128

self.input_shape = input_shape

losses

self.loss_critic_real = {}

self.loss_critic_fake = {}

self.loss_critic = {}

self.loss_generator = {}

critic

self.n_critic = 5

self.critic = self.build_critic()

self.critic.trainable = False

self.optimizer_critic = RMSprop(5e-5)

build generator pipeline with frozen critic

self.generator = self.build_generator()

critic_output = self.critic(self.generator.output)

self.model = Model(self.generator.input, critic_output)

self.model.compile(loss = self.wasserstein_loss,

optimizer = RMSprop(5e-5))

self.critic.trainable = True

def wasserstein_loss(self, y_true, y_pred):

w_loss = -tf.reduce_mean(y_true*y_pred)

return w_loss

def build_generator(self):

DIM = 128

model = tf.keras.Sequential(name=‘Generator’)

model.add(layers.Input(shape=[self.z_dim]))

model.add(layers.Dense(444*DIM))

model.add(layers.BatchNormalization())

model.add(layers.ReLU())

model.add(layers.Reshape((4,4,4*DIM)))

model.add(layers.UpSampling2D((2,2), interpolation=“bilinear”))

model.add(layers.Conv2D(2*DIM, 5, padding=‘same’))

model.add(layers.BatchNormalization())

model.add(layers.ReLU())

model.add(layers.UpSampling2D((2,2), interpolation=“bilinear”))

model.add(layers.Conv2D(DIM, 5, padding=‘same’))

model.add(layers.BatchNormalization())

model.add(layers.ReLU())

model.add(layers.UpSampling2D((2,2), interpolation=“bilinear”))

model.add(layers.Conv2D(image_shape[-1], 5, padding=‘same’, activation=‘tanh’))

return model

def build_critic(self):

DIM = 128

model = tf.keras.Sequential(name=‘critics’)

model.add(layers.Input(shape=self.input_shape))

model.add(layers.Conv2D(1*DIM, 5, strides=2, padding=‘same’))

model.add(layers.LeakyReLU(0.2))

model.add(layers.Conv2D(2*DIM, 5, strides=2, padding=‘same’))

model.add(layers.BatchNormalization())

model.add(layers.LeakyReLU(0.2))

model.add(layers.Conv2D(4*DIM, 5, strides=2, padding=‘same’))

model.add(layers.BatchNormalization())

model.add(layers.LeakyReLU(0.2))

model.add(layers.Flatten())

model.add(layers.Dense(1))

return model

def train_critic(self, real_images, batch_size):

real_labels = tf.ones(batch_size)

fake_labels = -tf.ones(batch_size)

g_input = tf.random.normal((batch_size, self.z_dim))

fake_images = self.generator.predict(g_input)

with tf.GradientTape() as total_tape:

forward pass

pred_fake = self.critic(fake_images)

pred_real = self.critic(real_images)

calculate losses

loss_fake = self.wasserstein_loss(fake_labels, pred_fake)

loss_real = self.wasserstein_loss(real_labels, pred_real)

total loss

total_loss = loss_fake + loss_real

apply gradients

gradients = total_tape.gradient(total_loss, self.critic.trainable_variables)

self.optimizer_critic.apply_gradients(zip(gradients, self.critic.trainable_variables))

for layer in self.critic.layers:

weights = layer.get_weights()

weights = [tf.clip_by_value(w, -0.01, 0.01) for w in weights]

layer.set_weights(weights)

return loss_fake, loss_real

def train(self, data_generator, batch_size, steps, interval=200):

val_g_input = tf.random.normal((batch_size, self.z_dim))

real_labels = tf.ones(batch_size)

for i in range(steps):

for _ in range(self.n_critic):

real_images = next(data_generator)

loss_fake, loss_real = self.train_critic(real_images, batch_size)

critic_loss = loss_fake + loss_real

train generator

g_input = tf.random.normal((batch_size, self.z_dim))

g_loss = self.model.train_on_batch(g_input, real_labels)

self.loss_critic_real[i] = loss_real.numpy()

self.loss_critic_fake[i] = loss_fake.numpy()

self.loss_critic[i] = critic_loss.numpy()

self.loss_generator[i] = g_loss

if i%interval == 0:

msg = “Step {}: g_loss {:.4f} critic_loss {:.4f} critic fake {:.4f} critic_real {:.4f}”\

.format(i, g_loss, critic_loss, loss_fake, loss_real)

print(msg)

fake_images = self.generator.predict(val_g_input)

self.plot_images(fake_images)

self.plot_losses()

def plot_images(self, images):

grid_row = 1

grid_col = 8

f, axarr = plt.subplots(grid_row, grid_col, figsize=(grid_col2.5, grid_row2.5))

for row in range(grid_row):

for col in range(grid_col):

if self.input_shape[-1]==1:

axarr[col].imshow(images[col,:,:,0]*0.5+0.5, cmap=‘gray’)

else:

axarr[col].imshow(images[col]*0.5+0.5)

axarr[col].axis(‘off’)

plt.show()

def plot_losses(self):

fig, (ax1, ax2) = plt.subplots(2, sharex=True)

fig.set_figwidth(10)

fig.set_figheight(6)

ax1.plot(list(self.loss_critic.values()), label=‘Critic loss’, alpha=0.7)

ax1.set_title(“Critic loss”)

ax2.plot(list(self.loss_generator.values()), label=‘Generator loss’, alpha=0.7)

ax2.set_title(“Generator loss”)

plt.xlabel(‘Steps’)

plt.show()

wgan = WGAN(image_shape)

wgan.generator.summary()

wgan.critic.summary()

wgan.train(iter(ds_train), batch_size, 2000, 100)

z = tf.random.normal((8, 128))

generated_images = wgan.generator.predict(z)

wgan.plot_images(generated_images)

wgan.generator.save_weights(‘./wgan_models/wgan_fashion_minist.weights’)

“”"

WGAN_GP

“”"

class WGAN_GP():

def init(self, input_shape):

self.z_dim = 128

self.input_shape = input_shape

critic

self.n_critic = 5

self.penalty_const = 10

self.critic = self.build_critic()

self.critic.trainable = False

self.optimizer_critic = Adam(1e-4, 0.5, 0.9)

build generator pipeline with frozen critic

self.generator = self.build_generator()

critic_output = self.critic(self.generator.output)

self.model = Model(self.generator.input, critic_output)

self.model.compile(loss=self.wasserstein_loss, optimizer=Adam(1e-4, 0.5, 0.9))

def wasserstein_loss(self, y_true, y_pred):

w_loss = -tf.reduce_mean(y_true*y_pred)

return w_loss

def build_generator(self):

DIM = 128

自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。

深知大多数Python工程师,想要提升技能,往往是自己摸索成长或者是报班学习,但对于培训机构动则几千的学费,着实压力不小。自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

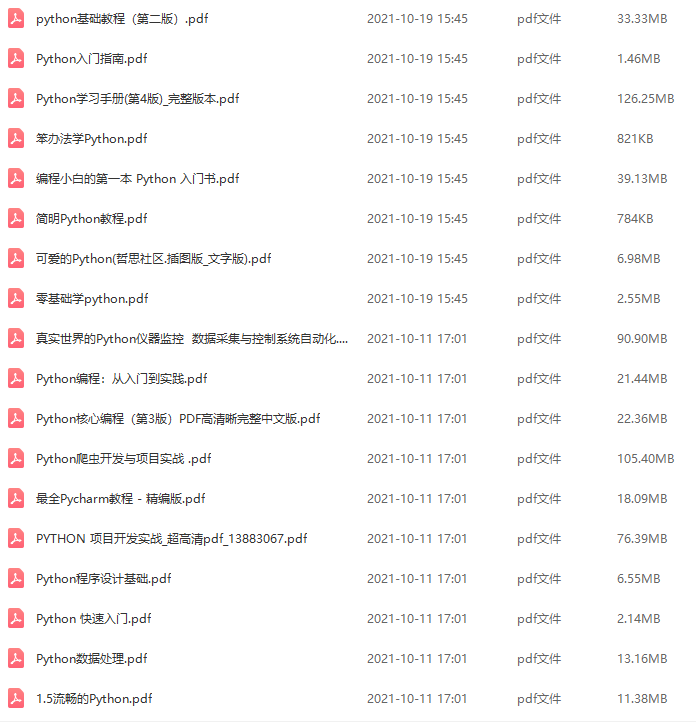

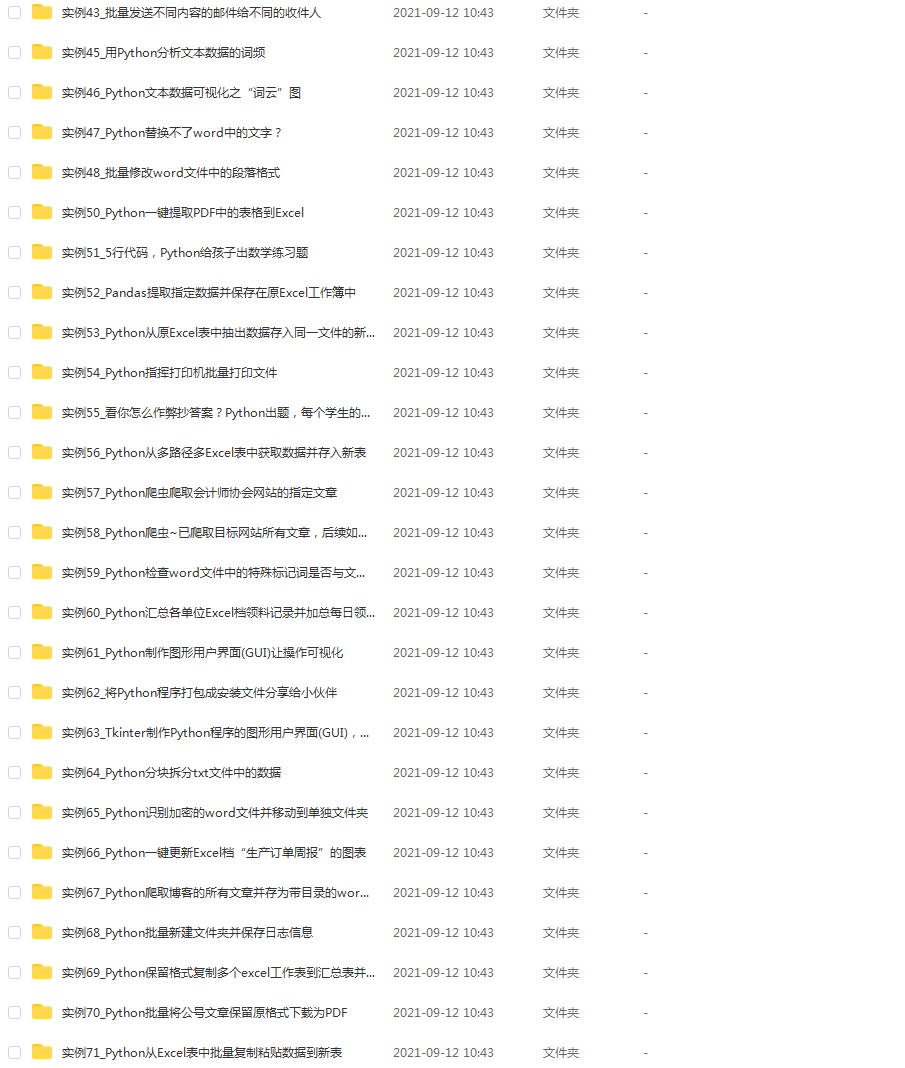

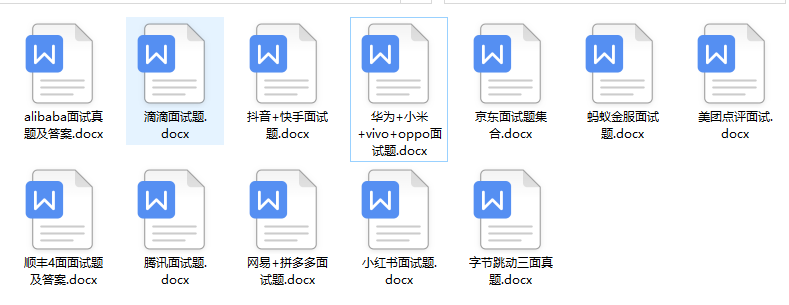

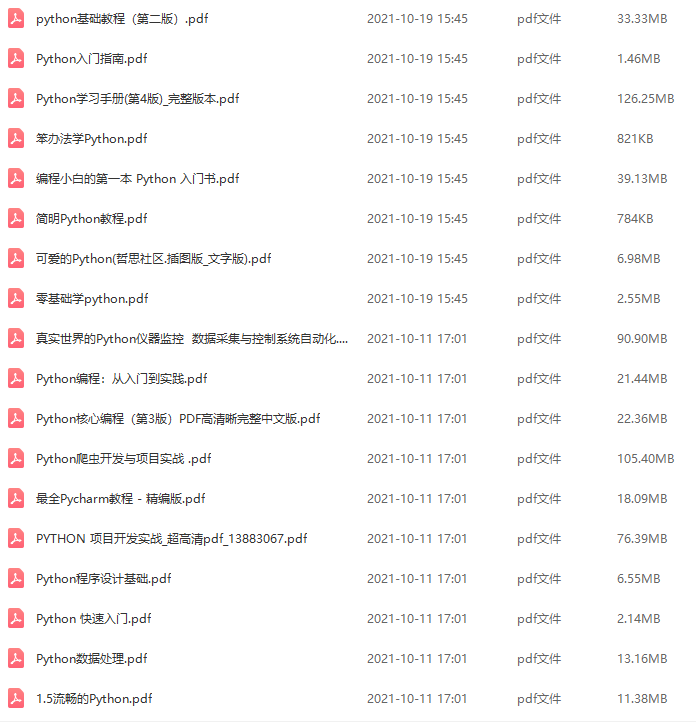

因此收集整理了一份《2024年Python开发全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友,同时减轻大家的负担。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上Python开发知识点,真正体系化!

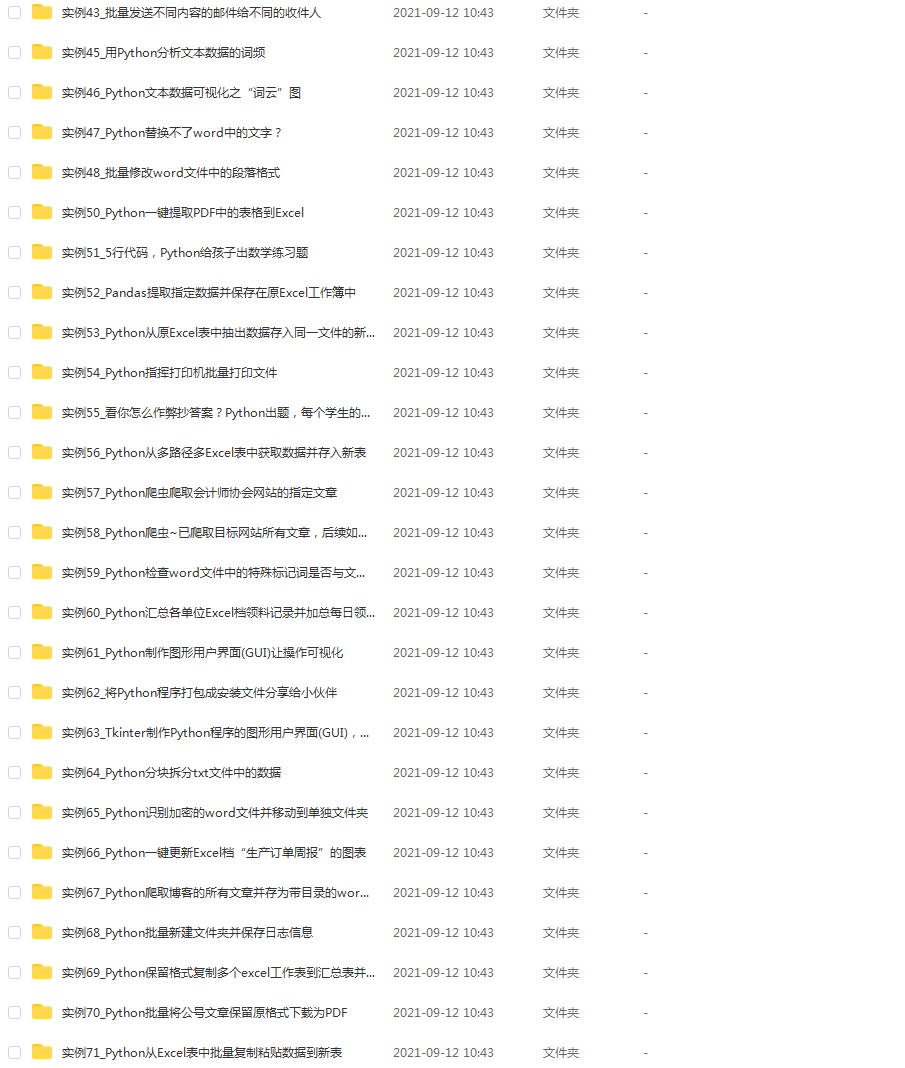

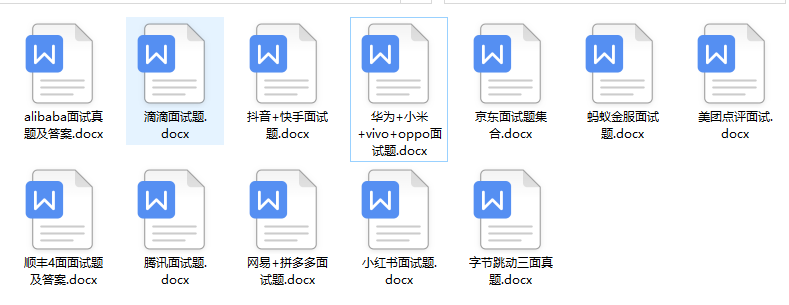

由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新

如果你觉得这些内容对你有帮助,可以添加V获取:vip1024c (备注Python)

cUH-1712790345917)]

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上Python开发知识点,真正体系化!

由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新

如果你觉得这些内容对你有帮助,可以添加V获取:vip1024c (备注Python)

[外链图片转存中…(img-4cBli1xG-1712790345917)]

4885

4885

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?